Feed aggregator

Accelerating NetOps transformation with Ansible Automation Platform

Against the Federal Moratorium on State-Level Regulation of AI

Cast your mind back to May of this year: Congress was in the throes of debate over the massive budget bill. Amidst the many seismic provisions, Senator Ted Cruz dropped a ticking time bomb of tech policy: a ten-year moratorium on the ability of states to regulate artificial intelligence. To many, this was catastrophic. The few massive AI companies seem to be swallowing our economy whole: their energy demands are overriding household needs, their data demands are overriding creators’ copyright, and their products are triggering mass unemployment as well as new types of clinical ...

Building platforms using kro for composition

Recent industry developments, such as Amazon’s announcement of the new EKS capabilities, highlight a trend toward supporting platforms with managed GitOps, cloud resource operators, and composition tooling. In particular, the involvement of Kube Resource Orchestrator (kro)—a young, cross-cloud initiative—reflects growing ecosystem interest in simplifying Kubernetes-native resource grouping. Its inclusion in the capabilities package signals that major cloud providers recognize the value of the SIG Cloud Provider–maintained project and its potential role in future platform-engineering workflows.

This is a win for platform engineers. The composition of Kubernetes resources is becoming increasingly important as declarative Infrastructure as Code (IaC) tooling expands the number of objects we manage. For example, the CNCF graduated project Crossplane and cloud-specific alternatives such as AWS Controllers for Kubernetes (ACK), which is packaged with EKS Capabilities, can each add hundreds or even thousands of new CRDs to a cluster.

With composition available as a managed service, platform teams can focus on their mission to build what is unique to their business but common to their teams. They achieve this by combining composition with encapsulation of all associated processes and decoupled delivery across any target environment.

The rise of Kubernetes-native composition

The core value of kro lies in the idea of a ResourceGraphDefinition. Each definition abstracts many Kubernetes objects behind a single API. This API specifies what users may configure when requesting an instance, which resources are created per request, how those sub-resources depend on each other, and what status should be exposed back to the users and dependent resources. kro then acts as a controller that responds to these definitions by creating a new user-facing CRD and managing requests against it through an optimized resource DAG. This abstraction can reduce reliance on tools such as Helm, Kustomize, or hand-written operators when creating consistent patterns.
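To make the idea of a resource DAG concrete, here is a small illustration of dependency ordering that uses the POSIX `awk` and `tsort` utilities. This is not how kro works internally, because kro's controller builds and walks its own graph from the definition. The resource names and the one-line-per-resource input format are assumptions made for this sketch.

```shell
#!/bin/sh
# Illustration only: compute a creation order for the sub-resources behind
# one composite API. Each input line has the form
#   RESOURCE [DEPENDENCY ...]
# The resource names below are hypothetical.
creation_order() {
  awk '{
    print $1, $1                              # register the node itself
    for (i = 2; i <= NF; i++) print $i, $1    # dependency must come first
  }' | tsort
}

# Usage: deployment needs namespace and configmap, service needs deployment,
# and ingress needs service.
creation_order <<'EOF'
namespace
configmap namespace
deployment namespace configmap
service deployment
ingress service
EOF
```

In this example the dependencies form a single chain, so the only valid order is namespace, configmap, deployment, service, ingress. With wider graphs, `tsort` may print any order that respects the edges, and independent resources could also be created in parallel.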

The collaboration and investment across the cloud vendors contributing to kro are a bright sign for our industry. However, challenges remain for end users adopting these frameworks. It can often feel like they are trapped in the “How to draw an owl” meme, where kro helps teams sketch the ovals for the head and body, but drawing the rest of the platform owl requires a big leap for the platform engineers doing the work.

Where kro fits in platform design

Effective platforms demonstrate results across three outcomes based on time to delivery:

- Time for a user to get a new service they depend on to deliver their value

- Time to patch all instances of an existing service or capability

- Time to introduce a new business-compliant capability

Across the industry, we see platforms not only improving these metrics but fundamentally shifting beliefs about what is possible. Users are getting the tools they need to take new ideas to production in minutes, not months. A handful of engineers are managing continuous compliance and regular patching. Specialists bring their requirements directly to users without a central team bottleneck.

Platforms that consistently deliver on these outcomes are designed around three principles:

Composition over simple abstraction

Composition enables teams to build up from low-level components to high-value capabilities through common abstraction APIs. kro’s ResourceGraphDefinitions provide an additional Kubernetes-native approach alongside Crossplane compositions, Helm charts, and Kratix Promises.

Encapsulation of configuration, policy, and process

Enterprise platforms must provide more than resources. They need clear ways to capture all the weird and wonderful (business-critical) requirements and processes they have built over the years. Yes, this can mean declarative code, but also imperative API calls, operational workflows that incorporate manual steps, legacy integrations with off-line systems, and, of course, interactions with non-Kubernetes resources. Safe composition depends on the ability to apply a single testable change that covers all affected systems.

Decoupled delivery across many environments

Organizations of sufficient scale and complexity need to support complex topologies, including multi-cluster Kubernetes and non-Kubernetes-based infrastructure. Platforms need to enable timely upgrades across their entire topology to reduce CVE risk while managing diverse and specialized compute, including modern options like GPUs and Functions-as-a-Service (FaaS), as well as legacy options such as mainframes or Red Hat Virtualization.

Achieving overall scalability, auditability, and resilience requires prioritizing each in the proper context. Centralized planning gives control. Decentralized delivery allows scale. A platform should enable the definition of rules and enforcement in a central orchestrator, then rely on distributed deployment engines to deliver the capability in the correct places and form. This avoids the limits of tightly coupled orchestration and reduces the operational burden of scale.
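The split between central rules and decentralized delivery can be shown with a toy model. A central step checks a request against one rule and then writes the desired state into a separate directory for each destination. Each destination's own agent then picks up only what is in its directory. Everything here is hypothetical: the directory layout, the `MIN_VERSION` rule, and the `publish`/`reconcile` names are illustrative assumptions, not the interface of kro, Kratix, or any GitOps tool.

```shell
#!/bin/sh
# Toy model of "central rules, decentralized delivery".
# The directory layout, MIN_VERSION rule, and function names are hypothetical.

MIN_VERSION=3   # the one central rule: refuse capability versions below 3

# Central step: enforce the rule once, then record the desired state for
# every destination in that destination's own directory (a stand-in for a
# Git repository or bucket that an agent near each cluster watches).
publish() {
  store=$1 name=$2 version=$3
  shift 3
  if [ "$version" -lt "$MIN_VERSION" ]; then
    echo "rejected: $name v$version is below v$MIN_VERSION" >&2
    return 1
  fi
  for dest in "$@"; do
    mkdir -p "$store/$dest" || return 1
    printf '%s\n' "$version" > "$store/$dest/$name"
  done
}

# Decentralized step: each destination reads only its own directory and
# applies what it finds, independently of the other destinations.
reconcile() {
  store=$1 dest=$2
  for f in "$store/$dest"/*; do
    [ -f "$f" ] || continue
    echo "$dest: applying $(basename "$f") v$(cat "$f")"
  done
}
```

For example, `publish ./state postgres 4 eu-cluster us-cluster` writes one file per destination, and `reconcile ./state eu-cluster` is what an agent for `eu-cluster` would run on its own schedule. The rule is enforced in one place, while delivery scales out with the number of destinations.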

kro is strong in the first principle. It offers a clear, Kubernetes-native composition model that lets teams package complex deployments, hide unnecessary details, and encode organizational defaults. Features such as CEL templating demonstrate a focus on helping engineers manage dependencies across Kubernetes objects when creating higher-level abstractions.

Where platforms need more than kro

It is important to acknowledge that kro does not aim to address the second or third principles. This is not a criticism. It reflects a focused scope, following the Unix philosophy of doing one thing well while integrating cleanly with the wider ecosystem.

kro is a powerful mechanism for packaging resource definitions and orchestrating them within a single cluster. It does not try to manage resources across clusters, handle workflows such as approvals, or integrate with systems such as ServiceNow, mainframes, or proprietary APIs that require imperative actions. Its power comes from its Kubernetes-native design, which makes it easy to integrate with scheduling tools, with Kyverno or OPA for policy as code (PaC), and with IaC controllers such as Crossplane.

The harder challenge is how to meet all three principles in a sustainable way. How can you make platform changes that are both quick and safe? The simplest answer is to enable encapsulated and testable packages that allow changes across infrastructure, configuration, policy, and process from a single implementation.

Platform orchestration frameworks—such as Kratix and others in the ecosystem—aim to address these workflow and multi-environment needs. Kratix provides a Kubernetes-native framework for delivering managed services that reflect organizational standards, with support for long-running workflows, integration with enterprise systems, and managed delivery to clusters, airgapped hardware, or mainframes. kro provides composition rather than orchestration, which allows these tools to complement each other.

Looking ahead at a growing ecosystem

The project’s multi-vendor contributions and Kubernetes SIG governance reflect growing community engagement around kro. Many contributors highlight the value of a portable, Kubernetes-native model for grouping and orchestrating resources, and the importance of reducing manual dependency management for platform teams.

The next stage for organizations is understanding how kro fits into their broader architecture. kro is an important tool for composition. Ultimately, platform value comes from tying that composition to capabilities that encapsulate configuration, policy, process workflows, and decoupled deployment across diverse environments.

Emerging standards will help organizations meet the core tests of platform value: safe self-service, consistent compliance, simple fleet upgrades, and a contribution model that scales. With standards come tools that enable platform engineers to continue to reuse capabilities, collaborate more effectively, and deliver predictable behavior across clusters and clouds.

Harden your AI systems: Applying industry standards in the real world

Upcoming Speaking Engagements

This is a current list of where and when I am scheduled to speak:

- I’m speaking and signing books at the Chicago Public Library in Chicago, Illinois, USA, at 6:00 PM CT on February 5, 2026. Details to come.

- I’m speaking at Capricon 44 in Chicago, Illinois, USA. The convention runs February 5-8, 2026. My speaking time is TBD.

- I’m speaking at the Munich Cybersecurity Conference in Munich, Germany on February 12, 2026.

- I’m speaking at Tech Live: Cybersecurity in New York City, USA on March 11, 2026.

- I’m giving the Ross Anderson Lecture at the University of Cambridge’s Churchill College on March 19, 2026...

Friday Squid Blogging: Giant Squid Eating a Diamondback Squid

I have no context for this video—it’s from Reddit—but one of the commenters adds some context:

Hey everyone, squid biologist here! Wanted to add some stuff you might find interesting.

With so many people carrying around cameras, we’re getting more videos of giant squid at the surface than in previous decades. We’re also starting to notice a pattern, that around this time of year (peaking in January) we see a bunch of giant squid around Japan. We don’t know why this is happening. Maybe they gather around there to mate or something? who knows! but since so many people have cameras, those one-off monster-story encounters are now caught on video, like this one (which, btw, rips. This squid looks so healthy, it’s awesome)...

Building Trustworthy AI Agents

The promise of personal AI assistants rests on a dangerous assumption: that we can trust systems we haven’t made trustworthy. We can’t. And today’s versions are failing us in predictable ways: pushing us to do things against our own best interests, gaslighting us with doubt about things we are or that we know, and being unable to distinguish between who we are and who we have been. They struggle with incomplete, inaccurate, and partial context: with no standard way to move toward accuracy, no mechanism to correct sources of error, and no accountability when wrong information leads to bad decisions...

AIs Exploiting Smart Contracts

I have long maintained that smart contracts are a dumb idea: that a human process is actually a security feature.

Here’s some interesting research on training AIs to automatically exploit smart contracts:

AI models are increasingly good at cyber tasks, as we’ve written about before. But what is the economic impact of these capabilities? In a recent MATS and Anthropic Fellows project, our scholars investigated this question by evaluating AI agents’ ability to exploit smart contracts on Smart CONtracts Exploitation benchmark (SCONE-bench), a new benchmark they built comprising 405 contracts that were actually exploited between 2020 and 2025. On contracts exploited after the latest knowledge cutoffs (June 2025 for Opus 4.5 and March 2025 for other models), Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5 developed exploits collectively worth $4.6 million, establishing a concrete lower bound for the economic harm these capabilities could enable. Going beyond retrospective analysis, we evaluated both Sonnet 4.5 and GPT-5 in simulation against 2,849 recently deployed contracts without any known vulnerabilities. Both agents uncovered two novel zero-day vulnerabilities and produced exploits worth $3,694, with GPT-5 doing so at an API cost of $3,476. This demonstrates as a proof-of-concept that profitable, real-world autonomous exploitation is technically feasible, a finding that underscores the need for proactive adoption of AI for defense...

Lima v2.0: New features for secure AI workflows

On November 6th, the Lima project team shipped the second major release of Lima. With this release, the team is expanding the project’s focus to cover AI as well as containers.

What is Lima?

Lima (Linux Machines) is a command line tool to launch a local Linux virtual machine, with the primary focus on running containers on a laptop.

The project began in May 2021 with the aim of promoting containerd, including nerdctl (contaiNERD CTL), to Mac users. The project joined the CNCF in September 2022 as a Sandbox project and was promoted to the Incubating level in October 2025. As the project has grown, its scope has expanded to support non-container workloads and non-macOS hosts too.

See also: “Lima becomes a CNCF incubating project”.

Updates in v2.0

Plugins

Lima now provides a plugin infrastructure that allows third parties to implement new features without modifying Lima itself:

- VM driver plugins: for additional hypervisors.

- CLI plugins: for additional subcommands of the `limactl` command.

- URL scheme plugins: for additional URL schemes to be passed as `limactl create SCHEME:SPEC`.

The plugin interfaces are still experimental and subject to change. The interfaces will be stabilized in future releases.

GPU acceleration

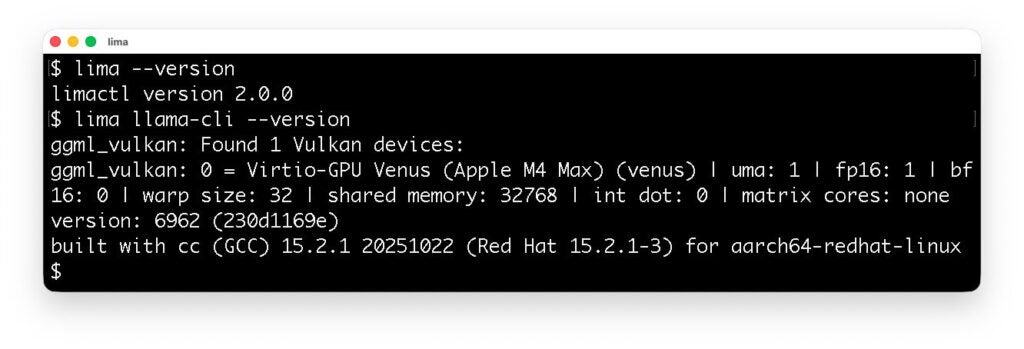

Lima now supports the VM driver for krunkit, providing GPU acceleration for Linux VM running on macOS hosts.

The following screenshot shows that llama.cpp running in Lima detects the Apple M4 Max processor as a virtualized GPU.

Model Context Protocol

Lima now provides Model Context Protocol (MCP) tools for reading, writing, and executing local files using a VM sandbox:

- `glob`

- `list_directory`

- `read_file`

- `run_shell_command`

- `search_file_content`

- `write_file`

Lima’s MCP tools are inspired by Google Gemini CLI’s built-in tools, and can be used as a secure alternative for those built-in tools. See the configuration guide here: https://lima-vm.io/docs/config/ai/outside/mcp/

Other improvements

- The `limactl start` command now accepts the `--progress` flag to show the progress of the provisioning scripts.

- The `limactl (create|edit|start)` commands now accept the `--mount-only DIR` flag to only mount the specified host directory. In Lima v1.x, this had to be specified in a very complex syntax: `--set ".mounts=[{\"location\":\"$(pwd)\", \"writable\":true}]"`.

- The `limactl shell` command now accepts the `--preserve-env` flag to propagate the environment variables from the host to the guest.

- UDP ports are now forwarded by default in addition to TCP ports.

- Multiple host users can now run Lima simultaneously. This allows running Lima as a separate user account for enhanced security, using “Alcoholless” Homebrew.
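To show how much the new flag simplifies things, the sketch below maps a `--mount-only` value onto the old `--set` expression. This could be useful, for example, when a script still has to support Lima v1.x hosts. `mount_only_to_set` is a hypothetical helper, not part of Lima. It assumes the v1 form quoted above and the `:w` suffix for writable mounts described in the getting-started section, and it does not escape paths that contain double quotes.

```shell
#!/bin/sh
# Hypothetical helper (not part of Lima): print the Lima v1.x `--set`
# expression that roughly corresponds to a v2 `--mount-only SPEC` value,
# where SPEC is DIR (read-only) or DIR:w (writable).
# Paths containing double quotes are not escaped.
mount_only_to_set() {
  spec=$1
  writable=false
  case $spec in
    *:w) writable=true; spec=${spec%:w} ;;
  esac
  # Resolve to an absolute path, as $(pwd) does in the v1 example.
  abs=$(cd "$spec" 2>/dev/null && pwd) || {
    echo "no such directory: $spec" >&2
    return 1
  }
  printf '.mounts=[{"location":"%s", "writable":%s}]\n' "$abs" "$writable"
}
```

On a v1.x host, `limactl start --set "$(mount_only_to_set .:w)"` should then request roughly the same single writable mount that `limactl start --mount-only .:w` requests on v2.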

See also the release note: https://github.com/lima-vm/lima/releases/tag/v2.0.0.

We appreciate all the contributors who made this release possible, especially Ansuman Sahoo who contributed the VM driver plugin subsystem and the krunkit VM driver, through the Google Summer of Code (GSoC) 2025.

Expanding the focus to hardening AI

While Lima was originally made for promoting containerd to Mac users, it has proven useful for a variety of other use cases as well. One of the most notable emerging use cases is running an AI coding agent inside a VM to isolate the agent from direct access to host files and commands. This setup ensures that even if an AI agent is deceived by malicious instructions found on the Internet (e.g., fake package installations), any potential damage is confined within the VM or limited to files specified to be mounted from the host.

There are two kinds of scenarios to run an AI agent with Lima: AI inside Lima, and AI outside Lima.

AI inside Lima

This is the most common scenario: just run an AI agent inside Lima. The documentation features several examples of hardening AI agents running in Lima.

A local LLM can be used too, with the GPU acceleration feature available in the krunkit VM driver.

AI outside Lima

This scenario refers to running an AI agent as a host process outside Lima. Lima covers this scenario by providing the MCP tools that intercept file accesses and command executions.

Getting started: AI inside Lima

This section introduces how to run an AI agent (Gemini CLI) inside Lima so as to prevent the AI from directly accessing host files and commands.

If you are using Homebrew, Lima can be installed using:

brew install lima

For other installation methods, see https://lima-vm.io/docs/installation/.

An instance of the Lima virtual machine can be created and started by running `limactl start`. However, as the default configuration mounts the entire home directory from the host, it is highly recommended to limit the mount scope to the current directory (.), especially when running an AI agent:

mkdir -p ~/test

cd ~/test

limactl start --mount-only .

To allow writing to the mounted directory, append the `:w` suffix to the mount specification:

limactl start --mount-only .:w

You can then run an AI agent such as Gemini CLI, which can be installed and executed inside Lima using `lima` commands as follows:

lima sudo snap install node --classic

lima sudo npm install -g @google/gemini-cli

lima gemini

Gemini CLI can arbitrarily read, write, and execute files inside the VM; however, it cannot access host files except the mounted ones.

To run other AI agents, see https://lima-vm.io/docs/examples/ai/.
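For repeated use, the steps above can be wrapped in a shell function so that the narrowed mount is always applied. This is a sketch under stated assumptions. `start_ai_sandbox` is a hypothetical name. It only chains the `mkdir`, `cd`, and `limactl start --mount-only` commands shown in this section, and it leaves installing and launching the agent to the `lima` commands above.

```shell
#!/bin/sh
# Hypothetical wrapper around the getting-started steps: create a project
# directory and start Lima with only that directory mounted.
# Usage: start_ai_sandbox DIR [rw]
# The body runs in a subshell ( ... ) so the `cd` does not leak to the caller.
start_ai_sandbox() (
  dir=${1:?usage: start_ai_sandbox DIR [rw]}
  mkdir -p "$dir" && cd "$dir" || exit 1
  if [ "${2:-}" = rw ]; then
    # The ":w" suffix makes the mounted directory writable from the VM.
    limactl start --mount-only .:w
  else
    # Without ":w", only the current directory is mounted, read-only.
    limactl start --mount-only .
  fi
)
```

`start_ai_sandbox ~/test rw` corresponds to the `mkdir`, `cd`, and `limactl start --mount-only .:w` sequence above, and the caller's working directory is left unchanged.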

Introducing the Red Hat Ansible Lightspeed intelligent assistant

From incident responder to security steward: My journey to understanding Red Hat's open approach to vulnerability management

The Sleep Test: How Embracing Chaos Unlocks API Resilience

React2Shell Continued: What to know and do about the 2 latest CVEs

FBI Warns of Fake Video Scams

The FBI is warning of AI-assisted fake kidnapping scams:

Criminal actors typically will contact their victims through text message claiming they have kidnapped their loved one and demand a ransom be paid for their release. Oftentimes, the criminal actor will express significant claims of violence towards the loved one if the ransom is not paid immediately. The criminal actor will then send what appears to be a genuine photo or video of the victim’s loved one, which upon close inspection often reveals inaccuracies when compared to confirmed photos of the loved one. Examples of these inaccuracies include missing tattoos or scars and inaccurate body proportions. Criminal actors will sometimes purposefully send these photos using timed message features to limit the amount of time victims have to analyze the images...

Slash VM provisioning time on Red Hat OpenShift Virtualization using Red Hat Ansible Automation Platform

DDoS in November

Microsoft Patch Tuesday, December 2025 Edition

Microsoft today pushed updates to fix at least 56 security flaws in its Windows operating systems and supported software. This final Patch Tuesday of 2025 tackles one zero-day bug that is already being exploited, as well as two publicly disclosed vulnerabilities.

Despite releasing a lower-than-normal number of security updates these past few months, Microsoft patched a whopping 1,129 vulnerabilities in 2025, an 11.9% increase from 2024. According to Satnam Narang at Tenable, this year marks the second consecutive year that Microsoft patched over one thousand vulnerabilities, and the third time it has done so since its inception.

The zero-day flaw patched today is CVE-2025-62221, a privilege escalation vulnerability affecting Windows 10 and later editions. The weakness resides in a component called the “Windows Cloud Files Mini Filter Driver” — a system driver that enables cloud applications to access file system functionalities.

“This is particularly concerning, as the mini filter is integral to services like OneDrive, Google Drive, and iCloud, and remains a core Windows component, even if none of those apps were installed,” said Adam Barnett, lead software engineer at Rapid7.

Only three of the flaws patched today earned Microsoft’s most-dire “critical” rating: Both CVE-2025-62554 and CVE-2025-62557 involve Microsoft Office, and both can be exploited merely by viewing a booby-trapped email message in the Preview Pane. Another critical bug, CVE-2025-62562, involves Microsoft Outlook, although Redmond says the Preview Pane is not an attack vector with this one.

But according to Microsoft, the vulnerabilities most likely to be exploited from this month’s patch batch are other (non-critical) privilege escalation bugs, including:

- CVE-2025-62458: Win32k

- CVE-2025-62470: Windows Common Log File System Driver

- CVE-2025-62472: Windows Remote Access Connection Manager

- CVE-2025-59516: Windows Storage VSP Driver

- CVE-2025-59517: Windows Storage VSP Driver

Kev Breen, senior director of threat research at Immersive, said privilege escalation flaws are observed in almost every incident involving host compromises.

“We don’t know why Microsoft has marked these specifically as more likely, but the majority of these components have historically been exploited in the wild or have enough technical detail on previous CVEs that it would be easier for threat actors to weaponize these,” Breen said. “Either way, while not actively being exploited, these should be patched sooner rather than later.”

One of the more interesting vulnerabilities patched this month is CVE-2025-64671, a remote code execution flaw in the GitHub Copilot plugin for JetBrains, an AI-based coding assistant used by Microsoft and GitHub. Breen said this flaw would allow attackers to execute arbitrary code by tricking the large language model (LLM) into running commands that bypass the guardrails and add malicious instructions to the user’s “auto-approve” settings.

CVE-2025-64671 is part of a broader, more systemic security crisis that security researcher Ari Marzuk has branded IDEsaster (IDE stands for “integrated development environment”), which encompasses more than 30 separate vulnerabilities reported in nearly a dozen market-leading AI coding platforms, including Cursor, Windsurf, Gemini CLI, and Claude Code.

The other publicly disclosed vulnerability patched today is CVE-2025-54100, a remote code execution bug in Windows PowerShell on Windows Server 2008 and later that allows an unauthenticated attacker to run code in the security context of the user.

For anyone seeking a more granular breakdown of the security updates Microsoft pushed today, check out the roundup at the SANS Internet Storm Center. As always, please leave a note in the comments if you experience problems applying any of this month’s Windows patches.

AI vs. Human Drivers

Two competing arguments are making the rounds. The first is by a neurosurgeon in the New York Times. In an op-ed that honestly sounds like it was paid for by Waymo, the author calls driverless cars a “public health breakthrough”:

In medical research, there’s a practice of ending a study early when the results are too striking to ignore. We stop when there is unexpected harm. We also stop for overwhelming benefit, when a treatment is working so well that it would be unethical to continue giving anyone a placebo. When an intervention works this clearly, you change what you do...