CNCF Projects

Dragonfly v2.4.0 is released

Dragonfly v2.4.0 is released! Thanks to all of the contributors who made this Dragonfly release happen.

New features and enhancements

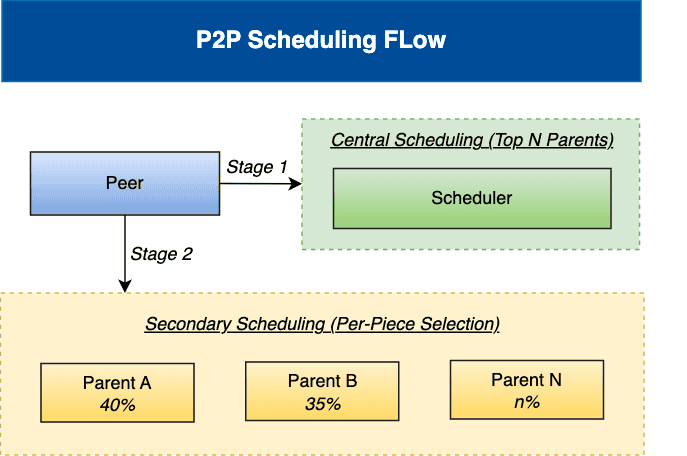

Load-aware scheduling algorithm

A two-stage scheduling algorithm combining central scheduling with node-level secondary scheduling to optimize P2P download performance, based on real-time load awareness.

For more information, please refer to the Scheduling documentation.

Vortex protocol support for P2P file transfer

Dragonfly provides the new Vortex transfer protocol, based on TLV (Tag-Length-Value), to improve download performance on internal networks. Vortex uses the TLV format as a lightweight protocol that replaces gRPC for data transfer between peers. Compared to gRPC, TCP-based Vortex reduces large file download time by 50% and QUIC-based Vortex by 40%, and both effectively reduce peak memory usage.

For more information, please refer to the TCP Protocol Support for P2P File Transfer and QUIC Protocol Support for P2P File Transfer.
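
To make the TLV idea concrete, here is a minimal framing sketch. The field widths (1-byte tag, 4-byte big-endian length) and the tag value are assumptions chosen for illustration; they are not taken from the Vortex specification, which may differ.

```python
import struct

# Hypothetical framing: 1-byte tag, 4-byte big-endian length, then the value.
# The real Vortex wire format may use different field widths and tag values.
HEADER = struct.Struct(">BI")
TAG_PIECE_CONTENT = 0x01  # illustrative tag value, not taken from Vortex


def encode_tlv(tag: int, value: bytes) -> bytes:
    """Prefix the payload with its tag and length."""
    return HEADER.pack(tag, len(value)) + value


def decode_tlv(buffer: bytes):
    """Yield (tag, value) pairs from a buffer of concatenated TLV frames."""
    offset = 0
    while offset < len(buffer):
        if len(buffer) - offset < HEADER.size:
            raise ValueError("truncated TLV header")
        tag, length = HEADER.unpack_from(buffer, offset)
        offset += HEADER.size
        if len(buffer) - offset < length:
            raise ValueError("truncated TLV value")
        yield tag, buffer[offset:offset + length]
        offset += length


frames = encode_tlv(TAG_PIECE_CONTENT, b"piece-0") + encode_tlv(TAG_PIECE_CONTENT, b"piece-1")
decoded = list(decode_tlv(frames))
```

Because each frame carries its own length, a receiver can split a byte stream into messages without a heavier serialization layer, which is the general appeal of TLV for bulk data transfer.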

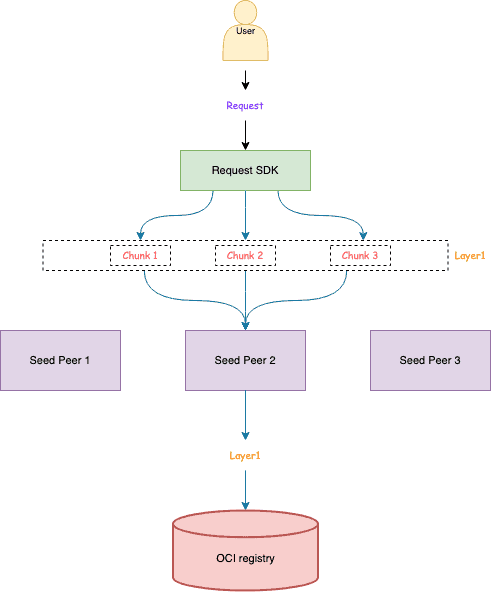

Request SDK

An SDK for routing user requests to Seed Peers using consistent hashing, replacing the previous Kubernetes Service load balancing approach.
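
As a rough illustration of the routing idea, the sketch below implements a generic consistent-hash ring with virtual nodes. The class name, the replica count, and the choice of SHA-256 are assumptions for the example; the SDK's actual hashing scheme is not described here.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring with virtual nodes (a generic sketch)."""

    def __init__(self, nodes, replicas=100):
        if not nodes:
            raise ValueError("at least one node is required")
        # Each node is placed on the ring `replicas` times to even out load.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(replicas)
        )
        self._keys = [position for position, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

    def lookup(self, key: str) -> str:
        """Return the first node clockwise from the key's position."""
        index = bisect.bisect(self._keys, self._hash(key)) % len(self._keys)
        return self._ring[index][1]


# Hypothetical Seed Peer names and URL, used only to exercise the ring.
seed_peers = ["seed-peer-0", "seed-peer-1", "seed-peer-2"]
ring = HashRing(seed_peers)
target = ring.lookup("https://example.com/layers/blob")
```

Unlike round-robin load balancing, the same URL always maps to the same Seed Peer, and removing one Seed Peer only remaps the keys that peer owned.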

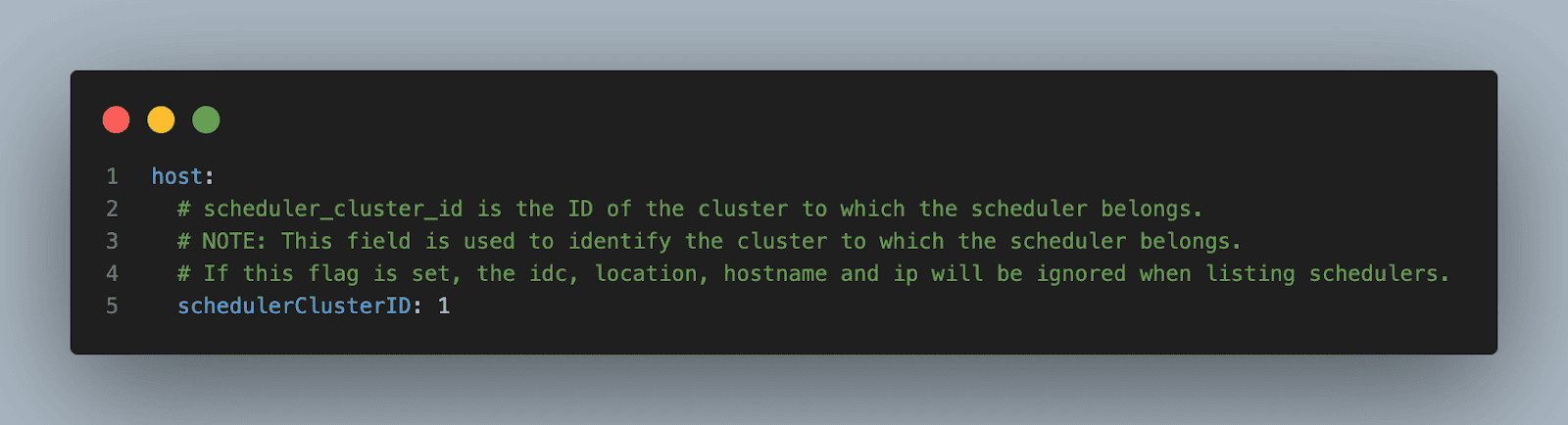

Simple multi‑cluster Kubernetes deployment with scheduler cluster ID

Dragonfly supports a simplified feature for deploying and managing multiple Kubernetes clusters by explicitly assigning a schedulerClusterID to each cluster. This approach allows users to directly control cluster affinity without relying on location‑based scheduling metadata such as IDC, hostname, or IP.

Using this feature, each Peer, Seed Peer, and Scheduler determines its target scheduler cluster through a clearly defined scheduler cluster ID. This ensures precise separation between clusters and predictable cross‑cluster behavior.

For more information, please refer to the Create Dragonfly Cluster Simple.

Performance and resource optimization for Manager and Scheduler components

Improved service performance and resource utilization across the Manager and Scheduler components, significantly reducing CPU and memory overhead.

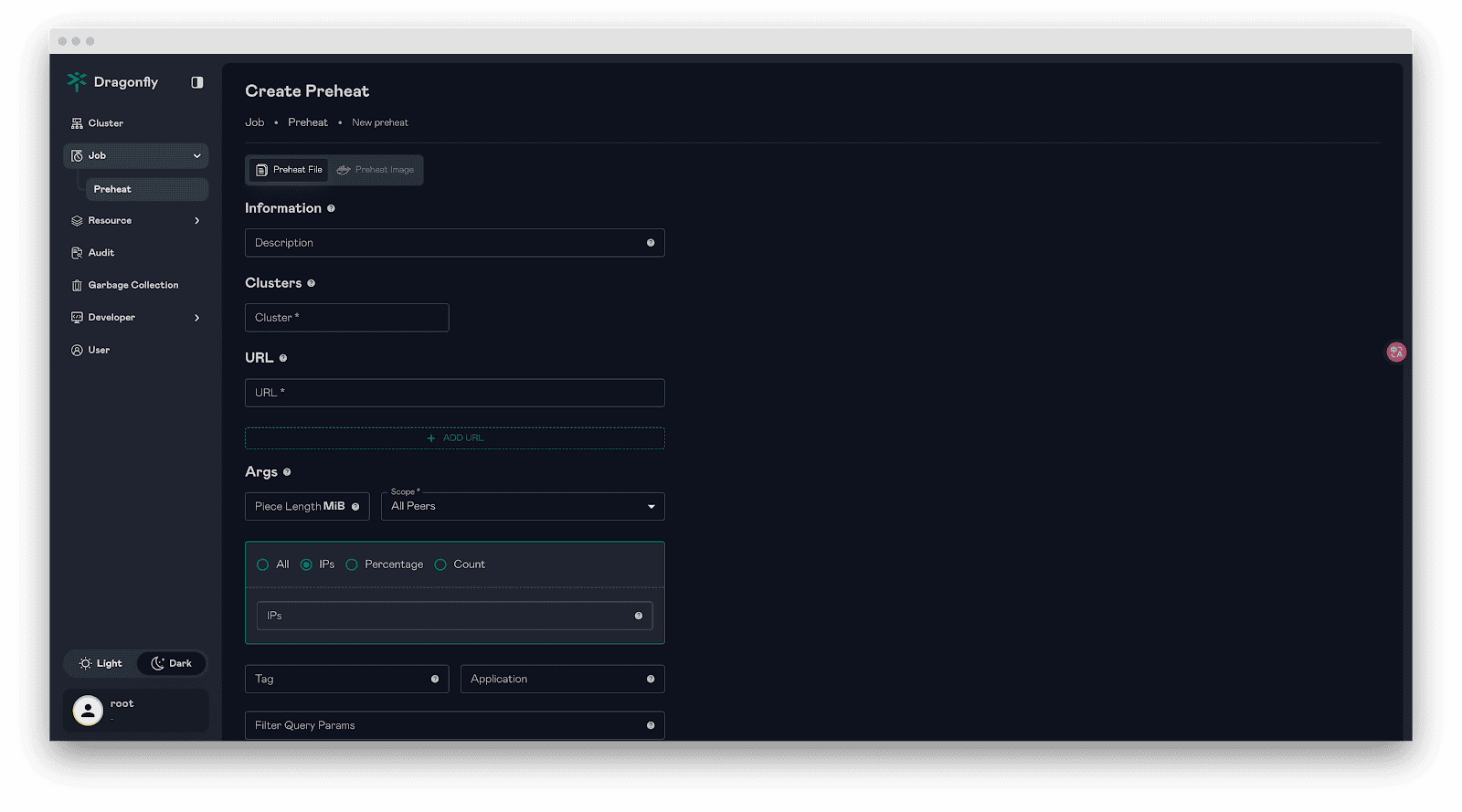

Enhanced preheating

- Support for IP-based peer selection in preheating jobs with priority-based selection logic where IP specification takes highest priority, followed by count-based and percentage-based selection.

- Support for preheating multiple URLs in a single request.

- Support for preheating file and image via Scheduler gRPC interface.
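
The selection priority described in the first item above can be sketched as follows. The function and parameter names are hypothetical and do not mirror the Dragonfly job API; the rounding rule for percentages and the "select all" fallback are also assumptions.

```python
import math


def select_peers(peers, ips=None, count=None, percentage=None):
    """Pick preheat targets: explicit IPs win, then count, then percentage.

    `peers` is a list of peer IP strings. Names are illustrative only.
    """
    if ips:
        wanted = set(ips)
        return [peer for peer in peers if peer in wanted]
    if count is not None:
        return peers[:count]
    if percentage is not None:
        # Assumption: round up so that a small percentage still selects a peer.
        return peers[:math.ceil(len(peers) * percentage / 100)]
    return list(peers)  # assumption: no selector means every peer


peers = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
by_ip = select_peers(peers, ips=["10.0.0.3"], count=2, percentage=50)
by_count = select_peers(peers, count=1, percentage=50)
by_percentage = select_peers(peers, percentage=50)
```

Here `by_ip` contains only `10.0.0.3` even though a count and a percentage were also supplied, because the IP list takes precedence.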

Calculate task ID based on image blob SHA256 to avoid redundant downloads

The Client now supports calculating task IDs directly from the SHA256 hash of image blobs, instead of using the download URL. This enhancement prevents redundant downloads and data duplication when the same blob is accessed from different registry domains.
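
A minimal sketch of the idea, assuming the blob digest appears in the URL path as `/blobs/sha256:<digest>` (the usual OCI registry layout). The `task_id` function and its key prefixes are hypothetical and are not the Client's actual derivation.

```python
import hashlib
import re

# OCI registry blob URLs end in /blobs/sha256:<64 hex characters>.
BLOB_DIGEST = re.compile(r"/blobs/sha256:([0-9a-f]{64})(?:$|[?#])")


def task_id(url: str) -> str:
    """Hypothetical task ID: hash the blob digest when present, else the URL."""
    match = BLOB_DIGEST.search(url)
    key = f"blob:{match.group(1)}" if match else f"url:{url}"
    return hashlib.sha256(key.encode()).hexdigest()


digest = "a" * 64  # placeholder digest for the example
id_a = task_id(f"https://registry-a.example/v2/app/blobs/sha256:{digest}")
id_b = task_id(f"https://registry-b.example/v2/team/app/blobs/sha256:{digest}")
```

Both URLs yield the same task ID because only the digest contributes to it, so a blob already cached under one registry domain is reused for the other.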

Cache HTTP 307 redirects for split downloads

Support for caching HTTP 307 (Temporary Redirect) responses to optimize Dragonfly’s multi-piece download performance. When a download URL is split into multiple pieces, the redirect target is now cached, eliminating redundant redirect requests and reducing latency.
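
The effect can be illustrated with a small cache that remembers the redirect target for a limited time. The class name, the TTL, and the `follow_redirect` callback are assumptions for this sketch; Dragonfly's actual cache policy is not described here.

```python
import time


class RedirectCache:
    """Remember the target of a temporary redirect for a limited time."""

    def __init__(self, ttl_seconds=60.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # url -> (target, expires_at)

    def resolve(self, url, follow_redirect):
        """Return the cached target, calling follow_redirect(url) on a miss."""
        now = self._clock()
        entry = self._entries.get(url)
        if entry and entry[1] > now:
            return entry[0]
        target = follow_redirect(url)
        self._entries[url] = (target, now + self._ttl)
        return target


redirect_lookups = []


def follow(url):
    # Stand-in for an HTTP request that receives a 307 response.
    redirect_lookups.append(url)
    return "https://cdn.example/object"


cache = RedirectCache()
# Four pieces of the same download share one redirect lookup.
piece_targets = [cache.resolve("https://origin.example/file", follow) for _ in range(4)]
```

Since a 307 is temporary by definition, bounding the cache lifetime (here with a TTL) keeps stale targets from being reused indefinitely.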

Go Client deprecated and replaced by Rust client

The Go client has been deprecated and replaced by the Rust Client. All future development and maintenance will focus exclusively on the Rust client, which offers improved performance, stability, and reliability.

For more information, please refer to dragonflyoss/client.

Additional enhancements

- Enable 64K page size support for ARM64 in the Dragonfly Rust client.

- Fix missing git commit metadata in dfget version output.

- Support for config_path of io.containerd.cri.v1.images plugin for containerd V3 configuration.

- Replace the glibc DNS resolver with hickory-dns in reqwest to implement DNS caching and prevent excessive DNS lookups during piece downloads.

- Support for the --include-files flag to selectively download files from a directory.

- Add the --no-progress flag to disable the download progress bar output.

- Support for custom request headers in backend operations, enabling flexible header configuration for HTTP requests.

- Refactored log output to reduce redundant logging and improve overall logging efficiency.

Significant bug fixes

- Changed the database field type from text to longtext to support storing preheating job information.

- Fixed panic on repeated seed peer service stops during Scheduler shutdown.

- Fixed broker authentication failure when specifying the Redis password without setting a username.

Nydus

New features and enhancements

- Nydusd: Add CRC32 validation support for both RAFS V5 and V6 formats, enhancing data integrity verification.

- Nydusd: Support resending FUSE requests during nydusd restoration, improving daemon recovery reliability.

- Nydusd: Enhance VFS state saving mechanism for daemon hot upgrade and failover.

- Nydusify: Introduce Nydus-to-OCI reverse conversion capability, enabling seamless migration back to OCI format.

- Nydusify: Implement zero-disk transfer for image copy, significantly reducing local disk usage during copy operations.

- Snapshotter: Build blob.meta into the bootstrap to improve blob fetch reliability for RAFS v6 images.

Significant bug fixes

- Nydusd: Fix auth token fetching for access_token field in registry authentication.

- Nydusd: Add recursive inode/dentry invalidation for umount API.

- Nydus Image: Fix multiple issues in optimize subcommand and add backend configuration support.

- Snapshotter: Implement lazy parent recovery for proxy mode to handle missing parent snapshots.

We encourage you to visit the d7y.io website to find out more.

Others

You can see CHANGELOG for more details.

Links

- Dragonfly Website: https://d7y.io/

- Dragonfly Repository: https://github.com/dragonflyoss/dragonfly

- Dragonfly Client Repository: https://github.com/dragonflyoss/client

- Dragonfly Console Repository: https://github.com/dragonflyoss/console

- Dragonfly Charts Repository: https://github.com/dragonflyoss/helm-charts

- Dragonfly Monitor Repository: https://github.com/dragonflyoss/monitoring


Introducing Node Readiness Controller

In the standard Kubernetes model, a node’s suitability for workloads hinges on a single binary "Ready" condition. However, in modern Kubernetes environments, nodes require complex infrastructure dependencies—such as network agents, storage drivers, GPU firmware, or custom health checks—to be fully operational before they can reliably host pods.

Today, on behalf of the Kubernetes project, I am announcing the Node Readiness Controller. This project introduces a declarative system for managing node taints, extending the readiness guardrails during node bootstrapping beyond standard conditions. By dynamically managing taints based on custom health signals, the controller ensures that workloads are only placed on nodes that meet all infrastructure-specific requirements.

Why the Node Readiness Controller?

Core Kubernetes Node "Ready" status is often insufficient for clusters with sophisticated bootstrapping requirements. Operators frequently struggle to ensure that specific DaemonSets or local services are healthy before a node enters the scheduling pool.

The Node Readiness Controller fills this gap by allowing operators to define custom scheduling gates tailored to specific node groups. This enables you to enforce distinct readiness requirements across heterogeneous clusters, ensuring, for example, that GPU-equipped nodes only accept pods once specialized drivers are verified, while general-purpose nodes follow a standard path.

It provides three primary advantages:

- Custom Readiness Definitions: Define what ready means for your specific platform.

- Automated Taint Management: The controller automatically applies or removes node taints based on condition status, preventing pods from landing on unready infrastructure.

- Declarative Node Bootstrapping: Manage multi-step node initialization reliably, with clear observability into the bootstrapping process.

Core concepts and features

The controller centers around the NodeReadinessRule (NRR) API, which allows you to define declarative gates for your nodes.

Flexible enforcement modes

The controller supports two distinct operational modes:

- Continuous enforcement: Actively maintains the readiness guarantee throughout the node’s entire lifecycle. If a critical dependency (like a device driver) fails later, the node is immediately tainted to prevent new scheduling.

- Bootstrap-only enforcement: Intended for one-time initialization steps, such as pre-pulling heavy images or hardware provisioning. Once conditions are met, the controller marks the bootstrap as complete and stops monitoring that specific rule for the node.

Condition reporting

The controller reacts to Node Conditions rather than performing health checks itself. This decoupled design allows it to integrate seamlessly with other tools existing in the ecosystem as well as custom solutions:

- Node Problem Detector (NPD): Use existing NPD setups and custom scripts to report node health.

- Readiness Condition Reporter: A lightweight agent provided by the project that can be deployed to periodically check local HTTP endpoints and patch node conditions accordingly.

Operational safety with dry run

Deploying new readiness rules across a fleet carries inherent risk. To mitigate this, dry run mode allows operators to first simulate impact on the cluster. In this mode, the controller logs intended actions and updates the rule's status to show affected nodes without applying actual taints, enabling safe validation before enforcement.

Example: CNI bootstrapping

The following NodeReadinessRule ensures a node remains unschedulable until its CNI agent is functional. The controller monitors a custom cniplugin.example.net/NetworkReady condition and only removes the readiness.k8s.io/acme.com/network-unavailable taint once the status is True.

apiVersion: readiness.node.x-k8s.io/v1alpha1
kind: NodeReadinessRule
metadata:
  name: network-readiness-rule
spec:
  conditions:
    - type: "cniplugin.example.net/NetworkReady"
      requiredStatus: "True"
  taint:
    key: "readiness.k8s.io/acme.com/network-unavailable"
    effect: "NoSchedule"
    value: "pending"
  enforcementMode: "bootstrap-only"
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/worker: ""
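
The behaviour described above (taint while conditions are unmet, stop monitoring after bootstrap, log-only in dry run) can be modelled in a few lines. This is a simplified sketch, not the controller's implementation: the `Rule` and `Node` types, the `"continuous"` mode string, the `bootstrapped` marker, and the returned action names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    required: dict          # condition type -> required status
    taint_key: str
    enforcement_mode: str   # "bootstrap-only" or (assumed) "continuous"
    dry_run: bool = False


@dataclass
class Node:
    conditions: dict = field(default_factory=dict)  # condition type -> status
    taints: set = field(default_factory=set)
    bootstrapped: bool = False  # hypothetical per-rule completion marker


def reconcile(rule: Rule, node: Node) -> str:
    """Return the action taken (or, in dry run, the action that would be taken)."""
    if rule.enforcement_mode == "bootstrap-only" and node.bootstrapped:
        return "skip"  # bootstrap already completed; the rule is no longer monitored
    ready = all(node.conditions.get(t) == s for t, s in rule.required.items())
    has_taint = rule.taint_key in node.taints
    if ready and has_taint:
        action = "remove-taint"
    elif not ready and not has_taint:
        action = "add-taint"
    else:
        action = "none"
    if rule.dry_run:
        return f"dry-run:{action}"  # report only, never mutate the node
    if action == "add-taint":
        node.taints.add(rule.taint_key)
    elif action == "remove-taint":
        node.taints.discard(rule.taint_key)
    if ready and rule.enforcement_mode == "bootstrap-only":
        node.bootstrapped = True
    return action


rule = Rule(
    required={"cniplugin.example.net/NetworkReady": "True"},
    taint_key="readiness.k8s.io/acme.com/network-unavailable",
    enforcement_mode="bootstrap-only",
)
node = Node()
first = reconcile(rule, node)   # condition missing, so the taint is added
node.conditions["cniplugin.example.net/NetworkReady"] = "True"
second = reconcile(rule, node)  # condition met, so the taint is removed
```

With a continuous rule, the early `skip` branch never triggers, so a condition that later flips back to a non-matching status causes the taint to be re-applied.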


Getting involved

The Node Readiness Controller is just getting started, with our initial releases out, and we are seeking community feedback to refine the roadmap. Following our productive Unconference discussions at KubeCon NA 2025, we are excited to continue the conversation in person.

Join us at KubeCon + CloudNativeCon Europe 2026 for our maintainer track session: Addressing Non-Deterministic Scheduling: Introducing the Node Readiness Controller.

In the meantime, you can contribute or track our progress here:

- GitHub: https://sigs.k8s.io/node-readiness-controller

- Slack: Join the conversation in #sig-node-readiness-controller

- Documentation: Getting Started

New Conversion from cgroup v1 CPU Shares to v2 CPU Weight

I'm excited to announce the implementation of an improved conversion formula from cgroup v1 CPU shares to cgroup v2 CPU weight. This enhancement addresses critical issues with CPU priority allocation for Kubernetes workloads when running on systems with cgroup v2.

Background

Kubernetes was originally designed with cgroup v1 in mind, where CPU shares were defined simply by assigning the container's CPU requests in millicpu form.

For example, a container requesting 1 CPU (1000m) would get cpu.shares = 1024.

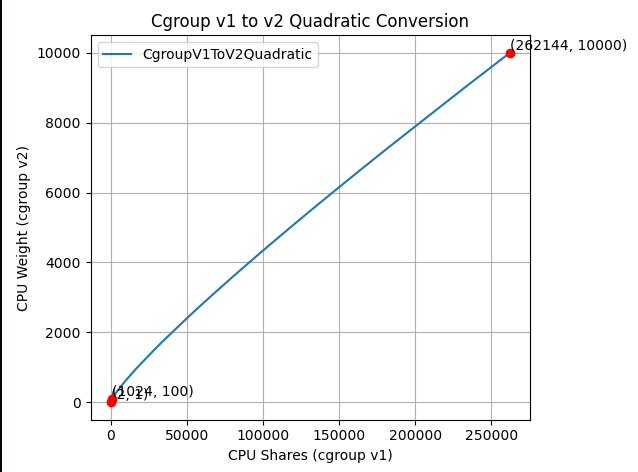

After a while, cgroup v1 started being replaced by its successor, cgroup v2. In cgroup v2, the concept of CPU shares (which ranges from 2 to 262144, or from 2¹ to 2¹⁸) was replaced with CPU weight (which ranges from 1 to 10000, or from 10⁰ to 10⁴).

With the transition to cgroup v2, KEP-2254 introduced a conversion formula to map cgroup v1 CPU shares to cgroup v2 CPU weight. The conversion formula was defined as: cpu.weight = (1 + ((cpu.shares - 2) * 9999) / 262142)

This formula linearly maps values from [2¹, 2¹⁸] to [10⁰, 10⁴].
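
As a worked example, the linear mapping can be reproduced in a few lines. The sketch assumes the usual Kubernetes conversion from a CPU request to shares (milliCPU × 1024 / 1000, clamped to the valid range) and integer division in the weight formula; the function names are illustrative.

```python
def millicpu_to_shares(milli_cpu: int) -> int:
    """Convert a CPU request in millicores to cgroup v1 shares."""
    shares = milli_cpu * 1024 // 1000
    return max(2, min(shares, 262144))  # clamp to the cgroup v1 range


def linear_weight(shares: int) -> int:
    """Previous linear mapping from [2, 262144] shares to [1, 10000] weight."""
    return 1 + ((shares - 2) * 9999) // 262142


for milli_cpu in (100, 1000):
    shares = millicpu_to_shares(milli_cpu)
    print(f"{milli_cpu}m -> cpu.shares={shares} -> cpu.weight={linear_weight(shares)}")
```

A 100m request yields 102 shares and a weight of 4, while a full CPU yields 1024 shares and a weight of 39, well below the cgroup v2 default of 100.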

While this approach is simple, the linear mapping imposes a few significant problems and impacts both performance and configuration granularity.

Problems with previous conversion formula

The current conversion formula creates two major issues:

1. Reduced priority against non-Kubernetes workloads

In cgroup v1, the default value for CPU shares is 1024, meaning a container requesting 1 CPU has equal priority with system processes that live outside of Kubernetes' scope.

However, in cgroup v2, the default CPU weight is 100, but the current formula converts 1 CPU (1000m) to only ≈39 weight, less than 40% of the default.

Example:

- Container requesting 1 CPU (1000m)

- cgroup v1: cpu.shares = 1024 (equal to default)

- cgroup v2 (current): cpu.weight = 39 (much lower than default 100)

This means that after moving to cgroup v2, Kubernetes (or OCI) workloads would de facto reduce their CPU priority against non-Kubernetes processes. The problem can be severe for setups with many system daemons that run outside of Kubernetes' scope and expect Kubernetes workloads to have priority, especially in situations of resource starvation.

2. Unmanageable granularity

The current formula produces very low values for small CPU requests, limiting the ability to create sub-cgroups within containers for fine-grained resource distribution (which will possibly be much easier moving forward, see KEP #5474 for more info).

Example:

- Container requesting 100m CPU

- cgroup v1: cpu.shares = 102

- cgroup v2 (current): cpu.weight = 4 (too low for sub-cgroup configuration)

With cgroup v1, requesting 100m CPU, which led to 102 CPU shares, was manageable in the sense that sub-cgroups could be created inside the main container, assigning fine-grained CPU priorities to different groups of processes. With cgroup v2, however, a weight of 4 is very hard to distribute between sub-cgroups since it's not granular enough.

With plans to allow writable cgroups for unprivileged containers, this becomes even more relevant.

New conversion formula

Description

The new formula is more complicated, but does a much better job mapping between cgroup v1 CPU shares and cgroup v2 CPU weight:

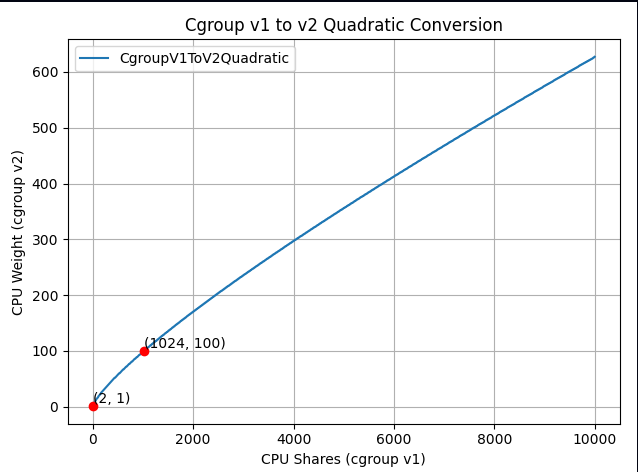

$$cpu.weight = \lceil 10^{(L^{2}/612 + 125L/612 - 7/34)} \rceil, \text{ where: } L = \log_2(cpu.shares)$$

The idea is that the exponent is a quadratic function of L, chosen so that the curve passes through the following points:

- (2, 1): The minimum values for both ranges.

- (1024, 100): The default values for both ranges.

- (262144, 10000): The maximum values for both ranges.

Visually, the new function looks as follows:

And if you zoom in to the important part:

The new formula is "close to linear", yet it is carefully designed so that the curve passes through the three important points above.
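
The formula can be checked numerically with a short sketch. Clamping at the ends of the range is an assumption made here to keep the anchor points exact; OCI runtimes may handle the boundaries differently, and the function name is illustrative.

```python
import math


def quadratic_weight(shares: int) -> int:
    """New mapping: 10 raised to a quadratic in log2(shares), rounded up."""
    if shares <= 2:
        return 1        # assumption: clamp at the minimum of both ranges
    if shares >= 262144:
        return 10000    # assumption: clamp at the maximum of both ranges
    log_shares = math.log2(shares)
    exponent = (log_shares ** 2 + 125 * log_shares) / 612 - 7 / 34
    return math.ceil(10 ** exponent)


small_request = quadratic_weight(102)  # 100m CPU request -> 102 shares
```

At L = 1, 10 and 18 the exponent evaluates to 0, 2 and 4, which gives the three anchor points (2, 1), (1024, 100) and (262144, 10000). For 102 shares the function returns 17. Results right at an anchor point can differ by one between implementations, because floating-point error before the ceiling may push the value just above a whole number.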

How it solves the problems

- Better priority alignment: A container requesting 1 CPU (1000m) will now get cpu.weight = 102. This value is close to cgroup v2's default 100. This restores the intended priority relationship between Kubernetes workloads and system processes.

- Improved granularity: A container requesting 100m CPU will get cpu.weight = 17. This enables better fine-grained resource distribution within containers.

Adoption and integration

This change was implemented at the OCI layer. In other words, this is not implemented in Kubernetes itself; therefore the adoption of the new conversion formula depends solely on the OCI runtime adoption.

For example:

- runc: The new formula is enabled from version 1.3.2.

- crun: The new formula is enabled from version 1.23.

Impact on existing deployments

Important: Some consumers may be affected if they assume the older linear conversion formula. Applications or monitoring tools that directly calculate expected CPU weight values based on the previous formula may need updates to account for the new quadratic conversion. This is particularly relevant for:

- Custom resource management tools that predict CPU weight values.

- Monitoring systems that validate or expect specific weight values.

- Applications that programmatically set or verify CPU weight values.

The Kubernetes project recommends testing the new conversion formula in non-production environments before upgrading OCI runtimes to ensure compatibility with existing tooling.

Where can I learn more?

For those interested in this enhancement:

- Kubernetes GitHub Issue #131216 - Detailed technical analysis and examples, including discussions and reasoning for choosing the above formula.

- KEP-2254: cgroup v2 - Original cgroup v2 implementation in Kubernetes.

- Kubernetes cgroup documentation - Current resource management guidance.

How do I get involved?

For those interested in getting involved with Kubernetes node-level features, join the Kubernetes Node Special Interest Group. We always welcome new contributors and diverse perspectives on resource management challenges.

Ingress NGINX: Statement from the Kubernetes Steering and Security Response Committees

In March 2026, Kubernetes will retire Ingress NGINX, a piece of critical infrastructure for about half of cloud native environments. The retirement of Ingress NGINX was announced for March 2026, after years of public warnings that the project was in dire need of contributors and maintainers. There will be no more releases for bug fixes, security patches, or any updates of any kind after the project is retired. This cannot be ignored, brushed off, or left until the last minute to address. We cannot overstate the severity of this situation or the importance of beginning migration to alternatives like Gateway API or one of the many third-party Ingress controllers immediately.

To be abundantly clear: choosing to remain with Ingress NGINX after its retirement leaves you and your users vulnerable to attack. None of the available alternatives are direct drop-in replacements. This will require planning and engineering time. Half of you will be affected. You have two months left to prepare.

Existing deployments will continue to work, so unless you proactively check, you may not know you are affected until you are compromised. In most cases, you can check to find out whether or not you rely on Ingress NGINX by running kubectl get pods --all-namespaces --selector app.kubernetes.io/name=ingress-nginx with cluster administrator permissions.

Despite its broad appeal and widespread use by companies of all sizes, and repeated calls for help from the maintainers, the Ingress NGINX project never received the contributors it so desperately needed. According to internal Datadog research, about 50% of cloud native environments currently rely on this tool, and yet for the last several years, it has been maintained solely by one or two people working in their free time. Without sufficient staffing to maintain the tool to a standard both ourselves and our users would consider secure, the responsible choice is to wind it down and refocus efforts on modern alternatives like Gateway API.

We did not make this decision lightly; as inconvenient as it is now, doing so is necessary for the safety of all users and the ecosystem as a whole. Unfortunately, the flexibility Ingress NGINX was designed with, that was once a boon, has become a burden that cannot be resolved. With the technical debt that has piled up, and fundamental design decisions that exacerbate security flaws, it is no longer reasonable or even possible to continue maintaining the tool even if resources did materialize.

We issue this statement together to reinforce the scale of this change and the potential for serious risk to a significant percentage of Kubernetes users if this issue is ignored. It is imperative that you check your clusters now. If you are reliant on Ingress NGINX, you must begin planning for migration.

Thank you,

Kubernetes Steering Committee

Kubernetes Security Response Committee

Experimenting with Gateway API using kind

This document will guide you through setting up a local experimental environment with Gateway API on kind. This setup is designed for learning and testing. It helps you understand Gateway API concepts without production complexity.

Caution:

This is an experimental learning setup and should not be used in production. The components used in this document are not suited for production usage. Once you're ready to deploy Gateway API in a production environment, select an implementation that suits your needs.

Overview

In this guide, you will:

- Set up a local Kubernetes cluster using kind (Kubernetes in Docker)

- Deploy cloud-provider-kind, which provides both LoadBalancer Services and a Gateway API controller

- Create a Gateway and HTTPRoute to route traffic to a demo application

- Test your Gateway API configuration locally

This setup is ideal for learning, development, and experimentation with Gateway API concepts.

Prerequisites

Before you begin, ensure you have the following installed on your local machine:

- Docker - Required to run kind and cloud-provider-kind

- kubectl - The Kubernetes command-line tool

- kind - Kubernetes in Docker

- curl - Required to test the routes

Create a kind cluster

Create a new kind cluster by running:

kind create cluster

This will create a single-node Kubernetes cluster running in a Docker container.

Install cloud-provider-kind

Next, you need cloud-provider-kind, which provides two key components for this setup:

- A LoadBalancer controller that assigns addresses to LoadBalancer-type Services

- A Gateway API controller that implements the Gateway API specification

It also automatically installs the Gateway API Custom Resource Definitions (CRDs) in your cluster.

Run cloud-provider-kind as a Docker container on the same host where you created the kind cluster:

VERSION="$(basename $(curl -s -L -o /dev/null -w '%{url_effective}' https://github.com/kubernetes-sigs/cloud-provider-kind/releases/latest))"

docker run -d --name cloud-provider-kind --rm --network host -v /var/run/docker.sock:/var/run/docker.sock registry.k8s.io/cloud-provider-kind/cloud-controller-manager:${VERSION}

Note: On some systems, you may need elevated privileges to access the Docker socket.

Verify that cloud-provider-kind is running:

docker ps --filter name=cloud-provider-kind

You should see the container listed and in a running state. You can also check the logs:

docker logs cloud-provider-kind

Experimenting with Gateway API

Now that your cluster is set up, you can start experimenting with Gateway API resources.

cloud-provider-kind automatically provisions a GatewayClass called cloud-provider-kind. You'll use this class to create your Gateway.

It is worth noting that, while kind is not a cloud provider, the project is named cloud-provider-kind because it provides features that simulate a cloud-enabled environment.

Deploy a Gateway

The following manifest will:

- Create a new namespace called gateway-infra

- Deploy a Gateway that listens on port 80

- Accept HTTPRoutes with hostnames matching the *.exampledomain.example pattern

- Allow routes from any namespace to attach to the Gateway.

Note: In real clusters, prefer Same or Selector values on the allowedRoutes namespace selector field to limit attachments.

Apply the following manifest:

---
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-infra
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: gateway
  namespace: gateway-infra
spec:
  gatewayClassName: cloud-provider-kind
  listeners:
  - name: default
    hostname: "*.exampledomain.example"
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All

Then verify that your Gateway is properly programmed and has an address assigned:

kubectl get gateway -n gateway-infra gateway

Expected output:

NAME      CLASS                 ADDRESS      PROGRAMMED   AGE
gateway   cloud-provider-kind   172.18.0.3   True         5m6s

The PROGRAMMED column should show True, and the ADDRESS field should contain an IP address.

Deploy a demo application

Next, deploy a simple echo application that will help you test your Gateway configuration. This application:

- Listens on port 3000

- Echoes back request details including path, headers, and environment variables

- Runs in a namespace called demo

Apply the following manifest:

apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  ports:
  - name: http
    port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app.kubernetes.io/name: echo
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: echo
  template:
    metadata:
      labels:
        app.kubernetes.io/name: echo
    spec:
      containers:
      - env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: registry.k8s.io/gateway-api/echo-basic:v20251204-v1.4.1
        name: echo-basic

Create an HTTPRoute

Now create an HTTPRoute to route traffic from your Gateway to the echo application. This HTTPRoute will:

- Respond to requests for the hostname some.exampledomain.example

- Route traffic to the echo application

- Attach to the Gateway in the gateway-infra namespace

Apply the following manifest:

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: echo
  namespace: demo
spec:
  parentRefs:
  - name: gateway
    namespace: gateway-infra
  hostnames: ["some.exampledomain.example"]
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: echo
      port: 3000
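
The route attaches because its hostname falls under the Gateway listener's wildcard. The sketch below models that check in a simplified form: it treats a leading `*.` on the listener as a suffix wildcard requiring at least one extra label and does not handle wildcard hostnames on the route side. The function name is illustrative, and the full matching rules are defined by the Gateway API specification.

```python
def hostname_matches(listener: str, route: str) -> bool:
    """Check a route hostname against a listener hostname (simplified)."""
    listener, route = listener.lower(), route.lower()
    if listener.startswith("*."):
        suffix = listener[1:]  # e.g. ".exampledomain.example"
        # The route must end with the suffix and have something before it.
        return route.endswith(suffix) and len(route) > len(suffix)
    return listener == route


attaches = hostname_matches("*.exampledomain.example", "some.exampledomain.example")
bare_domain = hostname_matches("*.exampledomain.example", "exampledomain.example")
```

In this model `attaches` is true while `bare_domain` is false, which is why the route uses some.exampledomain.example rather than the bare domain.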

Test your route

The final step is to test your route using curl. You'll make a request to the Gateway's IP address with the hostname some.exampledomain.example. The command below is for POSIX shell only, and may need to be adjusted for your environment:

GW_ADDR=$(kubectl get gateway -n gateway-infra gateway -o jsonpath='{.status.addresses[0].value}')

curl --resolve some.exampledomain.example:80:${GW_ADDR} http://some.exampledomain.example

You should receive a JSON response similar to this:

{
 "path": "/",
 "host": "some.exampledomain.example",
 "method": "GET",
 "proto": "HTTP/1.1",
 "headers": {
  "Accept": [
   "*/*"
  ],
  "User-Agent": [
   "curl/8.15.0"
  ]
 },
 "namespace": "demo",
 "ingress": "",
 "service": "",
 "pod": "echo-dc48d7cf8-vs2df"
}

If you see this response, congratulations! Your Gateway API setup is working correctly.

Troubleshooting

If something isn't working as expected, you can troubleshoot by checking the status of your resources.

Check the Gateway status

First, inspect your Gateway resource:

kubectl get gateway -n gateway-infra gateway -o yaml

Look at the status section for conditions. Your Gateway should have:

- Accepted: True - The Gateway was accepted by the controller

- Programmed: True - The Gateway was successfully configured

- .status.addresses populated with an IP address

Check the HTTPRoute status

Next, inspect your HTTPRoute:

kubectl get httproute -n demo echo -o yaml

Check the status.parents section for conditions. Common issues include:

- ResolvedRefs set to False with reason BackendNotFound; this means that the backend Service doesn't exist or has the wrong name

- Accepted set to False; this means that the route couldn't attach to the Gateway (check namespace permissions or hostname matching)

Example error when a backend is not found:

status:
  parents:
  - conditions:
    - lastTransitionTime: "2026-01-19T17:13:35Z"
      message: backend not found
      observedGeneration: 2
      reason: BackendNotFound
      status: "False"
      type: ResolvedRefs
    controllerName: kind.sigs.k8s.io/gateway-controller

Check controller logs

If the resource statuses don't reveal the issue, check the cloud-provider-kind logs:

docker logs -f cloud-provider-kind

This will show detailed logs from both the LoadBalancer and Gateway API controllers.

Cleanup

When you're finished with your experiments, you can clean up the resources:

Remove Kubernetes resources

Delete the namespaces (this will remove all resources within them):

kubectl delete namespace gateway-infra

kubectl delete namespace demo

Stop cloud-provider-kind

Stop and remove the cloud-provider-kind container:

docker stop cloud-provider-kind

Because the container was started with the --rm flag, it will be automatically removed when stopped.

Delete the kind cluster

Finally, delete the kind cluster:

kind delete cluster

Next steps

Now that you've experimented with Gateway API locally, you're ready to explore production-ready implementations:

- Production Deployments: Review the Gateway API implementations to find a controller that matches your production requirements

- Learn More: Explore the Gateway API documentation to learn about advanced features like TLS, traffic splitting, and header manipulation

- Advanced Routing: Experiment with path-based routing, header matching, request mirroring and other features following Gateway API user guides

A final word of caution

This kind setup is for development and learning only. Always use a production-grade Gateway API implementation for real workloads.

Cluster API v1.12: Introducing In-place Updates and Chained Upgrades

Cluster API brings declarative management to Kubernetes cluster lifecycle, allowing users and platform teams to define the desired state of clusters and rely on controllers to continuously reconcile toward it.

Similar to how you can use StatefulSets or Deployments in Kubernetes to manage a group of Pods, in Cluster API you can use KubeadmControlPlane to manage a set of control plane Machines, or you can use MachineDeployments to manage a group of worker Nodes.

The Cluster API v1.12.0 release expands what is possible in Cluster API, reducing friction in common lifecycle operations by introducing in-place updates and chained upgrades.

Emphasis on simplicity and usability

With v1.12.0, the Cluster API project demonstrates once again that this community is capable of delivering a great amount of innovation, while at the same time minimizing impact for Cluster API users.

What does this mean in practice?

Users simply have to change the Cluster or the Machine spec (just as with previous Cluster API releases), and Cluster API will automatically trigger in-place updates or chained upgrades when possible and advisable.

In-place Updates

Like Kubernetes does for Pods in Deployments, Cluster API performs rollouts by creating a new Machine and deleting the old one when the Machine spec changes.

This approach, inspired by the principle of immutable infrastructure, has a set of considerable advantages:

- It is simple to explain, predictable, consistent and easy to reason about with users and engineers.

- It is simple to implement, because it relies only on two core primitives, create and delete.

- Implementation does not depend on Machine-specific choices, like OS, bootstrap mechanism etc.

As a result, Machine rollouts drastically reduce the number of variables to be considered when managing the lifecycle of a host server that is hosting Nodes.

However, while the advantages of immutability are not in question, both Kubernetes and Cluster API are undergoing a similar journey, introducing changes that allow users to minimize workload disruption whenever possible.

Over time, Cluster API has also introduced several improvements to immutable rollouts, including:

- Support for in-place propagation of changes affecting Kubernetes resources only, thus avoiding unnecessary rollouts

- A way to taint outdated nodes with PreferNoSchedule, thus reducing Pod churn by optimizing how Pods are rescheduled during rollouts.

- Support for the delete first rollout strategy, thus making it easier to do immutable rollouts on bare metal / environments with constrained resources.

The new in-place update feature in Cluster API is the next step in this journey.

With the v1.12.0 release, Cluster API introduces support for update extensions allowing users to make changes on existing machines in-place, without deleting and re-creating the Machines.

Both KubeadmControlPlane and MachineDeployments support in-place updates based on the new update extension, which significantly expands what is possible in Cluster API.

How do in-place updates work?

The simplest way to explain it is that once the user triggers an update by changing the desired state of Machines, then Cluster API chooses the best tool to achieve the desired state.

What is new is that Cluster API can now choose between immutable rollouts and in-place update extensions to perform the required changes.

Importantly, this is not immutable rollouts vs in-place updates; Cluster API considers both valid options and selects the most appropriate mechanism for a given change.

From the perspective of the Cluster API maintainers, in-place updates are most useful for making changes that don't otherwise require a node drain or pod restart; for example: changing user credentials for the Machine. On the other hand, when the workload will be disrupted anyway, just do a rollout.
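As a rough illustration of that selection logic, the Go sketch below picks a mechanism for a set of Machine changes. The `Change` type, the `canUpdateInPlace` callback (standing in for an update extension's answer), and the rule that disruptive changes fall back to a rollout are assumptions made for this example only; they are not Cluster API's actual types or decision procedure.

```go
package main

import "fmt"

// Strategy is the mechanism chosen to apply a set of changes.
type Strategy string

const (
	Rollout Strategy = "rollout"  // create a new Machine, delete the old one
	InPlace Strategy = "in-place" // delegate the change to an update extension
)

// Change is a hypothetical description of one modified Machine field.
type Change struct {
	Field      string
	Disruptive bool // true if applying it needs a drain or Pod restart anyway
}

// ChooseStrategy returns InPlace only when every change is non-disruptive and
// the (hypothetical) extension callback accepts it; otherwise it returns Rollout.
func ChooseStrategy(changes []Change, canUpdateInPlace func(Change) bool) Strategy {
	for _, c := range changes {
		if c.Disruptive || !canUpdateInPlace(c) {
			return Rollout
		}
	}
	return InPlace
}

func main() {
	// Assumed extension that only knows how to rotate credentials.
	extension := func(c Change) bool { return c.Field == "credentials" }

	fmt.Println(ChooseStrategy([]Change{{Field: "credentials"}}, extension))
	fmt.Println(ChooseStrategy([]Change{{Field: "kubernetesVersion", Disruptive: true}}, extension))
}
```

With these assumptions, the credentials change is handled in place, while the version change falls back to a rollout because the workload would be disrupted anyway.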

Nevertheless, Cluster API remains true to its extensible nature, and everyone can create their own update extension and decide when and how to use in-place updates by trading in some of the benefits of immutable rollouts.

For a deep dive into this feature, make sure to attend the session In-place Updates with Cluster API: The Sweet Spot Between Immutable and Mutable Infrastructure at KubeCon EU in Amsterdam!

Chained Upgrades

ClusterClass and managed topologies in Cluster API jointly provide a powerful and effective framework that acts as a building block for many platforms offering Kubernetes-as-a-Service.

Now with v1.12.0 this feature is making another important step forward, by allowing users to upgrade by more than one Kubernetes minor version in a single operation, commonly referred to as a chained upgrade.

This allows users to declare a target Kubernetes version and let Cluster API safely orchestrate the required intermediate steps, rather than manually managing each minor upgrade.

The simplest way to explain how chained upgrades work is that once the user triggers an update by changing the desired version for a Cluster, Cluster API computes an upgrade plan and then starts executing it. Rather than (for example) updating the Cluster to v1.33.0, then v1.34.0, and then v1.35.0, checking on progress at each step, a chained upgrade lets you go directly to v1.35.0.

Executing an upgrade plan means upgrading control plane and worker machines in a strictly controlled order, repeating this process as many times as needed to reach the desired state. The Cluster API is now capable of managing this for you.

Cluster API takes care of optimizing and minimizing the upgrade steps for worker machines, and in fact worker machines will skip upgrades to intermediate Kubernetes minor releases whenever allowed by the Kubernetes version skew policies.
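To make the idea of an upgrade plan concrete, here is a minimal sketch in Go. It assumes that the control plane moves one minor version at a time and that a kubelet may lag the API server by at most three minor versions (the upstream skew policy as understood at the time of writing). The `Step` type and `PlanUpgrade` function are hypothetical names for this example and do not reflect how Cluster API actually computes its plan.

```go
package main

import "fmt"

// maxKubeletSkew is the assumed number of minor versions a kubelet may lag
// behind the API server.
const maxKubeletSkew = 3

// Step is one entry of a hypothetical upgrade plan.
type Step struct {
	Component string // "control-plane" or "workers"
	Minor     int    // target minor version, e.g. 35 for v1.35
}

// PlanUpgrade returns the ordered steps to move a cluster from minor version
// `current` to `target`. The control plane visits every minor version; workers
// are upgraded only when the skew limit would otherwise be exceeded, and once
// more at the end to reach the target.
func PlanUpgrade(current, target int) []Step {
	var plan []Step
	workers := current
	for cp := current + 1; cp <= target; cp++ {
		if cp-workers > maxKubeletSkew {
			// Catch workers up to the control plane's current version first.
			workers = cp - 1
			plan = append(plan, Step{Component: "workers", Minor: workers})
		}
		plan = append(plan, Step{Component: "control-plane", Minor: cp})
	}
	if workers != target {
		plan = append(plan, Step{Component: "workers", Minor: target})
	}
	return plan
}

func main() {
	for _, s := range PlanUpgrade(32, 35) {
		fmt.Printf("%s -> v1.%d\n", s.Component, s.Minor)
	}
}
```

For a v1.32 to v1.35 upgrade, this sketch yields three control plane steps followed by a single worker step, which is the kind of intermediate-version skipping described above.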

Here too, extensibility is at the core of the feature: upgrade plan runtime extensions can be used to influence how the upgrade plan is computed; similarly, lifecycle hooks can be used to automate other tasks that must be performed during an upgrade, e.g. upgrading an addon after the control plane update has completed.

From our perspective, chained upgrades are most useful for users who struggle to keep up with Kubernetes minor releases and, for example, want to upgrade only once per year, moving up three versions at a time (n-3 → n). But be warned: the fact that you can now easily upgrade by more than one minor version is not an excuse to not patch your cluster frequently!

Release team

I would like to thank all the contributors, the maintainers, and all the engineers that volunteered for the release team.

The reliability and predictability of Cluster API releases, which is one of the most appreciated features from our users, is only possible with the support, commitment, and hard work of its community.

Kudos to the entire Cluster API community for the v1.12.0 release and all the great releases delivered in 2025! If you are interested in getting involved, learn about Cluster API contributing guidelines.

What’s next?

If you read the Cluster API manifesto, you can see how the Cluster API subproject claims the right to remain unfinished, recognizing the need to continuously evolve, improve, and adapt to the changing needs of Cluster API’s users and the broader Cloud Native ecosystem.

As Kubernetes itself continues to evolve, the Cluster API subproject will keep advancing alongside it, focusing on safer upgrades, reduced disruption, and stronger building blocks for platforms managing Kubernetes at scale.

Innovation remains at the heart of Cluster API; stay tuned for an exciting 2026!


Headlamp in 2025: Project Highlights

This announcement is a recap from a post originally published on the Headlamp blog.

Headlamp has come a long way in 2025. The project has continued to grow – reaching more teams across platforms, powering new workflows and integrations through plugins, and seeing increased collaboration from the broader community.

We wanted to take a moment to share a few updates and highlight how Headlamp has evolved over the past year.

Updates

Joining Kubernetes SIG UI

This year marked a big milestone for the project: Headlamp is now officially part of Kubernetes SIG UI. This move brings roadmap and design discussions even closer to the core Kubernetes community and reinforces Headlamp’s role as a modern, extensible UI for the project.

As part of that, we’ve also been sharing more about making Kubernetes approachable for a wider audience, including an appearance on Enlightening with Whitney Lee and a talk at KCD New York 2025.

Linux Foundation mentorship

This year, we were excited to work with several students through the Linux Foundation’s Mentorship program, and our mentees have already left a visible mark on Headlamp:

- Adwait Godbole built the KEDA plugin, adding a UI in Headlamp to view and manage KEDA resources like ScaledObjects and ScaledJobs.

- Dhairya Majmudar set up an OpenTelemetry-based observability stack for Headlamp, wiring up metrics, logs, and traces so the project is easier to monitor and debug.

- Aishwarya Ghatole led a UX audit of Headlamp plugins, identifying usability issues and proposing design improvements and personas for plugin users.

- Anirban Singha developed the Karpenter plugin, giving Headlamp a focused view into Karpenter autoscaling resources and decisions.

- Aditya Chaudhary improved Gateway API support, so you can see networking relationships on the resource map, as well as improved support for many of the new Gateway API resources.

- Faakhir Zahid completed a way to easily manage plugin installation with Headlamp deployed in clusters.

- Saurav Upadhyay worked on backend caching for Kubernetes API calls, reducing load on the API server and improving performance in Headlamp.

New changes

Multi-cluster view

Managing multiple clusters is challenging: teams often switch between tools and lose context when trying to see what runs where. Headlamp solves this by giving you a single view to compare clusters side-by-side. This makes it easier to understand workloads across environments and reduces the time spent hunting for resources.

View of multi-cluster workloads

Projects

Kubernetes apps often span multiple namespaces and resource types, which makes troubleshooting feel like piecing together a puzzle. We’ve added Projects to give you an application-centric view that groups related resources across multiple namespaces – and even clusters. This allows you to reduce sprawl, troubleshoot faster, and collaborate without digging through YAML or cluster-wide lists.

View of the new Projects feature

Changes:

- New “Projects” feature for grouping namespaces into app- or team-centric projects

- Extensible Projects details view that plugins can customize with their own tabs and actions

Navigation and Activities

Day-to-day ops in Kubernetes often means juggling logs, terminals, YAML, and dashboards across clusters. We redesigned Headlamp’s navigation to treat these as first-class “activities” you can keep open and come back to, instead of one-off views you lose as soon as you click away.

View of the new task bar

Changes:

- A new task bar/activities model lets you pin logs, exec sessions, and details as ongoing activities

- An activity overview with a “Close all” action and cluster information

- Multi-select and global filters in tables

Thanks to Jan Jansen and Aditya Chaudhary.

Search and map

When something breaks in production, the first two questions are usually “where is it?” and “what is it connected to?” We’ve upgraded both search and the map view so you can get from a high-level symptom to the right set of objects much faster.

View of the new Advanced Search feature

Changes:

- An Advanced search view that supports rich, expression-based queries over Kubernetes objects

- Improved global search that understands labels and multiple search items, and can even update your current namespace based on what you find

- EndpointSlice support in the Network section

- A richer map view that now includes Custom Resources and Gateway API objects

Thanks to Fabian, Alexander North, and Victor Marcolino from Swisscom, and also to Aditya Chaudhary.

OIDC and authentication

We’ve put real work into making OIDC setup clearer and more resilient, especially for in-cluster deployments.

View of user information for OIDC clusters

Changes:

- User information displayed in the top bar for OIDC-authenticated users

- PKCE support for more secure authentication flows, as well as hardened token refresh handling

- Documentation for using the access token with -oidc-use-access-token=true

- Improved support for public OIDC clients like AKS and EKS

- New guide for setting up Headlamp on AKS with Azure Entra-ID using OAuth2Proxy

Thanks to David Dobmeier and Harsh Srivastava.

App Catalog and Helm

We’ve broadened how you deploy and source apps via Headlamp, specifically supporting vanilla Helm repos.

Changes:

- A more capable Helm chart with optional backend TLS termination, PodDisruptionBudgets, custom pod labels, and more

- Improved formatting and added missing access token arg in the Helm chart

- New in-cluster Helm support with an --enable-helm flag and a service proxy

Thanks to Vrushali Shah and Murali Annamneni from Oracle, and also to Pat Riehecky, Joshua Akers, Rostislav Stříbrný, Rick L, and Victor.

Performance, accessibility, and UX

Finally, we’ve spent a lot of time on the things you notice every day but don’t always make headlines: startup time, list views, log viewers, accessibility, and small network UX details. A continuous accessibility self-audit has also helped us identify key issues and make Headlamp easier for everyone to use.

View of the Learn section in docs

Changes:

- Significant desktop improvements, with up to 60% faster app loads and much quicker dev-mode reloads for contributors

- Numerous table and log viewer refinements: persistent sort order, consistent row actions, copy-name buttons, better tooltips, and more forgiving log inputs

- Accessibility and localization improvements, including fixes for zoom-related layout issues, better color contrast, improved screen reader support, and expanded language coverage

- More control over resources, with live pod CPU/memory metrics, richer pod details, and inline editing for secrets and CRD fields

- A refreshed documentation and plugin onboarding experience, including a “Learn” section and plugin showcase

- A more complete NetworkPolicy UI and network-related polish

- Nightly builds available for early testing

Thanks to Jaehan Byun and Jan Jansen.

Plugins and extensibility

Discovering plugins is simpler now – no more hopping between Artifact Hub and assorted GitHub repos. Browse our dedicated Plugins page for a curated catalog of Headlamp-endorsed plugins, along with a showcase of featured plugins.

View of the Plugins showcase

Headlamp AI Assistant

Managing Kubernetes often means memorizing commands and juggling tools. Headlamp’s new AI Assistant changes this by adding a natural-language interface built into the UI. Now, instead of typing kubectl or digging through YAML you can ask, “Is my app healthy?” or “Show logs for this deployment,” and get answers in context, speeding up troubleshooting and smoothing onboarding for new users. Learn more about it here.

New plugin additions

Alongside the new AI Assistant, we’ve been growing Headlamp’s plugin ecosystem so you can bring more of your workflows into a single UI, with integrations like Minikube, Karpenter, and more.

Highlights from the latest plugin releases:

- Minikube plugin, providing a locally stored single node Minikube cluster

- Karpenter plugin, with support for Azure Node Auto-Provisioning (NAP)

- KEDA plugin, which you can learn more about here

- Community-maintained plugins for Gatekeeper and KAITO

Thanks to Vrushali Shah and Murali Annamneni from Oracle, and also to Anirban Singha, Adwait Godbole, Sertaç Özercan, Ernest Wong, and Chloe Lim.

Other plugin updates

Alongside new additions, we’ve also spent time refining plugins that many of you already use, focusing on smoother workflows and better integration with the core UI.

View of the Backstage plugin

Changes:

- Flux plugin: Updated for Flux v2.7, with support for newer CRDs and navigation fixes, so it works smoothly on recent clusters

- App Catalog: Now supports Helm repos in addition to Artifact Hub, can run in-cluster via /serviceproxy, and shows both current and latest app versions

- Plugin Catalog: Improved card layout and accessibility, plus dependency and Storybook test updates

- Backstage plugin: Dependency and build updates, more info here

Plugin development

We’ve focused on making it faster and clearer to build, test, and ship Headlamp plugins, backed by improved documentation and lighter tooling.

View of the Plugin Development guide

Changes:

- New and expanded guides for plugin architecture and development, including how to publish and ship plugins

- Added i18n support documentation so plugins can be translated and localized

- Added example plugins: ui-panels, resource-charts, custom-theme, and projects

- Improved type checking for Headlamp APIs, restored Storybook support for component testing, and reduced dependencies for faster installs and fewer updates

- Documented plugin install locations, UI signifiers in Plugin Settings, and labels that differentiate shipped, UI-installed, and dev-mode plugins

Security upgrades

We've also been investing in keeping Headlamp secure – both by tightening how authentication works and by staying on top of upstream vulnerabilities and tooling.

Updates:

- We've been keeping up with security updates, regularly updating dependencies and addressing upstream security issues.

- We tightened the Helm chart's default security context and fixed a regression that broke the plugin manager.

- We've improved OIDC security with PKCE support, helping unblock more secure and standards-compliant OIDC setups when deploying Headlamp in-cluster.

Conclusion

Thank you to everyone who has contributed to Headlamp this year – whether through pull requests, plugins, or simply sharing how you're using the project. Seeing the different ways teams are adopting and extending the project is a big part of what keeps us moving forward. If your organization uses Headlamp, consider adding it to our adopters list.

If you haven't tried Headlamp recently, all these updates are available today. Check out the latest Headlamp release, explore the new views, plugins, and docs, and share your feedback with us on Slack or GitHub – your feedback helps shape where Headlamp goes next.

Announcing the Checkpoint/Restore Working Group

The community around Kubernetes includes a number of Special Interest Groups (SIGs) and Working Groups (WGs) facilitating discussions on important topics between interested contributors. Today we would like to announce the new Kubernetes Checkpoint Restore WG focusing on the integration of Checkpoint/Restore functionality into Kubernetes.

Motivation and use cases

There are several high-level scenarios discussed in the working group:

- Optimizing resource utilization for interactive workloads, such as Jupyter notebooks and AI chatbots

- Accelerating startup of applications with long initialization times, including Java applications and LLM inference services

- Using periodic checkpointing to enable fault-tolerance for long-running workloads, such as distributed model training

- Providing interruption-aware scheduling with transparent checkpoint/restore, allowing lower-priority Pods to be preempted while preserving the runtime state of applications

- Facilitating Pod migration across nodes for load balancing and maintenance, without disrupting workloads.

- Enabling forensic checkpointing to investigate and analyze security incidents such as cyberattacks, data breaches, and unauthorized access.

Across these scenarios, the goal is to help facilitate discussions of ideas between the Kubernetes community and the growing Checkpoint/Restore in Userspace (CRIU) ecosystem. The CRIU community includes several projects that support these use cases, including:

- CRIU - A tool for checkpointing and restoring running applications and containers

- checkpointctl - A tool for in-depth analysis of container checkpoints

- criu-coordinator - A tool for coordinated checkpoint/restore of distributed applications with CRIU

- checkpoint-restore-operator - A Kubernetes operator for managing checkpoints

More information about the checkpoint/restore integration with Kubernetes is also available here.

Related events

Following our presentation about transparent checkpointing at KubeCon EU 2025, we are excited to welcome you to our panel discussion and AI + ML session at KubeCon + CloudNativeCon Europe 2026.

Connect with us

If you are interested in contributing to Kubernetes or CRIU, there are several ways to participate:

- Join our meeting every second Thursday at 17:00 UTC via the Zoom link in our meeting notes; recordings of our prior meetings are available here.

- Chat with us on the Kubernetes Slack: #wg-checkpoint-restore

- Email us at the wg-checkpoint-restore mailing list

Rook v1.19 Storage Enhancements

The Rook v1.19 release is out! v1.19 is another feature-filled release to improve storage for Kubernetes. Thanks again to the community for all the great support in this journey to deploy storage in production.

The statistics continue to show Rook is widely used in the community, with over 13.3K GitHub stars, and Slack members and X followers constantly increasing.

If your organization deploys Rook in production, we would love to hear about it. Please see the Adopters page to add your submission. As an upstream project, we don’t track our users, but we appreciate the transparency of those who are deploying Rook!

We have a lot of new features for the Ceph storage provider that we hope you’ll be excited about with the v1.19 release!

NVMe-oF Gateway

NVMe over Fabrics allows RBD volumes to be exposed and accessed via the NVMe/TCP protocol. This enables both Kubernetes pods within the cluster and external clients outside the cluster to connect to Ceph block storage using standard NVMe-oF initiators, providing high-performance block storage access over the network.

NVMe-oF is supported by Ceph starting in the recent Ceph Tentacle release. The initial integration with Rook is now completed, and ready for testing in experimental mode, which means that it is not production ready and only intended for testing. As a large new feature, this will take some time before we declare it stable. Please test out the feature and let us know your feedback!

See the NVMe-oF Configuration Guide to get started.

Ceph CSI 3.16

The v3.16 release of Ceph CSI has a range of features and improvements for the RBD, CephFS, and NFS drivers. Similar to v1.18, this release is again supported both by the Ceph CSI operator and Rook’s direct mode of configuration. The Ceph CSI operator is still configured automatically by Rook. We will target v1.20 to fully document the Ceph CSI operator configuration.

In this release, new Ceph CSI features include:

- NVMe-oF CSI driver for provisioning and mounting volumes over the NVMe over Fabrics protocol

- Improved fencing for RBD and CephFS volumes during node failure

- Block volume usage statistics

- Configurable block encryption cipher

Concurrent Cluster Reconciles

Prior to this release, when multiple Ceph clusters were configured in the same Kubernetes cluster, Rook reconciled them serially. If one cluster had health issues, it blocked all subsequent clusters from being reconciled.

To improve the reconcile of multiple clusters, Rook now enables clusters to be reconciled concurrently. Concurrency is enabled by increasing the operator setting ROOK_RECONCILE_CONCURRENT_CLUSTERS (in operator.yaml or the helm setting reconcileConcurrentClusters) to a value greater than 1. If resource requests and limits are set on the operator, they may need to be increased to accommodate the concurrent reconciles.

While this is a relatively small change, to be conservative due to the difficulty of testing the concurrency, we have marked this feature experimental. Please let us know if the concurrency works smoothly for you or report any issues!

When clusters are reconciled concurrently, the Rook operator log interleaves entries from all the clusters in progress. To make troubleshooting easier, we have updated many of the log entries to include the namespace and/or cluster name.

Breaking changes

There are a few minor changes to be aware of during upgrades.

CephFS

- The behavior of the activeStandby property in the CephFilesystem CRD has changed. When set to false, the standby MDS daemon deployment will be scaled down and removed, rather than only disabling the standby cache while the daemon remains running.

Helm

- The rook-ceph-cluster chart has changed where the Ceph image is defined, to allow separate settings for the repository and tag. See the example values.yaml for the new repository and tag settings. If you were previously specifying the ceph image in the cephClusterSpec, remove it at the time of upgrade while specifying the new properties.

External Clusters

- In external mode, if you specify a Ceph admin keyring (not the default recommendation), Rook will no longer create CSI Ceph clients automatically. The CSI client keyrings will only be created by the external Python script. This removes the duplication between the Python script and the operator from creating the same users.

Versions

Supported Ceph Versions

Rook v1.19 has removed support for Ceph Reef v18 since it has reached end of life. If you are still running Reef, upgrade at least to Ceph Squid v19 before upgrading to Rook v1.19.

Ceph Squid and Ceph Tentacle are the supported versions with Rook v1.19.

Kubernetes v1.30 — v1.35

Rook now supports Kubernetes v1.30 through the latest release, v1.35. Rook CI runs tests against these versions to ensure there are no issues as Kubernetes is updated. If you still need to run an older K8s version, we haven’t done anything to prevent running Rook; we simply do not have test validation on older versions.

What’s Next?

As we continue the journey to develop reliable storage operators for Kubernetes, we look forward to your ongoing feedback. Only with the community is it possible to continue this fantastic momentum.

There are many different ways to get involved in the Rook project, whether as a user or developer. Please join us in helping the project continue to grow on its way beyond the v1.19 milestone!

Rook v1.19 Storage Enhancements was originally published in Rook Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.

Uniform API server access using clientcmd

If you've ever wanted to develop a command line client for a Kubernetes API, especially if you've considered making your client usable as a kubectl plugin, you might have wondered how to make your client feel familiar to users of kubectl. A quick glance at the output of kubectl options might put a damper on that: "Am I really supposed to implement all those options?"

Fear not, others have done a lot of the work involved for you. In fact, the Kubernetes project provides two libraries to help you handle kubectl-style command line arguments in Go programs: clientcmd and cli-runtime (which uses clientcmd). This article will show how to use the former.

General philosophy

As might be expected since it's part of client-go, clientcmd's ultimate purpose is to provide an instance of restclient.Config that can issue requests to an API server.

It follows kubectl semantics:

- defaults are taken from ~/.kube or equivalent;

- files can be specified using the KUBECONFIG environment variable;

- all of the above settings can be further overridden using command line arguments.

It doesn't set up a --kubeconfig command line argument, which you might want to do to align with kubectl; you'll see how to do this in the "Bind the flags" section.

Available features

clientcmd allows programs to handle

- kubeconfig selection (using KUBECONFIG);

- context selection;

- namespace selection;

- client certificates and private keys;

- user impersonation;

- HTTP Basic authentication support (username/password).

Configuration merging

In various scenarios, clientcmd supports merging configuration settings: KUBECONFIG can specify multiple files whose contents are combined. This can be confusing, because settings are merged in different directions depending on how they are implemented. If a setting is defined in a map, the first definition wins, subsequent definitions are ignored. If a setting is not defined in a map, the last definition wins.
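The map rule can be sketched with plain Go maps. This models only the "first definition wins" behaviour for named entries and is not clientcmd's implementation; `mergeFirstWins` and the sample entries are made up for illustration.

```go
package main

import "fmt"

// mergeFirstWins merges named entries (for example contexts) from several
// files, in the order given. The first definition of a name is kept and any
// later definition of the same name is ignored.
func mergeFirstWins(files ...map[string]string) map[string]string {
	merged := make(map[string]string)
	for _, entries := range files {
		for name, value := range entries {
			if _, exists := merged[name]; !exists {
				merged[name] = value
			}
		}
	}
	return merged
}

func main() {
	first := map[string]string{"dev": "cluster-a"}
	second := map[string]string{"dev": "cluster-b", "prod": "cluster-c"}

	merged := mergeFirstWins(first, second)
	fmt.Println(merged["dev"], merged["prod"]) // prints "cluster-a cluster-c"
}
```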

When settings are retrieved using KUBECONFIG, missing files result in warnings only. If the user explicitly specifies a path (in --kubeconfig style), there must be a corresponding file.

If KUBECONFIG isn't defined, the default configuration file, ~/.kube/config, is used instead, if present.

Overall process

The general usage pattern is succinctly expressed in the clientcmd package documentation:

    loadingRules := clientcmd.NewDefaultClientConfigLoadingRules()
    // if you want to change the loading rules (which files in which order), you can do so here

    configOverrides := &clientcmd.ConfigOverrides{}
    // if you want to change override values or bind them to flags, there are methods to help you

    kubeConfig := clientcmd.NewNonInteractiveDeferredLoadingClientConfig(loadingRules, configOverrides)
    config, err := kubeConfig.ClientConfig()
    if err != nil {
        // Do something
    }
    client, err := metav1.New(config)
    // ...

In the context of this article, there are six steps:

- Configure the loading rules.

- Configure the overrides.

- Build a set of flags.

- Bind the flags.

- Build the merged configuration.

- Obtain an API client.

Configure the loading rules

clientcmd.NewDefaultClientConfigLoadingRules() builds loading rules which will use either the contents of the KUBECONFIG environment variable, or the default configuration file name (~/.kube/config). In addition, if the default configuration file is used, it is able to migrate settings from the (very) old default configuration file (~/.kube/.kubeconfig).

You can build your own ClientConfigLoadingRules, but in most cases the defaults are fine.

Configure the overrides

clientcmd.ConfigOverrides is a struct storing overrides which will be applied over the settings loaded from the configuration derived using the loading rules. In the context of this article, its primary purpose is to store values obtained from command line arguments. These are handled using the pflag library, which is a drop-in replacement for Go's flag package, adding support for double-hyphen arguments with long names.

In most cases there's nothing to set in the overrides; I will only bind them to flags.

Build a set of flags

In this context, a flag is a representation of a command line argument, specifying its long name (such as --namespace), its short name if any (such as -n), its default value, and a description shown in the usage information. Flags are stored in instances of the FlagInfo struct.

Three sets of flags are available, representing the following command line arguments:

- authentication arguments (certificates, tokens, impersonations, username/password);

- cluster arguments (API server, certificate authority, TLS configuration, proxy, compression);

- context arguments (cluster name, kubeconfig user name, namespace).

The recommended selection includes all three with a named context selection argument and a timeout argument.

These are all available using the Recommended…Flags functions. The functions take a prefix, which is prepended to all the argument long names.

So calling clientcmd.RecommendedConfigOverrideFlags("") results in command line arguments such as --context, --namespace, and so on. The --timeout argument is given a default value of 0, and the --namespace argument has a corresponding short variant, -n. Adding a prefix, such as "from-", results in command line arguments such as --from-context, --from-namespace, etc. This might not seem particularly useful on commands involving a single API server, but they come in handy when multiple API servers are involved, such as in multi-cluster scenarios.

There's a potential gotcha here: prefixes don't modify the short name, so --namespace needs some care if multiple prefixes are used: only one of the prefixes can be associated with the -n short name. You'll have to clear the short names associated with the other prefixes' --namespace, or perhaps all prefixes if there's no sensible -n association.

Short names can be cleared as follows:

    kflags := clientcmd.RecommendedConfigOverrideFlags(prefix)
    kflags.ContextOverrideFlags.Namespace.ShortName = ""

In a similar fashion, flags can be disabled entirely by clearing their long name:

    kflags.ContextOverrideFlags.Namespace.LongName = ""
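The short-name gotcha is easy to reproduce without any Kubernetes dependency. In the sketch below, `flagInfo` is a hypothetical stand-in that mirrors only the long and short name fields discussed above, and `namespaceFlag` and `clearDuplicateShortNames` are illustrative helpers rather than clientcmd functions.

```go
package main

import "fmt"

// flagInfo is a simplified, hypothetical stand-in for a flag description.
type flagInfo struct {
	LongName  string
	ShortName string
}

// namespaceFlag builds the namespace flag for a prefix. As described above,
// the prefix changes the long name but not the short name.
func namespaceFlag(prefix string) flagInfo {
	return flagInfo{LongName: prefix + "namespace", ShortName: "n"}
}

// clearDuplicateShortNames keeps each short name on the first flag that uses
// it and clears it on every later flag, so no two flags share a short name.
func clearDuplicateShortNames(flags []flagInfo) []flagInfo {
	seen := make(map[string]bool)
	out := make([]flagInfo, len(flags))
	for i, f := range flags {
		if f.ShortName != "" {
			if seen[f.ShortName] {
				f.ShortName = ""
			} else {
				seen[f.ShortName] = true
			}
		}
		out[i] = f
	}
	return out
}

func main() {
	flags := clearDuplicateShortNames([]flagInfo{
		namespaceFlag("from-"),
		namespaceFlag("to-"),
	})
	for _, f := range flags {
		fmt.Printf("--%s short=%q\n", f.LongName, f.ShortName)
	}
}
```

Which prefix keeps -n is a design decision for the command; here the first one wins simply because of its position in the slice.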

Bind the flags

Once a set of flags has been defined, it can be used to bind command line arguments to overrides using clientcmd.BindOverrideFlags. This requires a pflag FlagSet rather than one from Go's flag package.

If you also want to bind --kubeconfig, you should do so now, by binding ExplicitPath in the loading rules:

    flags.StringVarP(&loadingRules.ExplicitPath, "kubeconfig", "", "", "absolute path(s) to the kubeconfig file(s)")

Build the merged configuration

Two functions are available to build a merged configuration:

- clientcmd.NewInteractiveDeferredLoadingClientConfig

- clientcmd.NewNonInteractiveDeferredLoadingClientConfig

As the names suggest, the difference between the two is that the first can ask for authentication information interactively, using a provided reader, whereas the second only operates on the information given to it by the caller.

The "deferred" mention in these function names refers to the fact that the final configuration will be determined as late as possible. This means that these functions can be called before the command line arguments are parsed, and the resulting configuration will use whatever values have been parsed by the time it's actually constructed.
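The idea of deferred resolution can be sketched without client-go. The example below is an analogy, not the library's implementation: it uses Go's standard flag package, and the overrides and deferredConfig types, the resolve function, and the "default" fallback value are all assumptions made for illustration. The configuration object is created before the arguments are parsed, yet it still sees the parsed values because it reads them only when asked.

```go
package main

import (
	"flag"
	"fmt"
)

// overrides is a hypothetical stand-in for the values that flags write into.
type overrides struct {
	namespace string
}

// deferredConfig keeps a pointer to the overrides and reads them only on demand.
type deferredConfig struct {
	overrides *overrides
	fallback  string
}

// Namespace resolves the namespace at call time and reports whether it
// came from a command line override.
func (d *deferredConfig) Namespace() (string, bool) {
	if d.overrides.namespace != "" {
		return d.overrides.namespace, true
	}
	return d.fallback, false
}

// resolve builds the configuration BEFORE parsing args, then queries it afterwards.
func resolve(args []string) (string, bool, error) {
	o := &overrides{}
	fs := flag.NewFlagSet("deferreddemo", flag.ContinueOnError)
	fs.StringVar(&o.namespace, "namespace", "", "namespace override")

	// Constructed before Parse: nothing has been read from the flags yet.
	cfg := &deferredConfig{overrides: o, fallback: "default"}

	if err := fs.Parse(args); err != nil {
		return "", false, err
	}

	// Resolved after Parse: the parsed value is now visible.
	ns, overridden := cfg.Namespace()
	return ns, overridden, nil
}

func main() {
	for _, args := range [][]string{{"--namespace", "demo"}, {}} {
		ns, overridden, err := resolve(args)
		if err != nil {
			panic(err)
		}
		fmt.Printf("args=%v namespace=%s overridden=%t\n", args, ns, overridden)
	}
}
```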

Obtain an API client

The merged configuration is returned as a

ClientConfig instance.

Calling its ClientConfig() method returns a REST client configuration, from which an API client can be built (for example with kubernetes.NewForConfig).

If no configuration is given

(KUBECONFIG is empty or points to non-existent files,

~/.kube/config doesn't exist,

and no configuration is given using command line arguments),

the default setup will return an obscure error referring to KUBERNETES_MASTER.

This is legacy behaviour;

several attempts have been made to get rid of it,

but it is preserved for the --local and --dry-run command line arguments in kubectl.

You should check for "empty configuration" errors by calling clientcmd.IsEmptyConfig()

and provide a more explicit error message.

The Namespace() method is also useful:

it returns the namespace that should be used.

It also indicates whether the namespace was overridden by the user

(using --namespace).

Full example

Here's a complete example.

package main

import (
	"context"
	"fmt"
	"os"

	"github.com/spf13/pflag"
	v1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
)

func main() {
	// Loading rules, no configuration
	loadingRules := clientcmd.NewDefaultClientConfigLoadingRules()

	// Overrides and flag (command line argument) setup
	configOverrides := &clientcmd.ConfigOverrides{}
	flags := pflag.NewFlagSet("clientcmddemo", pflag.ExitOnError)
	clientcmd.BindOverrideFlags(configOverrides, flags,
		clientcmd.RecommendedConfigOverrideFlags(""))
	flags.StringVarP(&loadingRules.ExplicitPath, "kubeconfig", "", "", "absolute path(s) to the kubeconfig file(s)")
	// Parse expects the argument list without the command name;
	// with pflag.ExitOnError, parse errors terminate the program.
	flags.Parse(os.Args[1:])

	// Client construction
	kubeConfig := clientcmd.NewNonInteractiveDeferredLoadingClientConfig(loadingRules, configOverrides)
	config, err := kubeConfig.ClientConfig()
	if err != nil {
		if clientcmd.IsEmptyConfig(err) {
			panic("Please provide a configuration pointing to the Kubernetes API server")
		}
		panic(err)
	}
	client, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// How to find out what namespace to use
	namespace, overridden, err := kubeConfig.Namespace()
	if err != nil {
		panic(err)
	}
	fmt.Printf("Chosen namespace: %s; overridden: %t\n", namespace, overridden)

	// Let's use the client
	nodeList, err := client.CoreV1().Nodes().List(context.TODO(), v1.ListOptions{})
	if err != nil {
		panic(err)
	}
	for _, node := range nodeList.Items {
		fmt.Println(node.Name)
	}
}

Happy coding, and thank you for your interest in implementing tools with familiar usage patterns!

CoreDNS-1.14. Release

OpenCost: Reflecting on 2025 and looking ahead to 2026

The OpenCost project has had a fruitful year in terms of releases, our wonderful mentees and contributors, and fun gatherings at KubeCons.

If you’re new to OpenCost, it is an open-source cost and resources management tool that is an Incubating project in the Cloud Native Computing Foundation (CNCF). It was created by IBM Kubecost and continues to be maintained and supported by IBM Kubecost, Randoli, and a wider community of partners, including the major cloud providers.

OpenCost releases

The OpenCost project had 11 releases in 2025. These include new features and capabilities that improve the experience for both users and contributors. Here are a few highlights:

- Promless: OpenCost can be configured to run without Prometheus by setting environment variables, which can be set using Helm. Users can then run OpenCost with the Collector Datasource (beta), which does not require Prometheus.

- OpenCost MCP server: AI agents can now query cost data in real-time using natural language. They can analyze spending patterns across namespaces, pods, and nodes, generate cost reports and recommendations automatically, and provide other insights from OpenCost data.

- Export system: The project now has a generic export framework to make it possible to export cost data in a type-safe way.

- Diagnostics system: OpenCost has a complete diagnostic framework with an interface, runners, and export capabilities.

- Heartbeat system: You can do system health tracking with timestamped heartbeat events for export and more.

- Cloud providers: There are continued improvements for users to track cloud and multi-cloud metrics. We appreciate contributions from Oracle (including providing hosting for our demo) and DigitalOcean (for recent cloud services provider work).

Thanks to our maintainers and contributors who make these releases possible and successful, including our mentees and community contributors as well.

Mentorship and community management

Our project has been committed to mentorship through the Linux Foundation for a while, and we continue to have fantastic mentees who bring innovation and support to the community. Manas Sivakumar was a summer 2025 mentee and worked on writing integration tests for OpenCost’s enterprise readiness. Manas’ work is now part of the OpenCost integration testing pipeline for all future contributions.

- Adesh Pal, a mentee, made a big splash with the OpenCost MCP server. The MCP server now comes by default and needs no configuration. It outputs readable markdown on metrics as well as step-by-step suggestions to make improvements.

- Sparsh Raj has been in our community for a while and has become our most recent mentee. Sparsh has written a blog post on KubeModel, the foundation of OpenCost’s Data Model 2.0. Sparsh’s work will meet the needs for a robust and scalable data model that can handle Kubernetes complexity and constantly shifting resources.

- On the community side, Tamao Nakahara was brought into the IBM Kubecost team for a few months of open source and developer experience expertise. Tamao helped organize the regular OpenCost community meetings, leading actions around events, the website, and docs. On the website, Tamao improved the UX for new and returning users, and brought in Ginger Walker to help clean up the docs.

Events and talks

As a CNCF incubating project, OpenCost participated in the key KubeCon events. Most recently, the team was at KubeCon + CloudNativeCon Atlanta 2025, where maintainer Matt Bolt from IBM Kubecost kicked off the week with a Project Lightning talk. During a co-located event that day, Rajith Attapattu, CTO of contributing company Randoli, also gave a talk on OpenCost. Dee Zeis, Rajith, and Tamao also answered questions at the OpenCost kiosk in the Project Pavilion.

Earlier in the year, the team was also at KubeCon + CloudNativeCon in both London and Japan, giving talks and running the OpenCost kiosks.

2026!

What’s in store for OpenCost in the coming year? Aside from meeting all of you at future KubeCon + CloudNativeCon events, we’re also excited about a few roadmap highlights. As mentioned, our LFX mentee Sparsh is working on KubeModel, which will be important for improvements to OpenCost’s data model. As AI continues to increase in adoption, the team is also working on building out costing features to track AI usage. Finally, supply chain security improvements are a priority.

We’re looking forward to seeing more of you in the community in the next year!

CoreDNS-1.14.0 Release

Kubernetes v1.35: Restricting executables invoked by kubeconfigs via exec plugin allowList added to kuberc

Did you know that kubectl can run arbitrary executables, including shell

scripts, with the full privileges of the invoking user, and without your

knowledge? Whenever you download or auto-generate a kubeconfig, the

users[n].exec.command field can specify an executable to fetch credentials on

your behalf. Don't get me wrong, this is an incredible feature that allows you

to authenticate to the cluster with external identity providers. Nevertheless,

you probably see the problem: Do you know exactly what executables your kubeconfig

is running on your system? Do you trust the pipeline that generated your kubeconfig?

If there has been a supply-chain attack on the code that generates the kubeconfig,

or if the generating pipeline has been compromised, an attacker might well be

doing unsavory things to your machine by tricking your kubeconfig into running

arbitrary code.

To give the user more control over what gets run on their system, SIG-Auth and SIG-CLI added the credential plugin policy and allowlist as a beta feature to

Kubernetes 1.35. This is available to all clients using the client-go library,

by filling out the ExecProvider.PluginPolicy struct on a REST config. To

broaden the impact of this change, Kubernetes v1.35 also lets you manage this without

writing a line of application code. You can configure kubectl to enforce

the policy and allowlist by adding two fields to the kuberc configuration

file: credentialPluginPolicy and credentialPluginAllowlist. Adding one or

both of these fields restricts which credential plugins kubectl is allowed to execute.

How it works

A full description of this functionality is available in our official documentation for kuberc, but this blog post will give a brief overview of the new security knobs. The new features are in beta and available without using any feature gates.

The following example is the simplest one: simply don't specify the new fields.

apiVersion: kubectl.config.k8s.io/v1beta1

kind: Preference

This will keep kubectl acting as it always has, and all plugins will be

allowed.

The next example is functionally identical, but it is more explicit and therefore preferred if it's actually what you want:

apiVersion: kubectl.config.k8s.io/v1beta1

kind: Preference

credentialPluginPolicy: AllowAll

If you don't know whether or not you're using exec credential plugins, try

setting your policy to DenyAll:

apiVersion: kubectl.config.k8s.io/v1beta1

kind: Preference

credentialPluginPolicy: DenyAll

If you are using credential plugins, you'll quickly find out what kubectl is

trying to execute. You'll get an error like the following.

Unable to connect to the server: getting credentials: plugin "cloudco-login" not allowed: policy set to "DenyAll"

If there is insufficient information for you to debug the issue, increase the logging verbosity when you run your next command. For example:

# increase or decrease verbosity if the issue is still unclear

kubectl get pods -v=5
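The behaviour of the two policies shown so far can be summarised with a small model. This is a sketch only, not kubectl's or client-go's implementation: the policy type and the checkPlugin function are hypothetical names, the error text is copied from the message shown above, and any policy value other than these two is treated as out of scope.

```go
package main

import "fmt"

// policy is a hypothetical type modelling the credentialPluginPolicy field.
type policy string

const (
	allowAll policy = "AllowAll"
	denyAll  policy = "DenyAll"
)

// checkPlugin models the decision described in the text:
// an unset policy behaves like AllowAll, and DenyAll rejects every plugin.
func checkPlugin(p policy, plugin string) error {
	switch p {
	case "", allowAll:
		return nil
	case denyAll:
		return fmt.Errorf("plugin %q not allowed: policy set to %q", plugin, p)
	default:
		return fmt.Errorf("policy %q is not modelled in this sketch", p)
	}
}

func main() {
	for _, p := range []policy{"", allowAll, denyAll} {
		fmt.Printf("policy=%q -> %v\n", p, checkPlugin(p, "cloudco-login"))
	}
}
```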

Selectively allowing plugins

What if you need the cloudco-login plugin to do your daily work? That is why

there's a third option for your policy, Allowlist. To allow a specific plugin,

set the policy and add the credentialPluginAllowlist:

apiVersion: kubectl.config.k8s.io/v1beta1

kind: Preference

credentialPluginPolicy: Allowlist

credentialPluginAllowlist:

- name: /usr/local/bin/cloudco-login

- name: get-identity

You'll notice that there are two entries in the allowlist. One of them is

specified by full path, and the other, get-identity, is just a basename. When

you specify just the basename, the full path will be looked up using