Feed aggregator

Part Four of The Kryptos Sculpture

Two people found the solution. They used the power of research, not cryptanalysis, finding clues amongst the Sanborn papers at the Smithsonian’s Archives of American Art.

This comes at an awkward time, as Sanborn is auctioning off the solution. There were legal threats (I don’t understand their basis), and the solvers are not publishing their solution.

Serious F5 Breach

This is bad:

F5, a Seattle-based maker of networking software, disclosed the breach on Wednesday. F5 said a “sophisticated” threat group working for an undisclosed nation-state government had surreptitiously and persistently dwelled in its network over a “long-term.” Security researchers who have responded to similar intrusions in the past took the language to mean the hackers were inside the F5 network for years.

During that time, F5 said, the hackers took control of the network segment the company uses to create and distribute updates for BIG IP, a line of server appliances that F5 ...

Canada Fines Cybercrime Friendly Cryptomus $176M

Financial regulators in Canada this week levied $176 million in fines against Cryptomus, a digital payments platform that supports dozens of Russian cryptocurrency exchanges and websites hawking cybercrime services. The penalties for violating Canada’s anti money-laundering laws come ten months after KrebsOnSecurity noted that Cryptomus’s Vancouver street address was home to dozens of foreign currency dealers, money transfer businesses, and cryptocurrency exchanges — none of which were physically located there.

On October 16, the Financial Transactions and Reports Analysis Center of Canada (FINTRAC) imposed a $176,960,190 penalty on Xeltox Enterprises Ltd., more commonly known as the cryptocurrency payments platform Cryptomus.

FINTRAC found that Cryptomus failed to submit suspicious transaction reports in cases where there were reasonable grounds to suspect that they were related to the laundering of proceeds connected to trafficking in child sexual abuse material, fraud, ransomware payments and sanctions evasion.

“Given that numerous violations in this case were connected to trafficking in child sexual abuse material, fraud, ransomware payments and sanctions evasion, FINTRAC was compelled to take this unprecedented enforcement action,” said Sarah Paquet, director and CEO at the regulatory agency.

In December 2024, KrebsOnSecurity covered research by blockchain analyst and investigator Richard Sanders, who’d spent several months signing up for various cybercrime services, and then tracking where their customer funds go from there. The 122 services targeted in Sanders’s research all used Cryptomus, and included some of the more prominent businesses advertising on the cybercrime forums, such as:

-abuse-friendly or “bulletproof” hosting providers like anonvm[.]wtf, and PQHosting;

-sites selling aged email, financial, or social media accounts, such as verif[.]work and kopeechka[.]store;

-anonymity or “proxy” providers like crazyrdp[.]com and rdp[.]monster;

-anonymous SMS services, including anonsim[.]net and smsboss[.]pro.

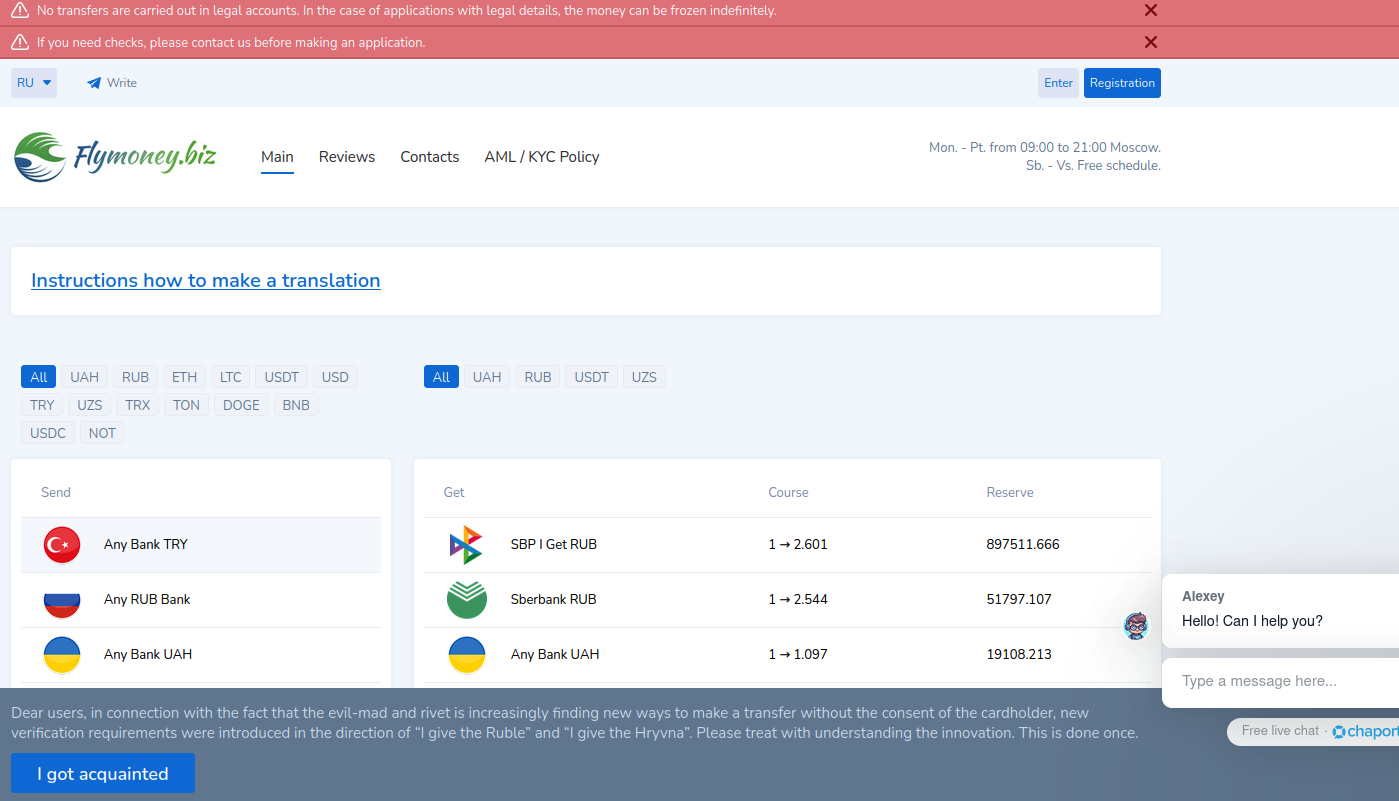

Flymoney, one of dozens of cryptocurrency exchanges apparently nested at Cryptomus. The image from this website has been machine translated from Russian.

Sanders found at least 56 cryptocurrency exchanges were using Cryptomus to process transactions, including financial entities with names like casher[.]su, grumbot[.]com, flymoney[.]biz, obama[.]ru and swop[.]is.

“These platforms were built for Russian speakers, and they each advertised the ability to anonymously swap one form of cryptocurrency for another,” the December 2024 story noted. “They also allowed the exchange of cryptocurrency for cash in accounts at some of Russia’s largest banks — nearly all of which are currently sanctioned by the United States and other western nations.”

Reached for comment on FINTRAC’s action, Sanders told KrebsOnSecurity he was surprised it took them so long.

“I have no idea why they don’t just sanction them or prosecute them,” Sanders said. “I’m not let down with the fine amount but it’s also just going to be the cost of doing business to them.”

The $176 million fine is a significant sum for FINTRAC, which imposed 23 such penalties last year totaling less than $26 million. But Sanders says FINTRAC still has much work to do in pursuing other shadowy money service businesses (MSBs) that are registered in Canada but are likely money laundering fronts for entities based in Russia and Iran.

In an investigation published in July 2024, CTV National News and the Investigative Journalism Foundation (IJF) documented dozens of cases across Canada where multiple MSBs are incorporated at the same address, often without the knowledge or consent of the location’s actual occupant.

Their inquiry found that the street address for Cryptomus parent Xeltox Enterprises was listed as the home of at least 76 foreign currency dealers, eight MSBs, and six cryptocurrency exchanges. At that address is a three-story building that used to be a bank and now houses a massage therapy clinic and a co-working space. But the news outlets found none of the MSBs or currency dealers were paying for services at that co-working space.

The reporters also found another collection of 97 MSBs clustered at an address for a commercial office suite in Ontario, even though there was no evidence any of these companies had ever arranged for any business services at that address.

Failures in Face Recognition

Interesting article on people with nonstandard faces and how facial recognition systems fail for them.

Some of those living with facial differences tell WIRED they have undergone multiple surgeries and experienced stigma for their entire lives, which is now being echoed by the technology they are forced to interact with. They say they haven’t been able to access public services due to facial verification services failing, while others have struggled to access financial services. Social media filters and face-unlocking systems on phones often won’t work, they say...

A Cybersecurity Merit Badge

Scouting America (formerly known as Boy Scouts) has a new badge in cybersecurity. There’s an image in the article; it looks good.

I want one.

Follow Up - Preventing Upgrade Failures from etcd v3.5 to v3.6

We have identified and fixed an additional scenario that may cause upgrade failures when moving from etcd v3.5 to v3.6. This post contains details, the fix, and additional workarounds. Please refer to issue 20793 to get detailed technical information.

Issue

In a previous post — How to Prevent a Common Failure when Upgrading etcd v3.5 to v3.6 — we described an upgrade issue affecting etcd versions in v3.5.1-v3.5.19. That issue was addressed in v3.5.20. However, a follow-up investigation revealed that the original fix did not cover all scenarios.

Your API Catalog Just Got an Upgrade

7 Common Kubernetes Pitfalls (and How I Learned to Avoid Them)

It’s no secret that Kubernetes can be both powerful and frustrating at times. When I first started dabbling with container orchestration, I made more than my fair share of mistakes, enough to compile a whole list of pitfalls. In this post, I want to walk through seven big gotchas I’ve encountered (or seen others run into) and share some tips on how to avoid them. Whether you’re just kicking the tires on Kubernetes or already managing production clusters, I hope these insights help you steer clear of a little extra stress.

1. Skipping resource requests and limits

The pitfall: Not specifying CPU and memory requirements in Pod specifications. This typically happens because Kubernetes does not require these fields, and workloads can often start and run without them—making the omission easy to overlook in early configurations or during rapid deployment cycles.

Context: In Kubernetes, resource requests and limits are critical for efficient cluster management. Resource requests ensure that the scheduler reserves the appropriate amount of CPU and memory for each pod, guaranteeing that it has the necessary resources to operate. Resource limits cap the amount of CPU and memory a pod can use, preventing any single pod from consuming excessive resources and potentially starving other pods. When resource requests and limits are not set:

- Resource Starvation: Pods may get insufficient resources, leading to degraded performance or failures. This is because Kubernetes schedules pods based on these requests. Without them, the scheduler might place too many pods on a single node, leading to resource contention and performance bottlenecks.

- Resource Hoarding: Conversely, without limits, a pod might consume more than its fair share of resources, impacting the performance and stability of other pods on the same node. This can lead to issues such as other pods getting evicted or killed by the Out-Of-Memory (OOM) killer due to lack of available memory.

How to avoid it:

- Start with modest requests (for example 100m CPU, 128Mi memory) and see how your app behaves.

- Monitor real-world usage and refine your values; the HorizontalPodAutoscaler can help automate scaling based on metrics.

- Keep an eye on kubectl top pods or your logging/monitoring tool to confirm you’re not over- or under-provisioning.

My reality check: Early on, I never thought about memory limits. Things seemed fine on my local cluster. Then, on a larger environment, Pods got OOMKilled left and right. Lesson learned. For detailed instructions on configuring resource requests and limits for your containers, please refer to Assign Memory Resources to Containers and Pods (part of the official Kubernetes documentation).
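To make the first tip concrete, here is a minimal Python sketch that spots containers without requests or limits and fills in modest starting values. It works on a plain dict shaped like a Pod spec. The helper names, the image name, and the 256Mi memory limit are illustrative assumptions; only the 100m CPU and 128Mi memory figures come from the tip above.

```python
import json

# Starting values from the tip above; the 256Mi limit is an illustrative assumption.
DEFAULT_REQUESTS = {"cpu": "100m", "memory": "128Mi"}
DEFAULT_LIMITS = {"memory": "256Mi"}


def with_resources(container, requests=None, limits=None):
    """Return a copy of a container spec with requests and limits filled in."""
    updated = dict(container)
    updated["resources"] = {
        "requests": dict(requests or DEFAULT_REQUESTS),
        "limits": dict(limits or DEFAULT_LIMITS),
    }
    return updated


def containers_missing_resources(pod_spec):
    """List the names of containers that lack requests or limits."""
    missing = []
    for container in pod_spec.get("containers", []):
        resources = container.get("resources") or {}
        if not resources.get("requests") or not resources.get("limits"):
            missing.append(container["name"])
    return missing


# Hypothetical Pod spec with one container and no resources set.
pod_spec = {"containers": [{"name": "web", "image": "example.com/web:1.4.2"}]}
print(containers_missing_resources(pod_spec))

# Fill in the defaults and print the result as JSON, which kubectl also accepts.
pod_spec["containers"] = [with_resources(c) for c in pod_spec["containers"]]
print(json.dumps(pod_spec, indent=2))
```

Treat the defaults as a starting point only; the right numbers come from watching real usage.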

2. Underestimating liveness and readiness probes

The pitfall: Deploying containers without explicitly defining how Kubernetes should check their health or readiness. This tends to happen because Kubernetes will consider a container “running” as long as the process inside hasn’t exited. Without additional signals, Kubernetes assumes the workload is functioning—even if the application inside is unresponsive, initializing, or stuck.

Context:

Liveness, readiness, and startup probes are mechanisms Kubernetes uses to monitor container health and availability.

- Liveness probes determine if the application is still alive. If a liveness check fails, the container is restarted.

- Readiness probes control whether a container is ready to serve traffic. Until the readiness probe passes, the container is removed from Service endpoints.

- Startup probes help distinguish between long startup times and actual failures.

How to avoid it:

- Add a simple HTTP livenessProbe to check a health endpoint (for example /healthz) so Kubernetes can restart a hung container.

- Use a readinessProbe to ensure traffic doesn’t reach your app until it’s warmed up.

- Keep probes simple. Overly complex checks can create false alarms and unnecessary restarts.

My reality check: I once forgot a readiness probe for a web service that took a while to load. Users hit it prematurely, got weird timeouts, and I spent hours scratching my head. A 3-line readiness probe would have saved the day.

For comprehensive instructions on configuring liveness, readiness, and startup probes for containers, please refer to Configure Liveness, Readiness and Startup Probes in the official Kubernetes documentation.
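As a rough illustration of how small these probes can be, the Python sketch below builds a container spec with an HTTP liveness probe and a readiness probe. The /healthz path comes from the tips above. The /ready path, port 8080, the timing values, and the helper names are assumptions for the example.

```python
import json


def http_probe(path, port, period_seconds=10, failure_threshold=3):
    """Build an HTTP GET probe in the shape Kubernetes expects."""
    return {
        "httpGet": {"path": path, "port": port},
        "periodSeconds": period_seconds,
        "failureThreshold": failure_threshold,
    }


def add_probes(container, port=8080):
    """Return a copy of a container spec with liveness and readiness probes."""
    updated = dict(container)
    # /healthz comes from the tips above; /ready and port 8080 are assumptions.
    updated["livenessProbe"] = http_probe("/healthz", port)
    updated["readinessProbe"] = http_probe("/ready", port, period_seconds=5)
    return updated


# Hypothetical container; the image name is a placeholder.
container = add_probes({"name": "web", "image": "example.com/web:1.4.2"})
print(json.dumps(container, indent=2))
```

Keeping both probes as plain HTTP checks follows the "keep probes simple" advice; anything that depends on downstream services is usually better left out of a liveness check.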

3. “We’ll just look at container logs” (famous last words)

The pitfall: Relying solely on container logs retrieved via kubectl logs. This often happens because the command is quick and convenient, and in many setups, logs appear accessible during development or early troubleshooting. However, kubectl logs only retrieves logs from currently running or recently terminated containers, and those logs are stored on the node’s local disk. As soon as the container is deleted, evicted, or the node is restarted, the log files may be rotated out or permanently lost.

How to avoid it:

- Centralize logs using CNCF tools like Fluentd or Fluent Bit to aggregate output from all Pods.

- Adopt OpenTelemetry for a unified view of logs, metrics, and (if needed) traces. This lets you spot correlations between infrastructure events and app-level behavior.

- Pair logs with Prometheus metrics to track cluster-level data alongside application logs. If you need distributed tracing, consider CNCF projects like Jaeger.

My reality check: The first time I lost Pod logs to a quick restart, I realized how flimsy “kubectl logs” can be on its own. Since then, I’ve set up a proper pipeline for every cluster to avoid missing vital clues.

4. Treating dev and prod exactly the same

The pitfall: Deploying the same Kubernetes manifests with identical settings across development, staging, and production environments. This often occurs when teams aim for consistency and reuse, but overlook that environment-specific factors—such as traffic patterns, resource availability, scaling needs, or access control—can differ significantly. Without customization, configurations optimized for one environment may cause instability, poor performance, or security gaps in another.

How to avoid it:

- Use environment overlays or kustomize to maintain a shared base while customizing resource requests, replicas, or config for each environment.

- Extract environment-specific configuration into ConfigMaps and / or Secrets. You can use a specialized tool such as Sealed Secrets to manage confidential data.

- Plan for scale in production. Your dev cluster can probably get away with minimal CPU/memory, but prod might need significantly more.

My reality check: One time, I scaled up replicaCount from 2 to 10 in a tiny dev environment just to “test.” I promptly ran out of resources and spent half a day cleaning up the aftermath. Oops.
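The shared-base-plus-overlay idea can be sketched in a few lines of Python. This is a simplified recursive dictionary merge, not kustomize's actual patching logic (for one thing, it replaces lists wholesale). The setting names and values are hypothetical.

```python
import copy


def apply_overlay(base, overlay):
    """Recursively merge overlay into a copy of base; overlay values win."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overlay(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical shared settings and per-environment overrides.
BASE = {
    "replicas": 1,
    "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
}
OVERLAYS = {
    "dev": {},
    "prod": {"replicas": 4, "resources": {"requests": {"cpu": "500m"}}},
}

for env, overlay in OVERLAYS.items():
    print(env, apply_overlay(BASE, overlay))
```

The point of the pattern is that each environment states only what differs from the base, so a change to the base reaches every environment without copy-pasting manifests.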

5. Leaving old stuff floating around

The pitfall: Leaving unused or outdated resources—such as Deployments, Services, ConfigMaps, or PersistentVolumeClaims—running in the cluster. This often happens because Kubernetes does not automatically remove resources unless explicitly instructed, and there is no built-in mechanism to track ownership or expiration. Over time, these forgotten objects can accumulate, consuming cluster resources, increasing cloud costs, and creating operational confusion, especially when stale Services or LoadBalancers continue to route traffic.

How to avoid it:

- Label everything with a purpose or owner label. That way, you can easily query resources you no longer need.

- Regularly audit your cluster: run kubectl get all -n <namespace> to see what’s actually running, and confirm it’s all legit.

- Adopt Kubernetes’ Garbage Collection: K8s docs show how to remove dependent objects automatically.

- Leverage policy automation: Tools like Kyverno can automatically delete or block stale resources after a certain period, or enforce lifecycle policies so you don’t have to remember every single cleanup step.

My reality check: After a hackathon, I forgot to tear down a “test-svc” pinned to an external load balancer. Three weeks later, I realized I’d been paying for that load balancer the entire time. Facepalm.
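A label audit like the one suggested above is easy to script. The sketch below assumes you have saved the JSON output of a kubectl get command (which wraps objects in an items list) and that your team uses a label key named owner; both the label key and the sample data are assumptions for illustration.

```python
import json


def resources_missing_label(items, label="owner"):
    """Return 'Kind/name' for every object that lacks the given label."""
    missing = []
    for item in items:
        metadata = item.get("metadata", {})
        labels = metadata.get("labels") or {}
        if label not in labels:
            kind = item.get("kind", "Unknown")
            name = metadata.get("name", "unnamed")
            missing.append(f"{kind}/{name}")
    return missing


# Hand-written sample in the shape of `kubectl get ... -o json` output.
sample = json.loads("""
{"items": [
  {"kind": "Service", "metadata": {"name": "test-svc"}},
  {"kind": "Deployment", "metadata": {"name": "web", "labels": {"owner": "team-a"}}}
]}
""")
print(resources_missing_label(sample["items"]))
```

Anything this reports is a candidate for a closer look, not automatic deletion; an unlabeled object may still be in use.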

6. Diving too deep into networking too soon

The pitfall: Introducing advanced networking solutions—such as service meshes, custom CNI plugins, or multi-cluster communication—before fully understanding Kubernetes' native networking primitives. This commonly occurs when teams implement features like traffic routing, observability, or mTLS using external tools without first mastering how core Kubernetes networking works: including Pod-to-Pod communication, ClusterIP Services, DNS resolution, and basic ingress traffic handling. As a result, network-related issues become harder to troubleshoot, especially when overlays introduce additional abstractions and failure points.

How to avoid it:

- Start small: a Deployment, a Service, and a basic ingress controller such as one based on NGINX (e.g., Ingress-NGINX).

- Make sure you understand how traffic flows within the cluster, how service discovery works, and how DNS is configured.

- Only move to a full-blown mesh or advanced CNI features when you actually need them. Complex networking adds overhead.

My reality check: I tried Istio on a small internal app once, then spent more time debugging Istio itself than the actual app. Eventually, I stepped back, removed Istio, and everything worked fine.

7. Going too light on security and RBAC

The pitfall: Deploying workloads with insecure configurations, such as running containers as the root user, using the latest image tag, disabling security contexts, or assigning overly broad RBAC roles like cluster-admin. These practices persist because Kubernetes does not enforce strict security defaults out of the box, and the platform is designed to be flexible rather than opinionated. Without explicit security policies in place, clusters can remain exposed to risks like container escape, unauthorized privilege escalation, or accidental production changes due to unpinned images.

How to avoid it:

- Use RBAC to define roles and permissions within Kubernetes. While RBAC is the default and most widely supported authorization mechanism, Kubernetes also allows the use of alternative authorizers. For more advanced or external policy needs, consider solutions like OPA Gatekeeper (based on Rego), Kyverno, or custom webhooks using policy languages such as CEL or Cedar.

- Pin images to specific versions (no more :latest!). This helps you know what’s actually deployed.

- Look into Pod Security Admission (or other solutions like Kyverno) to enforce non-root containers, read-only filesystems, etc.

My reality check: I never had a huge security breach, but I’ve heard plenty of cautionary tales. If you don’t tighten things up, it’s only a matter of time before something goes wrong.
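For a quick pre-deploy sanity check, a few of these rules can be expressed as a small Python lint over a container spec dict. This is only a sketch and no substitute for Pod Security Admission or a policy engine: it inspects the container-level securityContext only (these settings can also be applied at the Pod level), and the function names and sample container are hypothetical.

```python
def uses_floating_tag(image):
    """True if the image has no tag or uses :latest; digests count as pinned."""
    if "@" in image:
        return False  # pinned by digest, e.g. name@sha256:...
    # Look only at the last path segment so a registry port is not read as a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return True  # no tag means :latest is implied
    return last_segment.rsplit(":", 1)[-1] == "latest"


def security_findings(container):
    """Return a list of human-readable findings for one container spec."""
    findings = []
    if uses_floating_tag(container.get("image", "")):
        findings.append("image is not pinned to a specific version")
    context = container.get("securityContext") or {}
    if context.get("runAsNonRoot") is not True:
        findings.append("runAsNonRoot is not set to true")
    if context.get("readOnlyRootFilesystem") is not True:
        findings.append("readOnlyRootFilesystem is not set to true")
    return findings


# Hypothetical container that trips all three checks.
risky = {"name": "web", "image": "registry.local:5000/web"}
for finding in security_findings(risky):
    print(f"{risky['name']}: {finding}")
```

A check like this is most useful in CI, where it can fail a build before an unpinned or root-running container ever reaches the cluster.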

Final thoughts

Kubernetes is amazing, but it’s not psychic; it won’t magically do the right thing if you don’t tell it what you need. By keeping these pitfalls in mind, you’ll avoid a lot of headaches and wasted time. Mistakes happen (trust me, I’ve made my share), but each one is a chance to learn more about how Kubernetes truly works under the hood. If you’re curious to dive deeper, the official docs and the community Slack are excellent next steps. And of course, feel free to share your own horror stories or success tips, because at the end of the day, we’re all in this cloud native adventure together.

Happy Shipping!

Agentic AI’s OODA Loop Problem

The OODA loop—for observe, orient, decide, act—is a framework to understand decision-making in adversarial situations. We apply the same framework to artificial intelligence agents, who have to make their decisions with untrustworthy observations and orientation. To solve this problem, we need new systems of input, processing, and output integrity.

Many decades ago, U.S. Air Force Colonel John Boyd introduced the concept of the “OODA loop,” for Observe, Orient, Decide, and Act. These are the four steps of real-time continuous decision-making. Boyd developed it for fighter pilots, but it’s long been applied in artificial intelligence (AI) and robotics. An AI agent, like a pilot, executes the loop over and over, accomplishing its goals iteratively within an ever-changing environment. This is Anthropic’s definition: “Agents are models using tools in a loop.”...

Hands off Linkerd certificate rotation

This blog post was originally published on Matthew McLane’s Medium blog.

I’ll start by saying that I think Linkerd is a great tool. We use it at work to provide TLS between our pods, which frees us from having to build that functionality directly into our containers. When it works, it’s fantastic! It’s simple to get up and running and just does the job without a lot of extra fuss. For the most part, it’s been a very hands-off experience, which is exactly what we need.

3 Costly Mistakes in App and API Security and How to Avoid Them

Helm Turns 10

Ten years ago, in a hackathon shortly after the release of Kubernetes 1.1.0, Helm was born.

commit ecad6e2ef9523a0218864ec552bbfc724f0b9d3d

Author: Matt Butcher <[email protected]>

Date: Mon Oct 19 17:43:26 2015 -0600

initial add

The first commit can be found on the helm-classic Git repository where the codebase for Helm v1 is located. This is the original Helm, before it merged with Deployment Manager and was folded into the Kubernetes project.

This commit was just the beginning. Helm would be shown off at the first KubeCon, just a few weeks later. From there Helm development would take off and a community of developers and charts would follow.

Happy 10th Birthday, Helm!

Spotlight on Policy Working Group

(Note: The Policy Working Group has completed its mission and is no longer active. This article reflects its work, accomplishments, and insights into how a working group operates.)

In the complex world of Kubernetes, policies play a crucial role in managing and securing clusters. But have you ever wondered how these policies are developed, implemented, and standardized across the Kubernetes ecosystem? To answer that, let's take a look back at the work of the Policy Working Group.

The Policy Working Group was dedicated to a critical mission: providing an overall architecture that encompasses both current policy-related implementations and future policy proposals in Kubernetes. Their goal was both ambitious and essential: to develop a universal policy architecture that benefits developers and end-users alike.

Through collaborative methods, this working group strove to bring clarity and consistency to the often complex world of Kubernetes policies. By focusing on both existing implementations and future proposals, they ensured that the policy landscape in Kubernetes remains coherent and accessible as the technology evolves.

This blog post dives deeper into the work of the Policy Working Group, guided by insights from its former co-chairs: Jim Bugwadia, Andy Suderman, and Poonam Lamba.

Interviewed by Arujjwal Negi.

These co-chairs explained what the Policy Working Group was all about.

Introduction

Hello, thank you for the time! Let’s start with some introductions. Could you tell us a bit about yourself, your role, and how you got involved in Kubernetes?

Jim Bugwadia: My name is Jim Bugwadia, and I am a co-founder and the CEO at Nirmata which provides solutions that automate security and compliance for cloud-native workloads. At Nirmata, we have been working with Kubernetes since it started in 2014. We initially built a Kubernetes policy engine in our commercial platform and later donated it to CNCF as the Kyverno project. I joined the CNCF Kubernetes Policy Working Group to help build and standardize various aspects of policy management for Kubernetes and later became a co-chair.

Andy Suderman: My name is Andy Suderman and I am the CTO of Fairwinds, a managed Kubernetes-as-a-Service provider. I began working with Kubernetes in 2016 building a web conferencing platform. I am an author and/or maintainer of several Kubernetes-related open-source projects such as Goldilocks, Pluto, and Polaris. Polaris is a JSON-schema-based policy engine, which started Fairwinds' journey into the policy space and my involvement in the Policy Working Group.

Poonam Lamba: My name is Poonam Lamba, and I currently work as a Product Manager for Google Kubernetes Engine (GKE) at Google. My journey with Kubernetes began back in 2017 when I was building an SRE platform for a large enterprise, using a private cloud built on Kubernetes. Intrigued by its potential to revolutionize the way we deployed and managed applications at the time, I dove headfirst into learning everything I could about it. Since then, I've had the opportunity to build the policy and compliance products for GKE. I lead and contribute to GKE CIS benchmarks. I am also involved with the Gatekeeper project, and I contributed to Policy-WG for over 2 years and served as a co-chair for the group.

Responses to the following questions represent an amalgamation of insights from the former co-chairs.

About Working Groups

One thing even I am not aware of is the difference between a working group and a SIG. Can you help us understand what a working group is and how it is different from a SIG?

Unlike SIGs, working groups are temporary and focused on tackling specific, cross-cutting issues or projects that may involve multiple SIGs. Their lifespan is defined, and they disband once they've achieved their objective. Generally, working groups don't own code or have long-term responsibility for managing a particular area of the Kubernetes project.

(To know more about SIGs, visit the list of Special Interest Groups)

You mentioned that Working Groups involve multiple SIGs. What SIGs was the Policy WG closely involved with, and how did you coordinate with them?

The group collaborated closely with Kubernetes SIG Auth throughout its existence and, more recently, with SIG Security since that SIG's formation. Our collaboration occurred in a few ways. We provided periodic updates during the SIG meetings to keep them informed of our progress and activities. Additionally, we used other community forums to maintain open lines of communication and ensure our work aligned with the broader Kubernetes ecosystem. This collaborative approach helped the group stay coordinated with related efforts across the Kubernetes community.

Policy WG

Why was the Policy Working Group created?

To enable a broad set of use cases, we recognize that Kubernetes is powered by a highly declarative, fine-grained, and extensible configuration management system. We've observed that a Kubernetes configuration manifest may have different portions that are important to various stakeholders. For example, some parts may be crucial for developers, while others might be of particular interest to security teams or address operational concerns. Given this complexity, we believe that policies governing the usage of these intricate configurations are essential for success with Kubernetes.

Our Policy Working Group was created specifically to research the standardization of policy definitions and related artifacts. We saw a need to bring consistency and clarity to how policies are defined and implemented across the Kubernetes ecosystem, given the diverse requirements and stakeholders involved in Kubernetes deployments.

Can you give me an idea of the work you did in the group?

We worked on several Kubernetes policy-related projects. Our initiatives included:

- We worked on a Kubernetes Enhancement Proposal (KEP) for the Kubernetes Policy Reports API. This aims to standardize how policy reports are generated and consumed within the Kubernetes ecosystem.

- We conducted a CNCF survey to better understand policy usage in the Kubernetes space. This helped gauge the practices and needs across the community at the time.

- We wrote a paper that will guide users in achieving PCI-DSS compliance for containers. This is intended to help organizations meet important security standards in their Kubernetes environments.

- We also worked on a paper highlighting how shifting security down can benefit organizations. This focuses on the advantages of implementing security measures earlier in the development and deployment process.

Can you tell us what were the main objectives of the Policy Working Group and some of your key accomplishments?

The charter of the Policy WG was to help standardize policy management for Kubernetes and educate the community on best practices.

To accomplish this we updated the Kubernetes documentation (Policies | Kubernetes), produced several whitepapers (Kubernetes Policy Management, Kubernetes GRC), and created the Policy Reports API (API reference) which standardizes reporting across various tools. Several popular tools such as Falco, Trivy, Kyverno, kube-bench, and others support the Policy Report API. A major milestone for the Policy WG was promoting the Policy Reports API to a SIG-level API or finding it a stable home.

Beyond that, as ValidatingAdmissionPolicy and MutatingAdmissionPolicy approached GA in Kubernetes, a key goal of the WG was to guide and educate the community on the tradeoffs and appropriate usage patterns for these built-in API objects and other CNCF policy management solutions like OPA/Gatekeeper and Kyverno.

Challenges

What were some of the major challenges that the Policy Working Group worked on?

During our work in the Policy Working Group, we encountered several challenges:

- One of the main issues we faced was finding time to consistently contribute. Given that many of us have other professional commitments, it can be difficult to dedicate regular time to the working group's initiatives.

- Another challenge we experienced was related to our consensus-driven model. While this approach ensures that all voices are heard, it can sometimes lead to slower decision-making processes. We valued thorough discussion and agreement, but this can occasionally delay progress on our projects.

- We've also encountered occasional differences of opinion among group members. These situations require careful navigation to ensure that we maintain a collaborative and productive environment while addressing diverse viewpoints.

- Lastly, we've noticed that newcomers to the group may find it difficult to contribute effectively without consistent attendance at our meetings. The complex nature of our work often requires ongoing context, which can be challenging for those who aren't able to participate regularly.

Can you tell me more about those challenges? How did you discover each one? What has the impact been? What were some strategies you used to address them?

There are no easy answers, but having more contributors and maintainers greatly helps! Overall the CNCF community is great to work with and is very welcoming to beginners. So, if folks out there are hesitating to get involved, I highly encourage them to attend a WG or SIG meeting and just listen in.

It often takes a few meetings to fully understand the discussions, so don't feel discouraged if you don't grasp everything right away. We made a point to emphasize this and encouraged new members to review documentation as a starting point for getting involved.

Additionally, differences of opinion were valued and encouraged within the Policy-WG. We adhered to the CNCF core values and resolved disagreements by maintaining respect for one another. We also strove to timebox our decisions and assign clear responsibilities to keep things moving forward.

This is where our discussion about the Policy Working Group ends. The working group, and especially the people who took part in this article, hope this gave you some insights into the group's aims and workings. You can get more info about Working Groups here.