Feed aggregator

Cloudflare Scrubs Aisuru Botnet from Top Domains List

For the past week, domains associated with the massive Aisuru botnet have repeatedly usurped Amazon, Apple, Google and Microsoft in Cloudflare’s public ranking of the most frequently requested websites. Cloudflare responded by redacting Aisuru domain names from their top websites list. The chief executive at Cloudflare says Aisuru’s overlords are using the botnet to boost their malicious domain rankings, while simultaneously attacking the company’s domain name system (DNS) service.

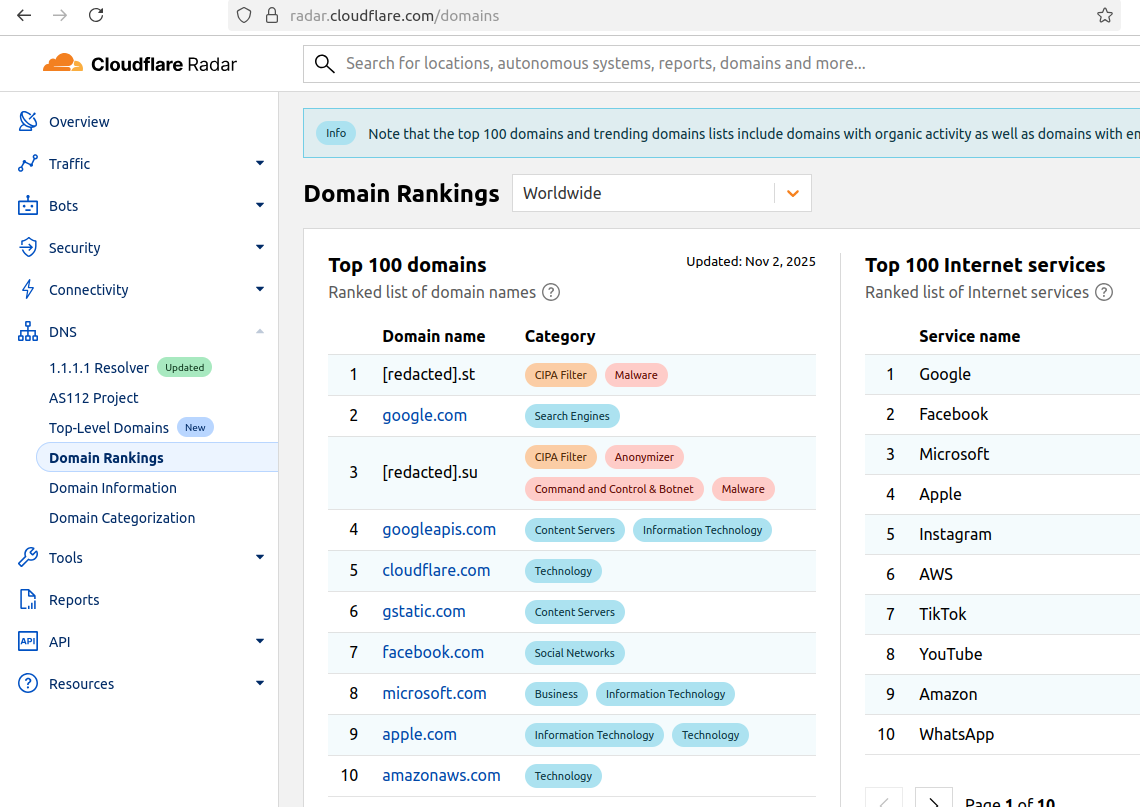

The #1 and #3 positions in this chart are Aisuru botnet controllers with their full domain names redacted. Source: radar.cloudflare.com.

Aisuru is a rapidly growing botnet comprising hundreds of thousands of hacked Internet of Things (IoT) devices, such as poorly secured Internet routers and security cameras. The botnet has increased in size and firepower significantly since its debut in 2024, demonstrating the ability to launch record distributed denial-of-service (DDoS) attacks nearing 30 terabits of data per second.

Until recently, Aisuru’s malicious code instructed all infected systems to use DNS servers from Google — specifically, the servers at 8.8.8.8. But in early October, Aisuru switched to invoking Cloudflare’s main DNS server — 1.1.1.1 — and over the past week domains used by Aisuru to control infected systems started populating Cloudflare’s top domain rankings.

As screenshots of Aisuru domains claiming two of the Top 10 positions ping-ponged across social media, many feared this was yet another sign that an already untamable botnet was running completely amok. One Aisuru botnet domain that sat prominently for days at #1 on the list was someone’s street address in Massachusetts followed by “.com”. Other Aisuru domains mimicked those belonging to major cloud providers.

Cloudflare tried to address these security, brand confusion and privacy concerns by partially redacting the malicious domains, and adding a warning at the top of its rankings:

“Note that the top 100 domains and trending domains lists include domains with organic activity as well as domains with emerging malicious behavior.”

Cloudflare CEO Matthew Prince told KrebsOnSecurity the company’s domain ranking system is fairly simplistic, and that it merely measures the volume of DNS queries to 1.1.1.1.

“The attacker is just generating a ton of requests, maybe to influence the ranking but also to attack our DNS service,” Prince said, adding that Cloudflare has heard reports of other large public DNS services seeing a similar uptick in attacks. “We’re fixing the ranking to make it smarter. And, in the meantime, redacting any sites we classify as malware.”

Renee Burton, vice president of threat intel at the DNS security firm Infoblox, said many people erroneously assumed that the skewed Cloudflare domain rankings meant there were more bot-infected devices than there were regular devices querying sites like Google and Apple and Microsoft.

“Cloudflare’s documentation is clear — they know that when it comes to ranking domains you have to make choices on how to normalize things,” Burton wrote on LinkedIn. “There are many aspects that are simply out of your control. Why is it hard? Because reasons. TTL values, caching, prefetching, architecture, load balancing. Things that have shared control between the domain owner and everything in between.”

Alex Greenland is CEO of the anti-phishing and security firm Epi. Greenland said he understands the technical reason why Aisuru botnet domains are showing up in Cloudflare’s rankings (those rankings are based on DNS query volume, not actual web visits). But he said they’re still not meant to be there.

“It’s a failure on Cloudflare’s part, and reveals a compromise of the trust and integrity of their rankings,” he said.

Greenland said Cloudflare planned for its Domain Rankings to list the most popular domains as used by human users, and it was never meant to be a raw calculation of query frequency or traffic volume going through their 1.1.1.1 DNS resolver.

“They spelled out how their popularity algorithm is designed to reflect real human use and exclude automated traffic (they said they’re good at this),” Greenland wrote on LinkedIn. “So something has evidently gone wrong internally. We should have two rankings: one representing trust and real human use, and another derived from raw DNS volume.”

Why might it be a good idea to wholly separate malicious domains from the list? Greenland notes that Cloudflare Domain Rankings see widespread use for trust and safety determination, by browsers, DNS resolvers, safe browsing APIs and things like TRANCO.

“TRANCO is a respected open source list of the top million domains, and Cloudflare Radar is one of their five data providers,” he continued. “So there can be serious knock-on effects when a malicious domain features in Cloudflare’s top 10/100/1000/million. To many people and systems, the top 10 and 100 are naively considered safe and trusted, even though algorithmically-defined top-N lists will always be somewhat crude.”

Over this past week, Cloudflare started redacting portions of the malicious Aisuru domains from its Top Domains list, leaving only their domain suffix visible. Sometime in the past 24 hours, Cloudflare appears to have begun hiding the malicious Aisuru domains entirely from the web version of that list. However, downloading a spreadsheet of the current Top 200 domains from Cloudflare Radar shows an Aisuru domain still at the very top.

According to Cloudflare’s website, the majority of DNS queries to the top Aisuru domains — nearly 52 percent — originated from the United States. This tracks with my reporting from early October, which found Aisuru was drawing most of its firepower from IoT devices hosted on U.S. Internet providers like AT&T, Comcast and Verizon.

Experts tracking Aisuru say the botnet relies on well more than a hundred control servers, and that for the moment at least most of those domains are registered in the .su top-level domain (TLD). Dot-su is the TLD assigned to the former Soviet Union (.su’s Wikipedia page says the TLD was created just 15 months before the fall of the Berlin Wall).

A Cloudflare blog post from October 27 found that .su had the highest “DNS magnitude” of any TLD, referring to a metric estimating the popularity of a TLD based on the number of unique networks querying Cloudflare’s 1.1.1.1 resolver. The report concluded that the top .su hostnames were associated with a popular online world-building game, and that more than half of the queries for that TLD came from the United States, Brazil and Germany [it’s worth noting that servers for the world-building game Minecraft were some of Aisuru’s most frequent targets].
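The difference between ranking by raw query volume and ranking by the number of distinct querying networks can be sketched in a few lines. This is only an illustration, not Cloudflare's method: the `(client_ip, domain)` input format, the choice of a /24 as a "network", the IPv4-only handling and the function name `rank_domains` are all assumptions made for the example. Neither metric filters out botnet traffic on its own, since a large botnet also spans many networks.

```python
from collections import Counter, defaultdict
import ipaddress


def rank_domains(queries):
    """Rank domains two ways from (client_ip, domain) pairs.

    Returns (by_volume, by_networks):
      by_volume   - domains ordered by total query count
      by_networks - domains ordered by number of distinct /24 networks
                    (a /24 is an arbitrary, illustrative definition of "network")
    """
    volume = Counter()
    networks = defaultdict(set)
    for client_ip, domain in queries:
        volume[domain] += 1
        # Collapse the IPv4 client address to its enclosing /24 network.
        net = ipaddress.ip_network(f"{client_ip}/24", strict=False)
        networks[domain].add(net)
    by_volume = [d for d, _ in volume.most_common()]
    # Sort by descending network count, then by name for a stable order.
    by_networks = sorted(networks, key=lambda d: (-len(networks[d]), d))
    return by_volume, by_networks
```

With a handful of clients on one network hammering a single name, that name tops the volume ranking but falls behind a name queried once each from several networks.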

A simple and crude way to detect Aisuru bot activity on a network may be to set an alert on any systems attempting to contact domains ending in .su. This TLD is frequently abused for cybercrime and by cybercrime forums and services, and blocking access to it entirely is unlikely to raise any legitimate complaints.
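As a rough sketch of that kind of alert, the snippet below scans DNS query log lines and groups .su lookups by client. The log format (`<client_ip> <query_name>` per line) and the function names are hypothetical; a real resolver or firewall log would need its own parsing.

```python
from collections import defaultdict


def is_su_domain(name: str) -> bool:
    """Return True if the queried name falls under the .su TLD."""
    labels = name.strip().rstrip(".").lower().split(".")
    return labels[-1] == "su"


def find_su_lookups(log_lines):
    """Map each client to the set of .su names it queried.

    Assumes a hypothetical log format of '<client_ip> <query_name>';
    lines that do not match are skipped.
    """
    hits = defaultdict(set)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue
        client, qname = parts
        if is_su_domain(qname):
            hits[client].add(qname.rstrip(".").lower())
    return dict(hits)
```

Matching on the final label rather than a substring avoids flagging names such as `su.example.org`, which only contain "su" somewhere in the middle.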

Exceptions in Cranelift and Wasmtime

Scientists Need a Positive Vision for AI

For many in the research community, it’s gotten harder to be optimistic about the impacts of artificial intelligence.

As authoritarianism is rising around the world, AI-generated “slop” is overwhelming legitimate media, while AI-generated deepfakes are spreading misinformation and parroting extremist messages. AI is making warfare more precise and deadly amidst intransigent conflicts. AI companies are exploiting people in the global South who work as data labelers, and profiting from content creators worldwide by using their work without license or compensation. The industry is also affecting an already-roiling climate with its ...

Cybercriminals Targeting Payroll Sites

Microsoft is warning of a scam involving online payroll systems. Criminals use social engineering to steal people’s credentials, and then divert direct deposits into accounts that they control. Sometimes they do other things to make it harder for the victim to realize what is happening.

I feel like this kind of thing is happening everywhere, with everything. As we move more of our personal and professional lives online, we enable criminals to subvert the very systems we rely on.

Helm @ KubeCon + CloudNativeCon NA '25

The Helm team is headed to KubeCon + CloudNativeCon NA '25 in Atlanta, Georgia next week and it's truly a special one for us! This time around, as we celebrate our 10th birthday (fun fact, Helm was launched at the first KubeCon in 2015), we will also be releasing the highly anticipated Helm 4! Join us for a series of exciting activities throughout the week -- read on for more details!

Helm Booth in Project Pavilion

Don't miss out on meeting our project maintainers at the Helm booth - we'll be hanging out the second half of each day. Drop by to ask questions, learn about what's in Helm 4, and pick up special Hazel swag celebrating Helm's 10th birthday!

- TUES Nov 11 @ 03:30 PM - 07:45 PM ET
- WED Nov 12 @ 02:00 PM - 05:00 PM ET
- THUR Nov 13 @ 12:30 PM - 02:00 PM ET

LOCATION: 8B, back side of Flux and across from Argo

Tuesday November 11, 2025

Simplifying Advanced AI Model Serving on Kubernetes Using Helm Charts

TIME: 12:00 PM - 12:30 PM ET

LOCATION: Building B | Level 4 | B401-402

SPEAKERS: Ajay Vohra & Caron Zhang

The AI model serving landscape on Kubernetes presents practitioners with an overwhelming array of technology choices: inference servers like Ray Serve and Triton Inference Server, inference engines like vLLM, and orchestration platforms like Ray Cluster and KServe. While this diversity drives innovation, it also creates complexity. Teams often prematurely standardize on limited technology stacks to manage this complexity.

This talk introduces an innovative Helm-based approach that abstracts the complexity of AI model serving while preserving the flexibility to leverage the best tools for each use case. Our solution is accelerator agnostic, and provides a consistent YAML interface for deploying and experimenting with various serving technologies.

We'll demonstrate this approach through two concrete examples of multi-node, multi-accelerator model serving with auto scaling: 1/ Ray Serve + vLLM + Ray Cluster, and 2/ LeaderWorkerSet + Triton Inference Server + vLLM + Ray Cluster + HPA.

Wednesday November 12, 2025

Maintainer Track: Introducing Helm 4

TIME: 11:00 AM - 11:30 AM ET

LOCATION: Building C | Level 3 | Georgia Ballroom 1

SPEAKERS: Matt Farina & Robert Sirchia (Helm Maintainers)

The wait is over! After six years with Helm v3, Helm v4 is finally here. In this session you'll learn about Helm v4, why there were six years between major versions (from backwards compatible feature development to maintainer ups and downs), what's new in Helm v4, how long Helm v3 will still be supported, and what comes next. Could that include a Helm v5?

Contribfest: Hands-On With Helm 4: Wasm Plugins, OCI, and Resource Sequencing. Oh My!

TIME: 02:15 PM - 03:30 PM ET

LOCATION: Building B | Level 2 | B207

SPEAKERS: Andrew Block, Scott Rigby, & George Jenkins (Helm Maintainers)

Join Helm maintainers for an interactive session contributing to core Helm and building integrations with some of Helm 4's emerging features. We'll guide contributors through creating Helm 4's newest enhancements including WebAssembly plugins, enhancements to how OCI content is managed, and implementing resource sequencing for controlled deployment order. Attendees will explore how to build Download/Postrender/CLI plugins in WebAssembly, develop capabilities related to changes to Helm's management of OCI content including repository prefixes and aliases, and use approaches for sequencing chart deployments beyond Helm's traditional mechanisms.

This session is geared toward anyone interested in Helm development including leveraging and building upon some of the latest features associated with Helm 4!

Helm 4 Release Party

TIME: 06:00 PM - 09:00 PM ET

LOCATION: Max Lager's Wood-Fired Grill & Brewery

Replicated and the CNCF are throwing a Helm 4 Release Party to celebrate the release of Helm 4! Drop by for a low country boil and hang out with the Helm project maintainers for the night! See the invitation here, and don't forget to save your spot – RSVP here.

Thursday November 13, 2025

Mission Abort: Intercepting Dangerous Deletes Before Helm Hits Apply

TIME: 01:45 PM - 02:15 PM ET

LOCATION: Building B | Level 5 | Thomas Murphy Ballroom 4

SPEAKERS: Payal Godhani

What if your next Helm deployment silently deletes a LoadBalancer, a Gateway, or an entire namespace? We’ve lived that nightmare—multiple times. In this talk, we’ll share how we turned painful Sev1 outages into a resilient, guardrail-first deployment strategy. By integrating Helm Diff and Argo CD Diff, we built a system that scans every deployment for destructive changes—like the removal of LoadBalancers, KGateways, Services, PVCs, or Namespaces—and blocks them unless explicitly approved. This second-layer approval acts as a safety circuit for your release pipelines. No guesswork. No blind deploys. Just real-time visibility into what’s about to break—before it actually does. Whether you’re managing a single cluster or an entire fleet, this talk will show you how to stop fearing Helm and start trusting it again. Because resilience isn’t about avoiding failure—it’s about learning, adapting, and building guardrails that protect everyone.
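The guardrail idea in this abstract can be sketched as a simple check: compare the resources currently deployed with those in the proposed release, and block when a protected kind would disappear without explicit approval. This is not the speaker's implementation and does not call Helm Diff or Argo CD. It assumes manifests have already been parsed into dictionaries, and the `PROTECTED_KINDS` set and function names are hypothetical.

```python
# Kinds whose removal should require explicit approval (illustrative choice).
PROTECTED_KINDS = {"Service", "Gateway", "PersistentVolumeClaim", "Namespace"}


def resource_key(manifest):
    """Identify a resource by (kind, namespace, name)."""
    meta = manifest.get("metadata", {})
    return (manifest.get("kind", ""), meta.get("namespace", ""), meta.get("name", ""))


def find_destructive_removals(current, proposed, protected=PROTECTED_KINDS):
    """Return protected resources present in `current` but missing from `proposed`."""
    proposed_keys = {resource_key(m) for m in proposed}
    return sorted(
        key
        for key in (resource_key(m) for m in current)
        if key[0] in protected and key not in proposed_keys
    )


def gate(current, proposed, approved=False):
    """Return (allowed, removals): block destructive removals unless approved."""
    removals = find_destructive_removals(current, proposed)
    return (not removals or approved), removals
```

A pipeline step could call `gate` before applying a release and fail the job when `allowed` is false, printing `removals` so a reviewer can approve or reject the change.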

Announcing Vitess 23.0.0

AI Summarization Optimization

These days, the most important meeting attendee isn’t a person: It’s the AI notetaker.

This system assigns action items and determines the importance of what is said. If it becomes necessary to revisit the facts of the meeting, its summary is treated as impartial evidence.

But clever meeting attendees can manipulate this system’s record by speaking more to what the underlying AI weights for summarization and importance than to their colleagues. As a result, you can expect some meeting attendees to use language more likely to be captured in summaries, timing their interventions strategically, repeating key points, and employing formulaic phrasing that AI models are more likely to pick up on. Welcome to the world of AI summarization optimization (AISO)...

Alleged Jabber Zeus Coder ‘MrICQ’ in U.S. Custody

A Ukrainian man indicted in 2012 for conspiring with a prolific hacking group to steal tens of millions of dollars from U.S. businesses was arrested in Italy and is now in custody in the United States, KrebsOnSecurity has learned.

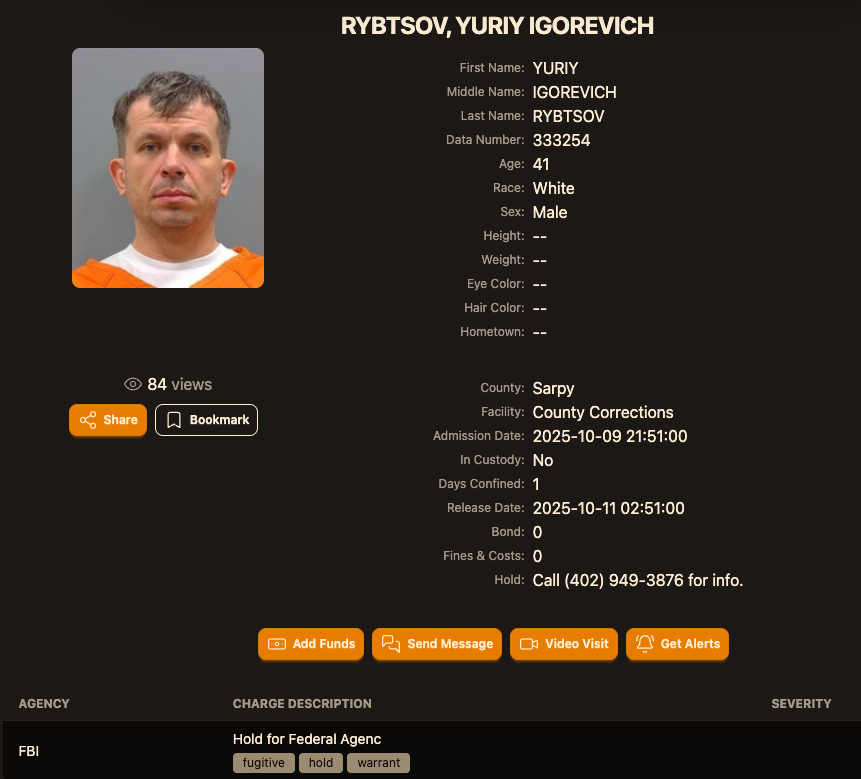

Sources close to the investigation say Yuriy Igorevich Rybtsov, a 41-year-old from the Russia-controlled city of Donetsk, Ukraine, was previously referenced in U.S. federal charging documents only by his online handle “MrICQ.” According to a 13-year-old indictment (PDF) filed by prosecutors in Nebraska, MrICQ was a developer for a cybercrime group known as “Jabber Zeus.”

Image: lockedup dot wtf.

The Jabber Zeus name is derived from the malware they used — a custom version of the ZeuS banking trojan — that stole banking login credentials and would send the group a Jabber instant message each time a new victim entered a one-time passcode at a financial institution website. The gang targeted mostly small to mid-sized businesses, and they were an early pioneer of so-called “man-in-the-browser” attacks, malware that can silently intercept any data that victims submit in a web-based form.

Once inside a victim company’s accounts, the Jabber Zeus crew would modify the firm’s payroll to add dozens of “money mules,” people recruited through elaborate work-at-home schemes to handle bank transfers. The mules in turn would forward any stolen payroll deposits — minus their commissions — via wire transfers to other mules in Ukraine and the United Kingdom.

The 2012 indictment targeting the Jabber Zeus crew named MrICQ as “John Doe #3,” and said this person handled incoming notifications of newly compromised victims. The Department of Justice (DOJ) said MrICQ also helped the group launder the proceeds of their heists through electronic currency exchange services.

Two sources familiar with the Jabber Zeus investigation said Rybtsov was arrested in Italy, although the exact date and circumstances of his arrest remain unclear. A summary of recent decisions (PDF) published by the Italian Supreme Court states that in April 2025, Rybtsov lost a final appeal to avoid extradition to the United States.

According to the mugshot website lockedup[.]wtf, Rybtsov arrived in Nebraska on October 9, and was being held under an arrest warrant from the U.S. Federal Bureau of Investigation (FBI).

The data breach tracking service Constella Intelligence found breached records from the business profiling site bvdinfo[.]com showing that a 41-year-old Yuriy Igorevich Rybtsov worked in a building at 59 Barnaulska St. in Donetsk. Further searching on this address in Constella finds the same apartment building was shared by a business registered to Vyacheslav “Tank” Penchukov, the leader of the Jabber Zeus crew in Ukraine.

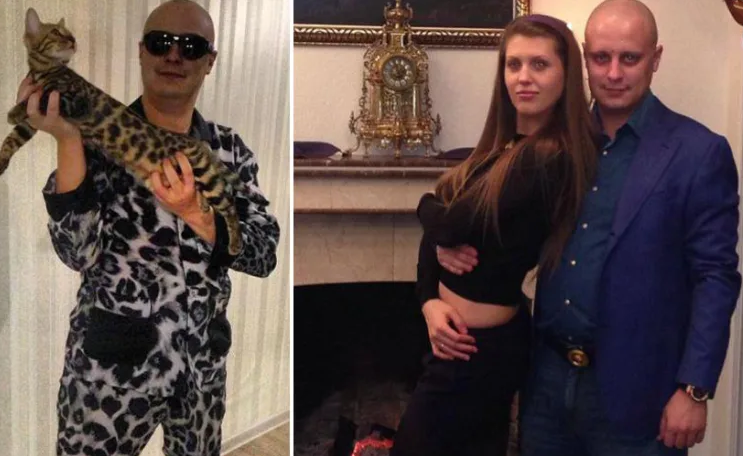

Vyacheslav “Tank” Penchukov, seen here performing as “DJ Slava Rich” in Ukraine, in an undated photo from social media.

Penchukov was arrested in 2022 while traveling to meet his wife in Switzerland. Last year, a federal court in Nebraska sentenced Penchukov to 18 years in prison and ordered him to pay more than $73 million in restitution.

Lawrence Baldwin is founder of myNetWatchman, a threat intelligence company based in Georgia that began tracking and disrupting the Jabber Zeus gang in 2009. myNetWatchman had secretly gained access to the Jabber chat server used by the Ukrainian hackers, allowing Baldwin to eavesdrop on the daily conversations between MrICQ and other Jabber Zeus members.

Baldwin shared those real-time chat records with multiple state and federal law enforcement agencies, and with this reporter. Between 2010 and 2013, I spent several hours each day alerting small businesses across the country that their payroll accounts were about to be drained by these cybercriminals.

Those notifications, and Baldwin’s tireless efforts, saved countless would-be victims a great deal of money. In most cases, however, we were already too late. Nevertheless, the pilfered Jabber Zeus group chats provided the basis for dozens of stories published here about small businesses fighting their banks in court over six- and seven-figure financial losses.

Baldwin said the Jabber Zeus crew was far ahead of its peers in several respects. For starters, their intercepted chats showed they worked to create a highly customized botnet directly with the author of the original Zeus Trojan — Evgeniy Mikhailovich Bogachev, a Russian man who has long been on the FBI’s “Most Wanted” list. The feds have a standing $3 million reward for information leading to Bogachev’s arrest.

Evgeniy M. Bogachev, in undated photos.

The core innovation of Jabber Zeus was an alert that MrICQ would receive each time a new victim entered a one-time password code into a phishing page mimicking their financial institution. The gang’s internal name for this component was “Leprechaun” (the video below from myNetWatchman shows it in action). Jabber Zeus would actually re-write the HTML code as displayed in the victim’s browser, allowing them to intercept any passcodes sent by the victim’s bank for multi-factor authentication.

“These guys had compromised such a large number of victims that they were getting buried in a tsunami of stolen banking credentials,” Baldwin told KrebsOnSecurity. “But the whole point of Leprechaun was to isolate the highest-value credentials — the commercial bank accounts with two-factor authentication turned on. They knew these were far juicier targets because they clearly had a lot more money to protect.”

Baldwin said the Jabber Zeus trojan also included a custom “backconnect” component that allowed the hackers to relay their bank account takeovers through the victim’s own infected PC.

“The Jabber Zeus crew were literally connecting to the victim’s bank account from the victim’s IP address, or from the remote control function and by fully emulating the device,” he said. “That trojan was like a hot knife through butter of what everyone thought was state-of-the-art secure online banking at the time.”

Although the Jabber Zeus crew was in direct contact with the Zeus author, the chats intercepted by myNetWatchman show Bogachev frequently ignored the group’s pleas for help. The government says the real leader of the Jabber Zeus crew was Maksim Yakubets, a 38-year-old Ukrainian man with Russian citizenship who went by the hacker handle “Aqua.”

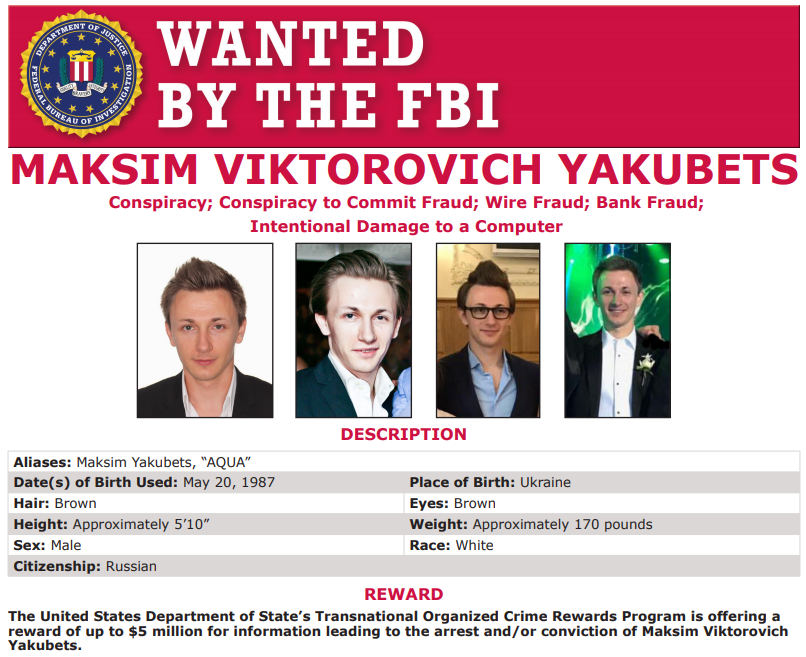

Alleged Evil Corp leader Maksim “Aqua” Yakubets. Image: FBI

The Jabber chats intercepted by Baldwin show that Aqua interacted almost daily with MrICQ, Tank and other members of the hacking team, often facilitating the group’s money mule and cashout activities remotely from Russia.

The government says Yakubets/Aqua would later emerge as the leader of an elite cybercrime ring of at least 17 hackers that referred to themselves internally as “Evil Corp.” Members of Evil Corp developed and used the Dridex (a.k.a. Bugat) trojan, which helped them siphon more than $100 million from hundreds of victim companies in the United States and Europe.

This 2019 story about the government’s $5 million bounty for information leading to Yakubets’s arrest includes excerpts of conversations between Aqua, Tank, Bogachev and other Jabber Zeus crew members discussing stories I’d written about their victims. Both Baldwin and I were interviewed at length for a new weekly six-part podcast by the BBC that delves deep into the history of Evil Corp. Episode One focuses on the evolution of Zeus, while the second episode centers on an investigation into the group by former FBI agent Jim Craig.

Image: https://www.bbc.co.uk/programmes/w3ct89y8

Friday Squid Blogging: Giant Squid at the Smithsonian

I can’t believe that I haven’t yet posted this picture of a giant squid at the Smithsonian.

As usual, you can also use this squid post to talk about the security stories in the news that I haven’t covered.

Will AI Strengthen or Undermine Democracy?

Listen to the Audio on NextBigIdeaClub.com

Below, co-authors Bruce Schneier and Nathan E. Sanders share five key insights from their new book, Rewiring Democracy: How AI Will Transform Our Politics, Government, and Citizenship.

What’s the big idea?

AI can be used both for and against the public interest within democracies. It is already being used in the governing of nations around the world, and there is no escaping its continued use in the future by leaders, policy makers, and legal enforcers. How we wire AI into democracy today will determine if it becomes a tool of oppression or empowerment...

Announcing Linkerd 2.19: Post-quantum cryptography

Today we’re happy to announce Linkerd 2.19! This release introduces a significant state-of-the-art security improvement for Linkerd: a modernized TLS stack that uses post-quantum key exchange algorithms by default.

Linkerd has now seen almost a decade of continuous improvement and evolution. Our goal is to build a service mesh that our users can rely on for 100 years. To do this, we partner with users like Grammarly to ensure that Linkerd can accelerate the full scale and scope of modern software environments, and then we feed those lessons directly back into the product. The Linkerd 2.19 release is the third major version since the announcement of Buoyant’s profitability and Linkerd project sustainability a year ago, and continues our laser focus on operational simplicity, delivering the notoriously complex service mesh feature set in a way that is manageable, scalable, and performant.

How Non-Developers Can Contribute to Prometheus

My first introduction to the Prometheus project was through the Linux Foundation mentorship program, where I conducted UX research. I remember the anxiety I felt when I was selected as a mentee. I was new not just to Prometheus, but to observability entirely. I worried I was in over my head, working in a heavily developer-focused domain with no development background.

That anxiety turned out to be unfounded. I went on to make meaningful contributions to the project, and I've learned that what I experienced is nearly universal among non-technical contributors to open source.

If you're feeling that same uncertainty, this post is for you. I'll share the challenges you're likely to face (or already face), why your contributions matter, and how to find your place in the Prometheus community.

The Challenges Non-Technical Contributors Face

As a non-technical contributor, I've had my share of obstacles in open source. And from conversations with others navigating these spaces, I've found the struggles are remarkably consistent. Here are the most common barriers:

1. The Technical Intimidation Factor

I've felt out of place in open source spaces, mostly because technical contributors vastly outnumber non-technical ones. Even the non-technical people often have technical backgrounds or have been around long enough to understand what's happening.

When every conversation references concepts you don't know, it's easy to feel intimidated. You join meetings and stay silent throughout. You respond in the chat instead of unmuting because you don't trust yourself to speak up in a recorded meeting where everyone else seems fluent in a technical language you're still learning.

2. Unclear Value Proposition

Open source projects rarely spell out their non-technical needs the way a job posting would. You will rarely find an issue titled "Need: someone to interview users and write case studies" or "Wanted: community manager to organize monthly meetups." Instead, you’re more likely to see a backlog of GitHub issues about bugs, feature requests, and code refactoring.

Even if you have valuable skills, you don't know where they're needed, how to articulate your value, or whether your contributions will be seen as mission-critical or just nice-to-have. Without a clear sense of how you fit in, it's difficult to reach out confidently. You end up sending vague messages like "I'd love to help! Let me know if there's anything I can do", which rarely leads anywhere because maintainers are busy and don't have time to figure out how to match your skills to their needs.

3. Lack of Visible Non-Technical Contributors

One of the things that draws me to an open source community or project is finding other people like me. I think it's the same way for most people. Representation matters. It's hard to be what you can't see.

It’s even more difficult to find non-technical contributors because their contributions are often invisible in the ways projects typically showcase work. GitHub contribution graphs count commits. Changelogs list code changes and bug fixes. You only get the "contributor" label when you've created a pull request that got merged. So, even when people are organizing events, supporting users, or conducting research, their work doesn't show up in the same prominent ways code does.

4. The Onboarding Gap

A typical "Contributing Guide" will walk you through setting up a development environment, creating a branch, running tests, and submitting a pull request. But it rarely explains how to contribute documentation improvements, where design feedback should go, or how community support is organized.

You see "Join our community" with a link to a Slack workspace. But between joining and making your first contribution, there's a significant gap. There are hundreds of people in dozens of channels. Who's a maintainer and who's just another community member? Which channel is appropriate for your questions? Who should you tag when you need guidance?

Why These Gaps Exist

It's worth acknowledging that most of the time, these gaps aren't intentional. Projects don't set out to exclude non-technical contributors or make it harder for them to participate.

In most cases, a small group of developers build something useful and decide to open-source it. They invite people they know who might need it (often other developers) to contribute. The project grows organically within those networks. It becomes a community of developers building tools for developers, and certain functions simply don't feel necessary yet. Marketing? The word spreads naturally through tech circles. Community management? The community is small and self-organizing. UX design? They're developers comfortable with command-line interfaces, so they may not fully consider the experience of using a graphical interface.

None of this is malicious. It's just that the project evolved in a context where those skills weren't obviously needed.

The shift happens when someone, often a non-technical contributor who sees the potential, steps in and says: "You've built something valuable and grown an impressive community. But here's what you might be missing. Here's how documentation could lower the barrier to entry. Here's how community management could retain contributors. Here's how user research could guide your roadmap."

Why Non-Technical Contributions Matter

Prometheus is a powerful monitoring system backed by a large community of developers. But like any open source project, it needs more than code to thrive.

It needs accessible documentation. From my experience working with engineers, most would rather focus on building than writing docs, and understandably so. Engineers who know the system inside out often write documentation that assumes knowledge newcomers don't have. What makes perfect sense to someone who built the feature can feel impenetrable to someone encountering it for the first time. A technical writer testing the product from an end user's perspective, not a builder's, can bridge that gap and lower the barrier to entry.

It needs organization. The GitHub issues backlog has hundreds of open items that haven't been triaged. Maintainers spend valuable time parsing what users actually need instead of building solutions. A project manager or someone with triage experience could turn that chaos into a clear roadmap, allowing maintainers to spend their time building solutions.

It needs community support. Imagine a user who joins the Slack workspace, excited to contribute. They don't know where to start. They ask a question that gets buried in the stream of messages. They quietly leave. The project just lost a potential contributor because no one was there to welcome them and point them in the right direction.

These are the situations non-technical contributions can help prevent. Good documentation lowers the barrier to entry, which means more adoption, more feedback, and better features. Active community management retains contributors who would otherwise drift away, which means distributed knowledge and less maintainer burnout. Organization and triage turn scattered input into actionable priorities.

The Prometheus maintainers are doing exceptional work building a robust, scalable monitoring system. But they can't do everything, and they shouldn't have to. The question now isn't whether non-technical contributions matter. It's whether we create the space for them to happen.

Practical Ways You Can Contribute to Prometheus

If you're ready to contribute to Prometheus but aren't sure where to start, here are some areas where non-technical skills are actively needed.

1. Join the UX Efforts

Prometheus is actively working to improve its user experience, and the community now has a UX Working Group dedicated to this effort.

If you're a UX researcher, designer, or someone with an eye for usability, this is an excellent time to get involved. The working group is still taking shape, with ongoing discussions about priorities and processes. Join the Slack channel to participate in these conversations and watch for upcoming announcements about specific ways to contribute.

I can tell you from experience that the community is receptive to UX contributions, and your work will have a real impact.

2. Write for the Prometheus Blog

If you're a technical writer or content creator, the Prometheus blog is a natural entry point. The blog publishes tutorials, case studies, best practices, community updates, and generally, content that helps users get more value from Prometheus.

Check out the blog content guide to understand what makes a strong blog proposal and how to publish a post on the blog. There's an audience eager to learn from your experience.

3. Improve and Maintain Documentation

Documentation is one of those perpetual needs in open source. There's always something that could be clearer, more complete, or better organized. The Prometheus docs repo is no exception.

You can contribute by fixing typos and broken links, expanding getting-started guides, creating tutorials for common monitoring scenarios, or triaging issues to help prioritize what needs attention. Even if you don't consider yourself a technical writer, if you've ever been confused by the docs and figured something out, you can help make it clearer for the next person.

4. Help Organize PromCon

PromCon is Prometheus's annual conference, and it takes significant coordination to pull off. The organizing team handles everything from speaker selection and scheduling to venue logistics and sponsor relationships.

If you have experience in event planning, sponsor outreach, marketing, or communications, the PromCon organizers would welcome your help. Reach out to the organizing team or watch for announcements in the Prometheus community channels.

5. Advocate and Amplify

Finally, one of the simplest but most impactful things you can do is talk about Prometheus. Write blog posts about how you're using Prometheus. Give talks at local meetups or conferences. Share tips and learnings on social media. Create video tutorials or live streams. Recommend Prometheus to teams evaluating monitoring solutions.

Every piece of content, every conference talk, every social media post expands Prometheus's reach and helps new users discover it.

How to Get Started

If you're ready to contribute to Prometheus, here's what I've learned from my own experience navigating the community as a non-technical contributor:

Start by introducing yourself. When you join the #prometheus-dev Slack channel, say hello. Slack doesn't always make it obvious when someone new joins, so if you stay silent, people simply won't know you're there. A simple introduction—your name, what you do, what brought you to Prometheus—is enough to make your presence known.

Attend community meetings. Check out the community calendar and sync the meetings that interest you. Even if you don't understand everything being discussed at first (and that's completely normal), stay. The more you sit in, the more you'll learn about the community's needs and the more opportunities you'll find to contribute.

Observe before you act. It's tempting to jump in with ideas immediately, but spending time as an observer first pays off. Read through Slack discussions and conversations in GitHub issues. Browse the documentation. Notice what kinds of contributions are being made. You'll start to see patterns: recurring questions, documentation gaps, areas where help is needed. That's where your opportunity lies.

Ask questions. Everyone was new once. If something isn't clear, ask. If you don't get a response right away, give it some time—people are busy—then follow up. The community is welcoming, but you have to make yourself visible.

The Prometheus community has room for you. Now you know exactly where to begin.

The AI-Designed Bioweapon Arms Race

Interesting article about the arms race between AI systems that invent/design new biological pathogens, and AI systems that detect them before they’re created:

The team started with a basic test: use AI tools to design variants of the toxin ricin, then test them against the software that is used to screen DNA orders. The results of the test suggested there was a risk of dangerous protein variants slipping past existing screening software, so the situation was treated like the equivalent of a zero-day vulnerability.

[…]

Details of that original test are ...

Kubewarden 1.30 Release

GKE 10 Year Anniversary, with Gari Singh

GKE turned 10 in 2025! In this episode, we talk with GKE PM Gari Singh about GKE's journey from early container orchestration to AI-driven ops. Discover Autopilot, IPPR, and a bold vision for the future of Kubernetes.

Do you have something cool to share? Some questions? Let us know:

- mail: [email protected]
- bluesky: @kubernetespodcast.com

News of the week

- Cloud Native Computing Foundation Announces Knative's Graduation
- Introducing Headlamp Plugin for Karpenter - Scaling and Visibility

Links from the interview

- In-place Vertical Scaling of Pods - Resize CPU and Memory Resources assigned to Containers
- GKE under the hood: Container-optimized compute delivers fast autoscaling for Autopilot

Signal’s Post-Quantum Cryptographic Implementation

Signal has just rolled out its quantum-safe cryptographic implementation.

Ars Technica has a really good article with details:

Ultimately, the architects settled on a creative solution. Rather than bolt KEM onto the existing double ratchet, they allowed it to remain more or less the same as it had been. Then they used the new quantum-safe ratchet to implement a parallel secure messaging system.

Now, when the protocol encrypts a message, it sources encryption keys from both the classic Double Ratchet and the new ratchet. It then mixes the two keys together (using a cryptographic key derivation function) to get a new encryption key that has all of the security of the classical Double Ratchet but now has quantum security, too...
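The key-mixing step described in that excerpt can be illustrated with a short Python sketch. This is not Signal's actual construction: the use of HKDF with SHA-256, the all-zero salt, the `info` label, and the function names `hkdf_sha256` and `mix_keys` are all assumptions made for illustration. It only shows the general idea of deriving one message key from two independently produced keys, so that the result depends on both.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:  # expand step, one 32-byte block per iteration
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def mix_keys(classical_key: bytes, pq_key: bytes) -> bytes:
    """Derive one key from two fixed-length (32-byte) input keys.

    Hypothetical helper: the inputs are concatenated so the output changes
    if either one changes. The salt and info label are illustrative only.
    """
    return hkdf_sha256(
        classical_key + pq_key,
        salt=b"\x00" * 32,
        info=b"hybrid-message-key (hypothetical label)",
    )


# Stand-ins for the outputs of the two ratchets (random bytes here).
classical_key = os.urandom(32)
pq_key = os.urandom(32)
message_key = mix_keys(classical_key, pq_key)
print(message_key.hex())
```

Because both inputs feed the same derivation, an attacker would need to recover both the classical key and the post-quantum key to reconstruct the message key, which is the property the excerpt describes.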

Aisuru Botnet Shifts from DDoS to Residential Proxies

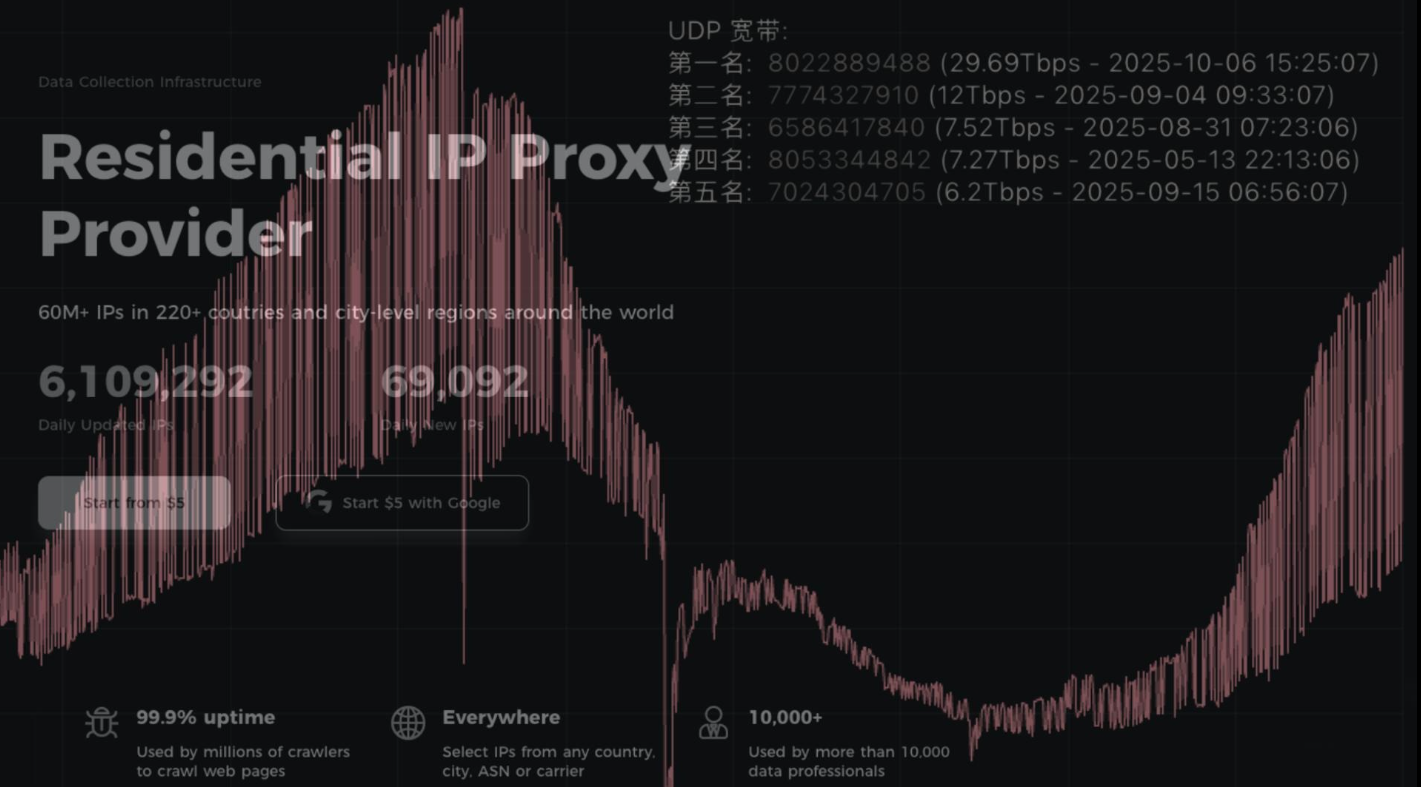

Aisuru, the botnet responsible for a series of record-smashing distributed denial-of-service (DDoS) attacks this year, recently was overhauled to support a more low-key, lucrative and sustainable business: Renting hundreds of thousands of infected Internet of Things (IoT) devices to proxy services that help cybercriminals anonymize their traffic. Experts say a glut of proxies from Aisuru and other sources is fueling large-scale data harvesting efforts tied to various artificial intelligence (AI) projects, helping content scrapers evade detection by routing their traffic through residential connections that appear to be regular Internet users.

First identified in August 2024, Aisuru has spread to at least 700,000 IoT systems, such as poorly secured Internet routers and security cameras. Aisuru’s overlords have used their massive botnet to clobber targets with headline-grabbing DDoS attacks, flooding targeted hosts with blasts of junk requests from all infected systems simultaneously.

In June, Aisuru hit KrebsOnSecurity.com with a DDoS clocking at 6.3 terabits per second — the biggest attack that Google had ever mitigated at the time. In the weeks and months that followed, Aisuru’s operators demonstrated DDoS capabilities of nearly 30 terabits of data per second — well beyond the attack mitigation capabilities of most Internet destinations.

These digital sieges have been particularly disruptive this year for U.S.-based Internet service providers (ISPs), in part because Aisuru recently succeeded in taking over a large number of IoT devices in the United States. And when Aisuru launches attacks, the volume of outgoing traffic from infected systems on these ISPs is often so high that it can disrupt or degrade Internet service for adjacent (non-botted) customers of the ISPs.

“Multiple broadband access network operators have experienced significant operational impact due to outbound DDoS attacks in excess of 1.5Tb/sec launched from Aisuru botnet nodes residing on end-customer premises,” wrote Roland Dobbins, principal engineer at Netscout, in a recent executive summary on Aisuru. “Outbound/crossbound attack traffic exceeding 1Tb/sec from compromised customer premise equipment (CPE) devices has caused significant disruption to wireline and wireless broadband access networks. High-throughput attacks have caused chassis-based router line card failures.”

The incessant attacks from Aisuru have caught the attention of federal authorities in the United States and Europe (many of Aisuru’s victims are customers of ISPs and hosting providers based in Europe). Quite recently, some of the world’s largest ISPs have started informally sharing block lists identifying the rapidly shifting locations of the servers that the attackers use to control the activities of the botnet.

Experts say the Aisuru botmasters recently updated their malware so that compromised devices can more easily be rented to so-called “residential proxy” providers. These proxy services allow paying customers to route their Internet communications through someone else’s device, providing anonymity and the ability to appear as a regular Internet user in almost any major city worldwide.

From a website’s perspective, the IP traffic of a residential proxy network user appears to originate from the rented residential IP address, not from the proxy service customer. Proxy services can be used in a legitimate manner for several business purposes — such as price comparisons or sales intelligence. But they are massively abused for hiding cybercrime activity (think advertising fraud, credential stuffing) because they can make it difficult to trace malicious traffic to its original source.

And as we’ll see in a moment, this entire shadowy industry appears to be shifting its focus toward enabling aggressive content scraping activity that continuously feeds raw data into large language models (LLMs) built to support various AI projects.

‘INSANE’ GROWTH

Riley Kilmer is co-founder of spur.us, a service that tracks proxy networks. Kilmer said all of the top proxy services have grown exponentially over the past six months, with some adding between 10 and 200 times more proxies for rent.

“I just checked, and in the last 90 days we’ve seen 250 million unique residential proxy IPs,” Kilmer said. “That is insane. That is so high of a number, it’s unheard of. These proxies are absolutely everywhere now.”

To put Kilmer’s comments in perspective, here was Spur’s view of the Top 10 proxy networks by approximate install base, circa May 2025:

AUPROXIES_PROXY 66,097

RAYOBYTE_PROXY 43,894

OXYLABS_PROXY 43,008

WEBSHARE_PROXY 39,800

IPROYAL_PROXY 32,723

PROXYCHEAP_PROXY 26,368

IPIDEA_PROXY 26,202

MYPRIVATEPROXY_PROXY 25,287

HYPE_PROXY 18,185

MASSIVE_PROXY 17,152

Today, Spur says it is tracking an unprecedented spike in available proxies across all providers, including:

LUMINATI_PROXY 11,856,421

NETNUT_PROXY 10,982,458

ABCPROXY_PROXY 9,294,419

OXYLABS_PROXY 6,754,790

IPIDEA_PROXY 3,209,313

EARNFM_PROXY 2,659,913

NODEMAVEN_PROXY 2,627,851

INFATICA_PROXY 2,335,194

IPROYAL_PROXY 2,032,027

YILU_PROXY 1,549,155
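Three providers appear in both of Spur's lists, so their implied growth can be worked out directly. The following Python sketch uses only the figures quoted above; the variable names are illustrative. Because each list is a Top 10, a provider missing from one list was not necessarily at zero, so only the overlapping names are compared.

```python
# Spur's approximate install base, circa May 2025 (figures quoted above).
may_2025 = {
    "AUPROXIES_PROXY": 66_097,
    "RAYOBYTE_PROXY": 43_894,
    "OXYLABS_PROXY": 43_008,
    "WEBSHARE_PROXY": 39_800,
    "IPROYAL_PROXY": 32_723,
    "PROXYCHEAP_PROXY": 26_368,
    "IPIDEA_PROXY": 26_202,
    "MYPRIVATEPROXY_PROXY": 25_287,
    "HYPE_PROXY": 18_185,
    "MASSIVE_PROXY": 17_152,
}

# Spur's current counts (figures quoted above).
current = {
    "LUMINATI_PROXY": 11_856_421,
    "NETNUT_PROXY": 10_982_458,
    "ABCPROXY_PROXY": 9_294_419,
    "OXYLABS_PROXY": 6_754_790,
    "IPIDEA_PROXY": 3_209_313,
    "EARNFM_PROXY": 2_659_913,
    "NODEMAVEN_PROXY": 2_627_851,
    "INFATICA_PROXY": 2_335_194,
    "IPROYAL_PROXY": 2_032_027,
    "YILU_PROXY": 1_549_155,
}

# Growth multiple for every provider present in both snapshots.
growth = {
    name: current[name] / may_2025[name]
    for name in may_2025.keys() & current.keys()
}

for name, multiple in sorted(growth.items(), key=lambda item: -item[1]):
    print(f"{name}: roughly {multiple:.0f}x")
```

For these three providers the multiples come out at roughly 157x (Oxylabs), 122x (IPidea) and 62x (IPRoyal), all within the 10 to 200 times range Kilmer described.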

Reached for comment about the apparent rapid growth in their proxy network, Oxylabs (#4 on Spur’s list) said while their proxy pool did grow recently, it did so at nowhere near the rate cited by Spur.

“We don’t systematically track other providers’ figures, and we’re not aware of any instances of 10× or 100× growth, especially when it comes to a few bigger companies that are legitimate businesses,” the company said in a written statement.

Bright Data was formerly known as Luminati Networks, the name that is currently at the top of Spur’s list of the biggest residential proxy networks, with more than 11 million proxies. Bright Data likewise told KrebsOnSecurity that Spur’s current estimates of its proxy network are dramatically overstated and inaccurate.

“We did not actively initiate nor do we see any 10x or 100x expansion of our network, which leads me to believe that someone might be presenting these IPs as Bright Data’s in some way,” said Rony Shalit, Bright Data’s chief compliance and ethics officer. “In many cases in the past, due to us being the leading data collection proxy provider, IPs were falsely tagged as being part of our network, or while being used by other proxy providers for malicious activity.”

“Our network is only sourced from verified IP providers and a robust opt-in only residential peers, which we work hard and in complete transparency to obtain,” Shalit continued. “Every DC, ISP or SDK partner is reviewed and approved, and every residential peer must actively opt in to be part of our network.”

HK NETWORK

Even Spur acknowledges that Luminati and Oxylabs are unlike most other proxy services on their top proxy providers list, in that these providers actually adhere to “know-your-customer” policies, such as requiring video calls with all customers, and strictly blocking customers from reselling access.

Benjamin Brundage is founder of Synthient, a startup that helps companies detect proxy networks. Brundage said if there is increasing confusion around which proxy networks are the most worrisome, it’s because nearly all of these lesser-known proxy services have evolved into highly incestuous bandwidth resellers. What’s more, he said, some proxy providers do not appreciate being tracked and have been known to take aggressive steps to confuse systems that scan the Internet for residential proxy nodes.

Brundage said most proxy services today have created their own software development kit or SDK that other app developers can bundle with their code to earn revenue. These SDKs quietly modify the user’s device so that some portion of their bandwidth can be used to forward traffic from proxy service customers.

“Proxy providers have pools of constantly churning IP addresses,” he said. “These IP addresses are sourced through various means, such as bandwidth-sharing apps, botnets, Android SDKs, and more. These providers will often either directly approach resellers or offer a reseller program that allows users to resell bandwidth through their platform.”

Many SDK providers say they require full consent before allowing their software to be installed on end-user devices. Still, those opt-in agreements and consent checkboxes may be little more than a formality for cybercriminals like the Aisuru botmasters, who can earn a commission each time one of their infected devices is forced to install some SDK that enables one or more of these proxy services.

Depending on its structure, a single provider may operate hundreds of different proxy pools at a time — all maintained through other means, Brundage said.

“Often, you’ll see resellers maintaining their own proxy pool in addition to an upstream provider,” he said. “It allows them to market a proxy pool to high-value clients and offer an unlimited bandwidth plan for cheap reduce their own costs.”

Some proxy providers appear to be directly in league with botmasters. Brundage identified one proxy provider that was aggressively advertising cheap and plentiful bandwidth to content scraping companies. After scanning that provider’s pool of available proxies, Brundage said he found a one-to-one match with IP addresses he’d previously mapped to the Aisuru botnet.

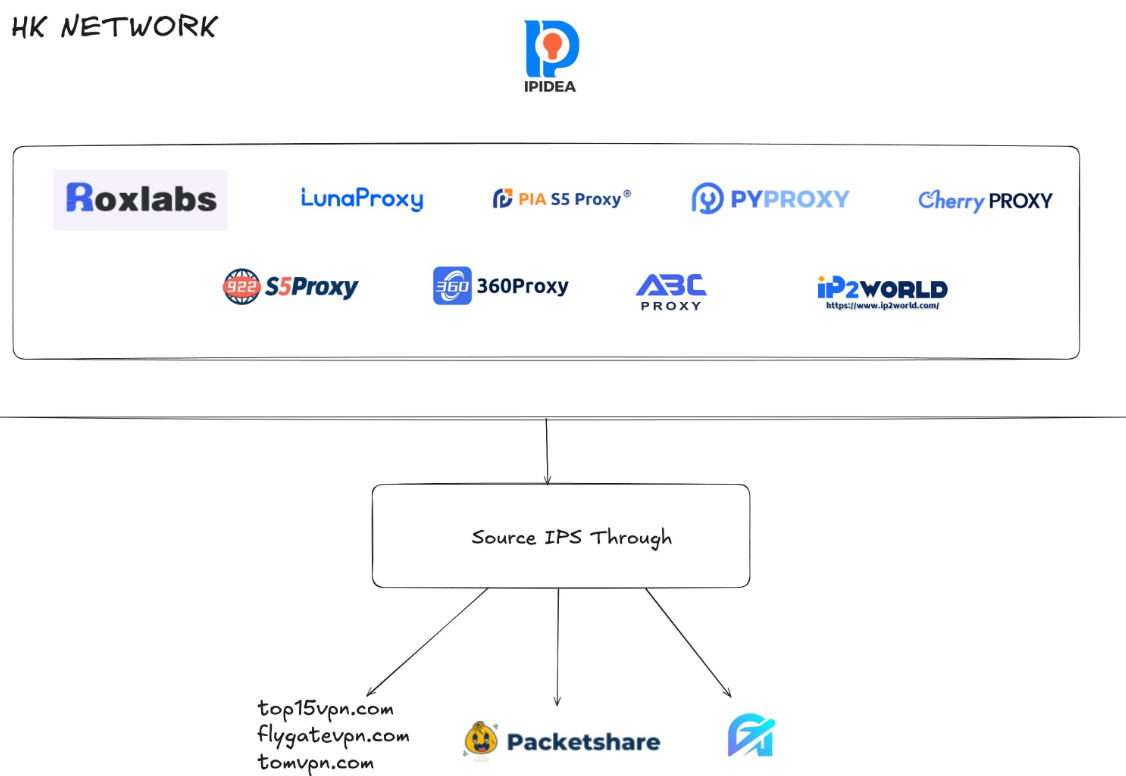

Brundage says that by almost any measurement, the world’s largest residential proxy service is IPidea, a China-based proxy network. IPidea is #5 on Spur’s Top 10, and Brundage said its brands include ABCProxy (#3), Roxlabs, LunaProxy, PIA S5 Proxy, PyProxy, 922Proxy, 360Proxy, IP2World, and Cherry Proxy. Spur’s Kilmer said they also track Yilu Proxy (#10) as IPidea.

Brundage said all of these providers operate under a corporate umbrella known on the cybercrime forums as “HK Network.”

“The way it works is there’s this whole reseller ecosystem, where IPidea will be incredibly aggressive and approach all these proxy providers with the offer, ‘Hey, if you guys buy bandwidth from us, we’ll give you these amazing reseller prices,'” Brundage explained. “But they’re also very aggressive in recruiting resellers for their apps.”

A graphic depicting the relationship between proxy providers that Synthient found are white labeling IPidea proxies. Image: Synthient.com.

Those apps include a range of low-cost and “free” virtual private networking (VPN) services that indeed allow users to enjoy a free VPN, but which also turn the user’s device into a traffic relay that can be rented to cybercriminals, or else parceled out to countless other proxy networks.

“They have all this bandwidth to offload,” Brundage said of IPidea and its sister networks. “And they can do it through their own platforms, or they go get resellers to do it for them by advertising on sketchy hacker forums to reach more people.”

One of IPidea’s core brands is 922S5Proxy, which is a not-so-subtle nod to the 911S5Proxy service that was hugely popular between 2015 and 2022. In July 2022, KrebsOnSecurity published a deep dive into 911S5Proxy’s origins and apparent owners in China. Less than a week later, 911S5Proxy announced it was closing down after the company’s servers were massively hacked.

That 2022 story named Yunhe Wang from Beijing as the apparent owner and/or manager of the 911S5 proxy service. In May 2024, the U.S. Department of Justice arrested Mr. Wang, alleging that his network was used to steal billions of dollars from financial institutions, credit card issuers, and federal lending programs. At the same time, the U.S. Treasury Department announced sanctions against Wang and two other Chinese nationals for operating 911S5Proxy.

The website for 922Proxy.

DATA SCRAPING FOR AI

In recent months, multiple experts who track botnet and proxy activity have shared that a great deal of content scraping which ultimately benefits AI companies is now leveraging these proxy networks to further obfuscate their aggressive data-slurping activity. That’s because by routing it through residential IP addresses, content scraping firms can make their traffic far trickier to filter out.

“It’s really difficult to block, because there’s a risk of blocking real people,” Spur’s Kilmer said of the LLM scraping activity that is fed through individual residential IP addresses, which are often shared by multiple customers at once.

Kilmer says the AI industry has brought a veneer of legitimacy to the residential proxy business, which has heretofore mostly been associated with sketchy affiliate money-making programs, automated abuse, and unwanted Internet traffic.

“Web crawling and scraping has always been a thing, but AI made it like a commodity, data that had to be collected,” Kilmer said. “Everybody wanted to monetize their own data pots, and how they monetize that is different across the board.”

Kilmer said many LLM-related scrapers rely on residential proxies in cases where the content provider has restricted access to their platform in some way, such as forcing interaction through an app, or keeping all content behind a login page with multi-factor authentication.

“Where the cost of data is out of reach — there is some exclusivity or reason they can’t access the data — they’ll turn to residential proxies so they look like a real person accessing that data,” Kilmer said of the content scraping efforts.

Aggressive AI crawlers increasingly are overloading community-maintained infrastructure, causing what amounts to persistent DDoS attacks on vital public resources. A report earlier this year from LibreNews found some open-source projects now see as much as 97 percent of their traffic originating from AI company bots, dramatically increasing bandwidth costs, causing service instability, and burdening already stretched-thin maintainers.

Cloudflare is now experimenting with tools that will allow content creators to charge a fee to AI crawlers to scrape their websites. The company’s “pay-per-crawl” feature is currently in a private beta, but it lets publishers set their own prices that bots must pay before scraping content.

On October 22, the social media and news network Reddit sued Oxylabs (PDF) and several other proxy providers, alleging that their systems enabled the mass-scraping of Reddit user content even though Reddit had taken steps to block such activity.

“Recognizing that Reddit denies scrapers like them access to its site, Defendants scrape the data from Google’s search results instead,” the lawsuit alleges. “They do so by masking their identities, hiding their locations, and disguising their web scrapers as regular people (among other techniques) to circumvent or bypass the security restrictions meant to stop them.”

Denas Grybauskas, chief governance and strategy officer at Oxylabs, said the company was shocked and disappointed by the lawsuit.

“Reddit has made no attempt to speak with us directly or communicate any potential concerns,” Grybauskas said in a written statement. “Oxylabs has always been and will continue to be a pioneer and an industry leader in public data collection, and it will not hesitate to defend itself against these allegations. Oxylabs’ position is that no company should claim ownership of public data that does not belong to them. It is possible that it is just an attempt to sell the same public data at an inflated price.”

As big and powerful as Aisuru may be, it is hardly the only botnet that is contributing to the overall broad availability of residential proxies. For example, on June 5 the FBI’s Internet Crime Complaint Center warned that an IoT malware threat dubbed BADBOX 2.0 had compromised millions of smart-TV boxes, digital projectors, vehicle infotainment units, picture frames, and other IoT devices.

In July 2025, Google filed a lawsuit in New York federal court against the Badbox botnet’s alleged perpetrators. Google said the Badbox 2.0 botnet “compromised more than 10 million uncertified devices running Android’s open-source software, which lacks Google’s security protections. Cybercriminals infected these devices with pre-installed malware and exploited them to conduct large-scale ad fraud and other digital crimes.”

A FAMILIAR DOMAIN NAME

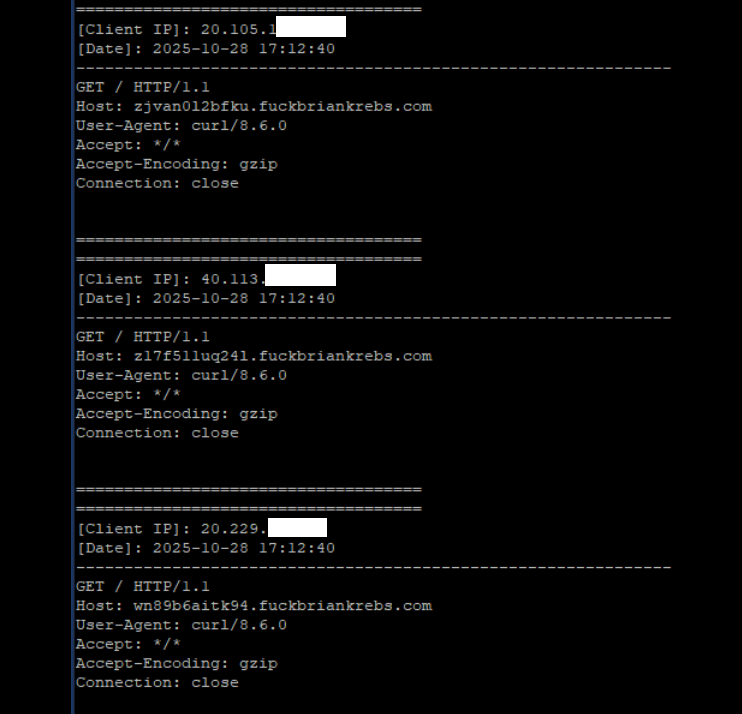

Brundage said the Aisuru botmasters have their own SDK, and for some reason part of its code tells many newly infected systems to query the domain name fuckbriankrebs[.]com. This may be little more than an elaborate “screw you” to this site’s author: One of the botnet’s alleged partners goes by the handle “Forky,” and was identified in June by KrebsOnSecurity as a young man from Sao Paulo, Brazil.

Brundage noted that only systems infected with Aisuru’s Android SDK will be forced to resolve the domain. Initially, there was some discussion about whether the domain might have some utility as a “kill switch” capable of disrupting the botnet’s operations, although Brundage and others interviewed for this story say that is unlikely.

A tiny sample of the traffic after a DNS server was enabled on the newly registered domain fuckbriankrebs dot com. Each unique IP address requested its own unique subdomain. Image: Seralys.

For one thing, they said, if the domain was somehow critical to the operation of the botnet, why was it still unregistered and actively for sale? Why indeed, we asked. Happily, the domain name was deftly snatched up last week by Philippe Caturegli, “chief hacking officer” for the security intelligence company Seralys.

Caturegli enabled a passive DNS server on that domain and within a few hours received more than 700,000 requests for unique subdomains on fuckbriankrebs[.]com.

But even with that visibility into Aisuru, it is difficult to use this domain check-in feature to measure its true size, Brundage said. After all, he said, the systems that are phoning home to the domain are only a small portion of the overall botnet.

“The bots are hardcoded to just spam lookups on the subdomains,” he said. “So anytime an infection occurs or it runs in the background, it will do one of those DNS queries.”
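To show why raw query counts from such a check-in domain overstate the number of infected systems, here is a minimal Python sketch that tallies passive DNS observations. Everything in it is hypothetical: the three-field log format (timestamp, source IP, queried name), the placeholder domain `sinkhole.example`, the function name `summarize`, and the sample lines, which use documentation-only IP ranges. It is not Seralys's tooling or data.

```python
from collections import defaultdict

SINKHOLE_DOMAIN = "sinkhole.example"  # hypothetical stand-in for the monitored domain


def summarize(log_lines):
    """Return (matching_query_count, {source_ip: set of subdomain labels})."""
    subdomains_by_ip = defaultdict(set)
    matching_queries = 0
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _timestamp, source_ip, qname = parts
        qname = qname.rstrip(".").lower()  # DNS names are case-insensitive
        if not qname.endswith("." + SINKHOLE_DOMAIN):
            continue  # not a subdomain of the monitored domain
        label = qname[: -len(SINKHOLE_DOMAIN) - 1]
        subdomains_by_ip[source_ip].add(label)
        matching_queries += 1
    return matching_queries, dict(subdomains_by_ip)


# Invented sample data for illustration only.
sample_log = [
    "2025-01-01T12:00:01Z 192.0.2.10 a1b2c3.sinkhole.example.",
    "2025-01-01T12:00:02Z 192.0.2.10 a1b2c3.sinkhole.example.",
    "2025-01-01T12:00:03Z 198.51.100.7 d4e5f6.sinkhole.example.",
    "2025-01-01T12:00:04Z 198.51.100.7 Q7R8S9.sinkhole.example",
    "2025-01-01T12:00:05Z 203.0.113.5 www.unrelated.example.",
    "malformed line",
]

total, by_ip = summarize(sample_log)
unique_subdomains = set().union(*by_ip.values())
print(f"matching queries:  {total}")
print(f"unique source IPs: {len(by_ip)}")
print(f"unique subdomains: {len(unique_subdomains)}")
```

Even unique source IPs are only a rough proxy for infected devices, since queries usually arrive via resolvers and, as Brundage notes, only part of the botnet performs these lookups at all.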

Caturegli briefly configured all subdomains on fuckbriankrebs dot com to display this ASCII art image to visiting systems today.

The domain fuckbriankrebs[.]com has a storied history. On its initial launch in 2009, it was used to spread malicious software by the Cutwail spam botnet. In 2011, the domain was involved in a notable DDoS against this website from a botnet powered by Russkill (a.k.a. “Dirt Jumper”).

Domaintools.com finds that in 2015, fuckbriankrebs[.]com was registered to an email address attributed to David “Abdilo” Crees, a 27-year-old Australian man sentenced in May 2025 to time served for cybercrime convictions related to the Lizard Squad hacking group.

Social Engineering People’s Credit Card Details

Good Wall Street Journal article on criminal gangs that scam people out of their credit card information:

Your highway toll payment is now past due, one text warns. You have U.S. Postal Service fees to pay, another threatens. You owe the New York City Department of Finance for unpaid traffic violations.

The texts are ploys to get unsuspecting victims to fork over their credit-card details. The gangs behind the scams take advantage of this information to buy iPhones, gift cards, clothing and cosmetics.

Criminal organizations operating out of China, which investigators blame for the toll and postage messages, have used them to make more than $1 billion over the last three years, according to the Department of Homeland Security...