Feed aggregator

Friday Squid Blogging: Squid Fishing Tips

This is a video of advice for squid fishing in Puget Sound.

As usual, you can also use this squid post to talk about the security stories in the news that I haven’t covered.

I Am in the Epstein Files

Once. Someone named “Vincenzo lozzo” wrote to Epstein in email, in 2016: “I wouldn’t pay too much attention to this, Schneier has a long tradition of dramatizing and misunderstanding things.” The topic of the email is DDoS attacks, and it is unclear what I am dramatizing and misunderstanding.

Rabbi Schneier is also mentioned, also incidentally, also once. As far as either of us knows, we are not related.

iPhone Lockdown Mode Protects Washington Post Reporter

404Media is reporting that the FBI could not access a reporter’s iPhone because it had Lockdown Mode enabled:

The court record shows what devices and data the FBI was able to ultimately access, and which devices it could not, after raiding the home of the reporter, Hannah Natanson, in January as part of an investigation into leaks of classified information. It also provides rare insight into the apparent effectiveness of Lockdown Mode, or at least how effective it might be before the FBI may try other techniques to access the device.

“Because the iPhone was in Lockdown mode, CART could not extract that device,” the court record reads, referring to the FBI’s Computer Analysis Response Team, a unit focused on performing forensic analyses of seized devices. The document is written by the government, and is opposing the return of Natanson’s devices...

Dragonfly v2.4.0 is released

Dragonfly v2.4.0 is released! Thanks to all of the contributors who made this Dragonfly release happen.

New features and enhancements

load-aware scheduling algorithm

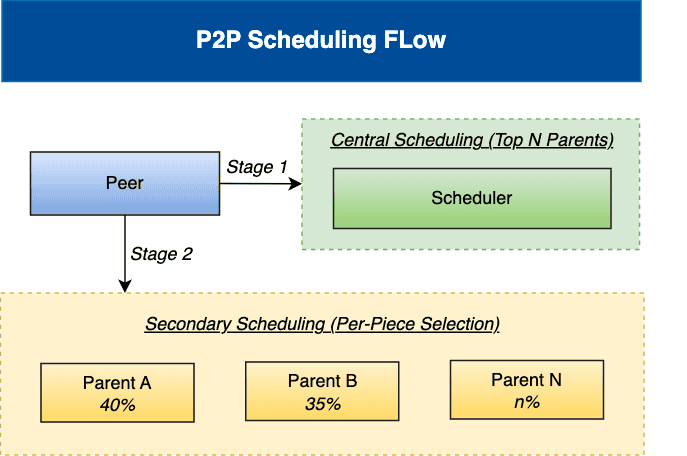

A two-stage scheduling algorithm combining central scheduling with node-level secondary scheduling to optimize P2P download performance, based on real-time load awareness.

For more information, please refer to the Scheduling.

Vortex protocol support for P2P file transfer

Dragonfly provides the new Vortex transfer protocol, based on TLV, to improve download performance within the internal network. Vortex uses the TLV (Tag-Length-Value) format as a lightweight protocol that replaces gRPC for data transfer between peers. Compared to gRPC, TCP-based Vortex reduces large file download time by 50% and QUIC-based Vortex by 40%, and both effectively reduce peak memory usage.

For more information, please refer to the TCP Protocol Support for P2P File Transfer and QUIC Protocol Support for P2P File Transfer.
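To make the Tag-Length-Value idea concrete, here is a minimal framing sketch. The field widths (1-byte tag, 4-byte big-endian length) and the tag numbers are assumptions chosen for illustration; they are not Vortex's actual wire format.

```python
import struct

# Hypothetical layout for illustration only (not Vortex's real wire format):
# 1-byte tag, 4-byte big-endian length, then `length` bytes of value.
HEADER = struct.Struct(">BI")


def encode_tlv(tag: int, value: bytes) -> bytes:
    """Prefix the payload with its tag and its length."""
    return HEADER.pack(tag, len(value)) + value


def decode_tlv(buf: bytes):
    """Yield (tag, value) pairs from a buffer of concatenated TLV frames."""
    offset = 0
    while offset < len(buf):
        if len(buf) - offset < HEADER.size:
            raise ValueError("truncated TLV header")
        tag, length = HEADER.unpack_from(buf, offset)
        offset += HEADER.size
        if len(buf) - offset < length:
            raise ValueError("truncated TLV value")
        yield tag, buf[offset:offset + length]
        offset += length


# Hypothetical tags: 1 = piece request, 2 = piece content.
stream = encode_tlv(1, b"piece-7") + encode_tlv(2, b"\x00\x01\x02")
frames = list(decode_tlv(stream))
print(frames)
```

Because each frame carries its own length, a receiver can split a byte stream into messages with a fixed-size header read followed by one payload read, which is part of why such formats are considered lightweight.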

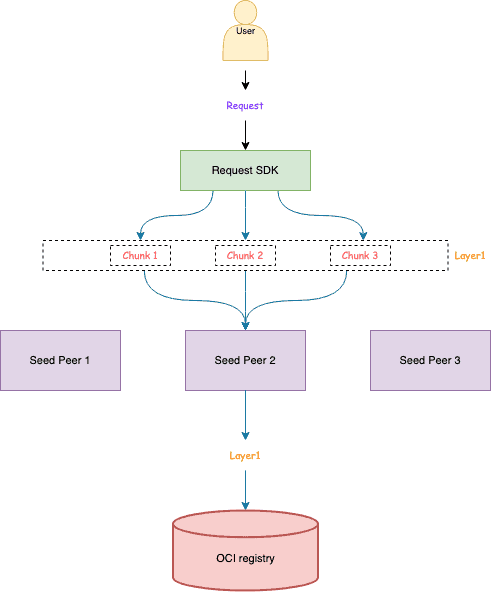

Request SDK

An SDK for routing user requests to Seed Peers using consistent hashing, replacing the previous Kubernetes Service load balancing approach.
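As a rough illustration of consistent hashing in general, the sketch below maps request keys to seed peers on a hash ring. The class name, the peer names, and the use of SHA-256 with virtual nodes are all assumptions for the example, not the Request SDK's API.

```python
import bisect
import hashlib


class HashRing:
    """Minimal consistent-hash ring with virtual nodes (illustrative only)."""

    def __init__(self, nodes, replicas=100):
        self._ring = []  # sorted list of (hash, node) points
        for node in nodes:
            for i in range(replicas):
                self._ring.append((self._hash(f"{node}#{i}"), node))
        self._ring.sort()
        self._keys = [h for h, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        # Use the first 8 bytes of SHA-256 as a 64-bit position on the ring.
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

    def lookup(self, key: str) -> str:
        """Return the node owning the first ring point after the key's hash."""
        idx = bisect.bisect(self._keys, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]


# Hypothetical seed peer names.
ring = HashRing(["seed-0", "seed-1", "seed-2"])
print(ring.lookup("task-42"))
```

The property that matters for routing is stability: when a seed peer is removed, only the keys it owned move elsewhere, so requests for the same content keep landing on the same remaining peer.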

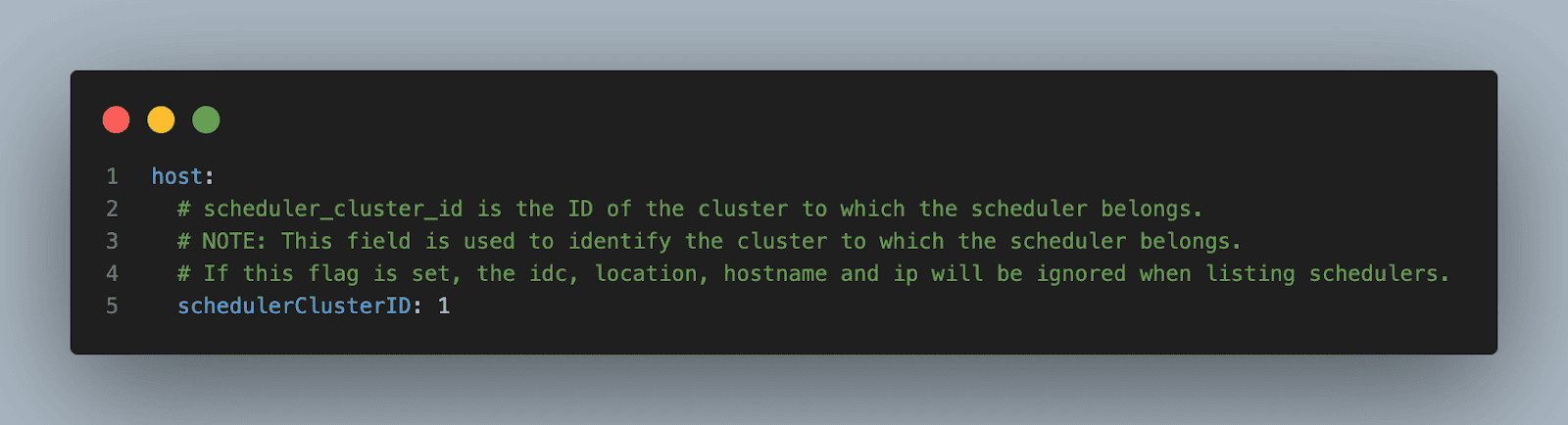

Simple multi‑cluster Kubernetes deployment with scheduler cluster ID

Dragonfly supports a simplified feature for deploying and managing multiple Kubernetes clusters by explicitly assigning a schedulerClusterID to each cluster. This approach allows users to directly control cluster affinity without relying on location‑based scheduling metadata such as IDC, hostname, or IP.

Using this feature, each Peer, Seed Peer, and Scheduler determines its target scheduler cluster through a clearly defined scheduler cluster ID. This ensures precise separation between clusters and predictable cross‑cluster behavior.

For more information, please refer to the Create Dragonfly Cluster Simple.

Performance and resource optimization for Manager and Scheduler components

Enhanced service performance and resource utilization across Manager and Scheduler components while significantly reducing CPU and memory overhead, delivering improved system efficiency and better resource management.

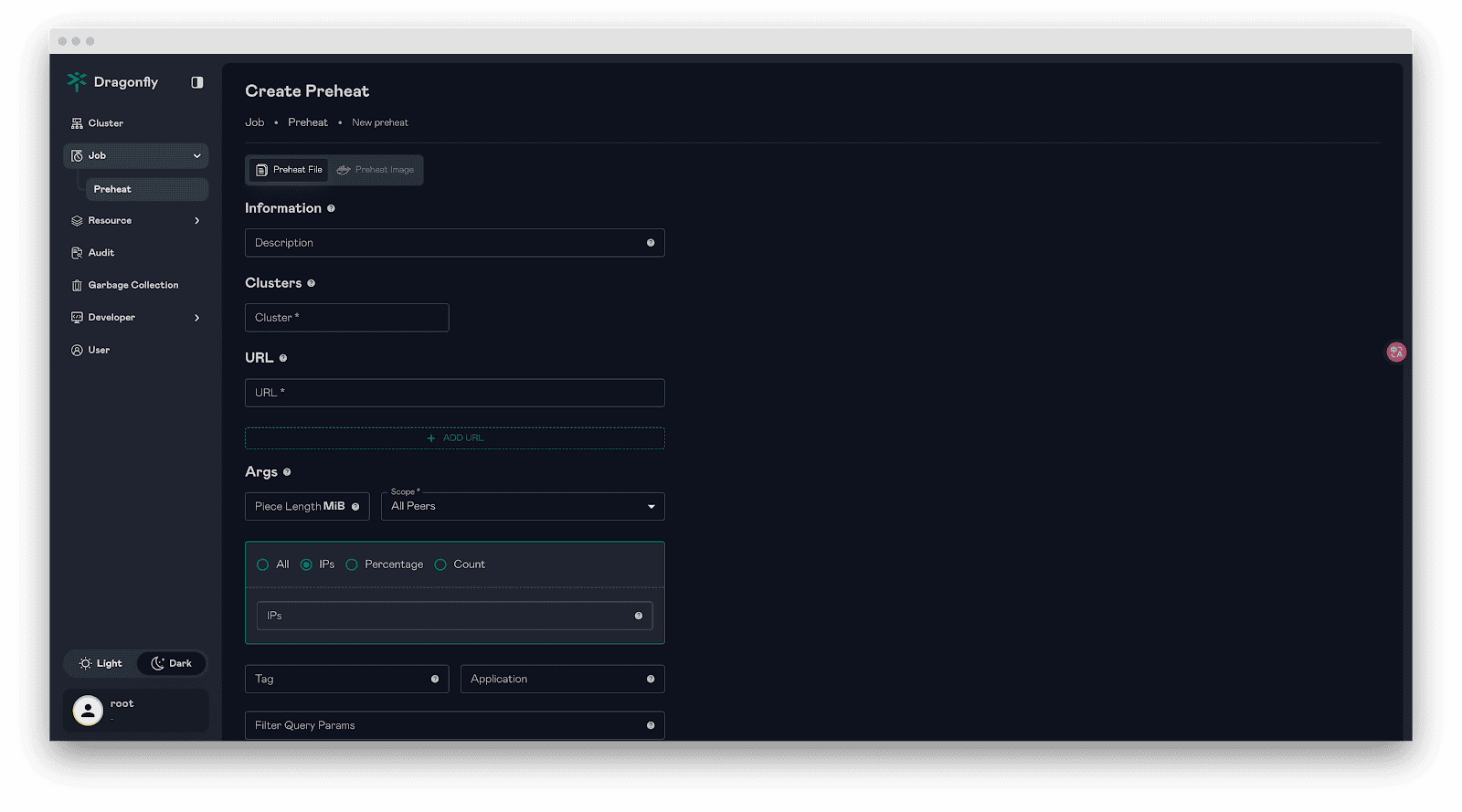

Enhanced preheating

- Support for IP-based peer selection in preheating jobs with priority-based selection logic where IP specification takes highest priority, followed by count-based and percentage-based selection.

- Support for preheating multiple URLs in a single request.

- Support for preheating file and image via Scheduler gRPC interface.
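The selection priority described in the first item can be sketched as follows. The function name, parameter names, and the rounding of the percentage are assumptions for illustration; the real preheating API may differ.

```python
import math


def select_peers(peers, ips=None, count=None, percentage=None):
    """Pick preheat targets: explicit IPs win, then count, then percentage."""
    if ips:
        wanted = set(ips)
        return [p for p in peers if p["ip"] in wanted]
    if count is not None:
        return peers[:count]
    if percentage is not None:
        # Rounding up is an assumption made for this sketch.
        return peers[:math.ceil(len(peers) * percentage / 100)]
    return list(peers)


# Hypothetical peers.
peers = [{"ip": f"10.0.0.{i}"} for i in range(1, 5)]
print(select_peers(peers, ips=["10.0.0.3"], count=2, percentage=50))
```

When several selectors are supplied at once, only the highest-priority one takes effect, so the call above returns the single peer named by IP.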

Calculate task ID based on image blob SHA256 to avoid redundant downloads

The Client now supports calculating task IDs directly from the SHA256 hash of image blobs, instead of using the download URL. This enhancement prevents redundant downloads and data duplication when the same blob is accessed from different registry domains.
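The effect can be illustrated with a small sketch. The hashing scheme and the registry hostnames here are assumptions; the client's real task ID calculation may include other inputs. Only the blob URL shape (`/v2/<name>/blobs/sha256:<hex>`) follows the OCI distribution convention.

```python
import hashlib
import re

DIGEST_RE = re.compile(r"sha256:[0-9a-f]{64}")


def task_id_from_url(url: str) -> str:
    """URL-based ID: the same blob on two registries gets two IDs."""
    return hashlib.sha256(url.encode()).hexdigest()


def task_id_from_blob(url: str) -> str:
    """Digest-based ID: derived only from the blob's SHA256 digest."""
    match = DIGEST_RE.search(url)
    if match is None:
        return task_id_from_url(url)  # not a blob URL, fall back to the URL
    return hashlib.sha256(match.group(0).encode()).hexdigest()


# Hypothetical registries serving the same blob.
digest = "sha256:" + hashlib.sha256(b"layer bytes").hexdigest()
url_a = f"https://registry-a.example/v2/library/app/blobs/{digest}"
url_b = f"https://registry-b.example/v2/library/app/blobs/{digest}"

print(task_id_from_url(url_a) == task_id_from_url(url_b))
print(task_id_from_blob(url_a) == task_id_from_blob(url_b))
```

With URL-based IDs the two downloads look like different tasks, while digest-based IDs let the second request reuse the data already fetched for the first.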

Cache HTTP 307 redirects for split downloads

Support for caching HTTP 307 (Temporary Redirect) responses to optimize Dragonfly’s multi-piece download performance. When a download URL is split into multiple pieces, the redirect target is now cached, eliminating redundant redirect requests and reducing latency.
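A minimal sketch of the idea, assuming a caller-supplied `resolve` function that performs the redirect lookup. The class, the TTL, and the URLs are hypothetical and are not Dragonfly's implementation.

```python
import time


class RedirectCache:
    """Remember the redirect target of a URL for a limited time."""

    def __init__(self, resolve, ttl_seconds=60.0, clock=time.monotonic):
        self._resolve = resolve  # callable: url -> redirect target
        self._ttl = ttl_seconds  # 307 is temporary, so entries expire
        self._clock = clock
        self._entries = {}  # url -> (target, expires_at)

    def target_for(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is None or entry[1] <= now:
            entry = (self._resolve(url), now + self._ttl)
            self._entries[url] = entry
        return entry[0]


calls = []


def fake_resolve(url):
    """Stand-in for an HTTP request that follows one 307 redirect."""
    calls.append(url)
    return "https://cdn.example/blob?sig=abc"


cache = RedirectCache(fake_resolve)
# Four piece downloads of the same URL trigger a single redirect lookup.
targets = [cache.target_for("https://origin.example/blob") for _ in range(4)]
print(len(calls))
```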

Go Client deprecated and replaced by Rust client

The Go client has been deprecated and replaced by the Rust Client. All future development and maintenance will focus exclusively on the Rust client, which offers improved performance, stability, and reliability.

For more information, please refer to the dragonflyoss/client.

Additional enhancements

- Enable 64K page size support for ARM64 in the Dragonfly Rust client.

- Fix missing git commit metadata in dfget version output.

- Support for config_path of io.containerd.cri.v1.images plugin for containerd V3 configuration.

- Replace the glibc DNS resolver with hickory-dns in reqwest to implement DNS caching and prevent excessive DNS lookups during piece downloads.

- Support for the --include-files flag to selectively download files from a directory.

- Add the --no-progress flag to disable the download progress bar output.

- Support for custom request headers in backend operations, enabling flexible header configuration for HTTP requests.

- Refactored log output to reduce redundant logging and improve overall logging efficiency.

Significant bug fixes

- Modified the database field type from text to longtext to support storing the information of preheating job.

- Fixed panic on repeated seed peer service stops during Scheduler shutdown.

- Fixed broker authentication failure when specifying the Redis password without setting a username.

Nydus

New features and enhancements

- Nydusd: Add CRC32 validation support for both RAFS V5 and V6 formats, enhancing data integrity verification.

- Nydusd: Support resending FUSE requests during nydusd restoration, improving daemon recovery reliability.

- Nydusd: Enhance VFS state saving mechanism for daemon hot upgrade and failover.

- Nydusify: Introduce Nydus-to-OCI reverse conversion capability, enabling seamless migration back to OCI format.

- Nydusify: Implement zero-disk transfer for image copy, significantly reducing local disk usage during copy operations.

- Snapshotter: Build blob.meta into the bootstrap to improve blob fetch reliability for RAFS v6 images.

Significant bug fixes

- Nydusd: Fix auth token fetching for access_token field in registry authentication.

- Nydusd: Add recursive inode/dentry invalidation for umount API.

- Nydus Image: Fix multiple issues in optimize subcommand and add backend configuration support.

- Snapshotter: Implement lazy parent recovery for proxy mode to handle missing parent snapshots.

We encourage you to visit the d7y.io website to find out more.

Others

You can see CHANGELOG for more details.

Links

- Dragonfly Website: https://d7y.io/

- Dragonfly Repository: https://github.com/dragonflyoss/dragonfly

- Dragonfly Client Repository: https://github.com/dragonflyoss/client

- Dragonfly Console Repository: https://github.com/dragonflyoss/console

- Dragonfly Charts Repository: https://github.com/dragonflyoss/helm-charts

- Dragonfly Monitor Repository: https://github.com/dragonflyoss/monitoring

Dragonfly Github

Backdoor in Notepad++

Hackers associated with the Chinese government used a Trojaned version of Notepad++ to deliver malware to selected users.

Notepad++ said that officials with the unnamed provider hosting the update infrastructure consulted with incident responders and found that it remained compromised until September 2. Even then, the attackers maintained credentials to the internal services until December 2, a capability that allowed them to continue redirecting selected update traffic to malicious servers. The threat actor “specifically targeted Notepad++ domain with the goal of exploiting insufficient update verification controls that existed in older versions of Notepad++.” Event logs indicate that the hackers tried to re-exploit one of the weaknesses after it was fixed but that the attempt failed...

Kubewarden 1.32 Release

US Declassifies Information on JUMPSEAT Spy Satellites

The US National Reconnaissance Office has declassified information about a fleet of spy satellites operating between 1971 and 2006.

I’m actually impressed to see a declassification only two decades after decommission.

Stopping Bad Bots Without Blocking the Good Ones

Microsoft is Giving the FBI BitLocker Keys

Microsoft gives the FBI the ability to decrypt BitLocker in response to court orders: about twenty times per year.

It’s possible for users to store those keys on a device they own, but Microsoft also recommends BitLocker users store their keys on its servers for convenience. While that means someone can access their data if they forget their password, or if repeated failed attempts to login lock the device, it also makes them vulnerable to law enforcement subpoenas and warrants.

Introducing Node Readiness Controller

In the standard Kubernetes model, a node’s suitability for workloads hinges on a single binary "Ready" condition. However, in modern Kubernetes environments, nodes require complex infrastructure dependencies—such as network agents, storage drivers, GPU firmware, or custom health checks—to be fully operational before they can reliably host pods.

Today, on behalf of the Kubernetes project, I am announcing the Node Readiness Controller. This project introduces a declarative system for managing node taints, extending the readiness guardrails during node bootstrapping beyond standard conditions. By dynamically managing taints based on custom health signals, the controller ensures that workloads are only placed on nodes that meet all infrastructure-specific requirements.

Why the Node Readiness Controller?

Core Kubernetes Node "Ready" status is often insufficient for clusters with sophisticated bootstrapping requirements. Operators frequently struggle to ensure that specific DaemonSets or local services are healthy before a node enters the scheduling pool.

The Node Readiness Controller fills this gap by allowing operators to define custom scheduling gates tailored to specific node groups. This enables you to enforce distinct readiness requirements across heterogeneous clusters, ensuring, for example, that GPU-equipped nodes only accept pods once specialized drivers are verified, while general-purpose nodes follow a standard path.

It provides three primary advantages:

- Custom Readiness Definitions: Define what ready means for your specific platform.

- Automated Taint Management: The controller automatically applies or removes node taints based on condition status, preventing pods from landing on unready infrastructure.

- Declarative Node Bootstrapping: Manage multi-step node initialization reliably, with clear observability into the bootstrapping process.

Core concepts and features

The controller centers around the NodeReadinessRule (NRR) API, which allows you to define declarative gates for your nodes.

Flexible enforcement modes

The controller supports two distinct operational modes:

- Continuous enforcement: Actively maintains the readiness guarantee throughout the node’s entire lifecycle. If a critical dependency (like a device driver) fails later, the node is immediately tainted to prevent new scheduling.

- Bootstrap-only enforcement: Specifically for one-time initialization steps, such as pre-pulling heavy images or hardware provisioning. Once conditions are met, the controller marks the bootstrap as complete and stops monitoring that specific rule for the node.

Condition reporting

The controller reacts to Node Conditions rather than performing health checks itself. This decoupled design allows it to integrate seamlessly with other tools existing in the ecosystem as well as custom solutions:

- Node Problem Detector (NPD): Use existing NPD setups and custom scripts to report node health.

- Readiness Condition Reporter: A lightweight agent provided by the project that can be deployed to periodically check local HTTP endpoints and patch node conditions accordingly.

Operational safety with dry run

Deploying new readiness rules across a fleet carries inherent risk. To mitigate this, dry run mode allows operators to first simulate impact on the cluster. In this mode, the controller logs intended actions and updates the rule's status to show affected nodes without applying actual taints, enabling safe validation before enforcement.
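The interplay of the two enforcement modes and dry run can be modeled in a few lines. This is a toy sketch under simplifying assumptions (one condition per rule, in-memory nodes, hypothetical class and field names); it is not the controller's code or API.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    condition_type: str
    required_status: str
    taint_key: str
    enforcement_mode: str = "continuous"  # or "bootstrap-only"
    dry_run: bool = False


@dataclass
class Node:
    name: str
    conditions: dict  # condition type -> status string
    taints: set = field(default_factory=set)
    bootstrapped: set = field(default_factory=set)  # taint keys already completed


def reconcile(rule: Rule, node: Node) -> str:
    """Return the action taken (or, in dry run, the action that would be taken)."""
    if rule.enforcement_mode == "bootstrap-only" and rule.taint_key in node.bootstrapped:
        return "skip"  # bootstrap finished: the rule is no longer monitored
    satisfied = node.conditions.get(rule.condition_type) == rule.required_status
    if satisfied:
        action = "remove-taint" if rule.taint_key in node.taints else "none"
    else:
        action = "add-taint" if rule.taint_key not in node.taints else "none"
    if rule.dry_run:
        return f"dry-run:{action}"  # report only, leave the node untouched
    if action == "add-taint":
        node.taints.add(rule.taint_key)
    elif action == "remove-taint":
        node.taints.discard(rule.taint_key)
    if satisfied and rule.enforcement_mode == "bootstrap-only":
        node.bootstrapped.add(rule.taint_key)
    return action


rule = Rule("cniplugin.example.net/NetworkReady", "True",
            "readiness.k8s.io/acme.com/network-unavailable",
            enforcement_mode="bootstrap-only")
node = Node("worker-1", {"cniplugin.example.net/NetworkReady": "False"})
print(reconcile(rule, node))
node.conditions["cniplugin.example.net/NetworkReady"] = "True"
print(reconcile(rule, node))
```

In this model a bootstrap-only rule ignores later condition changes once it has been satisfied, whereas a continuous rule would re-apply the taint.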

Example: CNI bootstrapping

The following NodeReadinessRule ensures a node remains unschedulable until its CNI agent is functional. The controller monitors a custom cniplugin.example.net/NetworkReady condition and only removes the readiness.k8s.io/acme.com/network-unavailable taint once the status is True.

apiVersion: readiness.node.x-k8s.io/v1alpha1
kind: NodeReadinessRule
metadata:
  name: network-readiness-rule
spec:
  conditions:
    - type: "cniplugin.example.net/NetworkReady"
      requiredStatus: "True"
  taint:
    key: "readiness.k8s.io/acme.com/network-unavailable"
    effect: "NoSchedule"
    value: "pending"
  enforcementMode: "bootstrap-only"
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/worker: ""


Getting involved

The Node Readiness Controller is just getting started, with our initial releases out, and we are seeking community feedback to refine the roadmap. Following our productive Unconference discussions at KubeCon NA 2025, we are excited to continue the conversation in person.

Join us at KubeCon + CloudNativeCon Europe 2026 for our maintainer track session: Addressing Non-Deterministic Scheduling: Introducing the Node Readiness Controller.

In the meantime, you can contribute or track our progress here:

- GitHub: https://sigs.k8s.io/node-readiness-controller

- Slack: Join the conversation in #sig-node-readiness-controller

- Documentation: Getting Started

You own your availability: resilience in the age of third-party dependencies

AI Coding Assistants Secretly Copying All Code to China

There’s a new report about two AI coding assistants, used by 1.5 million developers, that are surreptitiously sending a copy of everything they ingest to China.

Maybe avoid using them.

IT automation with agentic AI: Introducing the MCP server for Red Hat Ansible Automation Platform

Friday Squid Blogging: New Squid Species Discovered

A new species of squid pretends to be a plant:

Scientists have filmed a never-before-seen species of deep-sea squid burying itself upside down in the seafloor—a behavior never documented in cephalopods. They captured the bizarre scene while studying the depths of the Clarion-Clipperton Zone (CCZ), an abyssal plain in the Pacific Ocean targeted for deep-sea mining.

The team described the encounter in a study published Nov. 25 in the journal Ecology, writing that the animal appears to be an undescribed species of whiplash squid. At a depth of roughly 13,450 feet (4,100 meters), the squid had buried almost its entire body in sediment and was hanging upside down, with its siphon and two long ...

New Conversion from cgroup v1 CPU Shares to v2 CPU Weight

I'm excited to announce the implementation of an improved conversion formula from cgroup v1 CPU shares to cgroup v2 CPU weight. This enhancement addresses critical issues with CPU priority allocation for Kubernetes workloads when running on systems with cgroup v2.

Background

Kubernetes was originally designed with cgroup v1 in mind, where CPU shares were defined simply by assigning the container's CPU requests in millicpu form.

For example, a container requesting 1 CPU (1024m) would get (cpu.shares = 1024).

After a while, cgroup v1 started being replaced by its successor, cgroup v2. In cgroup v2, the concept of CPU shares (which ranges from 2 to 262144, or from 2¹ to 2¹⁸) was replaced with CPU weight (which ranges from [1, 10000], or 10⁰ to 10⁴).

With the transition to cgroup v2, KEP-2254 introduced a conversion formula to map cgroup v1 CPU shares to cgroup v2 CPU weight. The conversion formula was defined as: cpu.weight = (1 + ((cpu.shares - 2) * 9999) / 262142)

This formula linearly maps values from [2¹, 2¹⁸] to [10⁰, 10⁴].
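As a quick check of the linear mapping, the formula can be evaluated directly. The function name, the clamping of the input, and the use of integer division are assumptions of this sketch; integer division reproduces the weights quoted in this post.

```python
def linear_cpu_weight(cpu_shares: int) -> int:
    """Previous conversion: linear map from [2, 262144] to [1, 10000]."""
    cpu_shares = max(2, min(cpu_shares, 262144))  # clamp to the valid shares range
    return 1 + ((cpu_shares - 2) * 9999) // 262142


for shares in (2, 102, 1024, 262144):
    print(shares, linear_cpu_weight(shares))
```

The endpoints land exactly on 1 and 10000, while typical container values such as 102 or 1024 shares end up at 4 and 39, in the bottom fraction of a percent of the weight range.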

While this approach is simple, the linear mapping imposes a few significant problems and impacts both performance and configuration granularity.

Problems with previous conversion formula

The current conversion formula creates two major issues:

1. Reduced priority against non-Kubernetes workloads

In cgroup v1, the default value for CPU shares is 1024, meaning a container requesting 1 CPU has equal priority with system processes that live outside of Kubernetes' scope.

However, in cgroup v2, the default CPU weight is 100, but the current formula converts 1 CPU (1024m) to only ≈39 weight - less than 40% of the default.

Example:

- Container requesting 1 CPU (1024m)

- cgroup v1: cpu.shares = 1024 (equal to default)

- cgroup v2 (current): cpu.weight = 39 (much lower than default 100)

This means that after moving to cgroup v2, Kubernetes (or OCI) workloads would de facto reduce their CPU priority against non-Kubernetes processes. The problem can be severe for setups with many system daemons that run outside of Kubernetes' scope and expect Kubernetes workloads to have priority, especially in situations of resource starvation.

2. Unmanageable granularity

The current formula produces very low values for small CPU requests, limiting the ability to create sub-cgroups within containers for fine-grained resource distribution (which will possibly be much easier moving forward, see KEP #5474 for more info).

Example:

- Container requesting 100m CPU

- cgroup v1: cpu.shares = 102

- cgroup v2 (current): cpu.weight = 4 (too low for sub-cgroup configuration)

With cgroup v1, a request of 100m CPU led to 102 CPU shares, which was manageable in the sense that sub-cgroups could be created inside the main container, assigning fine-grained CPU priorities to different groups of processes. With cgroup v2, however, a weight of 4 is very hard to distribute between sub-cgroups because it is not granular enough.

With plans to allow writable cgroups for unprivileged containers, this becomes even more relevant.

New conversion formula

Description

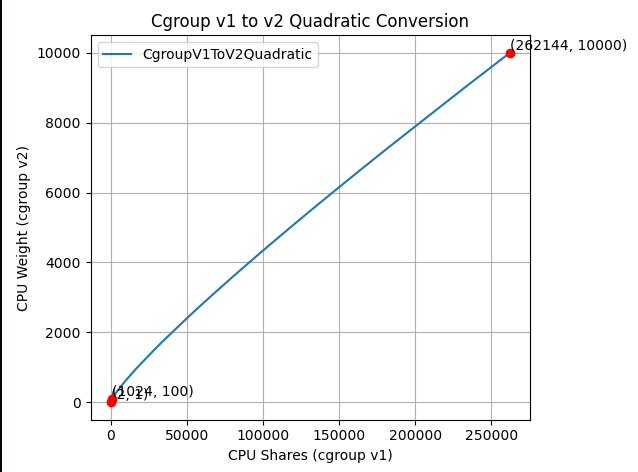

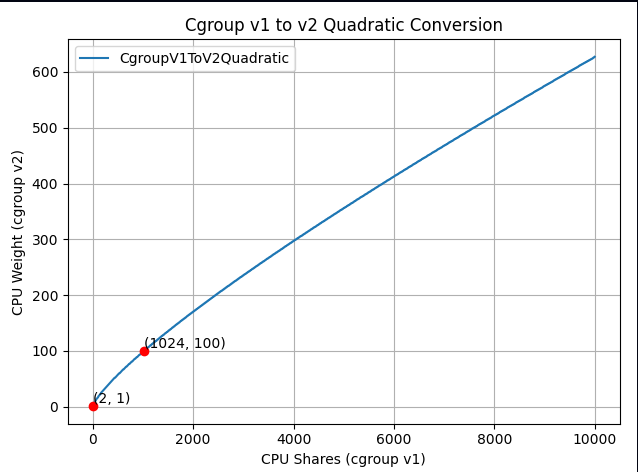

The new formula is more complicated, but does a much better job mapping between cgroup v1 CPU shares and cgroup v2 CPU weight:

$$\text{cpu.weight} = \left\lceil 10^{\,L^{2}/612 + 125L/612 - 7/34} \right\rceil, \quad \text{where } L = \log_2(\text{cpu.shares})$$

The exponent is a quadratic function of L, chosen so that the curve passes through the following points:

- (2, 1): The minimum values for both ranges.

- (1024, 100): The default values for both ranges.

- (262144, 10000): The maximum values for both ranges.

Visually, the new function looks as follows:

And if you zoom in to the important part:

The new formula is "close to linear", yet it is carefully designed so that the curve passes through the three important points above.
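The quadratic formula can be evaluated directly to see how it behaves at the anchor points. This is a sketch of the published formula, not a copy of any runtime's code; the function name and the clamping of input and output to their valid ranges are assumptions added here.

```python
import math


def quadratic_cpu_weight(cpu_shares: int) -> int:
    """New conversion: 10 raised to a quadratic in log2(cpu.shares), rounded up."""
    cpu_shares = max(2, min(cpu_shares, 262144))  # clamp to the valid shares range
    l = math.log2(cpu_shares)
    exponent = (l * l + 125 * l) / 612 - 7 / 34
    weight = math.ceil(10 ** exponent)
    return max(1, min(weight, 10000))  # clamp against floating-point overshoot


for shares in (2, 102, 1024, 262144):
    print(shares, quadratic_cpu_weight(shares))
```

At the minimum the exponent is exactly 0, and at 1024 and 262144 shares it is 2 and 4 up to floating-point rounding, which is why the result is clamped at the top of the range. For 102 shares (a 100m request) the sketch yields a weight of 17.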

How it solves the problems

- Better priority alignment: A container requesting 1 CPU (1024m) will now get cpu.weight = 102. This value is close to cgroup v2's default of 100, which restores the intended priority relationship between Kubernetes workloads and system processes.

- Improved granularity: A container requesting 100m CPU will get cpu.weight = 17 (see here), which enables better fine-grained resource distribution within containers.

Adoption and integration

This change was implemented at the OCI layer. In other words, this is not implemented in Kubernetes itself; therefore the adoption of the new conversion formula depends solely on the OCI runtime adoption.

For example:

- runc: The new formula is enabled from version 1.3.2.

- crun: The new formula is enabled from version 1.23.

Impact on existing deployments

Important: Some consumers may be affected if they assume the older linear conversion formula. Applications or monitoring tools that directly calculate expected CPU weight values based on the previous formula may need updates to account for the new quadratic conversion. This is particularly relevant for:

- Custom resource management tools that predict CPU weight values.

- Monitoring systems that validate or expect specific weight values.

- Applications that programmatically set or verify CPU weight values.

The Kubernetes project recommends testing the new conversion formula in non-production environments before upgrading OCI runtimes to ensure compatibility with existing tooling.

Where can I learn more?

For those interested in this enhancement:

- Kubernetes GitHub Issue #131216 - Detailed technical analysis and examples, including discussions and reasoning for choosing the above formula.

- KEP-2254: cgroup v2 - Original cgroup v2 implementation in Kubernetes.

- Kubernetes cgroup documentation - Current resource management guidance.

How do I get involved?

For those interested in getting involved with Kubernetes node-level features, join the Kubernetes Node Special Interest Group. We always welcome new contributors and diverse perspectives on resource management challenges.

AIs Are Getting Better at Finding and Exploiting Security Vulnerabilities

From an Anthropic blog post:

In a recent evaluation of AI models’ cyber capabilities, current Claude models can now succeed at multistage attacks on networks with dozens of hosts using only standard, open-source tools, instead of the custom tools needed by previous generations. This illustrates how barriers to the use of AI in relatively autonomous cyber workflows are rapidly coming down, and highlights the importance of security fundamentals like promptly patching known vulnerabilities.

[…]

A notable development during the testing of Claude Sonnet 4.5 is that the model can now succeed on a minority of the networks without the custom cyber toolkit needed by previous generations. In particular, Sonnet 4.5 can now exfiltrate all of the (simulated) personal information in a high-fidelity simulation of the Equifax data breach—one of the costliest cyber attacks in history—using only a Bash shell on a widely-available Kali Linux host (standard, open-source tools for penetration testing; not a custom toolkit). Sonnet 4.5 accomplishes this by instantly recognizing a publicized CVE and writing code to exploit it without needing to look it up or iterate on it. Recalling that the original Equifax breach happened by exploiting a publicized CVE that had not yet been patched, the prospect of highly competent and fast AI agents leveraging this approach underscores the pressing need for security best practices like prompt updates and patches...

How Banco do Brasil uses hyperautomation and platform engineering to drive efficiency

From if to how: A year of post-quantum reality

Ingress NGINX: Statement from the Kubernetes Steering and Security Response Committees

In March 2026, Kubernetes will retire Ingress NGINX, a piece of critical infrastructure for about half of cloud native environments. The retirement of Ingress NGINX was announced for March 2026, after years of public warnings that the project was in dire need of contributors and maintainers. There will be no more releases for bug fixes, security patches, or any updates of any kind after the project is retired. This cannot be ignored, brushed off, or left until the last minute to address. We cannot overstate the severity of this situation or the importance of beginning migration to alternatives like Gateway API or one of the many third-party Ingress controllers immediately.

To be abundantly clear: choosing to remain with Ingress NGINX after its retirement leaves you and your users vulnerable to attack. None of the available alternatives are direct drop-in replacements. This will require planning and engineering time. Half of you will be affected. You have two months left to prepare.

Existing deployments will continue to work, so unless you proactively check, you may not know you are affected until you are compromised. In most cases, you can check to find out whether or not you rely on Ingress NGINX by running kubectl get pods --all-namespaces --selector app.kubernetes.io/name=ingress-nginx with cluster administrator permissions.

Despite its broad appeal and widespread use by companies of all sizes, and repeated calls for help from the maintainers, the Ingress NGINX project never received the contributors it so desperately needed. According to internal Datadog research, about 50% of cloud native environments currently rely on this tool, and yet for the last several years, it has been maintained solely by one or two people working in their free time. Without sufficient staffing to maintain the tool to a standard both ourselves and our users would consider secure, the responsible choice is to wind it down and refocus efforts on modern alternatives like Gateway API.

We did not make this decision lightly; as inconvenient as it is now, doing so is necessary for the safety of all users and the ecosystem as a whole. Unfortunately, the flexibility Ingress NGINX was designed with, that was once a boon, has become a burden that cannot be resolved. With the technical debt that has piled up, and fundamental design decisions that exacerbate security flaws, it is no longer reasonable or even possible to continue maintaining the tool even if resources did materialize.

We issue this statement together to reinforce the scale of this change and the potential for serious risk to a significant percentage of Kubernetes users if this issue is ignored. It is imperative that you check your clusters now. If you are reliant on Ingress NGINX, you must begin planning for migration.

Thank you,

Kubernetes Steering Committee

Kubernetes Security Response Committee

Experimenting with Gateway API using kind

This document will guide you through setting up a local experimental environment with Gateway API on kind. This setup is designed for learning and testing. It helps you understand Gateway API concepts without production complexity.

Caution:

This is an experimental learning setup and should not be used for production. The components used in this document are not suited for production usage. Once you're ready to deploy Gateway API in a production environment, select an implementation that suits your needs.

Overview

In this guide, you will:

- Set up a local Kubernetes cluster using kind (Kubernetes in Docker)

- Deploy cloud-provider-kind, which provides both LoadBalancer Services and a Gateway API controller

- Create a Gateway and HTTPRoute to route traffic to a demo application

- Test your Gateway API configuration locally

This setup is ideal for learning, development, and experimentation with Gateway API concepts.

Prerequisites

Before you begin, ensure you have the following installed on your local machine:

- Docker - Required to run kind and cloud-provider-kind

- kubectl - The Kubernetes command-line tool

- kind - Kubernetes in Docker

- curl - Required to test the routes

Create a kind cluster

Create a new kind cluster by running:

kind create cluster

This will create a single-node Kubernetes cluster running in a Docker container.

Install cloud-provider-kind

Next, you need cloud-provider-kind, which provides two key components for this setup:

- A LoadBalancer controller that assigns addresses to LoadBalancer-type Services

- A Gateway API controller that implements the Gateway API specification

It also automatically installs the Gateway API Custom Resource Definitions (CRDs) in your cluster.

Run cloud-provider-kind as a Docker container on the same host where you created the kind cluster:

VERSION="$(basename $(curl -s -L -o /dev/null -w '%{url_effective}' https://github.com/kubernetes-sigs/cloud-provider-kind/releases/latest))"

docker run -d --name cloud-provider-kind --rm --network host -v /var/run/docker.sock:/var/run/docker.sock registry.k8s.io/cloud-provider-kind/cloud-controller-manager:${VERSION}

Note: On some systems, you may need elevated privileges to access the Docker socket.

Verify that cloud-provider-kind is running:

docker ps --filter name=cloud-provider-kind

You should see the container listed and in a running state. You can also check the logs:

docker logs cloud-provider-kind

Experimenting with Gateway API

Now that your cluster is set up, you can start experimenting with Gateway API resources.

cloud-provider-kind automatically provisions a GatewayClass called cloud-provider-kind. You'll use this class to create your Gateway.

It is worth noting that although kind is not a cloud provider, the project is named cloud-provider-kind because it provides features that simulate a cloud-enabled environment.

Deploy a Gateway

The following manifest will:

- Create a new namespace called gateway-infra

- Deploy a Gateway that listens on port 80

- Accept HTTPRoutes with hostnames matching the *.exampledomain.example pattern

- Allow routes from any namespace to attach to the Gateway.

Note: In real clusters, prefer Same or Selector values on the allowedRoutes namespace selector field to limit attachments.

Apply the following manifest:

---
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-infra
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: gateway
  namespace: gateway-infra
spec:
  gatewayClassName: cloud-provider-kind
  listeners:
  - name: default
    hostname: "*.exampledomain.example"
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All

Then verify that your Gateway is properly programmed and has an address assigned:

kubectl get gateway -n gateway-infra gateway

Expected output:

NAME      CLASS                 ADDRESS      PROGRAMMED   AGE
gateway   cloud-provider-kind   172.18.0.3   True         5m6s

The PROGRAMMED column should show True, and the ADDRESS field should contain an IP address.

Deploy a demo application

Next, deploy a simple echo application that will help you test your Gateway configuration. This application:

- Listens on port 3000

- Echoes back request details including path, headers, and environment variables

- Runs in a namespace called demo

Apply the following manifest:

apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  ports:
  - name: http
    port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app.kubernetes.io/name: echo
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: echo
  template:
    metadata:
      labels:
        app.kubernetes.io/name: echo
    spec:
      containers:
      - env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: registry.k8s.io/gateway-api/echo-basic:v20251204-v1.4.1
        name: echo-basic

Create an HTTPRoute

Now create an HTTPRoute to route traffic from your Gateway to the echo application. This HTTPRoute will:

- Respond to requests for the hostname some.exampledomain.example

- Route traffic to the echo application

- Attach to the Gateway in the gateway-infra namespace

Apply the following manifest:

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: echo
  namespace: demo
spec:
  parentRefs:
  - name: gateway
    namespace: gateway-infra
  hostnames: ["some.exampledomain.example"]
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: echo
      port: 3000
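For the route to attach, its hostname has to match the listener's wildcard hostname. The following is a simplified sketch of the suffix-match rule that the Gateway API specification describes for wildcard hostnames; the function name is hypothetical, and wildcards on the route side are not handled.

```python
def hostname_matches(listener_hostname: str, route_hostname: str) -> bool:
    """Check a concrete route hostname against a listener hostname."""
    pattern = listener_hostname.lower()
    hostname = route_hostname.lower()
    if pattern.startswith("*."):
        suffix = pattern[1:]  # e.g. ".exampledomain.example"
        # At least one extra label must precede the suffix.
        return hostname.endswith(suffix) and len(hostname) > len(suffix)
    return pattern == hostname


print(hostname_matches("*.exampledomain.example", "some.exampledomain.example"))
print(hostname_matches("*.exampledomain.example", "exampledomain.example"))
```

Under this rule the bare domain exampledomain.example does not match the wildcard, so a route using it would not be accepted by the listener in this guide.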

Test your route

The final step is to test your route using curl. You'll make a request to the Gateway's IP address with the hostname some.exampledomain.example. The command below is for POSIX shell only, and may need to be adjusted for your environment:

GW_ADDR=$(kubectl get gateway -n gateway-infra gateway -o jsonpath='{.status.addresses[0].value}')

curl --resolve some.exampledomain.example:80:${GW_ADDR} http://some.exampledomain.example

You should receive a JSON response similar to this:

{
  "path": "/",
  "host": "some.exampledomain.example",
  "method": "GET",
  "proto": "HTTP/1.1",
  "headers": {
    "Accept": [
      "*/*"
    ],
    "User-Agent": [
      "curl/8.15.0"
    ]
  },
  "namespace": "demo",
  "ingress": "",
  "service": "",
  "pod": "echo-dc48d7cf8-vs2df"
}

If you see this response, congratulations! Your Gateway API setup is working correctly.

Troubleshooting

If something isn't working as expected, you can troubleshoot by checking the status of your resources.

Check the Gateway status

First, inspect your Gateway resource:

kubectl get gateway -n gateway-infra gateway -o yaml

Look at the status section for conditions. Your Gateway should have:

- Accepted: True - The Gateway was accepted by the controller

- Programmed: True - The Gateway was successfully configured

- .status.addresses populated with an IP address

Check the HTTPRoute status

Next, inspect your HTTPRoute:

kubectl get httproute -n demo echo -o yaml

Check the status.parents section for conditions. Common issues include:

- ResolvedRefs set to False with reason BackendNotFound; this means that the backend Service doesn't exist or has the wrong name

- Accepted set to False; this means that the route couldn't attach to the Gateway (check namespace permissions or hostname matching)

Example error when a backend is not found:

status:
  parents:
  - conditions:
    - lastTransitionTime: "2026-01-19T17:13:35Z"
      message: backend not found
      observedGeneration: 2
      reason: BackendNotFound
      status: "False"
      type: ResolvedRefs
    controllerName: kind.sigs.k8s.io/gateway-controller
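When a route has several parents or conditions, scanning the status by hand gets tedious. The sketch below lists every condition whose status is not "True". It assumes the status has already been loaded into a dictionary (for example from `kubectl get httproute -n demo echo -o json`) and that the conditions of interest, such as Accepted and ResolvedRefs, are healthy when "True". The function name is hypothetical.

```python
def failing_conditions(route_status: dict):
    """Return (controller, type, reason, message) for each unhealthy condition."""
    problems = []
    for parent in route_status.get("parents", []):
        for cond in parent.get("conditions", []):
            if cond.get("status") != "True":
                problems.append((
                    parent.get("controllerName"),
                    cond.get("type"),
                    cond.get("reason"),
                    cond.get("message"),
                ))
    return problems


# Sample data mirroring the error shown above, plus one healthy condition.
status = {
    "parents": [{
        "controllerName": "kind.sigs.k8s.io/gateway-controller",
        "conditions": [
            {"type": "Accepted", "status": "True", "reason": "Accepted"},
            {"type": "ResolvedRefs", "status": "False",
             "reason": "BackendNotFound", "message": "backend not found"},
        ],
    }]
}

for problem in failing_conditions(status):
    print(problem)
```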

Check controller logs

If the resource statuses don't reveal the issue, check the cloud-provider-kind logs:

docker logs -f cloud-provider-kind

This will show detailed logs from both the LoadBalancer and Gateway API controllers.

Cleanup

When you're finished with your experiments, you can clean up the resources:

Remove Kubernetes resources

Delete the namespaces (this will remove all resources within them):

kubectl delete namespace gateway-infra

kubectl delete namespace demo

Stop cloud-provider-kind

Stop and remove the cloud-provider-kind container:

docker stop cloud-provider-kind

Because the container was started with the --rm flag, it will be automatically removed when stopped.

Delete the kind cluster

Finally, delete the kind cluster:

kind delete cluster

Next steps

Now that you've experimented with Gateway API locally, you're ready to explore production-ready implementations:

- Production Deployments: Review the Gateway API implementations to find a controller that matches your production requirements

- Learn More: Explore the Gateway API documentation to learn about advanced features like TLS, traffic splitting, and header manipulation

- Advanced Routing: Experiment with path-based routing, header matching, request mirroring and other features following Gateway API user guides

A final word of caution

This kind setup is for development and learning only. Always use a production-grade Gateway API implementation for real workloads.