CNCF Blog Projects Category

Dragonfly v2.4.0 is released

Dragonfly v2.4.0 is released! Thanks to all of the contributors who made this Dragonfly release happen.

New features and enhancements

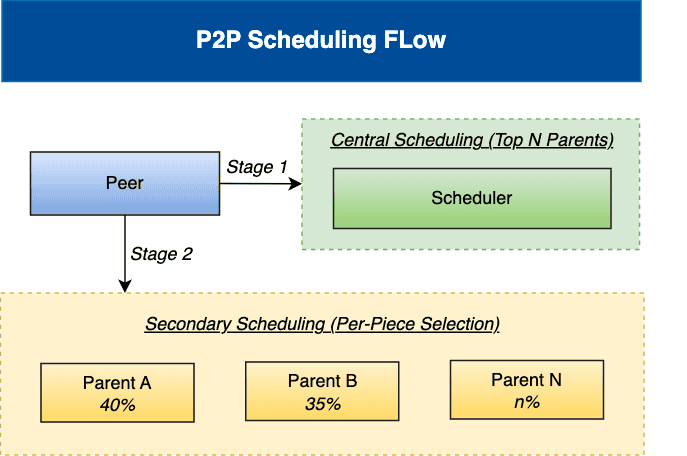

Load-aware scheduling algorithm

A two-stage scheduling algorithm combining central scheduling with node-level secondary scheduling to optimize P2P download performance, based on real-time load awareness.

For more information, please refer to the Scheduling documentation.
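The two-stage idea can be illustrated with a minimal sketch. It assumes (this is not taken from the Dragonfly source) that the central scheduler shortlists the least-loaded candidate parents and that the downloading node then picks from the shortlist using a metric it measures locally. The `Candidate` fields, `central_stage`, and `node_stage` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    host: str
    load: float          # hypothetical: 0.0 (idle) to 1.0 (saturated), reported to the scheduler
    local_rtt_ms: float  # hypothetical: latency measured by the downloading node itself


def central_stage(candidates, k):
    """Stage 1 (scheduler): keep the k least-loaded candidate parents."""
    return sorted(candidates, key=lambda c: c.load)[:k]


def node_stage(shortlist):
    """Stage 2 (downloading node): pick the shortlisted parent with the lowest local RTT."""
    return min(shortlist, key=lambda c: c.local_rtt_ms)


peers = [
    Candidate("peer-a", load=0.90, local_rtt_ms=1.0),
    Candidate("peer-b", load=0.20, local_rtt_ms=8.0),
    Candidate("peer-c", load=0.35, local_rtt_ms=3.0),
]
shortlist = central_stage(peers, k=2)
chosen = node_stage(shortlist)
print([c.host for c in shortlist], chosen.host)
```

In this sketch the heavily loaded `peer-a` never reaches the node, even though it is the closest, which is the kind of trade-off a load-aware first stage is meant to enforce.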

Vortex protocol support for P2P file transfer

Dragonfly provides the new Vortex transfer protocol, based on TLV (Tag-Length-Value) framing, to improve download performance on internal networks. Vortex uses TLV as a lightweight format that replaces gRPC for data transfer between peers. Compared to gRPC, TCP-based Vortex reduces large file download time by 50% and QUIC-based Vortex by 40%, and both reduce peak memory usage.

For more information, please refer to the TCP Protocol Support for P2P File Transfer and QUIC Protocol Support for P2P File Transfer.
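To make the TLV idea concrete, here is a minimal sketch of generic Tag-Length-Value framing. The header layout (1-byte tag, 4-byte big-endian length) and the tag values are assumptions chosen for illustration; they are not the actual Vortex wire format.

```python
import struct

# Hypothetical header layout: 1-byte tag followed by a 4-byte big-endian length.
HEADER = struct.Struct(">BI")


def encode_tlv(tag: int, value: bytes) -> bytes:
    """Prefix the payload with its tag and length."""
    return HEADER.pack(tag, len(value)) + value


def decode_tlv(buf: bytes):
    """Yield (tag, value) pairs from a buffer of back-to-back TLV frames."""
    offset = 0
    while offset < len(buf):
        tag, length = HEADER.unpack_from(buf, offset)
        offset += HEADER.size
        value = buf[offset:offset + length]
        if len(value) != length:
            raise ValueError("truncated TLV frame")
        offset += length
        yield tag, value


PIECE_REQUEST, PIECE_CONTENT = 0x01, 0x02  # hypothetical tag values
stream = encode_tlv(PIECE_REQUEST, b"piece-7") + encode_tlv(PIECE_CONTENT, b"\x00" * 16)
frames = list(decode_tlv(stream))
print(frames)
```

Because the receiver learns the payload length from a fixed-size header, it can read each frame without a general-purpose serialization layer, which is one plausible reason a TLV format is lighter than gRPC for bulk piece transfer.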

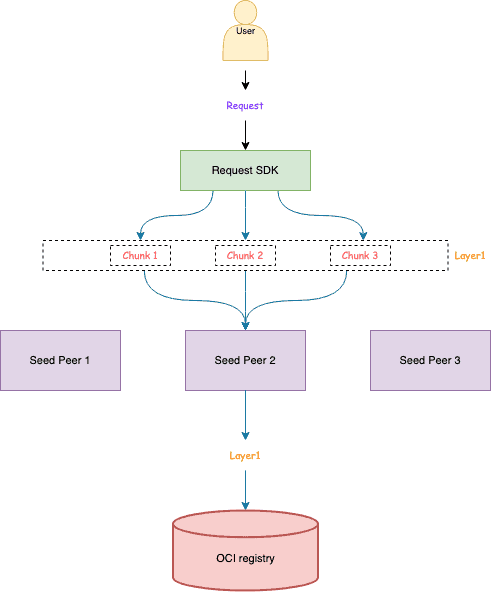

Request SDK

An SDK for routing user requests to Seed Peers using consistent hashing, replacing the previous Kubernetes Service load balancing approach.
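As a rough illustration of consistent hashing (not the Request SDK's actual implementation), the sketch below places each Seed Peer on a hash ring at several virtual points and routes a request key to the next point clockwise. The class name `HashRing`, the replica count, and the peer names are hypothetical.

```python
import bisect
import hashlib


class HashRing:
    def __init__(self, nodes, replicas=100):
        # Each node appears at `replicas` virtual points to even out the distribution.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(replicas)
        )
        self._keys = [h for h, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

    def route(self, request_key: str) -> str:
        """Return the node owning the first ring point at or after the key's hash."""
        idx = bisect.bisect(self._keys, self._hash(request_key)) % len(self._ring)
        return self._ring[idx][1]


nodes = ["seed-peer-0", "seed-peer-1", "seed-peer-2"]
ring = HashRing(nodes)
url = "https://example.com/models/weights.bin"
first = ring.route(url)
print(first)
```

Unlike round-robin load balancing, the same URL always lands on the same Seed Peer, and removing an unrelated Seed Peer does not move it, so cached content stays useful.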

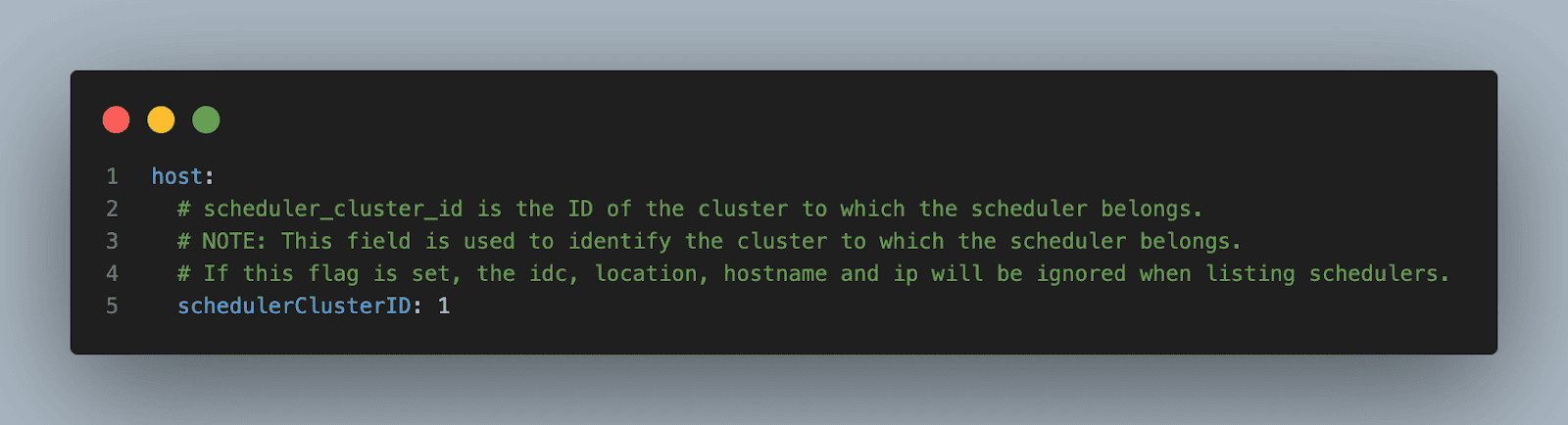

Simple multi‑cluster Kubernetes deployment with scheduler cluster ID

Dragonfly supports a simplified feature for deploying and managing multiple Kubernetes clusters by explicitly assigning a schedulerClusterID to each cluster. This approach allows users to directly control cluster affinity without relying on location‑based scheduling metadata such as IDC, hostname, or IP.

Using this feature, each Peer, Seed Peer, and Scheduler determines its target scheduler cluster through a clearly defined scheduler cluster ID. This ensures precise separation between clusters and predictable cross‑cluster behavior.

For more information, please refer to the Create Dragonfly Cluster Simple.

Performance and resource optimization for Manager and Scheduler components

Enhanced service performance and resource utilization across Manager and Scheduler components while significantly reducing CPU and memory overhead, delivering improved system efficiency and better resource management.

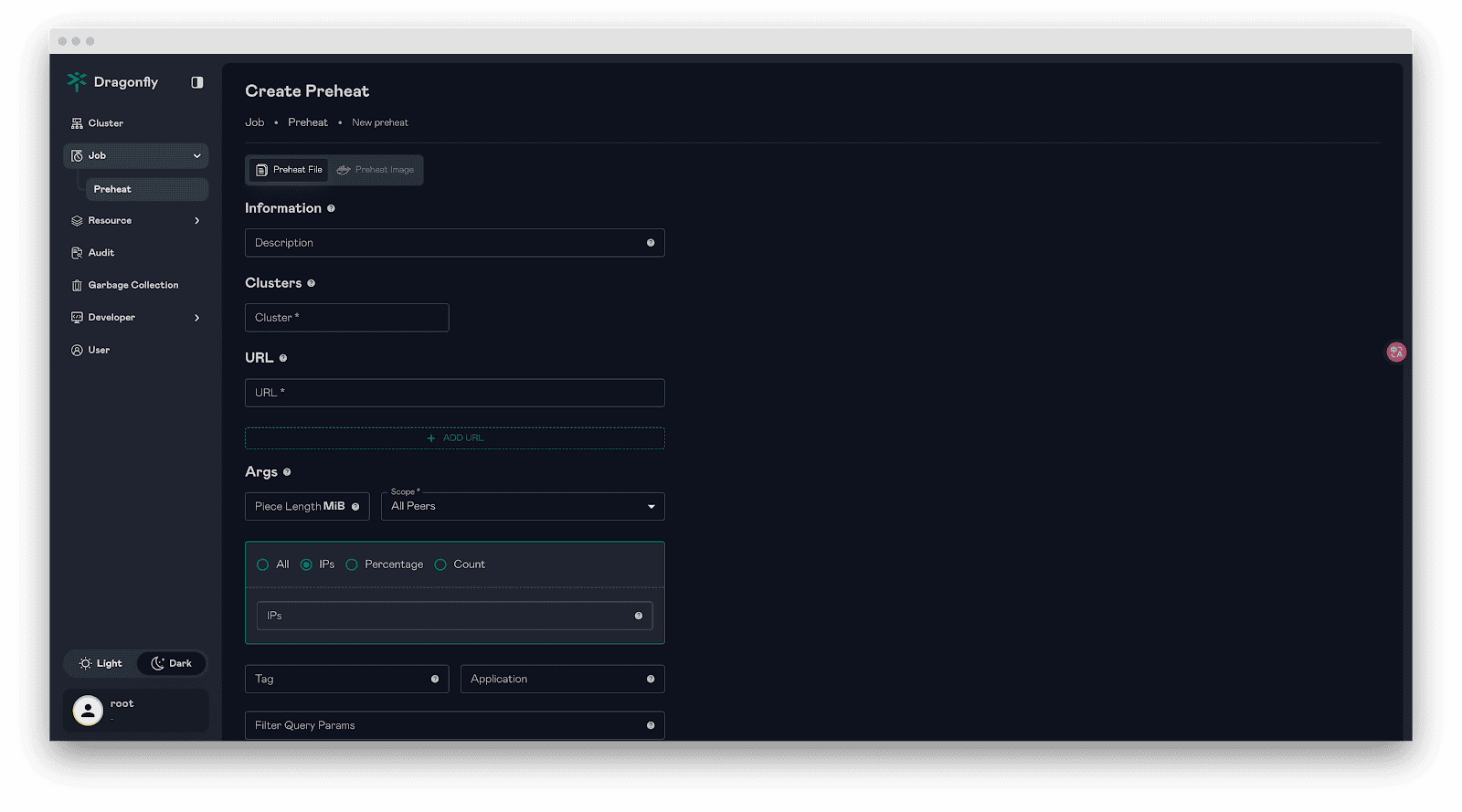

Enhanced preheating

- Support for IP-based peer selection in preheating jobs with priority-based selection logic where IP specification takes highest priority, followed by count-based and percentage-based selection.

- Support for preheating multiple URLs in a single request.

- Support for preheating file and image via Scheduler gRPC interface.
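The selection priority described in the first item can be sketched as follows. This is an illustrative reading of "IP first, then count, then percentage"; the function name, parameters, and rounding rule are assumptions rather than the Manager's actual API.

```python
import math


def select_peers(peers, ips=None, count=None, percentage=None):
    """Pick preheat targets: explicit IPs win, then a count, then a percentage."""
    if ips:
        wanted = set(ips)
        return [p for p in peers if p in wanted]
    if count is not None:
        return peers[:count]
    if percentage is not None:
        # Assumption: round up so a small percentage still selects at least one peer.
        return peers[:math.ceil(len(peers) * percentage / 100)]
    return list(peers)


peers = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
print(select_peers(peers, ips=["10.0.0.3"], count=2, percentage=50))
print(select_peers(peers, count=2, percentage=100))
print(select_peers(peers, percentage=50))
```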

Calculate task ID based on image blob SHA256 to avoid redundant downloads

The Client now supports calculating task IDs directly from the SHA256 hash of image blobs, instead of using the download URL. This enhancement prevents redundant downloads and data duplication when the same blob is accessed from different registry domains.
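A minimal sketch of the idea, assuming blob URLs follow the OCI distribution layout `/v2/<name>/blobs/sha256:<digest>`: when a digest is present it becomes the task key, otherwise the URL is used. The function `task_id` and the exact hashing of the key are hypothetical and only illustrate why two registries serving the same blob map to one task.

```python
import hashlib
import re

# OCI registries expose blobs at /v2/<name>/blobs/<digest>.
BLOB_PATH = re.compile(r"/v2/.+/blobs/sha256:([0-9a-f]{64})")


def task_id(url: str) -> str:
    """Derive the task ID from the blob digest when present, else from the URL."""
    match = BLOB_PATH.search(url)
    key = match.group(1) if match else url
    return hashlib.sha256(key.encode()).hexdigest()


digest = "a" * 64  # placeholder digest for illustration
id_a = task_id(f"https://registry-a.example.com/v2/library/nginx/blobs/sha256:{digest}")
id_b = task_id(f"https://registry-b.example.com/v2/mirror/nginx/blobs/sha256:{digest}")
print(id_a == id_b)
```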

Cache HTTP 307 redirects for split downloads

Support for caching HTTP 307 (Temporary Redirect) responses to optimize Dragonfly’s multi-piece download performance. When a download URL is split into multiple pieces, the redirect target is now cached, eliminating redundant redirect requests and reducing latency.
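The effect can be sketched with a small cache in front of a redirect resolver. Everything here is illustrative: `RedirectCache` and `fake_resolve` are hypothetical names, and the resolver stands in for the HTTP round trip that returns the 307 target.

```python
class RedirectCache:
    def __init__(self, resolve):
        self._resolve = resolve  # callable: url -> redirect target (one HTTP round trip)
        self._targets = {}

    def target(self, url):
        """Resolve the redirect once and reuse the target for later pieces."""
        if url not in self._targets:
            self._targets[url] = self._resolve(url)
        return self._targets[url]


calls = []


def fake_resolve(url):
    # Stand-in for following a 307 response; records how often it is invoked.
    calls.append(url)
    return "https://cdn.example.com/blob?sig=abc"


cache = RedirectCache(fake_resolve)
piece_ranges = [(0, 4095), (4096, 8191), (8192, 12287)]
requests = [
    (cache.target("https://registry.example.com/blob"), f"bytes={start}-{end}")
    for start, end in piece_ranges
]
print(len(calls), requests)
```

Because a 307 is a temporary redirect, a production cache would likely also need an expiry so that short-lived signed URLs are refreshed; that detail is omitted from this sketch.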

Go Client deprecated and replaced by Rust client

The Go client has been deprecated and replaced by the Rust Client. All future development and maintenance will focus exclusively on the Rust client, which offers improved performance, stability, and reliability.

For more information, please refer to dragonflyoss/client.

Additional enhancements

- Enable 64K page size support for ARM64 in the Dragonfly Rust client.

- Fix missing git commit metadata in dfget version output.

- Support for config_path of io.containerd.cri.v1.images plugin for containerd V3 configuration.

- Replace the glibc DNS resolver with hickory-dns in reqwest to implement DNS caching and prevent excessive DNS lookups during piece downloads.

- Support for the `--include-files` flag to selectively download files from a directory.

- Add the `--no-progress` flag to disable the download progress bar output.

- Support for custom request headers in backend operations, enabling flexible header configuration for HTTP requests.

- Refactored log output to reduce redundant logging and improve overall logging efficiency.

Significant bug fixes

- Modified the database field type from text to longtext to support storing the information of preheating job.

- Fixed panic on repeated seed peer service stops during Scheduler shutdown.

- Fixed broker authentication failure when specifying the Redis password without setting a username.

Nydus

New features and enhancements

- Nydusd: Add CRC32 validation support for both RAFS V5 and V6 formats, enhancing data integrity verification.

- Nydusd: Support resending FUSE requests during nydusd restoration, improving daemon recovery reliability.

- Nydusd: Enhance VFS state saving mechanism for daemon hot upgrade and failover.

- Nydusify: Introduce Nydus-to-OCI reverse conversion capability, enabling seamless migration back to OCI format.

- Nydusify: Implement zero-disk transfer for image copy, significantly reducing local disk usage during copy operations.

- Snapshotter: Build blob.meta into the bootstrap to improve blob fetch reliability for RAFS v6 images.
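As a generic illustration of the CRC32 validation mentioned in the first item, the sketch below stores a checksum alongside a chunk and verifies it on read. It uses Python's standard `zlib.crc32`; the chunk layout and the function names are assumptions and do not reflect the RAFS on-disk format.

```python
import zlib


def seal(chunk: bytes):
    """Return the chunk together with its CRC32 checksum."""
    return chunk, zlib.crc32(chunk)


def verify(chunk: bytes, expected_crc: int) -> bool:
    """Recompute the checksum on read and compare it with the stored value."""
    return zlib.crc32(chunk) == expected_crc


data, crc = seal(b"rafs chunk payload")
corrupted = b"rafs chunk pay1oad"  # a single corrupted byte
print(verify(data, crc), verify(corrupted, crc))
```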

Significant bug fixes

- Nydusd: Fix auth token fetching for access_token field in registry authentication.

- Nydusd: Add recursive inode/dentry invalidation for umount API.

- Nydus Image: Fix multiple issues in optimize subcommand and add backend configuration support.

- Snapshotter: Implement lazy parent recovery for proxy mode to handle missing parent snapshots.

We encourage you to visit the d7y.io website to find out more.

Others

You can see CHANGELOG for more details.

Links

- Dragonfly Website: https://d7y.io/

- Dragonfly Repository: https://github.com/dragonflyoss/dragonfly

- Dragonfly Client Repository: https://github.com/dragonflyoss/client

- Dragonfly Console Repository: https://github.com/dragonflyoss/console

- Dragonfly Charts Repository: https://github.com/dragonflyoss/helm-charts

- Dragonfly Monitor Repository: https://github.com/dragonflyoss/monitoring


OpenCost: Reflecting on 2025 and looking ahead to 2026

The OpenCost project has had a fruitful year in terms of releases, our wonderful mentees and contributors, and fun gatherings at KubeCons.

If you’re new to OpenCost, it is an open-source cost and resource management tool that is an Incubating project in the Cloud Native Computing Foundation (CNCF). It was created by IBM Kubecost and continues to be maintained and supported by IBM Kubecost, Randoli, and a wider community of partners, including the major cloud providers.

OpenCost releases

The OpenCost project had 11 releases in 2025. These include new features and capabilities that improve the experience for both users and contributors. Here are a few highlights:

- Promless: OpenCost can be configured to run without Prometheus by using the Collector Datasource (beta), which is enabled through environment variables that can be set with Helm.

- OpenCost MCP server: AI agents can now query cost data in real-time using natural language. They can analyze spending patterns across namespaces, pods, and nodes, generate cost reports and recommendations automatically, and provide other insights from OpenCost data.

- Export system: The project now has a generic export framework to make it possible to export cost data in a type-safe way.

- Diagnostics system: OpenCost has a complete diagnostic framework with an interface, runners, and export capabilities.

- Heartbeat system: You can do system health tracking with timestamped heartbeat events for export and more.

- Cloud providers: There are continued improvements for users to track cloud and multi-cloud metrics. We appreciate contributions from Oracle (including providing hosting for our demo) and DigitalOcean (for recent cloud services provider work).

Thanks to our maintainers and contributors who make these releases possible and successful, including our mentees and community contributors as well.

Mentorship and community management

Our project has been committed to mentorship through the Linux Foundation for a while, and we continue to have fantastic mentees who bring innovation and support to the community.

- Manas Sivakumar was a summer 2025 mentee and worked on writing integration tests for OpenCost’s enterprise readiness. Manas’ work is now part of the OpenCost integration testing pipeline for all future contributions.

- Adesh Pal, a mentee, made a big splash with the OpenCost MCP server. The MCP server now comes by default and needs no configuration. It outputs readable markdown on metrics as well as step-by-step suggestions to make improvements.

- Sparsh Raj has been in our community for a while and has become our most recent mentee. Sparsh has written a blog post on KubeModel, the foundation of OpenCost’s Data Model 2.0. Sparsh’s work will meet the needs for a robust and scalable data model that can handle Kubernetes complexity and constantly shifting resources.

- On the community side, Tamao Nakahara was brought into the IBM Kubecost team for a few months of open source and developer experience expertise. Tamao helped organize the regular OpenCost community meetings, leading actions around events, the website, and docs. On the website, Tamao improved the UX for new and returning users, and brought in Ginger Walker to help clean up the docs.

Events and talks

As a CNCF incubating project, OpenCost participated in the key KubeCon events. Most recently, the team was at KubeCon + CloudNativeCon Atlanta 2025, where maintainer Matt Bolt from IBM Kubecost kicked off the week with a Project Lightning talk. During a co-located event that day, Rajith Attapattu, CTO of contributing company Randoli, also gave a talk on OpenCost. Dee Zeis, Rajith, and Tamao also answered questions at the OpenCost kiosk in the Project Pavilion.

Earlier in the year, the team was also at both KubeCon + CloudNativeCon in London and Japan, giving talks and running the OpenCost kiosks.

2026!

What’s in store for OpenCost in the coming year? Aside from meeting all of you at future KubeCon + CloudNativeCon events, we’re also excited about a few roadmap highlights. As mentioned, our LFX mentee Sparsh is working on KubeModel, which will be important for improvements to OpenCost’s data model. As AI continues to increase in adoption, the team is also working on building out costing features to track AI usage. Finally, supply chain security improvements are a priority.

We’re looking forward to seeing more of you in the community in the next year!

HolmesGPT: Agentic troubleshooting built for the cloud native era

If you’ve ever debugged a production incident, you know that the hardest part often isn’t the fix, it’s finding where to begin. Most on-call engineers end up spending hours piecing together clues, fighting time pressure, and trying to make sense of scattered data. You’ve probably run into one or more of these challenges:

- Unwritten knowledge and missing context: You’re pulled into an outage for a service you barely know. The original owners have changed teams, the documentation is half-written, and the “runbook” is either stale or missing altogether. You spend the first 30 minutes trying to find someone who’s seen this issue before, and if you’re unlucky, this incident is a new one.

- Tool overload and context switching: Your screen looks like an air traffic control dashboard. You’re running monitoring queries, flipping between Grafana and Application Insights, checking container logs, and scrolling through traces, all while someone’s asking for an ETA in the incident channel. Correlating data across tools is manual, slow, and mentally exhausting.

- Overwhelming complexity and knowledge gaps: Modern cloud-native systems like Kubernetes are powerful, but they’ve made troubleshooting far more complex. Every layer (nodes, pods, controllers, APIs, networking, autoscalers) introduces its own failure modes. To diagnose effectively, you need deep expertise across multiple domains, something even seasoned engineers can’t always keep up with.

These challenges require a solution that can look across signals, recall patterns from past incidents, and guide you toward the most likely cause.

This is where HolmesGPT, a CNCF Sandbox project, could help.

HolmesGPT was accepted as a CNCF Sandbox project in October 2025. It’s built to simplify the chaos of production debugging – bringing together logs, metrics, and traces from different sources, reasoning over them, and surfacing clear, data-backed insights in plain language.

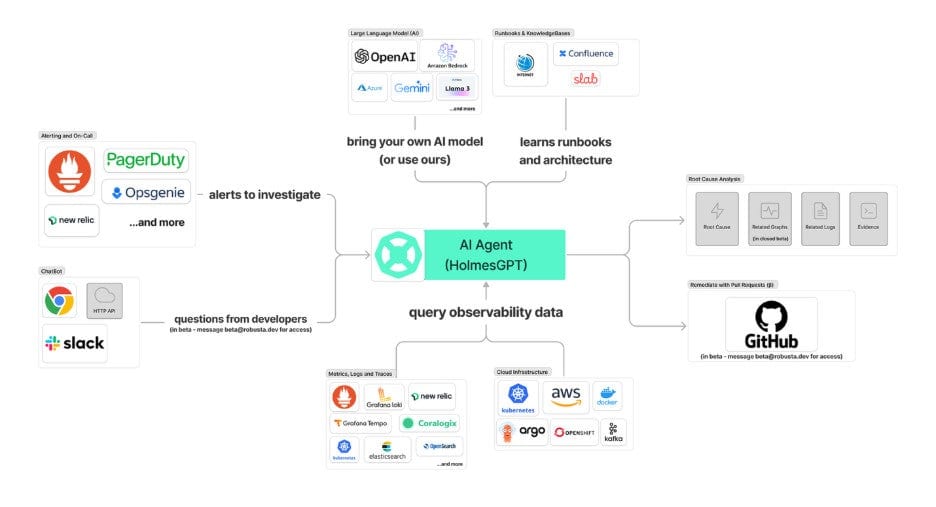

What is HolmesGPT?

HolmesGPT is an open-source AI troubleshooting agent built for Kubernetes and cloud-native environments. It combines observability telemetry, LLM reasoning, and structured runbooks to accelerate root cause analysis and suggest next actions.

Unlike static dashboards or chatbots, HolmesGPT is agentic: it actively decides what data to fetch, runs targeted queries, and iteratively refines its hypotheses – all while staying within your environment.

Key benefits:

- AI-native control loop: HolmesGPT uses an agentic task list approach

- Open architecture: Every integration and toolset is open and extensible, works with existing runbooks and MCP servers

- Data privacy: Models can run locally, inside your cluster, or in the cloud

- Community-driven: Designed around CNCF principles of openness, interoperability, and transparency.

How it works

When you run:

`holmes ask "Why is my pod in crash loop back off state"`

HolmesGPT:

- Understands intent → it recognizes you want to diagnose a pod restart issue

- Creates a task list → breaks down the problem into smaller chunks and executes each of them separately

- Queries data sources → runs Prometheus queries, collects Kubernetes events or logs, inspects pod specs including which pod

- Correlates context → detects that a recent deployment updated the image

- Explains and suggests fixes → returns a natural language diagnosis and remediation steps.

Here’s a simplified overview of the architecture:

Extensible by design

HolmesGPT’s architecture allows contributors to add new components:

- Toolsets: Build custom commands for internal observability pipelines or expose existing tools through a Model Context Protocol (MCP) server.

- Evals: Add custom evals to benchmark the performance, cost, and latency of models.

- Runbooks: Codify best practices (e.g., “diagnose DNS failures” or “debug PVC provisioning”).

Example of a simple custom tool:

    holmes:
      toolsets:
        kubernetes/pod_status:
          description: "Check the status of a Kubernetes pod."
          tools:
            - name: "get_pod"
              description: "Fetch pod details from a namespace."
              command: "kubectl get pod {{ pod }} -n {{ namespace }}"
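To show how the `{{ pod }}` and `{{ namespace }}` placeholders in such a tool definition could turn into a concrete command, here is a minimal sketch of placeholder substitution with shell quoting. It is an illustration only; the `render` helper is hypothetical and HolmesGPT's own template handling may differ.

```python
import re
import shlex


def render(command_template: str, params: dict) -> str:
    """Replace {{ name }} placeholders with shell-quoted parameter values."""
    def substitute(match):
        name = match.group(1)
        return shlex.quote(str(params[name]))  # KeyError if a parameter is missing

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, command_template)


template = "kubectl get pod {{ pod }} -n {{ namespace }}"
rendered = render(template, {"pod": "user-profile-import", "namespace": "default"})
print(rendered)
```

Quoting each value before substitution means a model-supplied argument such as `x; rm -rf /` is passed to `kubectl` as a single literal string instead of being interpreted by the shell.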

Getting started

- Install HolmesGPT

There are several ways to install HolmesGPT. One of the easiest ways to get started is through Homebrew:

brew tap robusta-dev/homebrew-holmesgpt

brew install holmesgpt

The detailed installation guide has instructions for Helm, the CLI, and the UI.

- Set up the LLM (any OpenAI-compatible LLM) by setting the API key

In most cases, this means setting the appropriate environment variable based on the LLM provider.

- Run it locally

holmes ask "what is wrong with the user-profile-import pod?" --model="anthropic/claude-sonnet-4-5"

- Explore other features

- GitHub: https://github.com/robusta-dev/holmesgpt

- Docs: holmesgpt.dev

How to get involved

HolmesGPT is entirely community-driven and welcomes all forms of contribution:

| Area | How you can help |
| --- | --- |
| Integrations | Add new toolsets for your observability tools or CI/CD pipelines. |
| Runbooks | Encode operational expertise for others to reuse. |
| Evaluation | Help build benchmarks for AI reasoning accuracy and observability insights. |
| Docs and tutorials | Improve onboarding, create demos, or contribute walkthroughs. |
| Community | Join discussions around governance and CNCF Sandbox progression. |

All contributions follow the CNCF Code of Conduct.

Further Resources

- GitHub Repository

- Join CNCF Slack → #holmesgpt

- Contributing Guide

Cilium releases 2025 annual report: A decade of cloud native networking

A decade on from its first commit in 2015, 2025 marks a significant milestone for the Cilium project. The community has published the 2025 Cilium Annual Report: A Decade of Cloud Native Networking, which reflects on the project’s evolution, key milestones, and notable developments over the past year.

What began as an experimental container networking effort has grown into a mature, widely adopted platform, bringing together cloud native networking, observability, and security through an eBPF-based architecture. As Cilium enters its second decade, the community continues to grow in both size and momentum, with sustained high-volume development, widespread production adoption, and expanding use cases including virtual machines and large-scale AI infrastructure.

We invite you to explore the 2025 Annual Report and celebrate a decade of cloud native networking with the community.

For any questions or feedback, please reach out to [email protected].

Building platforms using kro for composition

Recent industry developments, such as Amazon’s announcement of the new EKS capabilities, highlight a trend toward supporting platforms with managed GitOps, cloud resource operators, and composition tooling. In particular, the involvement of Kube Resource Orchestrator (kro)—a young, cross-cloud initiative—reflects growing ecosystem interest in simplifying Kubernetes-native resource grouping. Its inclusion in the capabilities package signals that major cloud providers recognize the value of the SIG Cloud Provider–maintained project and its potential role in future platform-engineering workflows.

This is a win for platform engineers. The composition of Kubernetes resources is becoming increasingly important as declarative Infrastructure as Code (IaC) tooling expands the number of objects we manage. For example, the CNCF graduated project Crossplane and cloud-specific alternatives such as AWS Controllers for Kubernetes (ACK), which is packaged with EKS Capabilities, can each add hundreds or even thousands of new CRDs to a cluster.

With composition available as a managed service, platform teams can focus on their mission to build what is unique to their business but common to their teams. They achieve this by combining composition with encapsulation of all associated processes and decoupled delivery across any target environment.

The rise of Kubernetes-native composition

The core value of kro lies in the idea of a ResourceGraphDefinition. Each definition abstracts many Kubernetes objects behind a single API. This API specifies what users may configure when requesting an instance, which resources are created per request, how those sub-resources depend on each other, and what status should be exposed back to the users and dependent resources. kro then acts as a controller that responds to these definitions by creating a new user-facing CRD and managing requests against it through an optimized resource DAG. This abstraction can reduce reliance on tools such as Helm, Kustomize, or hand-written operators when creating consistent patterns.
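The "resource DAG" idea can be sketched with Python's standard `graphlib`: given which sub-resources depend on which, a topological sort yields an order in which every resource is created after its dependencies. The resource names and dependency edges below are hypothetical and are not taken from a real ResourceGraphDefinition.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on (hypothetical example graph).
dependencies = {
    "namespace": set(),
    "configmap": {"namespace"},
    "deployment": {"namespace", "configmap"},
    "service": {"deployment"},
    "ingress": {"service"},
}

# static_order() yields every resource after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

A controller that walks the graph this way can also detect cycles up front (`graphlib` raises `CycleError`), which is one practical benefit of declaring dependencies explicitly rather than relying on retry loops.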

The collaboration between and the investment across cloud vendors contributing to kro is a bright sign for our industry. However, challenges remain for end users adopting these frameworks. It can often feel like they are trapped in the “How to draw an owl” meme, where kro helps teams sketch the ovals for the head and body, but drawing the rest of the platform owl requires a big leap for the platform engineers doing the work.

Where kro fits in platform design

Effective platforms demonstrate results across three outcomes based on time to delivery:

- Time for a user to get a new service they depend on to deliver their value

- Time to patch all instances of an existing service or capability

- Time to introduce a new business-compliant capability

Across the industry, we see platforms not only improving these metrics but fundamentally shifting beliefs about what is possible. Users are getting the tools they need to take new ideas to production in minutes, not months. A handful of engineers are managing continuous compliance and regular patching. Specialists bring their requirements directly to users without a central team bottleneck.

Universally successful platforms that deliver on these outcomes are designed around three principles:

Composition over simple abstraction

Composition enables teams to build from low-level components to high-value through common abstraction APIs. kro’s ResourceGraphDefinitions provide an additional Kubernetes-native approach alongside Crossplane compositions, Helm charts, and Kratix Promises.

Encapsulation of configuration, policy, and process

Enterprise platforms must provide more than resources. They need clear ways to capture all the weird and wonderful (business-critical) requirements and processes they have built over the years. Yes, this can mean declarative code, but also imperative API calls, operational workflows that incorporate manual steps, legacy integrations with off-line systems, and, of course, interactions with non-Kubernetes resources. Safe composition depends on the ability to apply a single testable change that covers all affected systems.

Decoupled delivery across many environments

Organizations of sufficient scale and complexity need to support complex topologies, including multi-cluster Kubernetes and non-Kubernetes-based infrastructure. Platforms need to enable timely upgrades across their entire topology to reduce CVE risk while managing diverse and specialized compute, including modern options like GPUs and Functions-as-a-Service (FaaS), as well as legacy options such as mainframes or Red Hat Virtualization.

Achieving overall scalability, auditability, and resilience requires prioritizing each in the proper context. Centralized planning gives control. Decentralized delivery allows scale. A platform should enable the definition of rules and enforcement in a central orchestrator, then rely on distributed deployment engines to deliver the capability in the correct places and form. This avoids the limits of tightly coupled orchestration and reduces the operational burden of scale.

kro is strong in the first principle. It offers a clear, Kubernetes-native composition that lets teams package complex deployments, hide unnecessary details, and encode organizational defaults. Features such as CEL templating demonstrate a focus on helping engineers manage dependencies across Kubernetes objects when creating higher-level abstractions.

Where platforms need more than kro

It is important to acknowledge that kro does not aim to address the second or third principles. This is not a criticism. It reflects a focused scope, following the Unix philosophy of doing one thing well while integrating cleanly with the wider ecosystem.

kro is a powerful mechanism for packaging resource definitions and orchestrating them within a single cluster. It does not try to manage resources across clusters, handle workflows such as approvals, or integrate with systems such as ServiceNow, mainframes, or proprietary APIs that require imperative actions. The power comes from its Kubernetes-native design, which makes it easy to integrate with tools such as for scheduling, Kyverno or OPA for policy as code (PaC), and IaC controllers such as Crossplane.

The harder challenge is how to meet all three principles in a sustainable way. How can you make platform changes that are both quick and safe? The simplest answer is to enable encapsulated and testable packages that allow changes across infrastructure, configuration, policy, and process from a single implementation.

Platform orchestration frameworks—such as Kratix and others in the ecosystem—aim to address these workflow and multi-environment needs. Kratix provides a Kubernetes-native framework for delivering managed services that reflect organizational standards, with support for long-running workflows, integration with enterprise systems, and managed delivery to clusters, airgapped hardware, or mainframes. kro provides composition rather than orchestration, which allows these tools to complement each other.

Looking ahead at a growing ecosystem

The project’s multi-vendor contributions and Kubernetes SIG governance reflect growing community engagement around kro. Many contributors highlight the value of a portable, Kubernetes-native model for grouping and orchestrating resources, and the importance of reducing manual dependency management for platform teams.

The next stage for organizations is understanding how kro fits into their broader architecture. kro is an important tool for composition. Ultimately, platform value comes from tying that composition to capabilities that encapsulate configuration, policy, process workflows, and decoupled deployment across diverse environments.

Emerging standards will help organisations meet the core tests of platform value: safe self-service, consistent compliance, simple fleet upgrades, and a contribution model that scales. With standards come tools that enable platform engineers to continue to reuse capabilities, collaborate more effectively, and deliver predictable behavior across clusters and clouds.

Lima v2.0: New features for secure AI workflows

On November 6th, the Lima project team shipped the second major release of Lima. In this release, the team are expanding the project focus to cover AI as well as containers.

What is Lima?

Lima (Linux Machines) is a command line tool to launch a local Linux virtual machine, with the primary focus on running containers on a laptop.

The project began in May 2021, with the aim of promoting containerd including nerdctl (contaiNERD CTL) to Mac users. The project joined the CNCF in September 2022 as a Sandbox project, and was promoted to the Incubating level in October 2025. Through the growth of the project, the scope has expanded to support non-container workloads and non-macOS hosts too.

See also: “Lima becomes a CNCF incubating project”.

Updates in v2.0

Plugins

Lima now provides the plugin infrastructure that allows third-parties to implement new features without modifying Lima itself:

- VM driver plugins: for additional hypervisors.

- CLI plugins: for additional subcommands of the `limactl` command.

- URL scheme plugins: for additional URL schemes to be passed as `limactl create SCHEME:SPEC`.

The plugin interfaces are still experimental and subject to change. The interfaces will be stabilized in future releases.

GPU acceleration

Lima now supports the VM driver for krunkit, providing GPU acceleration for Linux VMs running on macOS hosts.

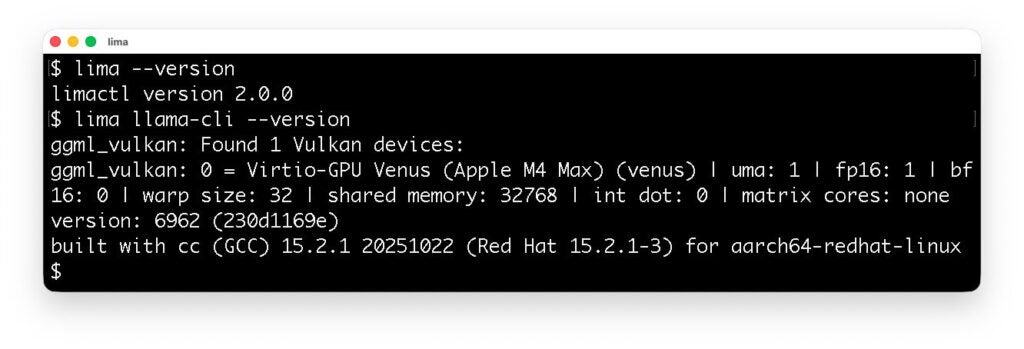

The following screenshot shows that llama.cpp running in Lima detects the Apple M4 Max processor as a virtualized GPU.

Model Context Protocol

Lima now provides Model Context Protocol (MCP) tools for reading, writing, and executing local files using a VM sandbox:

- `glob`
- `list_directory`
- `read_file`
- `run_shell_command`
- `search_file_content`
- `write_file`

Lima’s MCP tools are inspired by Google Gemini CLI’s built-in tools, and can be used as a secure alternative for those built-in tools. See the configuration guide here: https://lima-vm.io/docs/config/ai/outside/mcp/

Other improvements

- The `limactl start` command now accepts the `--progress` flag to show the progress of the provisioning scripts.

- The `limactl (create|edit|start)` commands now accept the `--mount-only DIR` flag to only mount the specified host directory. In Lima v1.x, this had to be specified in a very complex syntax: `--set ".mounts=[{\"location\":\"$(pwd)\", \"writable\":true}]"`.

- The `limactl shell` command now accepts the `--preserve-env` flag to propagate the environment variables from the host to the guest.

- UDP ports are now forwarded by default in addition to TCP ports.

- Multiple host users can now run Lima simultaneously. This allows running Lima as a separate user account for enhanced security, using “Alcoholless” Homebrew.

See also the release note: https://github.com/lima-vm/lima/releases/tag/v2.0.0 .

We appreciate all the contributors who made this release possible, especially Ansuman Sahoo who contributed the VM driver plugin subsystem and the krunkit VM driver, through the Google Summer of Code (GSoC) 2025.

Expanding the focus to hardening AI

While Lima was originally made for promoting containerd to Mac users, it has proven useful for a variety of other use cases as well. One of the most notable emerging use cases is to run an AI coding agent inside a VM in order to isolate the agent from direct access to host files and commands. This setup ensures that even if an AI agent is deceived by malicious instructions found on the Internet (e.g., fake package installations), any potential damage is confined within the VM or limited to files specified to be mounted from the host.

There are two kinds of scenarios to run an AI agent with Lima: AI inside Lima, and AI outside Lima.

AI inside Lima

This is the most common scenario; just run an AI agent inside Lima. The documentation features several examples of hardening AI agents running in Lima:

A local LLM can be used too, with the GPU acceleration feature available in the krunkit VM driver.

AI outside Lima

This scenario refers to running an AI agent as a host process outside Lima. Lima covers this scenario by providing the MCP tools that intercept file accesses and command executions.

Getting started: AI inside Lima

This section introduces how to run an AI agent (Gemini CLI) inside Lima so as to prevent the AI from directly accessing host files and commands.

If you are using Homebrew, Lima can be installed using:

brew install lima

For other installation methods, see https://lima-vm.io/docs/installation/ .

An instance of the Lima virtual machine can be created and started by running `limactl start`. However, as the default configuration mounts the entire home directory from the host, it is highly recommended to limit the mount scope to the current directory (.), especially when running an AI agent:

mkdir -p ~/test

cd ~/test

limactl start --mount-only .

To allow writing to the mount directory, append the `:w` suffix to the mount specification:

limactl start --mount-only .:w

For example, you can run AI agents such as Gemini CLI. This can be installed and executed inside Lima using the `lima` commands as follows:

lima sudo snap install node --classic

lima sudo npm install -g @google/gemini-cli

lima gemini

Gemini CLI can arbitrarily read, write, and execute files inside the VM; however, it cannot access host files except mounted ones.

To run other AI agents, see https://lima-vm.io/docs/examples/ai/.

Istio at KubeCon + CloudNativeCon North America 2025: Community highlights and project progress

KubeCon + CloudNativeCon North America 2025 lit up Atlanta from November 10–13, bringing together one of the largest gatherings of open-source practitioners, platform engineers, and maintainers across the cloud native ecosystem. For the Istio community, the week was defined by packed rooms, long hallway conversations, and a genuine sense of shared progress across service mesh, Gateway API, security, and AI-driven platforms.

Before the main conference began, the community kicked things off with Istio Day on November 10, a colocated event filled with deep technical sessions, migration stories, and future-looking discussions that set the tone for the rest of the week.

Istio Day at KubeCon + CloudNativeCon NA

Istio Day brought together practitioners, contributors, and adopters for an afternoon of learning, sharing, and open conversations about where service mesh, and Istio, are headed next.

Istio Day opened with welcome remarks from the program co-chairs, setting the tone for an afternoon focused on real-world mesh evolution and the rapid growth of the Istio community. The agenda highlighted three major themes driving Istio’s future: AI-driven traffic patterns, the advancement of Ambient Mesh—including multicluster adoption, and modernizing traffic entry with Gateway API. Speakers across the ecosystem shared practical lessons on scaling, migration, reliability, and operating increasingly complex workloads with Istio.

The co-chairs closed the day by recognizing the speakers, contributors, and a community continuing to push service-mesh innovation forward. Recordings of all sessions are available at the CNCF YouTube channel.

Istio at KubeCon + CloudNativeCon

Outside of Istio Day, the project was highly visible across KubeCon + CloudNativeCon Atlanta, with maintainers, end users, and contributors sharing technical deep dives, production stories, and cutting-edge research. Istio appeared not only across expo booths and breakout sessions, but also throughout several of the keynotes, where companies showcased how Istio plays a critical role in powering their platforms at scale.

The week’s momentum hit its stride when the Istio community reconvened for the Istio Project Update, where project leads shared the latest releases and roadmap advances. In Istio: Set Sailing With Istio Without Sidecars, attendees explored how the sidecar-less Ambient Mesh architecture is rapidly moving from experiment to adoption, opening new possibilities for simpler deployments and leaner data planes.

The session Lessons Applied Building a Next-Generation AI Proxy took the crowd behind the scenes of how mesh technologies adapt to AI-driven traffic patterns. Over at Automated Rightsizing for Istio DaemonSet Workloads (Poster Session), practitioners gathered to compare strategies for optimizing control-plane resources, tuning for high scale, and reducing cost without sacrificing performance.

The narrative of traffic-management evolution featured prominently in Gateway API: Table Stakes and its faster sibling Know Before You Go! Speedrun Intro to Gateway API. Meanwhile, Return of the Mesh: Gateway API’s Epic Quest for Unity scaled that conversation: how traffic, API, mesh, and routing converge into one architecture that simplifies complexity rather than multiplies it.

For long-term reflection, 5 Key Lessons From 8 Years of Building Kgateway delivered hard-earned wisdom from years of system design. In GAMMA in Action: How Careem Migrated To Istio Without Downtime, the real-world migration story—a major production rollout that stayed up during transition—provided a roadmap for teams seeking safe mesh adoption at scale.

Safety and rollout risks took center stage in Taming Rollout Risks in Distributed Web Apps: A Location-Aware Gradual Deployment Approach, where strategies for regional rollouts, steering traffic, and minimizing user impact were laid out.

Finally, operations and day-two reality were tackled in End-to-End Security With gRPC in Kubernetes and On-Call the Easy Way With Agents, reminding everyone that mesh isn’t just about architecture, but about how teams run software safely, reliably, and confidently.

Community spaces: ContribFest, Maintainer Track and the Project Pavilion

At the Project Pavilion, the Istio kiosk was constantly buzzing, drawing users with questions about Ambient Mesh, AI workloads, and deployment best practices.

The Maintainer Track brought contributors together to collaborate on roadmap topics, triage issues, and discuss key areas of investment for the next year.

At ContribFest, new contributors joined maintainers to work through good-first issues, discuss contribution pathways, and get their first PRs lined up.

Istio maintainers recognized at the CNCF Community Awards

This year’s CNCF Community Awards were a proud moment for the project. Two Istio maintainers received well-deserved recognition:

Daniel Hawton — “Chop Wood, Carry Water” Award

John Howard — Top Committer Award

Beyond these awards, Istio was also represented prominently in conference leadership. Faseela K, one of the KubeCon + CloudNativeCon NA co-chairs and an Istio maintainer, participated in a keynote panel on Cloud Native for Good. During closing remarks, it was also announced that Lin Sun, another long-time Istio maintainer, will serve as an upcoming KubeCon + CloudNativeCon co-chair.

What we heard in Atlanta

Across sessions, kiosks, and hallways, a few themes emerged:

- Ambient Mesh is moving quickly from exploration to real-world adoption.

- AI workloads are reshaping traffic patterns and operational practices.

- Multicluster deployments are becoming standard, with stronger focus on identity and failover.

- Gateway API is solidifying as the future of modern traffic management.

- Contributor growth is accelerating, supported by ContribFest and hands-on community guidance.

Looking ahead

KubeCon + CloudNativeCon NA 2025 showcased a vibrant, rapidly growing community taking on some of the toughest challenges in cloud infrastructure—from AI traffic management to zero-downtime migrations, from planet-scale control planes to the next generation of sidecar-less mesh. As we look ahead to 2026, the momentum from Atlanta makes one thing clear: the future of service mesh is bright, and the Istio community is leading it together.

See you in Amsterdam!

Announcing Kyverno release 1.16

Kyverno 1.16 delivers major advancements in policy as code for Kubernetes, centered on a new generation of CEL-based policies now available in beta with a clear path to GA. This release introduces partial support for namespaced CEL policies to confine enforcement and minimize RBAC, aligning with least-privilege best practices. Observability is significantly enhanced with full metrics for CEL policies and native event generation, enabling precise visibility and faster troubleshooting. Security and governance get sharper controls through fine-grained policy exceptions tailored for CEL policies, and validation use cases broaden with the integration of an HTTP authorizer into ValidatingPolicy. Finally, we’re debuting the Kyverno SDK, laying the foundation for ecosystem integrations and custom tooling.

CEL policy types

CEL policies in beta

CEL policy types are introduced as v1beta1. The promotion plan provides a clear, non‑breaking path: v1 will be made available in 1.17 with GA targeted for 1.18. This release includes the cluster‑scoped family (Validating, Mutating, Generating, Deleting, ImageValidating) at v1beta1 and adds namespaced variants for the validating, deleting, and image-validating policies; namespaced Generating and Mutating will follow in 1.17. PolicyException and GlobalContextEntry will advance in step to keep versions aligned; see the promotion roadmap in this tracking issue.

Namespaced policies

Kyverno 1.16 introduces namespaced CEL policy types (NamespacedValidatingPolicy, NamespacedDeletingPolicy, and NamespacedImageValidatingPolicy), which mirror their cluster-scoped counterparts but apply only within the policy’s namespace. This lets teams enforce guardrails with least-privilege RBAC and without central changes, improving multi-tenancy and safety during rollout. Choose namespaced types for team-owned namespaces and cluster-scoped types for global controls.

Observability upgrades

CEL policies now have comprehensive, native observability for faster diagnosis:

- Validating policy execution latency. Metrics: kyverno_validating_policy_execution_duration_seconds_count, …_sum, …_bucket

  - What it measures: Time spent evaluating validating policies per admission/background execution as a Prometheus histogram.

  - Key labels: policy_name, policy_background_mode, policy_validation_mode (enforce/audit), resource_kind, resource_namespace, resource_request_operation (create/update/delete), execution_cause (admission_request/background_scan), result (PASS/FAIL).

- Mutating policy execution latency. Metrics: kyverno_mutating_policy_execution_duration_seconds_count, …_sum, …_bucket

  - What it measures: Time spent executing mutating policies (admission/background) as a Prometheus histogram.

  - Key labels: policy_name, policy_background_mode, resource_kind, resource_namespace, resource_request_operation, execution_cause, result.

- Generating policy execution latency. Metrics: kyverno_generating_policy_execution_duration_seconds_count, …_sum, …_bucket

  - What it measures: Time spent executing generating policies when evaluating requests or during background scans.

  - Key labels: policy_name, policy_background_mode, resource_kind, resource_namespace, resource_request_operation, execution_cause, result.

- Image-validating policy execution latency. Metrics: kyverno_image_validating_policy_execution_duration_seconds_count, …_sum, …_bucket

  - What it measures: Time spent evaluating image-related validating policies (e.g., image verification) as a Prometheus histogram.

  - Key labels: policy_name, policy_background_mode, resource_kind, resource_namespace, resource_request_operation, execution_cause, result.
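As a rough illustration of how these histogram series can be read, the sketch below computes a mean latency from the _sum and _count series and estimates a quantile from cumulative bucket counts using linear interpolation, similar in spirit to what Prometheus does for histogram quantiles. The bucket boundaries and sample values are invented for the example and are not Kyverno defaults.

```python
import math


def mean_latency(sum_seconds: float, count: int) -> float:
    """Average duration per execution: <metric>_sum / <metric>_count."""
    return sum_seconds / count if count else 0.0


def approx_quantile(q: float, buckets: list[tuple[float, int]]) -> float:
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets.

    Buckets must be sorted by upper bound and end with the +Inf bucket.
    Observations are assumed to be spread evenly inside each bucket.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0
    for bound, cumulative in buckets:
        if cumulative >= rank:
            if math.isinf(bound) or cumulative == prev_count:
                # No finite upper edge (or nothing) to interpolate towards.
                return prev_bound
            fraction = (rank - prev_count) / (cumulative - prev_count)
            return prev_bound + (bound - prev_bound) * fraction
        prev_bound, prev_count = bound, cumulative
    return prev_bound


# Hypothetical scrape of a policy latency histogram (values are made up).
SAMPLE_BUCKETS = [(0.01, 50), (0.05, 90), (0.1, 100), (math.inf, 100)]
SAMPLE_SUM, SAMPLE_COUNT = 2.5, 100

if __name__ == "__main__":
    print(f"mean: {mean_latency(SAMPLE_SUM, SAMPLE_COUNT):.3f}s")
    print(f"p95:  {approx_quantile(0.95, SAMPLE_BUCKETS):.3f}s")
```

In practice the same calculation would normally be done in the monitoring system itself, grouped by labels such as policy_name or execution_cause.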

CEL policies now emit Kubernetes Events for passes, violations, errors, and compile/load issues with rich context (policy/rule, resource, user, mode). This provides instant, kubectl-visible feedback and easier correlation with admission decisions and metrics during rollout and troubleshooting.

Fine-grained policy exceptions

Image-based exceptions

This exception uses the images attribute to allow Pods in the ci namespace to run images that match the provided patterns, while keeping the no-latest rule enforced for all other images. It narrows the bypass to specific namespaces and teams for auditability.

apiVersion: policies.kyverno.io/v1beta1
kind: PolicyException
metadata:
  name: allow-ci-latest-images
  namespace: ci
spec:
  policyRefs:
    - name: restrict-image-tag
      kind: ValidatingPolicy
  images:
    - "ghcr.io/kyverno/*:latest"
  matchConditions:
    - expression: "has(object.metadata.labels.team) && object.metadata.labels.team == 'platform'"

The following ValidatingPolicy references exceptions.allowedImages, which skips validation checks for allow-listed images:

apiVersion: policies.kyverno.io/v1beta1
kind: ValidatingPolicy
metadata:
  name: restrict-image-tag
spec:
  rules:
    - name: broker-config
      matchConstraints:
        resourceRules:
          - apiGroups: [apps]
            apiVersions: [v1]
            operations: [CREATE, UPDATE]
            resources: [pods]
      validations:
        - message: "Containers must not allow privilege escalation unless they are in the allowed images list."
          expression: >
            object.spec.containers.all(container,
              string(container.image) in exceptions.allowedImages ||
              (
                has(container.securityContext) &&
                has(container.securityContext.allowPrivilegeEscalation) &&
                container.securityContext.allowPrivilegeEscalation == false
              )
            )

Value-based exceptions

This exception supplies a list of values via allowedValues, which a CEL validation can consult for a constrained set of targets, so teams can proceed without weakening the entire policy.

apiVersion: policies.kyverno.io/v1beta1
kind: PolicyException
metadata:
  name: allow-debug-annotation
  namespace: dev
spec:
  policyRefs:
    - name: check-security-context
      kind: ValidatingPolicy
  allowedValues:
    - "debug-mode-temporary"
  matchConditions:
    - expression: "object.metadata.name.startsWith('experiments-')"

Here’s a policy that leverages the allowed values above. It denies resources unless every added capability is present in exceptions.allowedValues or in the policy’s own allowed list.

apiVersion: policies.kyverno.io/v1beta1
kind: ValidatingPolicy
metadata:
  name: check-security-context
spec:
  matchConstraints:
    resourceRules:
      - apiGroups: [apps]
        apiVersions: [v1]
        operations: [CREATE, UPDATE]
        resources: [deployments]
  variables:
    - name: allowedCapabilities
      expression: "['AUDIT_WRITE','CHOWN','DAC_OVERRIDE','FOWNER','FSETID','KILL','MKNOD','NET_BIND_SERVICE','SETFCAP','SETGID','SETPCAP','SETUID','SYS_CHROOT']"
  validations:
    - expression: >-
        object.spec.containers.all(container,
        container.?securityContext.?capabilities.?add.orValue([]).all(capability,
        capability in exceptions.allowedValues ||
        capability in variables.allowedCapabilities))
      message: >-
        Any capabilities added beyond the allowed list (AUDIT_WRITE, CHOWN, DAC_OVERRIDE, FOWNER,
        FSETID, KILL, MKNOD, NET_BIND_SERVICE, SETFCAP, SETGID, SETPCAP, SETUID, SYS_CHROOT)
        are disallowed.
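To make the evaluation order easier to follow, the capability check in this policy can be mirrored in plain Python. This is only an illustrative re-implementation, not how Kyverno evaluates CEL; the function names and the sample exception value NET_RAW are assumptions made for the example.

```python
ALLOWED_CAPABILITIES = frozenset({
    "AUDIT_WRITE", "CHOWN", "DAC_OVERRIDE", "FOWNER", "FSETID", "KILL",
    "MKNOD", "NET_BIND_SERVICE", "SETFCAP", "SETGID", "SETPCAP", "SETUID",
    "SYS_CHROOT",
})


def capabilities_allowed(obj: dict, exception_values: frozenset = frozenset()) -> bool:
    """Every added capability must be in the exception's allowedValues
    or in the policy's own allowed list, for every container."""
    for container in obj.get("spec", {}).get("containers", []):
        # Rough equivalent of
        # container.?securityContext.?capabilities.?add.orValue([])
        security_context = container.get("securityContext") or {}
        added = (security_context.get("capabilities") or {}).get("add") or []
        for capability in added:
            if capability not in exception_values and capability not in ALLOWED_CAPABILITIES:
                return False
    return True


def make_object(*capabilities: str) -> dict:
    """Build a minimal object whose single container adds the given capabilities."""
    return {"spec": {"containers": [
        {"name": "app", "securityContext": {"capabilities": {"add": list(capabilities)}}}
    ]}}


if __name__ == "__main__":
    print(capabilities_allowed(make_object("CHOWN", "NET_RAW")))
    print(capabilities_allowed(make_object("CHOWN", "NET_RAW"), frozenset({"NET_RAW"})))
```

The second call shows the effect of a matching exception: a value that the policy would otherwise reject is accepted because it appears in the exception's list.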

Configurable reporting status

This exception sets reportResult: pass, so when it matches, Policy Reports show “pass” rather than the default “skip”, improving dashboards and SLO signals during planned waivers.

apiVersion: policies.kyverno.io/v1beta1
kind: PolicyException
metadata:
  name: exclude-skipped-deployment-2
  labels:
    polex.kyverno.io/priority: "0.2"
spec:
  policyRefs:
    - name: "with-multiple-exceptions"
      kind: ValidatingPolicy
  matchConditions:
    - name: "check-name"
      expression: "object.metadata.name == 'skipped-deployment'"
  reportResult: pass

Kyverno Authz Server

Beyond enriching admission-time validation, Kyverno now extends policy decisions to your service edge. The Kyverno Authz Server applies Kyverno policies to authorize requests for Envoy (via the External Authorization filter) and for plain HTTP services as a standalone HTTP authorization server, returning allow/deny decisions based on the same policy engine you use in Kubernetes. This unifies policy enforcement across admission, gateways, and services, enabling consistent guardrails and faster adoption without duplicating logic. See the project page for details: kyverno/kyverno-authz.

Introducing the Kyverno SDK

Alongside embedding CEL policy evaluation in controllers, CLIs, and CI, there’s now a companion SDK for service-edge authorization. The SDK lets you load Kyverno policies, compile them, and evaluate incoming requests to produce allow/deny decisions with structured results—powering Envoy External Authorization and plain HTTP services without duplicating policy logic. It’s designed for gateways, sidecars, and app middleware with simple Go APIs, optional metrics/hooks, and a path to unify admission-time and runtime enforcement. Note that kyverno-authz is still in active development; start with non-critical paths and add strict timeouts as you evaluate. See the SDK package for details: kyverno SDK.

Other features and enhancements

Label-based reporting configuration

Kyverno now supports label-based report suppression. Add the label reports.kyverno.io/disabled (any value, e.g., “true”) to any policy (ClusterPolicy, CEL policy types, ValidatingAdmissionPolicy, or MutatingAdmissionPolicy) to prevent all reporting (both ephemeral and PolicyReports) for that policy. This lets teams silence noisy or staging policies without changing enforcement; remove the label to resume reporting.

Use Kyverno CEL libraries in policy matchConditions

Kyverno 1.16 enables Kyverno CEL libraries in policy matchConditions, not just in rule bodies, so you can target when rules run using richer, context-aware checks. These expressions are evaluated by Kyverno but are not used to build admission webhook matchConditions—webhook routing remains unchanged.

Getting started and backward compatibility

Upgrading to Kyverno 1.16

To upgrade to Kyverno 1.16, you can use Helm:

helm repo update

helm upgrade --install kyverno kyverno/kyverno -n kyverno --version 3.6.0

Backward compatibility

Kyverno 1.16 remains fully backward compatible with existing ClusterPolicy resources. You can continue running current policies and adopt the new policy types incrementally; once CEL policy types reach GA, the legacy ClusterPolicy API will enter a formal deprecation process following our standard, non‑breaking schedule.

Roadmap

We’re building on 1.16 with a clear, low‑friction path forward. In 1.17, CEL policy types will be available as v1, and migration tooling and docs will focus on making upgrades routine. We will continue to expand CEL libraries, samples, and performance optimizations, and to mature the SDK and kyverno‑authz so that admission‑time and runtime enforcement paths are unified. See the release board for the in‑flight work and timelines: Release 1.17.0 Project Board.

Conclusion

Kyverno 1.16 marks a pivotal step toward a unified, CEL‑powered policy platform: you can adopt the new policy types in beta today, move enforcement closer to teams with namespaced policies, and gain sharper visibility with native Events and detailed latency metrics. Fine‑grained exceptions make rollouts safer without weakening guardrails, while label‑based report suppression and CEL in matchConditions reduce noise and let you target policy execution precisely.

Looking ahead, the path to v1 and GA is clear, and the ecosystem is expanding with the Kyverno Authz Server and SDK to bring the same policy engine to gateways and services. Upgrade when ready, start with audits where useful, and tell us what you build—your feedback will shape the final polish and the journey to GA.

OpenFGA Becomes a CNCF Incubating Project

The CNCF Technical Oversight Committee (TOC) has voted to accept OpenFGA as a CNCF incubating project.

What is OpenFGA?

OpenFGA is an authorization engine that addresses the challenge of implementing complex access control at scale in modern software applications. Inspired by Google’s global access control system, Zanzibar, OpenFGA leverages Relationship-Based Access Control (ReBAC). This allows developers to define permissions based on relationships between users and objects (e.g., who can view which document). By serving as an external service with an API and multiple SDKs, it centralizes and abstracts the authorization logic out of the application code. This separation of concerns significantly improves developer velocity by simplifying security implementation and ensures that access rules are consistent, scalable, and easy to audit across all services, solving a critical complexity problem for developers building distributed systems.
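The relationship-based idea can be sketched in a few lines of Python. The snippet below is a toy in-memory illustration, not the OpenFGA API or its modeling language: relationship tuples record who relates to what, and a check follows an assumed rule that owners are also editors and editors are also viewers. All names and data are invented for the example.

```python
from typing import NamedTuple


class RelTuple(NamedTuple):
    user: str      # e.g. "user:anne"
    relation: str  # e.g. "editor"
    obj: str       # e.g. "document:roadmap"


# Hypothetical rule for the example: holding any relation on the right
# also grants the relation on the left.
IMPLIED_BY = {"viewer": {"editor", "owner"}, "editor": {"owner"}}


def check(tuples: set[RelTuple], user: str, relation: str, obj: str) -> bool:
    """Return True if `user` has `relation` on `obj`, directly or via an implied relation."""
    if RelTuple(user, relation, obj) in tuples:
        return True
    return any(
        check(tuples, user, stronger, obj)
        for stronger in IMPLIED_BY.get(relation, ())
    )


STORE = {
    RelTuple("user:anne", "owner", "document:roadmap"),
    RelTuple("user:bob", "viewer", "document:roadmap"),
}

if __name__ == "__main__":
    print(check(STORE, "user:anne", "viewer", "document:roadmap"))
    print(check(STORE, "user:bob", "editor", "document:roadmap"))
```

The application only asks the question ("can this user view this document?"); the rules that answer it live outside the application code, which is the separation of concerns described above.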

OpenFGA’s History

OpenFGA was developed by a group of Okta employees, and is the foundation for the Auth0 FGA commercial offering.

The project was accepted as a CNCF Sandbox project in September 2022. Since then, it has been deployed by hundreds of companies and received multiple contributions. Some major moments and updates include:

- 37 companies publicly acknowledge using it in production.

- Engineers from Grafana Labs and GitPod have become official maintainers.

- OpenFGA was invited to present on the Maintainer’s track at KubeCon + CloudNativeCon Europe 2025.

- A MySQL storage adapter was contributed by TwinTag and a SQLite storage adapter was contributed by Grafana Labs.

- OpenFGA started hosting a monthly OpenFGA community meeting in April 2023.

- Several developer experience improvements, like:

- New SDKs for Python and Java

- IDE integrations with VS Code and IntelliJ

- A CLI with support for model testing

- A Terraform Provider was donated to the project by Maurice Ackel

- A new caching implementation and multiple performance improvements shipped over the last year.

- OpenFGA also added the ListObjects endpoint to retrieve all resources that a user has a specific relation with, and the ListUsers endpoint to retrieve all users that have a specific relation with a resource.
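Conceptually, the two list endpoints are inverse lookups over the same relationship data. The following toy Python sketch shows the idea on an in-memory set of tuples; it is not the OpenFGA API, the data is invented, and it ignores the model-driven relation evaluation that a real server performs.

```python
# Each tuple is (user, relation, object); the data is made up for illustration.
TUPLES = {
    ("user:anne", "viewer", "document:roadmap"),
    ("user:anne", "viewer", "document:budget"),
    ("user:bob", "viewer", "document:roadmap"),
    ("user:bob", "editor", "document:budget"),
}


def list_objects(user: str, relation: str, object_type: str) -> list[str]:
    """All objects of `object_type` that `user` has `relation` with."""
    prefix = object_type + ":"
    return sorted(
        o for (u, r, o) in TUPLES
        if u == user and r == relation and o.startswith(prefix)
    )


def list_users(relation: str, obj: str) -> list[str]:
    """All users that have `relation` with `obj`."""
    return sorted(u for (u, r, o) in TUPLES if r == relation and o == obj)


if __name__ == "__main__":
    print(list_objects("user:anne", "viewer", "document"))
    print(list_users("viewer", "document:roadmap"))
```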

Further, OpenFGA integrates with multiple CNCF projects:

- OpenTelemetry for tracing and telemetry

- Helm for deployment

- Grafana dashboards for monitoring

- Prometheus for metrics collection

- ArtifactHub for Helm chart distribution

Maintainer Perspective

“Seeing companies successfully deploy OpenFGA in production demonstrates its viability as an authorization solution. Our focus now is on growth. CNCF Incubation provides increased credibility and visibility – attracting a broader set of contributors and helping secure long-term sustainability. We anticipate this phase supporting us collectively build the definitive and centralized service for fine-grained authorization that the cloud native ecosystem can continue to trust.”

— Andres Aguiar, OpenFGA Maintainer and Director of Product at Okta

“When Grafana adopted OpenFGA the community was incredibly welcoming, and we’ve been fortunate to collaborate on enhancements like SQLite support. We are excited to work with CNCF to continue the evolution of the OpenFGA platform.”

— Dan Cech, Distinguished Engineer, Grafana Labs

From the TOC

“Authorization is one of the most complex and critical problems in distributed systems, and OpenFGA provides a clean, scalable solution that developers can actually adopt. Its ReBAC model and API-first approach simplify how teams think about access control, removing layers of custom logic from applications. What impressed me most during the due diligence process was the project’s momentum—strong community growth, diverse maintainers, and real-world production deployments. OpenFGA is quickly becoming a foundational building block for secure, cloud native applications.”

— Ricardo Aravena, CNCF TOC Sponsor

“As the TOC Sponsor for OpenFGA’s incubation, I’ve had the opportunity to work closely with the maintainers and see their deep technical rigor and commitment to excellence firsthand. OpenFGA reflects the kind of thoughtful engineering and collaboration that drives the CNCF ecosystem forward. By externalizing authorization through a developer-friendly API, OpenFGA empowers teams to scale security with the same agility as their infrastructure. Throughout the incubation process, the maintainers have been exceptionally responsive and precise in addressing feedback, demonstrating the project’s maturity and readiness for broader adoption. With growing adoption and strong technical foundations, I’m excited to see how the OpenFGA community continues to expand its capabilities and help organizations strengthen access control across cloud native environments.”

— Faseela Kundattil, CNCF TOC Sponsor

Main Components

Some main components of the project include:

- The OpenFGA server designed to answer authorization requests fast and at scale

- SDKs for Go, .NET, JS, Java, Python

- A CLI to interact with the OpenFGA server and test authorization models

- Helm Charts to deploy to Kubernetes

- Integrations with VS Code and JetBrains

Notable Milestones

- 4,300+ GitHub Stars

- 2246 Pull Requests

- 459 Issues

- 96 Contributors, 652 across repositories

- 89 Releases

Looking Ahead

OpenFGA is a database, and as with any database, there will always be work to improve performance for every type of query. Future goals of the roadmap are to make it simpler for maintainers to contribute to SDKs; launch new SDKs for Ruby, Rust, and PHP; add support for the AuthZen standard; add new visualization options and open source the OpenFGA playground tool; improve observability; add streaming API endpoints for better performance; and include more robust error handling with new write-conflict options.

You can learn more about OpenFGA here.

As a CNCF-hosted project, OpenFGA is part of a neutral foundation aligned with its technical interests, as well as the larger Linux Foundation, which provides governance, marketing support, and community outreach. OpenFGA joins incubating technologies Backstage, Buildpacks, cert-manager, Chaos Mesh, CloudEvents, Container Network Interface (CNI), Contour, Cortex, CubeFS, Dapr, Dragonfly, Emissary-Ingress, Falco, gRPC, in-toto, Keptn, Keycloak, Knative, KubeEdge, Kubeflow, KubeVela, KubeVirt, Kyverno, Litmus, Longhorn, NATS, Notary, OpenFeature, OpenKruise, OpenMetrics, OpenTelemetry, Operator Framework, Thanos, and Volcano. For more information on maturity requirements for each level, please visit the CNCF Graduation Criteria.

Self-hosted human and machine identities in Keycloak 26.4

Keycloak is a leading open source solution in the cloud-native ecosystem for Identity and Access Management, a key component of accessing applications and their data.

With the release of Keycloak 26.4, we’ve added features for both machine and human identities. New features focus on security enhancement, deeper integration, and improved server administration. See below for the release highlights, or dive deeper in our Keycloak 26.4 release announcement.

Keycloak recently surpassed 30k GitHub stars and 1,350 contributors. If you’re attending KubeCon + CloudNativeCon North America in Atlanta, stop by and say hi—we’d love to hear how you’re using Keycloak!

What’s New in 26.4

Passwordless user authentication with Passkeys

Keycloak now offers full support for Passkeys. As secure, passwordless authentication becomes the new standard, we’ve made passkeys simple to configure. For environments that are unable to adopt passkeys, Keycloak continues to support OTP and recovery codes. You can find a passkey walkthrough on the Keycloak blog.

Tightened OpenID Connect security with FAPI 2 and DPoP

Keycloak 26.4 implements the Financial-grade API (FAPI) 2.0 standard, ensuring strong security best practices. This includes support for Demonstrating Proof-of-Possession (DPoP), which is a safer way to handle tokens in public OpenID Connect clients.

Simplified deployments across multiple availability zones

Deployment across multiple availability zones or data centers is simplified in 26.4:

- Split-brain detection

- Full support in the Keycloak Operator

- Latency optimizations when Keycloak nodes run in different data centers

Keycloak docs contain a full step-by-step guide, and we published a blog post on how to scale to 2,000 logins/sec and 10,000 token refreshes/sec.

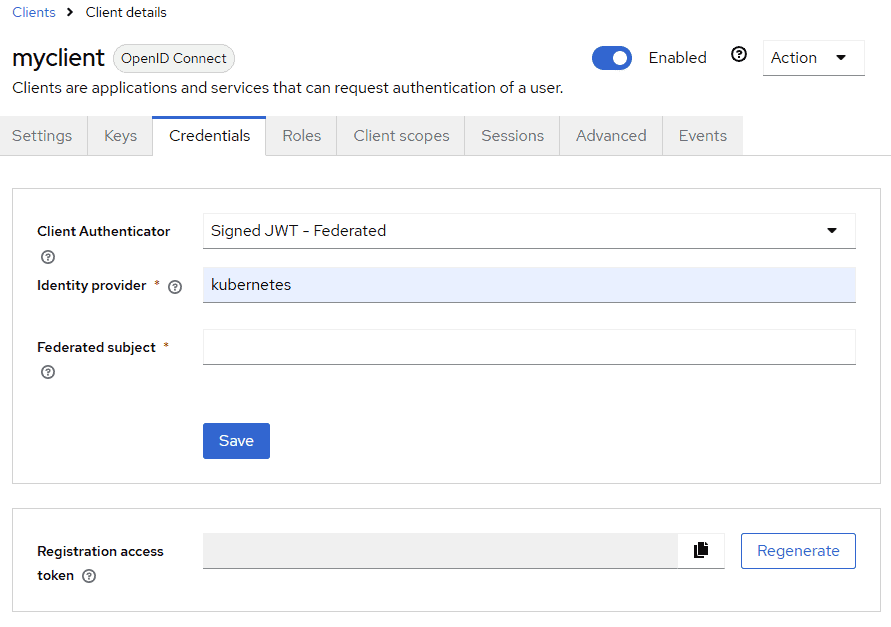

Authenticating applications with Kubernetes service account tokens or SPIFFE

When applications interact with Keycloak around OpenID Connect, each confidential server-side application needs credentials. This usually comes with the churn to distribute and rotate them regularly.

With 26.4, you can use Kubernetes service account tokens, which are automatically distributed to each Pod when running on Kubernetes. This removes the need to distribute and rotate an extra pair of credentials. For use cases inside and outside Kubernetes, you can also use SPIFFE.

To test this preview feature:

- Enable the features client-auth-federated:v1, spiffe:v1, and kubernetes-service-accounts:v1.

- Register a Kubernetes or SPIFFE identity provider in Keycloak.

- For a client registered in Keycloak, configure the Client Authenticator in the Credentials tab as Signed JWT – Federated, referencing the identity provider created in the previous step and the expected subject in the JWT.
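When filling in the expected subject, it can help to look at what a token actually carries. The sketch below decodes the payload of a JWT without verifying its signature, purely for inspection; Keycloak performs the real validation. The sample token is built locally for the example, and its namespace and service account names are made up. Kubernetes service account tokens use subjects of the form system:serviceaccount:<namespace>:<name>.

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(data: dict) -> str:
    """Encode a dict as an unpadded base64url JSON segment (for the sample only)."""
    raw = json.dumps(data).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def unverified_claims(token: str) -> dict:
    """Return the JWT payload as a dict WITHOUT checking the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated segments")
    return json.loads(_b64url_decode(parts[1]))


# Locally built sample token with made-up names and a placeholder signature.
SAMPLE_TOKEN = ".".join([
    _b64url_encode({"alg": "RS256", "typ": "JWT"}),
    _b64url_encode({"sub": "system:serviceaccount:demo:my-app"}),
    "signature-placeholder",
])

if __name__ == "__main__":
    print(unverified_claims(SAMPLE_TOKEN)["sub"])
```

Never use unverified claims for an authorization decision; this helper is only meant for checking which subject value to configure.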

Looking ahead

Keycloak’s roadmap includes:

- Improved MCP support to better support AI agents

- Improved token exchange

- Automating administrative tasks using workflows triggered by authentication events.

You can follow our journey at keycloak.org and get involved. Our nightly builds give you early access to Keycloak’s latest features.

Connecting distributed Kubernetes with Cilium and SD-WAN: Building an intelligent network fabric

Learn how Kubernetes-native traffic management and SD-WAN integration can deliver consistent security, observability, and performance across distributed clusters.

The challenge of distributed Kubernetes networking

Modern businesses are rapidly adopting distributed architectures to meet growing demands for performance, resilience, and global reach. This shift is driven by emerging workloads that demand distributed infrastructure: AI/ML model training distributed across GPU clusters, real-time edge analytics processing IoT data streams, and global enterprise operations that require seamless connectivity across on-premises workloads, data centers, cloud providers, and edge locations.

Today businesses are increasingly struggling to ensure secure, reliable and high-performance global connectivity while maintaining visibility across this distributed infrastructure. How do you maintain consistent end-to-end policies when applications traverse multiple network boundaries? How do you optimize performance for latency-sensitive critical applications when they could be running anywhere? And how do you gain clear visibility into application communication across this complex, multi-cluster, multi-cloud landscape?

This is where a modern, integrated approach to networking becomes essential, one that understands both the intricacies of Kubernetes and the demands of wide-area connectivity. Let’s explore a proposal for seamlessly bridging your Kubernetes clusters, regardless of location, while intelligently managing the underlying network paths. Such an integrated approach solves several critical business needs:

- Unified security posture: Consistent policy enforcement from the wide-area network down to individual microservices.

- Optimized performance: Intelligent traffic routing that adapts to real-time conditions and application requirements.

- Global visibility: End-to-end observability across all layers of the network stack.

In this post we discuss how to interconnect Cilium with a Software-Defined Wide Area Network (SD-WAN) fabric to extend Kubernetes-native traffic management and security policies into the underlying network interconnect. Learn how such integration simplifies operations while delivering the performance and security modern distributed workloads demand.

Towards an intelligent network fabric

Imagine a globally distributed service deployed across dozens of locations worldwide. Latency-critical microservices are deployed at the edge, critical workloads run on-premises for data protection, while elastic services leverage public cloud scalability. These components must constantly communicate across cluster boundaries: IoT streams flow to central management, customer data replicates across regions for sovereignty compliance, and real-time analytics span multiple sites.

Bridging Kubernetes and SD-WAN with Cilium

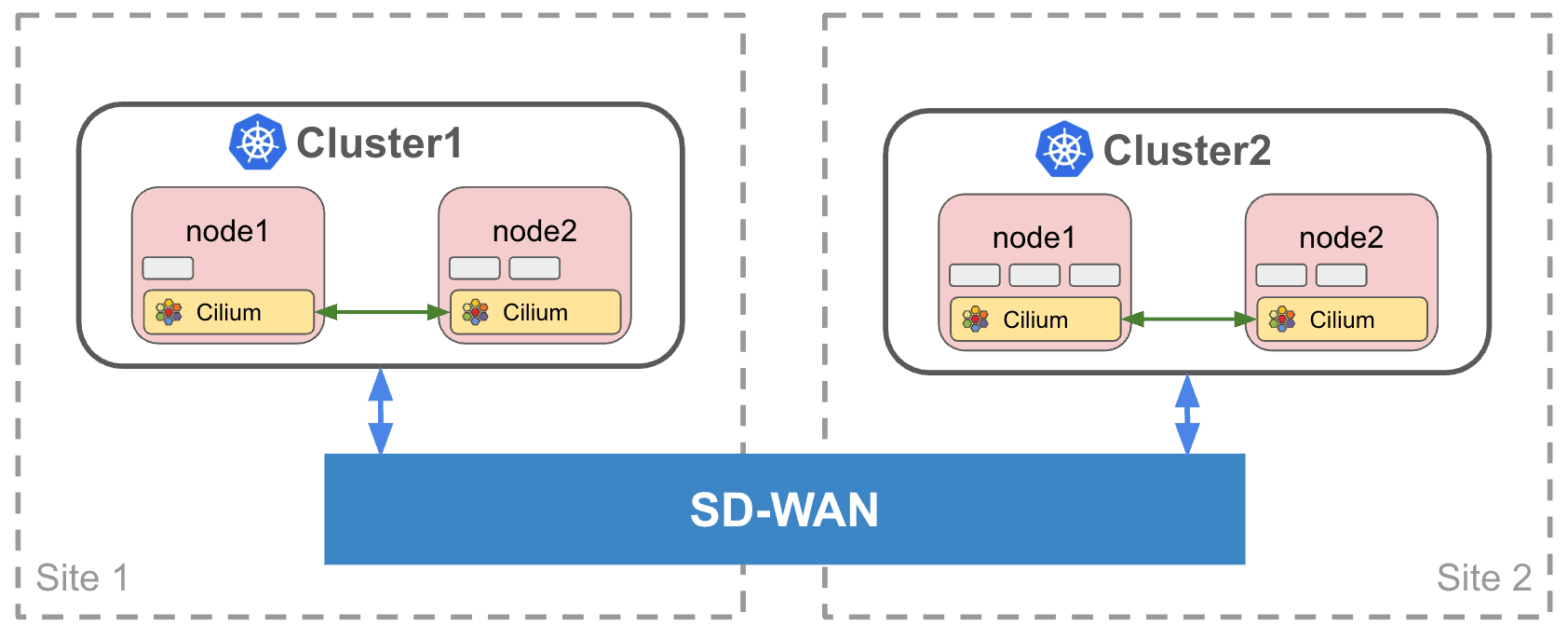

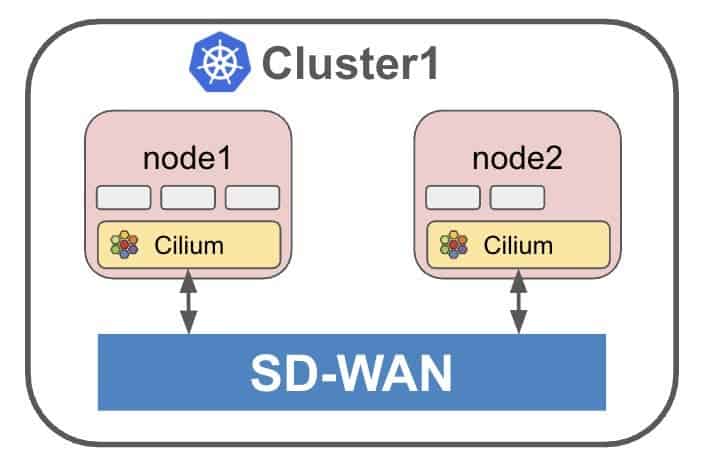

Enter Cilium, a universal networking layer connecting Kubernetes workloads, VMs, and physical servers across clouds, data centers, and edge locations. Simply mark a service as “global” and Cilium ensures its availability throughout your distributed multi-cluster infrastructure (Figure 1). But even single-cluster Kubernetes deployments may benefit from an intelligent WAN interconnect, when different nodes and physical servers of the same cluster may run at multiple geographically diverse locations (Figure 2). No matter at which location a service is running, Cilium intelligently routes and balances load across the entire deployment.

Yet a critical gap remains: controlling how traffic traverses the underlying network interconnect. A modern SD-WAN implementation (such as Cisco Catalyst SD-WAN) could deliver the intelligent interconnect these services need by providing performance guarantees and traffic differentiation for SD-WAN tunnels between sites. Unfortunately, leveraging these capabilities in a Kubernetes-native way remains a challenge today.

Figure 1: Multi-cluster scenario: An SD-WAN connects multiple Kubernetes clusters.

Figure 2: Single-cluster scenario: An SD-WAN fabric interconnects geographically distributed nodes of a single Kubernetes cluster.

We suggest leveraging the concept of a Kubernetes operator to bridge this divide. Continuously monitoring the Kubernetes API, the operator could translate service requirements into SD-WAN policies, automatically mapping inter-cluster traffic to appropriate network paths based on your declared intent. Simply annotate your Kubernetes service manifests, and the operator handles the rest. For the purposes of this guide we will use a minimalist SD-WAN operator. Other SD-WAN operators (such as AWI Catalyst SD-WAN Operator) offer varying degrees of Kubernetes integration.

The role of a Kubernetes operator

Need guaranteed performance for business-critical global services? One service annotation will route traffic through a dedicated SD-WAN tunnel, bypassing congestion and bottlenecks. Require encryption for sensitive data flows? Another annotation ensures tamper-resistant paths between clusters. In general, such an intelligent cloud SD-WAN interconnect would provide the following features:

- Map services to specific SD-WAN tunnels for optimized routing (see below).

- Provide end-to-end Service Level Objectives (SLOs) across sites and nodes.

- Implement comprehensive monitoring to track service health and performance across the entire network.

- Enable selective traffic inspection or blocking on the interconnect for enhanced security and compliance.

- Isolate tenants’ inter-cluster traffic in distributed multi-tenant workloads.

A Kubernetes operator can bring these capabilities, and many more, into the Kubernetes ecosystem, maintaining the declarative, GitOps-friendly approach cloud-native teams expect.

Enforcing traffic policies with Cilium and Cisco Catalyst SD-WAN

In this guide, we demonstrate how an operator can enforce granular traffic policies for Kubernetes services using Cilium and Cisco Catalyst SD-WAN. The setup ensures secure, prioritized routing for business-critical services while allowing best-effort traffic to use default paths.

We will assume that SD-WAN connectivity is established between the clusters/nodes so that the SD-WAN interconnects all Kubernetes deployment sites (nodes/clusters) and routes pod-to-pod traffic seamlessly across the WAN. We further assume Cilium is configured in Native Routing Mode so that we have full visibility into the traffic that travels between the clusters/nodes in the SD-WAN.

Once installed, the SD-WAN operator will automatically generate SD-WAN policies based on your Kubernetes service configurations. This seamless integration ensures that your network policies adapt dynamically as your Kubernetes environment evolves.

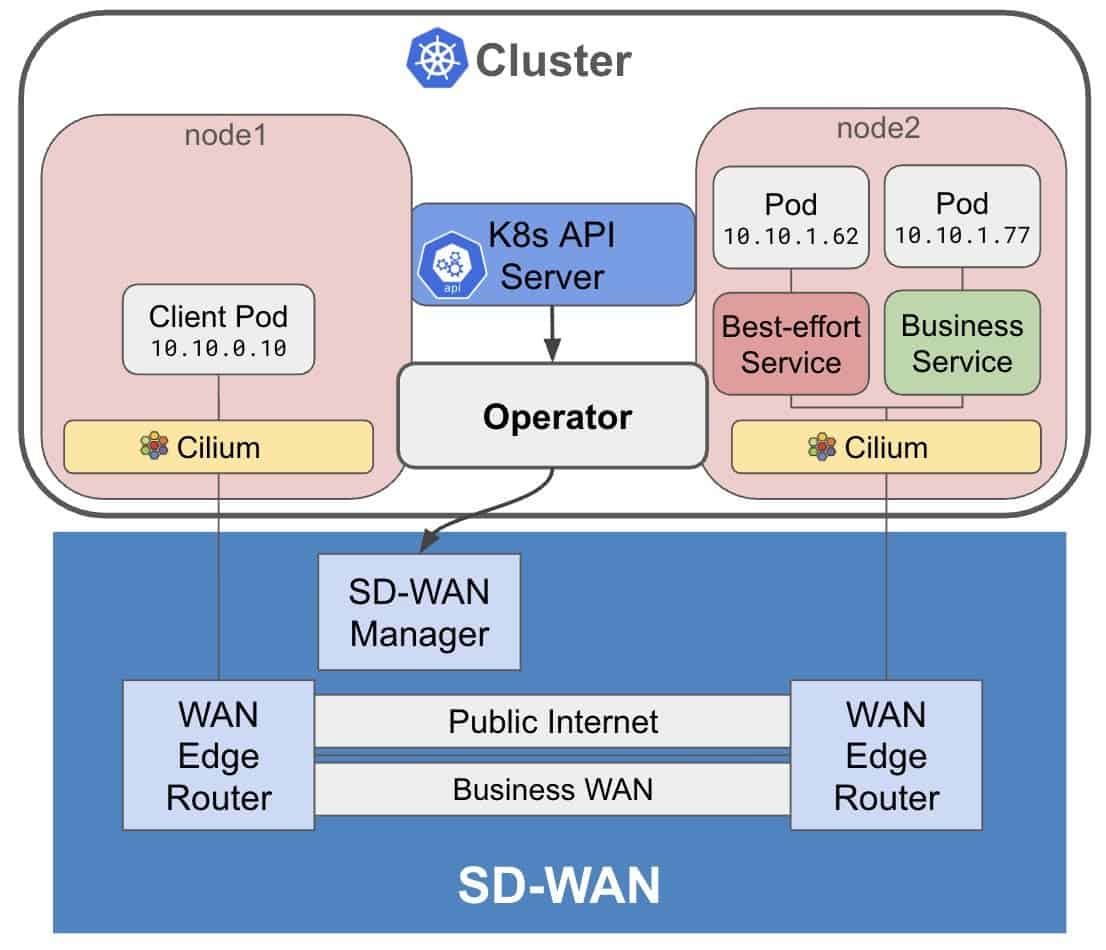

To illustrate, let’s look at a demo environment (see Figure 3) featuring:

- A Kubernetes cluster with two nodes deployed in the “single-cluster scenario” (Figure 2).

- Nodes interconnected via Cilium, running over two distinct SD-WAN tunnels.

In this setup, as you define or update Kubernetes services, the operator will automatically program the underlying SD-WAN fabric to enforce the appropriate connectivity and security policies for your workloads.

Figure 3: A simplified demo setup with a single Kubernetes cluster whose two nodes are interconnected with Cilium and a modern SD-WAN implementation (such as Cisco Catalyst SD-WAN). Note: This pattern extends seamlessly to multi-cluster deployments.
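The post does not describe how the demo operator keeps the SD-WAN in sync as services are defined or updated. One common operator pattern is to compare the desired policies with those already applied and act only on the difference. The following is a minimal sketch of that idea under stated assumptions: the `reconcile` function and the `(service, tunnel)` tuple representation are hypothetical and not taken from the demo operator.

```python
def reconcile(desired, applied):
    """Return (to_add, to_remove) so that 'applied' converges to 'desired'.

    Both arguments are iterables of hashable policy keys; here a policy
    is modelled as a hypothetical (service_name, tunnel_name) tuple.
    """
    desired, applied = set(desired), set(applied)
    to_add = sorted(desired - applied)      # policies missing on the SD-WAN
    to_remove = sorted(applied - desired)   # stale policies to withdraw
    return to_add, to_remove


# Example: "payments" was just annotated, "legacy" lost its annotation.
applied = {("business", "bizinternet"), ("legacy", "bizinternet")}
desired = {("business", "bizinternet"), ("payments", "bizinternet")}
to_add, to_remove = reconcile(desired, applied)
print("add:", to_add)
print("remove:", to_remove)
```

Computing a diff rather than re-pushing everything keeps each update small and makes repeated runs idempotent: running `reconcile` again after the changes are applied yields two empty lists.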

End-to-end policy enforcement example

Within the cluster, we will deploy two services, each with specific connectivity and security requirements:

- Best-effort service: Designed for non-sensitive workloads, this service leverages standard network connectivity. It is ideal for applications where best-effort delivery is sufficient and there are no stringent security or performance requirements.

- Business service: This service handles business-critical traffic that requires reliable performance. To maintain stringent service levels, all traffic for the Business Service must be routed exclusively through the dedicated Business WAN (bizinternet) SD-WAN tunnel. This provides optimized network performance and strong isolation from general-purpose traffic, so that critical communications remain secure and uninterrupted.

By tailoring network policies to the unique needs of each service, we achieve both operational efficiency for routine workloads and robust protection for sensitive business data.

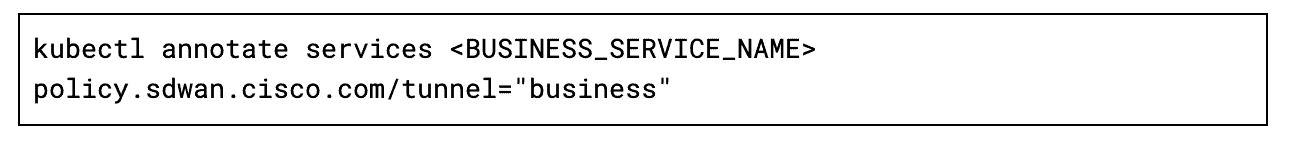

By default, all traffic crossing the cluster boundary uses the default tunnel. To ensure that the Business Service uses the Business WAN, we only need to add a Kubernetes annotation to the corresponding Kubernetes Service:

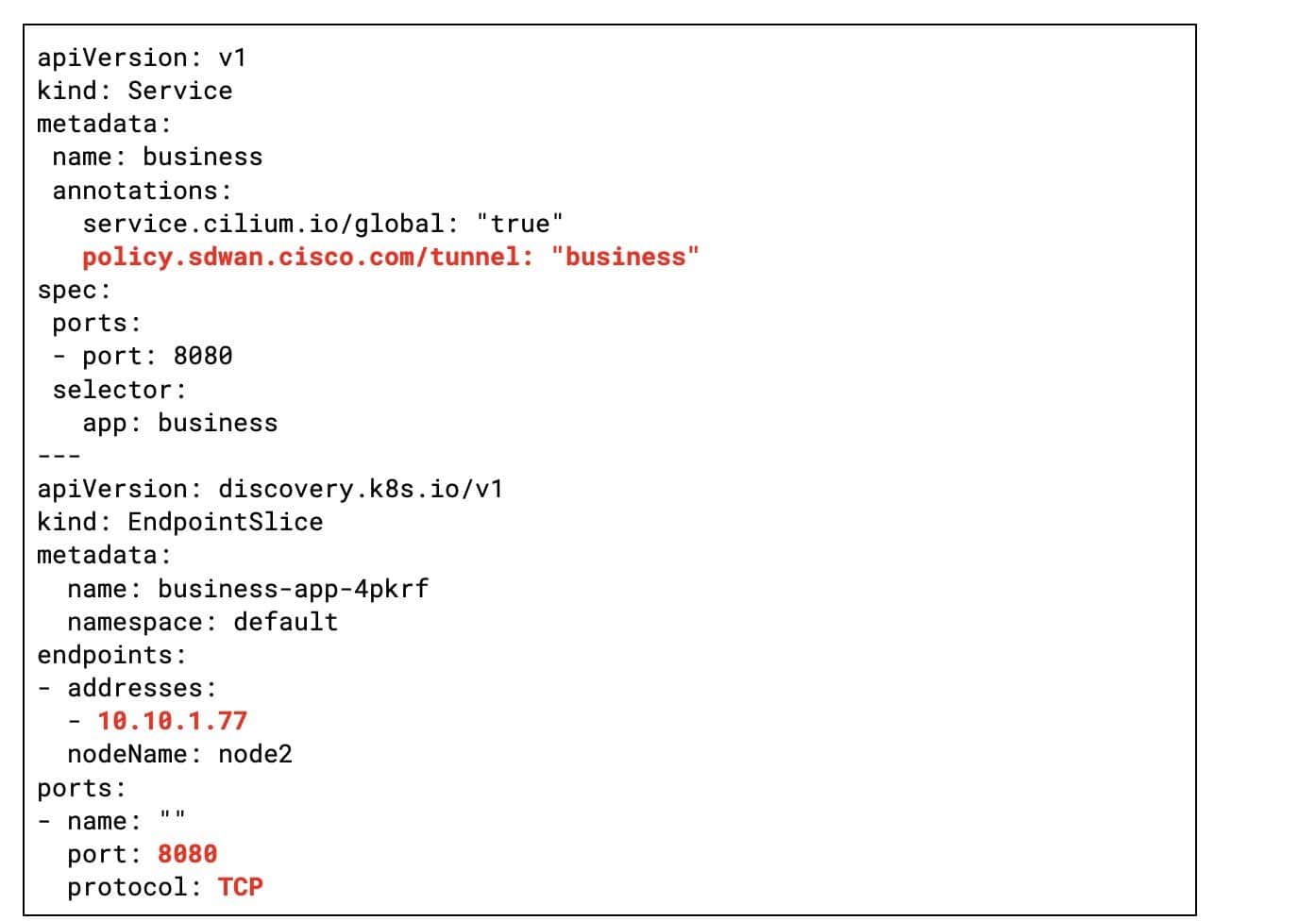
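For readers without access to the image, the idea can be sketched in a few lines of Python. This is an illustration only: the annotation key `example.com/sdwan-tunnel`, the service names, and the ports are hypothetical placeholders, since the actual key used by the demo operator appears only in the figure.

```python
import json

# Hypothetical annotation key; the real key is not given in the text.
TUNNEL_ANNOTATION = "example.com/sdwan-tunnel"
DEFAULT_TUNNEL = "default"


def make_service(name, port, tunnel=None):
    """Build a minimal Service manifest, optionally annotated with a tunnel."""
    metadata = {"name": name}
    if tunnel is not None:
        metadata["annotations"] = {TUNNEL_ANNOTATION: tunnel}
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": metadata,
        "spec": {"selector": {"app": name}, "ports": [{"port": port}]},
    }


def requested_tunnel(service):
    """Return the tunnel named by the annotation, or the default tunnel."""
    annotations = service["metadata"].get("annotations") or {}
    return annotations.get(TUNNEL_ANNOTATION, DEFAULT_TUNNEL)


best_effort = make_service("best-effort", 8080)
business = make_service("business", 8443, tunnel="bizinternet")

print(json.dumps(business["metadata"], indent=2))
print(requested_tunnel(best_effort), requested_tunnel(business))
```

A service without the annotation falls back to the default tunnel, which mirrors the behavior described above for best-effort traffic.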

How does this work? The SD-WAN operator watches Service objects, extracts endpoint IPs/ports from the Business Service pods, and dynamically programs the SD-WAN to enforce the business tunnel policy (see Figure 4).

Figure 4: The Kubernetes objects read by the SD-WAN operator to instantiate the SD-WAN rules for the business service.
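The extraction step can be sketched as a small pure function. The sketch assumes the operator reads endpoint addresses and ports from objects shaped like Kubernetes EndpointSlices; the post does not say which objects the demo operator actually reads, and `TunnelRule` and `rules_for_service` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TunnelRule:
    """Traffic to dst_ip:dst_port over 'protocol' is steered into 'tunnel'."""
    dst_ip: str
    dst_port: int
    protocol: str
    tunnel: str


def rules_for_service(endpoint_slices, tunnel):
    """Expand EndpointSlice-like dicts into one rule per (address, port)."""
    rules = set()
    for es in endpoint_slices:
        ports = es.get("ports", [])
        for endpoint in es.get("endpoints", []):
            # Skip endpoints explicitly marked as not ready.
            if endpoint.get("conditions", {}).get("ready") is False:
                continue
            for address in endpoint.get("addresses", []):
                for port in ports:
                    rules.add(TunnelRule(address, port["port"],
                                         port.get("protocol", "TCP"), tunnel))
    return sorted(rules, key=lambda r: (r.dst_ip, r.dst_port, r.protocol))


# Illustrative data: one ready pod and one pod that is not ready.
slices = [{
    "endpoints": [
        {"addresses": ["10.0.1.12"], "conditions": {"ready": True}},
        {"addresses": ["10.0.2.7"], "conditions": {"ready": False}},
    ],
    "ports": [{"port": 8443, "protocol": "TCP"}],
}]
rules = rules_for_service(slices, "bizinternet")
print(rules)
```

Skipping endpoints that are not ready is a design choice made here for the sketch; an operator could equally program rules for all endpoints to avoid churn during restarts.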

Future directions: Observability and SLO awareness

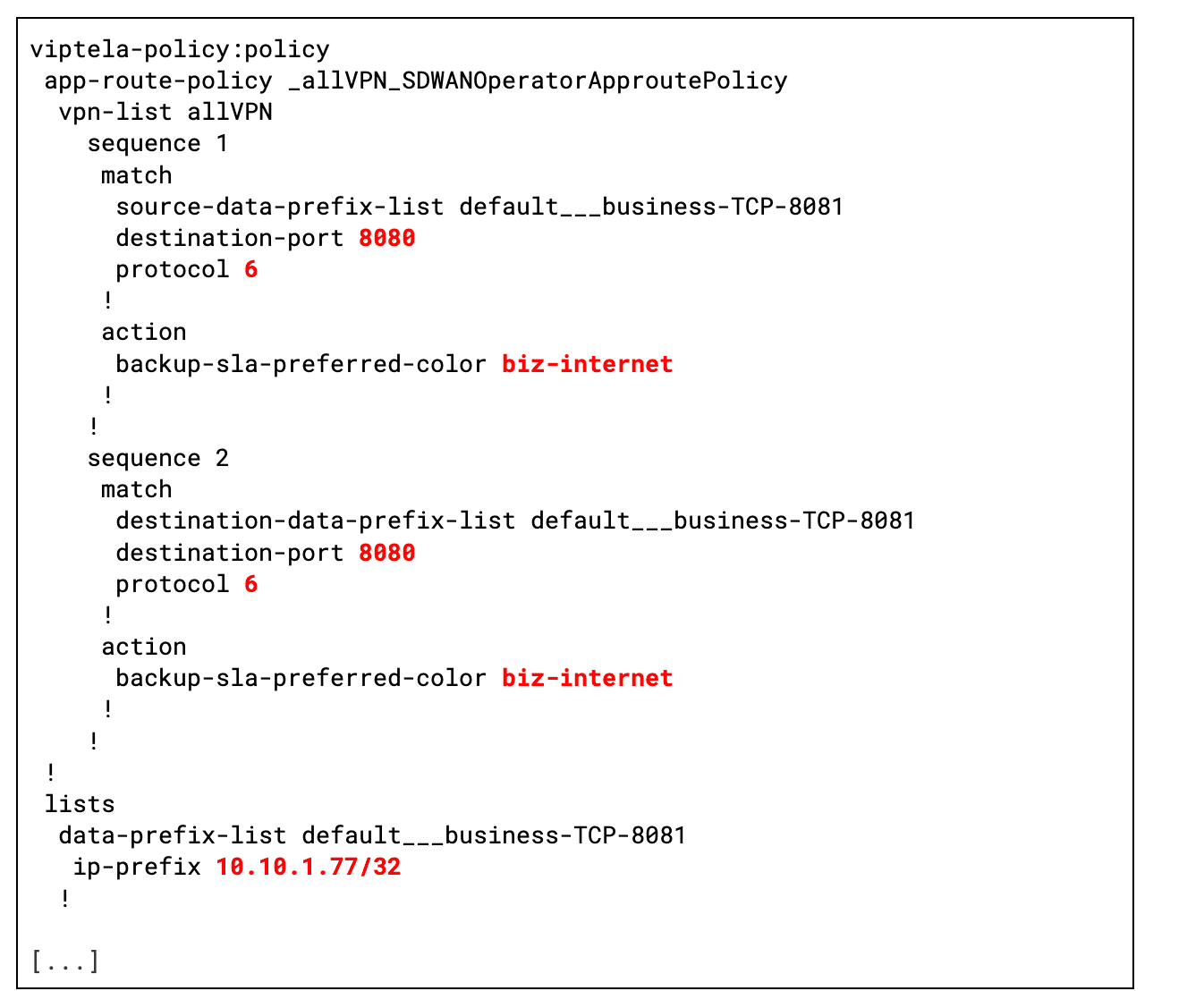

Meanwhile, Figure 5 illustrates the SD-WAN configuration generated by the SD-WAN operator. The configuration highlights two key aspects:

- Business WAN tunnel enforcement: All traffic destined for the pods of the Business Service is strictly routed through the dedicated bizinternet SD-WAN tunnel. This ensures that business-critical traffic receives optimized performance as it traverses the network.

- Traffic identification: The SD-WAN dynamically identifies Business Service traffic by inspecting the source and destination IP addresses and ports of the service endpoints. This granular detection enables precise policy enforcement, ensuring that only the intended traffic is routed through the secure business tunnel.

Together, these mechanisms provide robust security and fine-grained control over service-specific traffic flows within and across your Kubernetes clusters.

Figure 5: The SD-WAN configuration issued by the operator
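The two aspects above amount to a match-then-steer decision per flow. The sketch below models that decision in plain Python; it is not Cisco Catalyst SD-WAN configuration syntax. The `Flow` type and `select_tunnel` function are hypothetical, and matching replies by source endpoint is an assumption based on the statement that both source and destination addresses and ports are inspected.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def select_tunnel(flow, business_endpoints,
                  business_tunnel="bizinternet", default_tunnel="default"):
    """Pick a tunnel: the business tunnel if either side is a business endpoint."""
    if (flow.dst_ip, flow.dst_port) in business_endpoints:
        return business_tunnel  # request towards a Business Service pod
    if (flow.src_ip, flow.src_port) in business_endpoints:
        return business_tunnel  # reply from a Business Service pod
    return default_tunnel       # everything else stays on the default path


# Illustrative endpoint set: one Business Service pod.
endpoints = {("10.0.1.12", 8443)}

request = Flow("10.0.2.5", 40000, "10.0.1.12", 8443)
reply = Flow("10.0.1.12", 8443, "10.0.2.5", 40000)
other = Flow("10.0.2.5", 40001, "10.0.1.30", 8080)

for flow in (request, reply, other):
    print(flow, "->", select_tunnel(flow, endpoints))
```

Keying the match on exact (IP, port) pairs means that other workloads on the same node, or other ports on the same pod, remain on the default tunnel.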

Conclusion

By using a Kubernetes operator, it is possible to integrate Cilium and a modern SD-WAN implementation (such as Cisco Catalyst SD-WAN) into a single end-to-end framework that intelligently connects distributed workloads with controlled security and performance. Key takeaways:

- Annotation-driven end-to-end policies: Kubernetes service annotations simplify policy definition, enabling developers to declare intent without needing SD-WAN expertise.

- Automated SD-WAN programming: An SD-WAN operator bridges Kubernetes and the SD-WAN, translating service configurations into real-time network policies.

- Secure multi-tenancy: Critical services are isolated in dedicated tunnels. At the same time, best-effort traffic shares the default tunnel, optimizing security and cost.

This demo operator, however, demonstrates only the first step by providing just the bare intelligent connectivity features. Future work includes exploring end-to-end observability, monitoring, and tracing tools. Today, Hubble provides an observability layer for Cilium that can show flows from a Kubernetes perspective, while Cisco Catalyst SD-WAN Manager and Cisco Catalyst SD-WAN Analytics provide extended network observability and visibility. What is still missing is a single pane of glass. In addition, future work might consider exposing the SD-WAN SLOs to Kubernetes for automatic service mapping and extending the framework to new use cases.

Learn more

Feel free to reach out to the authors at the contact details below. Visit cilium.io to learn more about Cilium. More details on Cisco Catalyst SD-WAN can be found at:

https://www.cisco.com/site/us/en/solutions/networking/sdwan/catalyst/index.html

The creators of Cilium and Cisco Catalyst SD-WAN are also hiring! Check out https://jobs.cisco.com/jobs/SearchJobs/sdwan or https://jobs.cisco.com/jobs/SearchJobs/isovalent for their listings.

Authors:

Gábor Rétvári

- Twitter: @littleredspam

- LinkedIn: https://www.linkedin.com/in/GaborRetvari/

Tamás Lévai

- E-mail: [email protected]

- LinkedIn: https://www.linkedin.com/in/tamaslevai/