Feed aggregator

On Hacking Back

Former DoJ attorney John Carlin writes about hackback, which he defines thus: “A hack back is a type of cyber response that incorporates a counterattack designed to proactively engage with, disable, or collect evidence about an attacker. Although hack backs can take on various forms, they are—by definition—not passive defensive measures.”

His conclusion:

As the law currently stands, specific forms of purely defense measures are authorized so long as they affect only the victim’s system or data.

At the other end of the spectrum, offensive measures that involve accessing or otherwise causing damage or loss to the hacker’s systems are likely prohibited, absent government oversight or authorization. And even then parties should proceed with caution in light of the heightened risks of misattribution, collateral damage, and retaliation...

Prepare for a post-quantum future with RHEL 9.7

Ingress NGINX Retirement: What You Need to Know

To prioritize the safety and security of the ecosystem, Kubernetes SIG Network and the Security Response Committee are announcing the upcoming retirement of Ingress NGINX. Best-effort maintenance will continue until March 2026. Afterward, there will be no further releases, no bugfixes, and no updates to resolve any security vulnerabilities that may be discovered. Existing deployments of Ingress NGINX will continue to function and installation artifacts will remain available.

We recommend migrating to one of the many alternatives. Consider migrating to Gateway API, the modern replacement for Ingress. If you must continue using Ingress, many alternative Ingress controllers are listed in the Kubernetes documentation. Continue reading for further information about the history and current state of Ingress NGINX, as well as next steps.

About Ingress NGINX

Ingress is the original user-friendly way to direct network traffic to workloads running on Kubernetes. (Gateway API is a newer way to achieve many of the same goals.) In order for an Ingress to work in your cluster, there must be an Ingress controller running. There are many Ingress controller choices available, which serve the needs of different users and use cases. Some are cloud-provider specific, while others have more general applicability.

Ingress NGINX was an Ingress controller, developed early in the history of the Kubernetes project as an example implementation of the API. It became very popular due to its tremendous flexibility, breadth of features, and independence from any particular cloud or infrastructure provider. Since those days, many other Ingress controllers have been created within the Kubernetes project by community groups, and by cloud native vendors. Ingress NGINX has continued to be one of the most popular, deployed as part of many hosted Kubernetes platforms and within innumerable independent users’ clusters.

History and Challenges

The breadth and flexibility of Ingress NGINX have caused maintenance challenges. Changing expectations about cloud native software have also added complications. What were once considered helpful options have sometimes come to be considered serious security flaws, such as the ability to add arbitrary NGINX configuration directives via the "snippets" annotations. Yesterday’s flexibility has become today’s insurmountable technical debt.

Despite the project’s popularity among users, Ingress NGINX has always struggled with insufficient or barely-sufficient maintainership. For years, the project has had only one or two people doing development work, on their own time, after work hours and on weekends. Last year, the Ingress NGINX maintainers announced their plans to wind down Ingress NGINX and develop a replacement controller together with the Gateway API community. Unfortunately, even that announcement failed to generate additional interest in helping maintain Ingress NGINX or develop InGate to replace it. (InGate development never progressed far enough to create a mature replacement; it will also be retired.)

Current State and Next Steps

Currently, Ingress NGINX is receiving best-effort maintenance. SIG Network and the Security Response Committee have exhausted our efforts to find additional support to make Ingress NGINX sustainable. To prioritize user safety, we must retire the project.

In March 2026, Ingress NGINX maintenance will be halted, and the project will be retired. After that time, there will be no further releases, no bugfixes, and no updates to resolve any security vulnerabilities that may be discovered. The GitHub repositories will be made read-only and left available for reference.

Existing deployments of Ingress NGINX will not be broken. Existing project artifacts such as Helm charts and container images will remain available.

In most cases, you can check whether you use Ingress NGINX by running kubectl get pods --all-namespaces --selector app.kubernetes.io/name=ingress-nginx with cluster administrator permissions.

We would like to thank the Ingress NGINX maintainers for their work in creating and maintaining this project–their dedication remains impressive. This Ingress controller has powered billions of requests in datacenters and homelabs all around the world. In a lot of ways, Kubernetes wouldn’t be where it is without Ingress NGINX, and we are grateful for so many years of incredible effort.

SIG Network and the Security Response Committee recommend that all Ingress NGINX users begin migration to Gateway API or another Ingress controller immediately. Many options are listed in the Kubernetes documentation: Gateway API, Ingress. Additional options may be available from vendors you work with.

OpenFGA Becomes a CNCF Incubating Project

The CNCF Technical Oversight Committee (TOC) has voted to accept OpenFGA as a CNCF incubating project.

What is OpenFGA?

OpenFGA is an authorization engine that addresses the challenge of implementing complex access control at scale in modern software applications. Inspired by Google’s global access control system, Zanzibar, OpenFGA leverages Relationship-Based Access Control (ReBAC). This allows developers to define permissions based on relationships between users and objects (e.g., who can view which document). By serving as an external service with an API and multiple SDKs, it centralizes and abstracts the authorization logic out of the application code. This separation of concerns significantly improves developer velocity by simplifying security implementation and ensures that access rules are consistent, scalable, and easy to audit across all services, solving a critical complexity problem for developers building distributed systems.

OpenFGA’s History

OpenFGA was developed by a group of Okta employees, and is the foundation for the Auth0 FGA commercial offering.

The project was accepted as a CNCF Sandbox project in September 2022. Since then, it has been deployed by hundreds of companies and received multiple contributions. Some major moments and updates include:

- 37 companies publicly acknowledge using it in production.

- Engineers from Grafana Labs and GitPod have become official maintainers.

- OpenFGA was invited to present on the Maintainer’s track at KubeCon + CloudNativeCon Europe 2025.

- A MySQL storage adapter was contributed by TwinTag and a SQLite storage adapter was contributed by Grafana Labs.

- OpenFGA started hosting a monthly OpenFGA community meeting in April 2023.

- Several developer experience improvements, like:

  - New SDKs for Python and Java

  - IDE integrations with VS Code and IntelliJ

  - A CLI with support for model testing

- A Terraform Provider was donated to the project by Maurice Ackel

- A new caching implementation and multiple performance improvements shipped over the last year.

- OpenFGA also added the ListObjects endpoint to retrieve all resources with which a user has a specific relation. Additionally, OpenFGA added the ListUsers endpoint to retrieve all users that have a specific relation with a resource.
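To make the difference between the two list endpoints concrete, here is a minimal in-memory sketch of the relationship-tuple idea behind ReBAC. It is not the OpenFGA API or any of its SDKs: the function names, the tuple layout, and the sample data are all hypothetical, and it only looks at directly stored tuples, whereas a real authorization engine also evaluates a model with derived relations.

```python
from collections import namedtuple

# A relationship tuple: "user has relation with object".
# Hypothetical layout for illustration only.
RelationshipTuple = namedtuple("RelationshipTuple", ["user", "relation", "object"])

# Hypothetical sample data, not taken from any real store.
TUPLES = [
    RelationshipTuple("user:anne", "viewer", "document:roadmap"),
    RelationshipTuple("user:anne", "viewer", "document:budget"),
    RelationshipTuple("user:bob", "viewer", "document:roadmap"),
    RelationshipTuple("user:bob", "editor", "document:budget"),
]


def check(tuples, user, relation, obj):
    """Answer "does this user have this relation with this object?"."""
    return RelationshipTuple(user, relation, obj) in tuples


def list_objects(tuples, user, relation, object_type):
    """All objects of one type with which the user has the relation."""
    prefix = object_type + ":"
    return sorted(
        t.object
        for t in tuples
        if t.user == user and t.relation == relation and t.object.startswith(prefix)
    )


def list_users(tuples, relation, obj):
    """All users that have the relation with the given object."""
    return sorted(t.user for t in tuples if t.relation == relation and t.object == obj)
```

In this sketch, list_objects starts from a user and fans out to resources, while list_users starts from a resource and fans out to users; both are filters over the same set of tuples.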

Further, OpenFGA integrates with multiple CNCF projects:

- OpenTelemetry for tracing and telemetry

- Helm for deployment

- Grafana dashboards for monitoring

- Prometheus for metrics collection

- ArtifactHub for Helm chart distribution

Maintainer Perspective

“Seeing companies successfully deploy OpenFGA in production demonstrates its viability as an authorization solution. Our focus now is on growth. CNCF Incubation provides increased credibility and visibility – attracting a broader set of contributors and helping secure long-term sustainability. We anticipate this phase supporting us collectively build the definitive and centralized service for fine-grained authorization that the cloud native ecosystem can continue to trust.”

— Andres Aguiar, OpenFGA Maintainer and Director of Product at Okta

“When Grafana adopted OpenFGA the community was incredibly welcoming, and we’ve been fortunate to collaborate on enhancements like SQLite support. We are excited to work with CNCF to continue the evolution of the OpenFGA platform.”

— Dan Cech, Distinguished Engineer, Grafana Labs

From the TOC

“Authorization is one of the most complex and critical problems in distributed systems, and OpenFGA provides a clean, scalable solution that developers can actually adopt. Its ReBAC model and API-first approach simplify how teams think about access control, removing layers of custom logic from applications. What impressed me most during the due diligence process was the project’s momentum—strong community growth, diverse maintainers, and real-world production deployments. OpenFGA is quickly becoming a foundational building block for secure, cloud native applications.”

— Ricardo Aravena, CNCF TOC Sponsor

“As the TOC Sponsor for OpenFGA’s incubation, I’ve had the opportunity to work closely with the maintainers and see their deep technical rigor and commitment to excellence firsthand. OpenFGA reflects the kind of thoughtful engineering and collaboration that drives the CNCF ecosystem forward. By externalizing authorization through a developer-friendly API, OpenFGA empowers teams to scale security with the same agility as their infrastructure. Throughout the incubation process, the maintainers have been exceptionally responsive and precise in addressing feedback, demonstrating the project’s maturity and readiness for broader adoption. With growing adoption and strong technical foundations, I’m excited to see how the OpenFGA community continues to expand its capabilities and help organizations strengthen access control across cloud native environments.”

— Faseela Kundattil, CNCF TOC Sponsor

Main Components

Some main components of the project include:

- The OpenFGA server designed to answer authorization requests fast and at scale

- SDKs for Go, .NET, JS, Java, Python

- A CLI to interact with the OpenFGA server and test authorization models

- Helm Charts to deploy to Kubernetes

- Integrations with VS Code and JetBrains

Notable Milestones

- 4,300+ GitHub Stars

- 2,246 Pull Requests

- 459 Issues

- 96 Contributors, 652 across repositories

- 89 Releases

Looking Ahead

OpenFGA is a database, and as with any database, there will always be work to improve performance for every type of query. Future goals of the roadmap are to make it simpler for maintainers to contribute to SDKs; launch new SDKs for Ruby, Rust, and PHP; add support for the AuthZen standard; add new visualization options and open source the OpenFGA playground tool; improve observability; add streaming API endpoints for better performance; and include more robust error handling with new write-conflict options.

You can learn more about OpenFGA here.

As a CNCF-hosted project, OpenFGA is part of a neutral foundation aligned with its technical interests, as well as the larger Linux Foundation, which provides governance, marketing support, and community outreach. OpenFGA joins incubating technologies Backstage, Buildpacks, cert-manager, Chaos Mesh, CloudEvents, Container Network Interface (CNI), Contour, Cortex, CubeFS, Dapr, Dragonfly, Emissary-Ingress, Falco, gRPC, in-toto, Keptn, Keycloak, Knative, KubeEdge, Kubeflow, KubeVela, KubeVirt, Kyverno, Litmus, Longhorn, NATS, Notary, OpenFeature, OpenKruise, OpenMetrics, OpenTelemetry, Operator Framework, Thanos, and Volcano. For more information on maturity requirements for each level, please visit the CNCF Graduation Criteria.

Prompt Injection in AI Browsers

This is why AIs are not ready to be personal assistants:

A new attack called ‘CometJacking’ exploits URL parameters to pass to Perplexity’s Comet AI browser hidden instructions that allow access to sensitive data from connected services, like email and calendar.

In a realistic scenario, no credentials or user interaction are required and a threat actor can leverage the attack by simply exposing a maliciously crafted URL to targeted users.

[…]

CometJacking is a prompt-injection attack where the query string processed by the Comet AI browser contains malicious instructions added using the ‘collection’ parameter of the URL...

A deeper look at post-quantum cryptography support in Red Hat OpenShift 4.20 control plane

Expanding Kubewarden Scope

New Attacks Against Secure Enclaves

Encryption can protect data at rest and data in transit, but does nothing for data in use. What we have are secure enclaves. I’ve written about this before:

Almost all cloud services have to perform some computation on our data. Even the simplest storage provider has code to copy bytes from an internal storage system and deliver them to the user. End-to-end encryption is sufficient in such a narrow context. But often we want our cloud providers to be able to perform computation on our raw data: search, analysis, AI model training or fine-tuning, and more. Without expensive, esoteric techniques, such as secure multiparty computation protocols or homomorphic encryption techniques that can perform calculations on encrypted data, cloud servers require access to the unencrypted data to do anything useful...

The New 2025 OWASP Top 10 List: What Changed, and What You Need to Know

Announcing the 2025 Steering Committee Election Results

The 2025 Steering Committee Election is now complete. The Kubernetes Steering Committee consists of 7 seats, 4 of which were up for election in 2025. Incoming committee members serve a term of 2 years, and all members are elected by the Kubernetes Community.

The Steering Committee oversees the governance of the entire Kubernetes project. With that great power comes great responsibility. You can learn more about the steering committee’s role in their charter.

Thank you to everyone who voted in the election; your participation helps support the community’s continued health and success.

Results

Congratulations to the elected committee members whose two-year terms begin immediately (listed in alphabetical order by GitHub handle):

- Kat Cosgrove (@katcosgrove), Minimus

- Paco Xu (@pacoxu), DaoCloud

- Rita Zhang (@ritazh), Microsoft

- Maciej Szulik (@soltysh), Defense Unicorns

They join continuing members:

- Antonio Ojea (@aojea), Google

- Benjamin Elder (@BenTheElder), Google

- Sascha Grunert (@saschagrunert), Red Hat

Maciej Szulik and Paco Xu are returning Steering Committee Members.

Big thanks!

Thank you and congratulations on a successful election to this round’s election officers:

- Christoph Blecker (@cblecker)

- Nina Polshakova (@npolshakova)

- Sreeram Venkitesh (@sreeram-venkitesh)

Thanks to the Emeritus Steering Committee Members. Your service is appreciated by the community:

- Stephen Augustus (@justaugustus), Bloomberg

- Patrick Ohly (@pohly), Intel

And thank you to all the candidates who came forward to run for election.

Get involved with the Steering Committee

This governing body, like all of Kubernetes, is open to all. You can follow along with Steering Committee meeting notes and weigh in by filing an issue or creating a PR against their repo. They have an open meeting on the first Monday of every month at 8am PT. They can also be contacted at their public mailing list [email protected].

You can see what the Steering Committee meetings are all about by watching past meetings on the YouTube Playlist.

This post was adapted from one written by the Contributor Comms Subproject. If you want to write stories about the Kubernetes community, learn more about us.

Drilling Down on Uncle Sam’s Proposed TP-Link Ban

The U.S. government is reportedly preparing to ban the sale of wireless routers and other networking gear from TP-Link Systems, a tech company that currently enjoys an estimated 50% market share among home users and small businesses. Experts say while the proposed ban may have more to do with TP-Link’s ties to China than any specific technical threats, much of the rest of the industry serving this market also sources hardware from China and ships products that are insecure fresh out of the box.

A TP-Link WiFi 6 AX1800 Smart WiFi Router (Archer AX20).

The Washington Post recently reported that more than a half-dozen federal departments and agencies were backing a proposed ban on future sales of TP-Link devices in the United States. The story said U.S. Department of Commerce officials concluded TP-Link Systems products pose a risk because the U.S.-based company’s products handle sensitive American data and because the officials believe it remains subject to jurisdiction or influence by the Chinese government.

TP-Link Systems denies that, saying that it fully split from the Chinese TP-Link Technologies over the past three years, and that its critics have vastly overstated the company’s market share (TP-Link puts it at around 30 percent). TP-Link says it has headquarters in California, with a branch in Singapore, and that it manufactures in Vietnam. The company says it researches, designs, develops and manufactures everything except its chipsets in-house.

TP-Link Systems told The Post it has sole ownership of some engineering, design and manufacturing capabilities in China that were once part of China-based TP-Link Technologies, and that it operates them without Chinese government supervision.

“TP-Link vigorously disputes any allegation that its products present national security risks to the United States,” Ricca Silverio, a spokeswoman for TP-Link Systems, said in a statement. “TP-Link is a U.S. company committed to supplying high-quality and secure products to the U.S. market and beyond.”

Cost is a big reason TP-Link devices are so prevalent in the consumer and small business market: As this February 2025 story from Wired observed regarding the proposed ban, TP-Link has long had a reputation for flooding the market with devices that are considerably cheaper than comparable models from other vendors. That price point (and consistently excellent performance ratings) has made TP-Link a favorite among Internet service providers (ISPs) that provide routers to their customers.

In August 2024, the chairman and the ranking member of the House Select Committee on the Strategic Competition Between the United States and the Chinese Communist Party called for an investigation into TP-Link devices, which they said were found on U.S. military bases and for sale at exchanges that sell them to members of the military and their families.

“TP-Link’s unusual degree of vulnerabilities and required compliance with PRC law are in and of themselves disconcerting,” the House lawmakers warned in a letter (PDF) to the director of the Commerce Department. “When combined with the PRC government’s common use of SOHO [small office/home office] routers like TP-Link to perpetrate extensive cyberattacks in the United States, it becomes significantly alarming.”

The letter cited a May 2023 blog post by Check Point Research about a Chinese state-sponsored hacking group dubbed “Camaro Dragon” that used a malicious firmware implant for some TP-Link routers to carry out a sequence of targeted cyberattacks against European foreign affairs entities. Check Point said while it only found the malicious firmware on TP-Link devices, “the firmware-agnostic nature of the implanted components indicates that a wide range of devices and vendors may be at risk.”

In a report published in October 2024, Microsoft said it was tracking a network of compromised TP-Link small office and home office routers that has been abused by multiple distinct Chinese state-sponsored hacking groups since 2021. Microsoft found the hacker groups were leveraging the compromised TP-Link systems to conduct “password spraying” attacks against Microsoft accounts. Password spraying involves rapidly attempting to access a large number of accounts (usernames/email addresses) with a relatively small number of commonly used passwords.
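From the defender's side, the pattern described above (many accounts, few passwords) can be looked for in failed-login records. The following is a minimal sketch under stated assumptions: the record layout, the function name, and both thresholds are hypothetical, and it groups by source address, so a spray spread thinly across many compromised routers would need a different grouping key.

```python
from collections import defaultdict


def find_spraying_sources(failed_logins, min_accounts=20, max_passwords=3):
    """Flag sources that failed against many accounts using few passwords.

    failed_logins: iterable of (source_ip, username, password_fingerprint)
    tuples. The fingerprint stands in for whatever a log can safely record
    about an attempted password; the thresholds are illustrative guesses.
    """
    accounts = defaultdict(set)
    passwords = defaultdict(set)
    for source_ip, username, password_fingerprint in failed_logins:
        accounts[source_ip].add(username)
        passwords[source_ip].add(password_fingerprint)
    return sorted(
        ip
        for ip in accounts
        if len(accounts[ip]) >= min_accounts and len(passwords[ip]) <= max_passwords
    )
```

A source that tries one password against 25 accounts is flagged, while a source that tries 25 passwords against one account (ordinary brute forcing) is not, which is the distinction the definition draws.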

TP-Link rightly points out that most of its competitors likewise source components from China. The company also correctly notes that advanced persistent threat (APT) groups from China and other nations have leveraged vulnerabilities in products from their competitors, such as Cisco and Netgear.

But that may be cold comfort for TP-Link customers who are now wondering if it’s smart to continue using these products, or whether it makes sense to buy more costly networking gear that might only be marginally less vulnerable to compromise.

Almost without exception, the hardware and software that ships with most consumer-grade routers includes a number of default settings that need to be changed before the devices can be safely connected to the Internet. For example, bring a new router online without changing the default username and password and chances are it will only take a few minutes before it is probed and possibly compromised by some type of Internet-of-Things botnet. Also, it is incredibly common for the firmware in a brand new router to be dangerously out of date by the time it is purchased and unboxed.

Until quite recently, the idea that router manufacturers should make it easier for their customers to use these products safely was something of anathema to this industry. Consumers were largely left to figure that out on their own, with predictably disastrous results.

But over the past few years, many manufacturers of popular consumer routers have begun forcing users to perform basic hygiene — such as changing the default password and updating the internal firmware — before the devices can be used as a router. For example, most brands of “mesh” wireless routers — like Amazon’s Eero, Netgear’s Orbi series, or Asus’s ZenWifi — require online registration that automates these critical steps going forward (or at least through their stated support lifecycle).

For better or worse, less expensive, traditional consumer routers like those from Belkin and Linksys also now automate this setup by heavily steering customers toward installing a mobile app to complete the installation (this often comes as a shock to people more accustomed to manually configuring a router). Still, these products tend to put the onus on users to check for and install available updates periodically. Also, they’re often powered by underwhelming or else bloated firmware, and offer a dearth of configurable options.

Of course, not everyone wants to fiddle with mobile apps or is comfortable with registering their router so that it can be managed or monitored remotely in the cloud. For those hands-on folks — and for power users seeking more advanced router features like VPNs, ad blockers and network monitoring — the best advice is to check if your router’s stock firmware can be replaced with open-source alternatives, such as OpenWrt or DD-WRT.

These open-source firmware options are compatible with a wide range of devices, and they generally offer more features and configurability. Open-source firmware can even help extend the life of routers years after the vendor stops supporting the underlying hardware, but it still requires users to manually check for and install any available updates.

Happily, TP-Link users spooked by the proposed ban may have an alternative to outright junking these devices, as many TP-Link routers also support open-source firmware options like OpenWrt. While this approach may not eliminate any potential hardware-specific security flaws, it could serve as an effective hedge against more common vendor-specific vulnerabilities, such as undocumented user accounts, hard-coded credentials, and weaknesses that allow attackers to bypass authentication.

Regardless of the brand, if your router is more than four or five years old it may be worth upgrading for performance reasons alone — particularly if your home or office is primarily accessing the Internet through WiFi.

NB: The Post’s story notes that a substantial portion of TP-Link routers and those of its competitors are purchased or leased through ISPs. In these cases, the devices are typically managed and updated remotely by your ISP, and equipped with custom profiles responsible for authenticating your device to the ISP’s network. If this describes your setup, please do not attempt to modify or replace these devices without first consulting with your Internet provider.

Friday Squid Blogging: Squid Game: The Challenge, Season Two

The second season of the Netflix reality competition show Squid Game: The Challenge has dropped. (Too many links to pick a few—search for it.)

As usual, you can also use this squid post to talk about the security stories in the news that I haven’t covered.

Self-hosted human and machine identities in Keycloak 26.4

Keycloak is a leading open source solution in the cloud-native ecosystem for Identity and Access Management, a key component of accessing applications and their data.

With the release of Keycloak 26.4, we’ve added features for both machine and human identities. New features focus on security enhancement, deeper integration, and improved server administration. See below for the release highlights, or dive deeper in our Keycloak 26.4 release announcement.

Keycloak recently surpassed 30k GitHub stars and 1,350 contributors. If you’re attending KubeCon + CloudNativeCon North America in Atlanta, stop by and say hi—we’d love to hear how you’re using Keycloak!

What’s New in 26.4

Passwordless user authentication with Passkeys

Keycloak now offers full support for Passkeys. As secure, passwordless authentication becomes the new standard, we’ve made passkeys simple to configure. For environments that are unable to adopt passkeys, Keycloak continues to support OTP and recovery codes. You can find a passkey walkthrough on the Keycloak blog.

Tightened OpenID Connect security with FAPI 2 and DPoP

Keycloak 26.4 implements the Financial-grade API (FAPI) 2.0 standard, ensuring strong security best practices. This includes support for Demonstrating Proof-of-Possession (DPoP), which is a safer way to handle tokens in public OpenID Connect clients.

Simplified deployments across multiple availability zones

Deployment across multiple availability zones or data centers is simplified in 26.4:

- Split-brain detection

- Full support in the Keycloak Operator

- Latency optimizations when Keycloak nodes run in different data centers

Keycloak docs contain a full step-by-step guide, and we published a blog post on how to scale to 2,000 logins/sec and 10,000 token refreshes/sec.

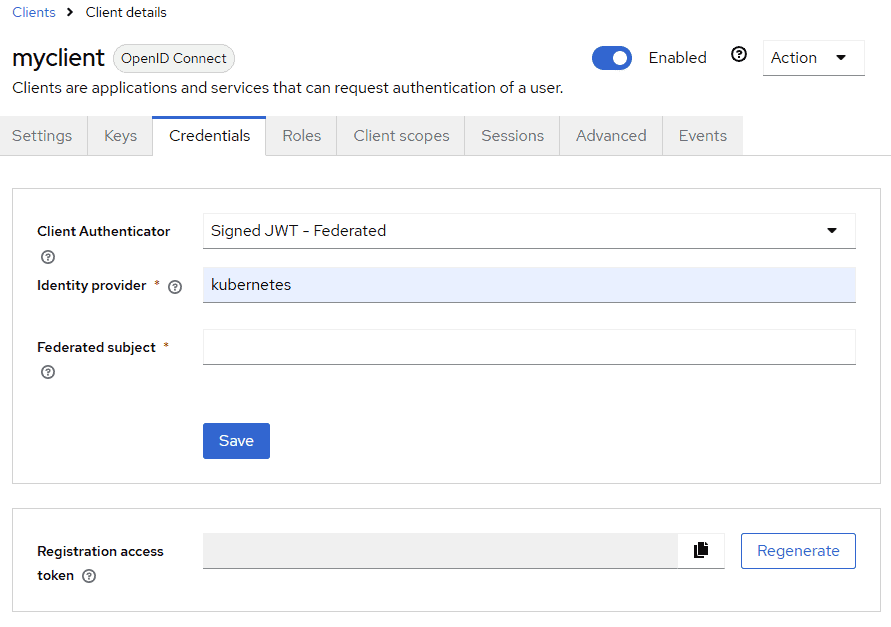

Authenticating applications with Kubernetes service account tokens or SPIFFE

When applications interact with Keycloak around OpenID Connect, each confidential server-side application needs credentials. This usually comes with the churn to distribute and rotate them regularly.

With 26.4, you can use Kubernetes service account tokens, which are automatically distributed to each Pod when running on Kubernetes. This removes the need to distribute and rotate an extra pair of credentials. For use cases inside and outside Kubernetes, you can also use SPIFFE.

To test this preview feature:

- Enable the features client-auth-federated:v1, spiffe:v1, and kubernetes-service-accounts:v1.

- Register a Kubernetes or SPIFFE identity provider in Keycloak.

- For a client registered in Keycloak, configure the Client Authenticator in the Credentials tab as Signed JWT – Federated, referencing the identity provider created in the previous step and the expected subject in the JWT.

Looking ahead

Keycloak’s roadmap includes:

- Improved MCP support to better support AI agents

- Improved token exchange

- Automating administrative tasks using workflows triggered by authentication events.

You can follow our journey at keycloak.org and get involved. Our nightly builds give you early access to Keycloak’s latest features.

Faking Receipts with AI

Over the past few decades, it’s become easier and easier to create fake receipts. Decades ago, it required special paper and printers—I remember a company in the UK advertising its services to people trying to cover up their affairs. Then, receipts became computerized, and faking them required some artistic skills to make the page look realistic.

Now, AI can do it all:

Several receipts shown to the FT by expense management platforms demonstrated the realistic nature of the images, which included wrinkles in paper, detailed itemization that matched real-life menus, and signatures...

Gateway API 1.4: New Features

Ready to rock your Kubernetes networking? The Kubernetes SIG Network community presented the General Availability (GA) release of Gateway API (v1.4.0)! Released on October 6, 2025, version 1.4.0 reinforces the path for modern, expressive, and extensible service networking in Kubernetes.

Gateway API v1.4.0 brings three new features to the Standard channel (Gateway API's GA release channel):

- BackendTLSPolicy for TLS between gateways and backends

- supportedFeatures in GatewayClass status

- Named rules for Routes

and introduces three new experimental features:

- Mesh resource for service mesh configuration

- Default gateways to ease configuration burden

- externalAuth filter for HTTPRoute

Graduations to Standard Channel

Backend TLS policy

Leads: Candace Holman, Norwin Schnyder, Katarzyna Łach

GEP-1897: BackendTLSPolicy

BackendTLSPolicy is a new Gateway API type for specifying the TLS configuration of the connection from the Gateway to backend pod(s). Prior to the introduction of BackendTLSPolicy, there was no API specification that allowed encrypted traffic on the hop from Gateway to backend.

The BackendTLSPolicy validation configuration requires a hostname. This hostname serves two purposes: it is used as the SNI header when connecting to the backend, and, for authentication, the certificate presented by the backend must match it unless subjectAltNames is explicitly specified.

If subjectAltNames (SANs) are specified, the hostname is only used for SNI, and authentication is performed against the SANs instead. If you still need to authenticate against the hostname value in this case, you MUST add it to the subjectAltNames list.
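The hostname and SAN rule above can be summarized as a small decision function. This is only a sketch of the rule as described here, not code from any Gateway API implementation: the function name is hypothetical, and SANs are represented as plain strings rather than typed Hostname or URI entries.

```python
def tls_names(hostname, subject_alt_names=None):
    """Return (sni, names_to_authenticate) for a backend connection.

    hostname is always used for SNI. The backend certificate is checked
    against the SAN list when one is given, otherwise against hostname.
    """
    if not hostname:
        raise ValueError("validation requires a hostname")
    if subject_alt_names:
        # SANs replace the hostname for authentication; the hostname is
        # only authenticated if it is also listed among the SANs.
        return hostname, list(subject_alt_names)
    return hostname, [hostname]
```

With only a hostname, the same name is used for both SNI and authentication. Once a SAN list is supplied, the hostname drops out of authentication unless it is repeated in that list.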

BackendTLSPolicy validation configuration also requires either caCertificateRefs or wellKnownCACertificates.

caCertificateRefs refer to one or more (up to 8) PEM-encoded TLS certificate bundles. If there are no specific certificates to use, then depending on your implementation, you may use wellKnownCACertificates, set to "System" to tell the Gateway to use an implementation-specific set of trusted CA Certificates.

In this example, the BackendTLSPolicy is configured to use certificates defined in the auth-cert ConfigMap to connect with a TLS-encrypted upstream connection where pods backing the auth service are expected to serve a valid certificate for auth.example.com. It uses subjectAltNames with a Hostname type, but you may also use a URI type.

    apiVersion: gateway.networking.k8s.io/v1
    kind: BackendTLSPolicy
    metadata:
      name: tls-upstream-auth
    spec:
      targetRefs:
      - kind: Service
        name: auth
        group: ""
        sectionName: "https"
      validation:
        caCertificateRefs:
        - group: "" # core API group
          kind: ConfigMap
          name: auth-cert
        subjectAltNames:
        - type: "Hostname"
          hostname: "auth.example.com"

In this example, the BackendTLSPolicy is configured to use system certificates to connect with a TLS-encrypted backend connection where Pods backing the dev Service are expected to serve a valid certificate for dev.example.com.

apiVersion: gateway.networking.k8s.io/v1
kind: BackendTLSPolicy
metadata:
  name: tls-upstream-dev
spec:
  targetRefs:
  - kind: Service
    name: dev
    group: ""
    sectionName: "btls"
  validation:
    wellKnownCACertificates: "System"
    hostname: dev.example.com

More information on the configuration of TLS in Gateway API can be found in Gateway API - TLS Configuration.

Status information about the features that an implementation supports

Leads: Lior Lieberman, Beka Modebadze

GEP-2162: Supported features in GatewayClass Status

GatewayClass status has a new field, supportedFeatures.

This addition allows implementations to declare the set of features they support. This provides a clear way for users and tools to understand the capabilities of a given GatewayClass.

This feature's name for conformance tests (and GatewayClass status reporting) is SupportedFeatures.

Implementations must populate the supportedFeatures field in the .status of the GatewayClass before the GatewayClass is accepted, or in the same operation.

Here’s an example of supportedFeatures published under a GatewayClass' .status:

apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
...
status:
  conditions:
  - lastTransitionTime: "2022-11-16T10:33:06Z"
    message: Handled by Foo controller
    observedGeneration: 1
    reason: Accepted
    status: "True"
    type: Accepted
  supportedFeatures:
    - HTTPRoute
    - HTTPRouteHostRewrite
    - HTTPRoutePortRedirect
    - HTTPRouteQueryParamMatching

Graduation of SupportedFeatures to Standard helped improve the conformance testing process for Gateway API. The conformance test suite will now automatically run tests based on the features populated in the GatewayClass' status. This creates a strong, verifiable link between an implementation's declared capabilities and the test results, making it easier for implementers to run the correct conformance tests and for users to trust the conformance reports.
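As a rough illustration of feature-driven test selection, the Go sketch below picks the tests whose required features are all declared. The `conformanceTest` type, the test names, and `selectTests` are hypothetical; the real conformance suite is organized differently.

```go
package main

import "fmt"

// conformanceTest is a hypothetical descriptor: a test name plus the
// feature names an implementation must declare for the test to apply.
type conformanceTest struct {
	Name     string
	Requires []string
}

// selectTests returns the names of the tests whose required features all
// appear in the supportedFeatures list read from a GatewayClass status.
func selectTests(supported []string, tests []conformanceTest) []string {
	declared := make(map[string]bool, len(supported))
	for _, feature := range supported {
		declared[feature] = true
	}
	var selected []string
	for _, test := range tests {
		runnable := true
		for _, feature := range test.Requires {
			if !declared[feature] {
				runnable = false
				break
			}
		}
		if runnable {
			selected = append(selected, test.Name)
		}
	}
	return selected
}

func main() {
	supported := []string{"HTTPRoute", "HTTPRouteHostRewrite"}
	tests := []conformanceTest{
		{Name: "basic-routing", Requires: []string{"HTTPRoute"}},
		{Name: "host-rewrite", Requires: []string{"HTTPRoute", "HTTPRouteHostRewrite"}},
		{Name: "port-redirect", Requires: []string{"HTTPRoute", "HTTPRoutePortRedirect"}},
	}
	// The port-redirect test is skipped because its feature is not declared.
	fmt.Println(selectTests(supported, tests))
}
```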

This means that when the SupportedFeatures field is populated in the GatewayClass status, there is no need for additional conformance test flags such as --supported-features, --exempt-features, or --all-features.

It's important to note that Mesh features are an exception to this and can be tested for conformance by using Conformance Profiles, or by manually providing any combination of feature-related flags until the dedicated resource graduates from the experimental channel.

Named rules for Routes

GEP-995: Adding a new name field to all xRouteRule types (HTTPRouteRule, GRPCRouteRule, etc.)

Leads: Guilherme Cassolato

This enhancement enables route rules to be explicitly identified and referenced across the Gateway API ecosystem. Some of the key use cases include:

- Status: Allowing status conditions to reference specific rules directly by name.

- Observability: Making it easier to identify individual rules in logs, traces, and metrics.

- Policies: Enabling policies (GEP-713) to target specific route rules via the sectionName field in their targetRef[s].

- Tooling: Simplifying filtering and referencing of route rules in tools such as gwctl, kubectl, and general-purpose utilities like jq and yq.

- Internal configuration mapping: Facilitating the generation of internal configurations that reference route rules by name within gateway and mesh implementations.

This follows the same well-established pattern already adopted for Gateway listeners, Service ports, Pods (and containers), and many other Kubernetes resources.

While the new name field is optional (so existing resources remain valid), its use is strongly encouraged. Implementations are not expected to assign a default value, but they may enforce constraints such as immutability.

Finally, keep in mind that the name format is validated, and other fields (such as sectionName) may impose additional, indirect constraints.
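To make the policy use case concrete, here is a minimal Go sketch of resolving a sectionName to a named rule. The `routeRule` type and `findRule` helper are hypothetical and only model the lookup; they are not part of Gateway API.

```go
package main

import "fmt"

// routeRule is a pared-down stand-in for a route rule; only the optional
// name field matters for this sketch.
type routeRule struct {
	Name string
}

// findRule returns the index of the rule whose name equals sectionName.
// Unnamed rules (empty Name) can never be targeted this way.
func findRule(rules []routeRule, sectionName string) (int, bool) {
	if sectionName == "" {
		return -1, false
	}
	for i, rule := range rules {
		if rule.Name == sectionName {
			return i, true
		}
	}
	return -1, false
}

func main() {
	rules := []routeRule{{Name: "read-only"}, {}, {Name: "admin"}}
	if i, ok := findRule(rules, "admin"); ok {
		fmt.Printf("policy targets rule %d\n", i)
	}
	if _, ok := findRule(rules, "missing"); !ok {
		fmt.Println("no rule named \"missing\"")
	}
}
```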

Experimental channel changes

Enabling external Auth for HTTPRoute

Giving Gateway API the ability to enforce authentication and maybe authorization as well at the Gateway or HTTPRoute level has been a highly requested feature for a long time. (See the GEP-1494 issue for some background.)

This Gateway API release adds an Experimental filter in HTTPRoute that tells the Gateway API implementation to call out to an external service to authenticate (and, optionally, authorize) requests.

This filter is based on the Envoy ext_authz API, and allows talking to an Auth service that uses either gRPC or HTTP for its protocol.

Both methods allow the configuration of what headers to forward to the Auth service, with the HTTP protocol allowing some extra information like a prefix path.

An HTTP example might look like this (noting that this example requires the Experimental channel to be installed and an implementation that supports External Auth to actually understand the config):

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: require-auth
  namespace: default
spec:
  parentRefs:
    - name: your-gateway-here
  rules:
    - matches:
      - path:
          type: PathPrefix
          value: /admin
      filters:
        - type: ExternalAuth
          externalAuth:
            protocol: HTTP
            backendRef:
              name: auth-service
            http:
              # These headers are always sent for the HTTP protocol,
              # but are included here for illustrative purposes
              allowedHeaders:
                - Host
                - Method
                - Path
                - Content-Length
                - Authorization
      backendRefs:
        - name: admin-backend
          port: 8080

This allows the backend Auth service to use the supplied headers to make a determination about the authentication for the request.

When a request is allowed, the external Auth service will respond with a 200 HTTP response code, and optionally extra headers to be included in the request that is forwarded to the backend. When the request is denied, the Auth service will respond with a 403 HTTP response.
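The allow/deny handshake just described can be sketched as follows. This is a simplified model, not an implementation's code: `authorize` and the `X-Auth-User` header are hypothetical, and treating every non-200 status like a 403 is an assumption of the sketch.

```go
package main

import (
	"fmt"
	"net/http"
)

// authorize interprets an external Auth service reply. A 200 allows the
// request and copies the reply's extra headers onto the request that is
// forwarded to the backend. Treating every other status as a denial is
// an assumption of this sketch.
func authorize(status int, extra, forwarded map[string]string) bool {
	if status != http.StatusOK {
		return false
	}
	for name, value := range extra {
		forwarded[name] = value
	}
	return true
}

func main() {
	forwarded := map[string]string{"Path": "/admin"}
	extra := map[string]string{"X-Auth-User": "alice"} // hypothetical header

	allowed := authorize(http.StatusOK, extra, forwarded)
	fmt.Println("allowed:", allowed, "user header:", forwarded["X-Auth-User"])

	denied := authorize(http.StatusForbidden, nil, map[string]string{})
	fmt.Println("allowed:", denied)
}
```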

Since the Authorization header is used in many authentication methods, this method can be used to do Basic, OAuth, JWT, and other common authentication and authorization methods.

Mesh resource

Lead(s): Flynn

GEP-3949: Mesh-wide configuration and supported features

Gateway API v1.4.0 introduces a new experimental Mesh resource, which provides a way to configure mesh-wide settings and discover the features supported by a given mesh implementation. This resource is analogous to the Gateway resource and will initially be mainly used for conformance testing, with plans to extend its use to off-cluster Gateways in the future.

The Mesh resource is cluster-scoped and, as an experimental feature, is named XMesh and resides in the gateway.networking.x-k8s.io API group. A key field is controllerName, which specifies the mesh implementation responsible for the resource. The resource's status stanza indicates whether the mesh implementation has accepted it and lists the features the mesh supports.

One of the goals of this GEP is to avoid making it more difficult for users to adopt a mesh. To simplify adoption, mesh implementations are expected to create a default Mesh resource upon startup if one with a matching controllerName doesn't already exist. This avoids the need for manual creation of the resource to begin using a mesh.
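A startup routine along these lines might look like the Go sketch below. The `mesh` struct, the `ensureDefaultMesh` helper, and the default resource name are hypothetical; a real implementation would create the resource through the Kubernetes API rather than append to a slice.

```go
package main

import "fmt"

// mesh is a simplified stand-in for the XMesh resource.
type mesh struct {
	Name           string
	ControllerName string
}

// ensureDefaultMesh leaves the list unchanged when a Mesh with a matching
// controllerName already exists; otherwise it appends a default one.
// The boolean reports whether a resource was created.
func ensureDefaultMesh(existing []mesh, controllerName, defaultName string) ([]mesh, bool) {
	for _, m := range existing {
		if m.ControllerName == controllerName {
			return existing, false
		}
	}
	created := mesh{Name: defaultName, ControllerName: controllerName}
	return append(existing, created), true
}

func main() {
	const controller = "one-mesh.example.com/one-mesh"

	meshes, created := ensureDefaultMesh(nil, controller, "default-mesh")
	fmt.Println("created on first start:", created, meshes[0].Name)

	_, created = ensureDefaultMesh(meshes, controller, "default-mesh")
	fmt.Println("created on restart:", created)
}
```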

The new XMesh API kind, within the gateway.networking.x-k8s.io/v1alpha1 API group, provides a central point for mesh configuration and feature discovery.

A minimal XMesh object specifies the controllerName:

apiVersion: gateway.networking.x-k8s.io/v1alpha1
kind: XMesh
metadata:
  name: one-mesh-to-mesh-them-all
spec:
  controllerName: one-mesh.example.com/one-mesh

The mesh implementation populates the status field to confirm it has accepted the resource and to list its supported features:

status:
  conditions:
    - type: Accepted
      status: "True"
      reason: Accepted
  supportedFeatures:
    - name: MeshHTTPRoute
    - name: OffClusterGateway

Introducing default Gateways

Lead(s): Flynn

GEP-3793: Allowing Gateways to program some routes by default.

For application developers, one common piece of feedback has been the need to explicitly name a parent Gateway for every single north-south Route. While this explicitness prevents ambiguity, it adds friction, especially for developers who just want to expose their application to the outside world without worrying about the underlying infrastructure's naming scheme. To address this, we have introduced the concept of Default Gateways.

For application developers: Just "use the default"

As an application developer, you often don't care about the specific Gateway your traffic flows through; you just want it to work. With this enhancement, you can now create a Route and simply ask it to use a default Gateway.

This is done by setting the new useDefaultGateways field in your Route's spec.

Here’s a simple HTTPRoute that uses a default Gateway:

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: my-route
spec:
  useDefaultGateways: All
  rules:
  - backendRefs:
    - name: my-service
      port: 80

That's it! No more need to hunt down the correct Gateway name for your environment. Your Route is now a "defaulted Route."

For cluster operators: You're still in control

This feature doesn't take control away from cluster operators ("Chihiro"). In fact, they have explicit control over which Gateways can act as a default. A Gateway will only accept these defaulted Routes if it is configured to do so.

You can also use a ValidatingAdmissionPolicy to require Routes to rely on a default Gateway, or to forbid them from doing so.

As a cluster operator, you can designate a Gateway as a default by setting the (new) .spec.defaultScope field:

apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: my-default-gateway
  namespace: default
spec:
  defaultScope: All
  # ... other gateway configuration

Operators can choose to have no default Gateways, or even multiple.

How it works and key details

- To maintain a clean, GitOps-friendly workflow, a default Gateway does not modify the spec.parentRefs of your Route. Instead, the binding is reflected in the Route's status field. You can always inspect the status.parents stanza of your Route to see exactly which Gateway or Gateways have accepted it. This preserves your original intent and avoids conflicts with CD tools.

- The design explicitly supports having multiple Gateways designated as defaults within a cluster. When this happens, a defaulted Route will bind to all of them. This enables cluster operators to perform zero-downtime migrations and testing of new default Gateways.

- You can create a single Route that handles both north-south traffic (traffic entering or leaving the cluster, via a default Gateway) and east-west/mesh traffic (traffic between services within the cluster), by explicitly referencing a Service in parentRefs.
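The binding behaviour in the list above can be modelled with a short Go sketch. Everything here is a simplification under stated assumptions: the `gateway` and `route` structs and `statusParents` are hypothetical, only the "All" value shown in the examples is handled, and duplicate parents are not removed.

```go
package main

import "fmt"

// gateway is a simplified stand-in for a Gateway; DefaultScope "All"
// marks it as a default Gateway, and "" means it is not a default.
type gateway struct {
	Name         string
	DefaultScope string
}

// route is a simplified stand-in for a Route.
type route struct {
	ParentRefs         []string // explicit parents from the spec
	UseDefaultGateways string   // "All" opts in to default Gateways
}

// statusParents computes the parents that would be reported in the
// Route's status: the explicit parentRefs plus, when the Route opts in,
// every default Gateway. The Route's spec is never modified.
func statusParents(r route, gateways []gateway) []string {
	parents := append([]string{}, r.ParentRefs...)
	if r.UseDefaultGateways == "All" {
		for _, g := range gateways {
			if g.DefaultScope == "All" {
				parents = append(parents, g.Name)
			}
		}
	}
	return parents
}

func main() {
	gateways := []gateway{
		{Name: "old-default", DefaultScope: "All"},
		{Name: "private"},
		{Name: "new-default", DefaultScope: "All"},
	}
	r := route{UseDefaultGateways: "All"}
	fmt.Println("status parents:", statusParents(r, gateways))
	fmt.Println("spec parentRefs unchanged:", len(r.ParentRefs) == 0)
}
```

With two default Gateways present, the defaulted Route binds to both, which is the property that makes the migration scenario in the list possible.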

Default Gateways represent a significant step forward in making the Gateway API simpler and more intuitive for everyday use cases, bridging the gap between the flexibility needed by operators and the simplicity desired by developers.

Configuring client certificate validation

Lead(s): Arko Dasgupta, Katarzyna Łach

GEP-91: Address connection coalescing security issue

This release brings updates for configuring client certificate validation, addressing a critical security vulnerability related to connection reuse. HTTP connection coalescing is a web performance optimization that allows a client to reuse an existing TLS connection for requests to different domains. While this reduces the overhead of establishing new connections, it introduces a security risk in the context of API gateways. The ability to reuse a single TLS connection across multiple Listeners brings the need to introduce shared client certificate configuration in order to avoid unauthorized access.

Why SNI-based mTLS is not the answer

One might think that using Server Name Indication (SNI) to differentiate between Listeners would solve this problem. However, TLS SNI is not a reliable mechanism for enforcing security policies in a connection coalescing scenario. A client could use a single TLS connection for multiple peer connections, as long as they are all covered by the same certificate. This means that a client could establish a connection by indicating one peer identity (using SNI), and then reuse that connection to access a different virtual host that is listening on the same IP address and port. That reuse, which is controlled by client side heuristics, could bypass mutual TLS policies that were specific to the second listener configuration.

Here's an example to help explain it:

apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: wildcard-tls-gateway
spec:
  gatewayClassName: example
  listeners:
  - name: foo-https
    protocol: HTTPS
    port: 443
    hostname: foo.example.com
    tls:
      certificateRefs:
      - group: "" # core API group
        kind: Secret
        name: foo-example-com-cert # SAN: foo.example.com
  - name: wildcard-https
    protocol: HTTPS
    port: 443
    hostname: "*.example.com"
    tls:
      certificateRefs:
      - group: "" # core API group
        kind: Secret
        name: wildcard-example-com-cert # SAN: *.example.com

I have configured a Gateway with two listeners, both having overlapping hostnames.

My intention is for the foo-https listener to be accessible only by clients presenting the foo-example-com-cert certificate.

In contrast, the wildcard-https listener should allow access to a broader audience using any certificate valid for the *.example.com domain.

Consider a scenario where a client initially connects to foo.example.com. The server requests and successfully validates the foo-example-com-cert certificate, establishing the connection. Subsequently, the same client wishes to access other sites within this domain, such as bar.example.com, which is handled by the wildcard-https listener. Due to connection reuse, clients can access wildcard-https backends without an additional TLS handshake on the existing connection. This process functions as expected.

However, a critical security vulnerability arises when the order of access is reversed. If a client first connects to bar.example.com and presents a valid bar.example.com certificate, the connection is successfully established. If this client then attempts to access foo.example.com, the existing connection's client certificate will not be re-validated. This allows the client to bypass the specific certificate requirement for the foo backend, leading to a serious security breach.
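The bypass can be reproduced in a toy Go model. This is a sketch under heavy simplification: listeners are reduced to a hostname plus a set of accepted client certificate names (all hypothetical), and no real TLS is involved.

```go
package main

import (
	"fmt"
	"strings"
)

// listener is a toy model: a hostname pattern plus the client
// certificates it is meant to accept. Certificate names are hypothetical.
type listener struct {
	Name     string
	Hostname string
	Accepts  map[string]bool
}

// match picks the listener for a host, preferring an exact hostname
// over a wildcard such as "*.example.com".
func match(host string, listeners []listener) *listener {
	var wildcard *listener
	for i := range listeners {
		l := &listeners[i]
		if l.Hostname == host {
			return l
		}
		if strings.HasPrefix(l.Hostname, "*.") && strings.HasSuffix(host, l.Hostname[1:]) {
			wildcard = l
		}
	}
	return wildcard
}

// handshake validates the client certificate once, against the listener
// selected by SNI.
func handshake(sni, clientCert string, listeners []listener) bool {
	l := match(sni, listeners)
	return l != nil && l.Accepts[clientCert]
}

// reuse models a later request on the same connection: the listener is
// chosen by the requested host, but the certificate is not checked again.
func reuse(host string, listeners []listener) (string, bool) {
	l := match(host, listeners)
	if l == nil {
		return "", false
	}
	return l.Name, true
}

func main() {
	listeners := []listener{
		{Name: "foo-https", Hostname: "foo.example.com",
			Accepts: map[string]bool{"foo-client": true}},
		{Name: "wildcard-https", Hostname: "*.example.com",
			Accepts: map[string]bool{"foo-client": true, "bar-client": true}},
	}
	fmt.Println("direct handshake to foo:", handshake("foo.example.com", "bar-client", listeners))
	fmt.Println("handshake to bar:", handshake("bar.example.com", "bar-client", listeners))
	name, served := reuse("foo.example.com", listeners)
	fmt.Println("reused connection reaches:", name, served)
}
```

In this model a client holding only the hypothetical bar-client certificate is rejected when it handshakes directly with foo.example.com, yet reaches the foo-https listener once it reuses a connection opened for bar.example.com.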

The solution: per-port TLS configuration

The updated Gateway API gains a tls field in the .spec of a Gateway that allows you to define a default client certificate validation configuration for all Listeners and then, if needed, override it on a per-port basis. This provides a flexible and powerful way to manage your TLS policies.

Here’s a look at the updated API definitions (shown as Go source code):

// GatewaySpec defines the desired state of Gateway.
type GatewaySpec struct {
	...
	// GatewayTLSConfig specifies frontend tls configuration for gateway.
	TLS *GatewayTLSConfig `json:"tls,omitempty"`
}

// GatewayTLSConfig specifies frontend tls configuration for gateway.
type GatewayTLSConfig struct {
	// Default specifies the default client certificate validation configuration
	Default TLSConfig `json:"default"`

	// PerPort specifies tls configuration assigned per port.
	PerPort []TLSPortConfig `json:"perPort,omitempty"`
}

// TLSPortConfig describes a TLS configuration for a specific port.
type TLSPortConfig struct {
	// The Port indicates the Port Number to which the TLS configuration will be applied.
	Port PortNumber `json:"port"`

	// TLS store the configuration that will be applied to all Listeners handling
	// HTTPS traffic and matching given port.
	TLS TLSConfig `json:"tls"`
}
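How a default and its per-port overrides might be resolved is sketched below. The lowercase types mirror the shape of the definitions above but are hypothetical stand-ins; in particular the `CACertRef` field is invented for illustration, since the contents of TLSConfig are not shown here.

```go
package main

import "fmt"

// tlsConfig stands in for TLSConfig; its single field is hypothetical.
type tlsConfig struct {
	CACertRef string
}

// tlsPortConfig mirrors the shape of TLSPortConfig.
type tlsPortConfig struct {
	Port int32
	TLS  tlsConfig
}

// gatewayTLSConfig mirrors the shape of GatewayTLSConfig.
type gatewayTLSConfig struct {
	Default tlsConfig
	PerPort []tlsPortConfig
}

// effectiveTLS returns the per-port configuration when one matches the
// given port, and the default configuration otherwise. Every Listener on
// the same port therefore shares one client certificate policy.
func effectiveTLS(cfg gatewayTLSConfig, port int32) tlsConfig {
	for _, p := range cfg.PerPort {
		if p.Port == port {
			return p.TLS
		}
	}
	return cfg.Default
}

func main() {
	cfg := gatewayTLSConfig{
		Default: tlsConfig{CACertRef: "default-ca"},
		PerPort: []tlsPortConfig{
			{Port: 8443, TLS: tlsConfig{CACertRef: "partner-ca"}},
		},
	}
	fmt.Println("port 443 uses:", effectiveTLS(cfg, 443).CACertRef)
	fmt.Println("port 8443 uses:", effectiveTLS(cfg, 8443).CACertRef)
}
```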

Breaking changes

Standard GRPCRoute - .spec field required (technicality)

The promotion of GRPCRoute to Standard introduces a minor but technically breaking change regarding the presence of the top-level .spec field.

As part of achieving Standard status, the Gateway API has tightened the OpenAPI schema validation within the GRPCRoute CustomResourceDefinition (CRD) to explicitly ensure the spec field is required for all GRPCRoute resources.

This change enforces stricter conformance to Kubernetes object standards and enhances the resource's stability and predictability.

While it is highly unlikely that users were attempting to define a GRPCRoute without any specification, any existing automation or manifests that might have relied on a relaxed interpretation allowing a completely absent spec field will now fail validation and must be updated to include the .spec field, even if empty.

Experimental CORS support in HTTPRoute - breaking change for allowCredentials field

The Gateway API subproject has introduced a breaking change to the Experimental CORS support in HTTPRoute, concerning the allowCredentials field within the CORS policy. This field's definition has been strictly aligned with the upstream CORS specification, which dictates that the corresponding Access-Control-Allow-Credentials header must represent a Boolean value. Previously, the implementation might have been overly permissive, potentially accepting non-standard or string representations such as true due to relaxed schema validation.

Users who were configuring CORS rules must now review their manifests and ensure the value for allowCredentials strictly conforms to the new, more restrictive schema. Any existing HTTPRoute definitions that do not adhere to this stricter validation will now be rejected by the API server, requiring a configuration update to maintain functionality.

Improving the development and usage experience

As part of this release, we have improved some of the developer experience workflow:

- Added Kube API Linter to the CI/CD pipelines, reducing the burden on API reviewers and the number of common mistakes.

- Improved the execution time of CRD tests by using envtest.

Additionally, as part of the effort to improve the Gateway API usage experience, we removed some ambiguities and old technical debt from our documentation website:

- The API reference is now explicit when a field is experimental.

- The GEP (Gateway API Enhancement Proposal) navigation bar is automatically generated, reflecting the real status of the enhancements.

Try it out

Unlike other Kubernetes APIs, you don't need to upgrade to the latest version of Kubernetes to get the latest version of Gateway API. As long as you're running Kubernetes 1.26 or later, you'll be able to get up and running with this version of Gateway API.

To try out the API, follow the Getting Started Guide.

As of this writing, seven implementations are already conformant with Gateway API v1.4.0. In alphabetical order:

- Agent Gateway (with kgateway)

- Airlock Microgateway

- Envoy Gateway

- GKE Gateway

- Istio

- kgateway

- Traefik Proxy

Get involved

Wondering when a feature will be added? There are lots of opportunities to get involved and help define the future of Kubernetes routing APIs for both ingress and service mesh.

- Check out the user guides to see what use-cases can be addressed.

- Try out one of the existing Gateway controllers.

- Or join us in the community and help us build the future of Gateway API together!

The maintainers would like to thank everyone who's contributed to Gateway API, whether in the form of commits to the repo, discussion, ideas, or general support. We could never have made this kind of progress without the support of this dedicated and active community.

Related Kubernetes blog articles

- Gateway API v1.3.0: Advancements in Request Mirroring, CORS, Gateway Merging, and Retry Budgets (June 2025)

- Gateway API v1.2: WebSockets, Timeouts, Retries, and More (November 2024)

- Gateway API v1.1: Service mesh, GRPCRoute, and a whole lot more (May 2024)

- New Experimental Features in Gateway API v1.0 (November 2023)

- Gateway API v1.0: GA Release (October 2023)

Rigged Poker Games

The Department of Justice has indicted thirty-one people over the high-tech rigging of high-stakes poker games.

In a typical legitimate poker game, a dealer uses a shuffling machine to shuffle the cards randomly before dealing them to all the players in a particular order. As set forth in the indictment, the rigged games used altered shuffling machines that contained hidden technology allowing the machines to read all the cards in the deck. Because the cards were always dealt in a particular order to the players at the table, the machines could determine which player would have the winning hand. This information was transmitted to an off-site member of the conspiracy, who then transmitted that information via cellphone back to a member of the conspiracy who was playing at the table, referred to as the “Quarterback” or “Driver.” The Quarterback then secretly signaled this information (usually by prearranged signals like touching certain chips or other items on the table) to other co-conspirators playing at the table, who were also participants in the scheme. Collectively, the Quarterback and other players in on the scheme (i.e., the cheating team) used this information to win poker games against unwitting victims, who sometimes lost tens or hundreds of thousands of dollars at a time. The defendants used other cheating technology as well, such as a chip tray analyzer (essentially, a poker chip tray that also secretly read all cards using hidden cameras), an x-ray table that could read cards face down on the table, and special contact lenses or eyeglasses that could read pre-marked cards. ...

Cloudflare Scrubs Aisuru Botnet from Top Domains List

For the past week, domains associated with the massive Aisuru botnet have repeatedly usurped Amazon, Apple, Google and Microsoft in Cloudflare’s public ranking of the most frequently requested websites. Cloudflare responded by redacting Aisuru domain names from their top websites list. The chief executive at Cloudflare says Aisuru’s overlords are using the botnet to boost their malicious domain rankings, while simultaneously attacking the company’s domain name system (DNS) service.

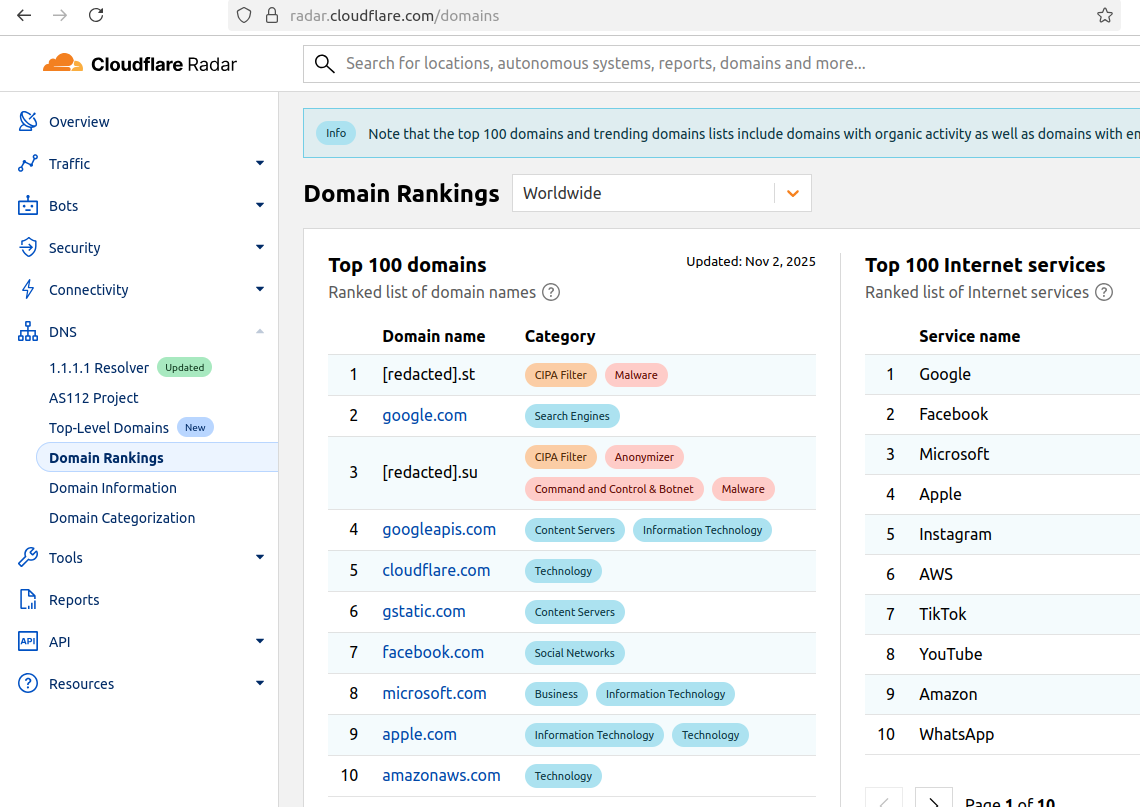

The #1 and #3 positions in this chart are Aisuru botnet controllers with their full domain names redacted. Source: radar.cloudflare.com.

Aisuru is a rapidly growing botnet comprising hundreds of thousands of hacked Internet of Things (IoT) devices, such as poorly secured Internet routers and security cameras. The botnet has increased in size and firepower significantly since its debut in 2024, demonstrating the ability to launch record distributed denial-of-service (DDoS) attacks nearing 30 terabits of data per second.

Until recently, Aisuru’s malicious code instructed all infected systems to use DNS servers from Google — specifically, the servers at 8.8.8.8. But in early October, Aisuru switched to invoking Cloudflare’s main DNS server — 1.1.1.1 — and over the past week domains used by Aisuru to control infected systems started populating Cloudflare’s top domain rankings.

As screenshots of Aisuru domains claiming two of the Top 10 positions ping-ponged across social media, many feared this was yet another sign that an already untamable botnet was running completely amok. One Aisuru botnet domain that sat prominently for days at #1 on the list was someone’s street address in Massachusetts followed by “.com”. Other Aisuru domains mimicked those belonging to major cloud providers.

Cloudflare tried to address these security, brand confusion and privacy concerns by partially redacting the malicious domains, and adding a warning at the top of its rankings:

“Note that the top 100 domains and trending domains lists include domains with organic activity as well as domains with emerging malicious behavior.”

Cloudflare CEO Matthew Prince told KrebsOnSecurity the company’s domain ranking system is fairly simplistic, and that it merely measures the volume of DNS queries to 1.1.1.1.

“The attacker is just generating a ton of requests, maybe to influence the ranking but also to attack our DNS service,” Prince said, adding that Cloudflare has heard reports of other large public DNS services seeing a similar uptick in attacks. “We’re fixing the ranking to make it smarter. And, in the meantime, redacting any sites we classify as malware.”

Renee Burton, vice president of threat intel at the DNS security firm Infoblox, said many people erroneously assumed that the skewed Cloudflare domain rankings meant there were more bot-infected devices than there were regular devices querying sites like Google and Apple and Microsoft.

“Cloudflare’s documentation is clear — they know that when it comes to ranking domains you have to make choices on how to normalize things,” Burton wrote on LinkedIn. “There are many aspects that are simply out of your control. Why is it hard? Because reasons. TTL values, caching, prefetching, architecture, load balancing. Things that have shared control between the domain owner and everything in between.”

Alex Greenland is CEO of the anti-phishing and security firm Epi. Greenland said he understands the technical reason why Aisuru botnet domains are showing up in Cloudflare’s rankings (those rankings are based on DNS query volume, not actual web visits). But he said they’re still not meant to be there.

“It’s a failure on Cloudflare’s part, and reveals a compromise of the trust and integrity of their rankings,” he said.

Greenland said Cloudflare planned for its Domain Rankings to list the most popular domains as used by human users, and it was never meant to be a raw calculation of query frequency or traffic volume going through their 1.1.1.1 DNS resolver.

“They spelled out how their popularity algorithm is designed to reflect real human use and exclude automated traffic (they said they’re good at this),” Greenland wrote on LinkedIn. “So something has evidently gone wrong internally. We should have two rankings: one representing trust and real human use, and another derived from raw DNS volume.”

Why might it be a good idea to wholly separate malicious domains from the list? Greenland notes that Cloudflare Domain Rankings see widespread use for trust and safety determination, by browsers, DNS resolvers, safe browsing APIs and things like TRANCO.

“TRANCO is a respected open source list of the top million domains, and Cloudflare Radar is one of their five data providers,” he continued. “So there can be serious knock-on effects when a malicious domain features in Cloudflare’s top 10/100/1000/million. To many people and systems, the top 10 and 100 are naively considered safe and trusted, even though algorithmically-defined top-N lists will always be somewhat crude.”

Over this past week, Cloudflare started redacting portions of the malicious Aisuru domains from its Top Domains list, leaving only their domain suffix visible. Sometime in the past 24 hours, Cloudflare appears to have begun hiding the malicious Aisuru domains entirely from the web version of that list. However, downloading a spreadsheet of the current Top 200 domains from Cloudflare Radar shows an Aisuru domain still at the very top.

According to Cloudflare’s website, the majority of DNS queries to the top Aisuru domains — nearly 52 percent — originated from the United States. This tracks with my reporting from early October, which found Aisuru was drawing most of its firepower from IoT devices hosted on U.S. Internet providers like AT&T, Comcast and Verizon.

Experts tracking Aisuru say the botnet relies on well more than a hundred control servers, and that for the moment at least most of those domains are registered in the .su top-level domain (TLD). Dot-su is the TLD assigned to the former Soviet Union (.su’s Wikipedia page says the TLD was created just 15 months before the fall of the Berlin wall).

A Cloudflare blog post from October 27 found that .su had the highest “DNS magnitude” of any TLD, referring to a metric estimating the popularity of a TLD based on the number of unique networks querying Cloudflare’s 1.1.1.1 resolver. The report concluded that the top .su hostnames were associated with a popular online world-building game, and that more than half of the queries for that TLD came from the United States, Brazil and Germany [it’s worth noting that servers for the world-building game Minecraft were some of Aisuru’s most frequent targets].

A simple and crude way to detect Aisuru bot activity on a network may be to set an alert on any systems attempting to contact domains ending in .su. This TLD is frequently abused for cybercrime and by cybercrime forums and services, and blocking access to it entirely is unlikely to raise any legitimate complaints.
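As a rough sketch of such an alert, the Go snippet below flags queried names that fall under .su. The log entries and the `isSuDomain` helper are hypothetical; a real deployment would read from whatever DNS logs or resolver policy the network already has.

```go
package main

import (
	"fmt"
	"strings"
)

// isSuDomain reports whether a queried name falls under the .su TLD.
// It ignores case, surrounding whitespace, and a trailing root dot.
func isSuDomain(name string) bool {
	n := strings.ToLower(strings.TrimSuffix(strings.TrimSpace(name), "."))
	return n == "su" || strings.HasSuffix(n, ".su")
}

func main() {
	// Hypothetical DNS log entries: client address and queried name.
	queries := [][2]string{
		{"10.0.0.5", "example.com"},
		{"10.0.0.9", "controller.example.su."},
		{"10.0.0.7", "su.example.com"},
	}
	for _, q := range queries {
		if isSuDomain(q[1]) {
			fmt.Printf("ALERT: %s queried %s\n", q[0], q[1])
		}
	}
}
```

Matching on the final label rather than on a substring keeps names such as su.example.com from triggering the alert.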

Exceptions in Cranelift and Wasmtime

Scientists Need a Positive Vision for AI

For many in the research community, it’s gotten harder to be optimistic about the impacts of artificial intelligence.

As authoritarianism is rising around the world, AI-generated “slop” is overwhelming legitimate media, while AI-generated deepfakes are spreading misinformation and parroting extremist messages. AI is making warfare more precise and deadly amidst intransigent conflicts. AI companies are exploiting people in the global South who work as data labelers, and profiting from content creators worldwide by using their work without license or compensation. The industry is also affecting an already-roiling climate with its ...

Cybercriminals Targeting Payroll Sites

Microsoft is warning of a scam involving online payroll systems. Criminals use social engineering to steal people’s credentials, and then divert direct deposits into accounts that they control. Sometimes they do other things to make it harder for the victim to realize what is happening.

I feel like this kind of thing is happening everywhere, with everything. As we move more of our personal and professional lives online, we enable criminals to subvert the very systems we rely on.