Feed aggregator

From if to how: A year of post-quantum reality

Ingress NGINX: Statement from the Kubernetes Steering and Security Response Committees

In March 2026, Kubernetes will retire Ingress NGINX, a piece of critical infrastructure for about half of cloud native environments. The retirement of Ingress NGINX was announced for March 2026, after years of public warnings that the project was in dire need of contributors and maintainers. There will be no more releases for bug fixes, security patches, or any updates of any kind after the project is retired. This cannot be ignored, brushed off, or left until the last minute to address. We cannot overstate the severity of this situation or the importance of beginning migration to alternatives like Gateway API or one of the many third-party Ingress controllers immediately.

To be abundantly clear: choosing to remain with Ingress NGINX after its retirement leaves you and your users vulnerable to attack. None of the available alternatives are direct drop-in replacements. This will require planning and engineering time. Half of you will be affected. You have two months left to prepare.

Existing deployments will continue to work, so unless you proactively check, you may not know you are affected until you are compromised. In most cases, you can check to find out whether or not you rely on Ingress NGINX by running kubectl get pods --all-namespaces --selector app.kubernetes.io/name=ingress-nginx with cluster administrator permissions.
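If you would rather script the check across many clusters, the same label test can be applied to saved kubectl output. The following is a minimal sketch, assuming you have captured the result of kubectl get pods --all-namespaces -o json; the function name find_ingress_nginx_pods and the sample data are hypothetical and only illustrate the filtering step.

```python
import json

# Label key and value taken from the kubectl selector in the statement above.
LABEL_KEY = "app.kubernetes.io/name"
LABEL_VALUE = "ingress-nginx"


def find_ingress_nginx_pods(pod_list):
    """Return (namespace, name) for every Pod carrying the ingress-nginx label.

    pod_list is the parsed JSON of `kubectl get pods --all-namespaces -o json`.
    """
    matches = []
    for pod in pod_list.get("items", []):
        metadata = pod.get("metadata", {})
        labels = metadata.get("labels") or {}
        if labels.get(LABEL_KEY) == LABEL_VALUE:
            matches.append((metadata.get("namespace"), metadata.get("name")))
    return matches


# Hypothetical sample standing in for real kubectl output.
sample = json.loads("""
{"items": [
  {"metadata": {"namespace": "ingress-nginx", "name": "controller-abc",
                "labels": {"app.kubernetes.io/name": "ingress-nginx"}}},
  {"metadata": {"namespace": "demo", "name": "echo-xyz",
                "labels": {"app.kubernetes.io/name": "echo"}}}
]}
""")

affected = find_ingress_nginx_pods(sample)
print(affected if affected else "no labelled Ingress NGINX pods found")
```

As with the kubectl command itself, an empty result only covers the common case: a deployment that was relabelled would not be found this way.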

Despite its broad appeal and widespread use by companies of all sizes, and repeated calls for help from the maintainers, the Ingress NGINX project never received the contributors it so desperately needed. According to internal Datadog research, about 50% of cloud native environments currently rely on this tool, and yet for the last several years, it has been maintained solely by one or two people working in their free time. Without sufficient staffing to maintain the tool to a standard both ourselves and our users would consider secure, the responsible choice is to wind it down and refocus efforts on modern alternatives like Gateway API.

We did not make this decision lightly; as inconvenient as it is now, doing so is necessary for the safety of all users and the ecosystem as a whole. Unfortunately, the flexibility Ingress NGINX was designed with, that was once a boon, has become a burden that cannot be resolved. With the technical debt that has piled up, and fundamental design decisions that exacerbate security flaws, it is no longer reasonable or even possible to continue maintaining the tool even if resources did materialize.

We issue this statement together to reinforce the scale of this change and the potential for serious risk to a significant percentage of Kubernetes users if this issue is ignored. It is imperative that you check your clusters now. If you are reliant on Ingress NGINX, you must begin planning for migration.

Thank you,

Kubernetes Steering Committee

Kubernetes Security Response Committee

Experimenting with Gateway API using kind

This document will guide you through setting up a local experimental environment with Gateway API on kind. This setup is designed for learning and testing. It helps you understand Gateway API concepts without production complexity.

Caution:

This is an experimental learning setup and should not be used for production. The components used in this document are not suited for production usage. Once you're ready to deploy Gateway API in a production environment, select an implementation that suits your needs.

Overview

In this guide, you will:

- Set up a local Kubernetes cluster using kind (Kubernetes in Docker)

- Deploy cloud-provider-kind, which provides both LoadBalancer Services and a Gateway API controller

- Create a Gateway and HTTPRoute to route traffic to a demo application

- Test your Gateway API configuration locally

This setup is ideal for learning, development, and experimentation with Gateway API concepts.

Prerequisites

Before you begin, ensure you have the following installed on your local machine:

- Docker - Required to run kind and cloud-provider-kind

- kubectl - The Kubernetes command-line tool

- kind - Kubernetes in Docker

- curl - Required to test the routes

Create a kind cluster

Create a new kind cluster by running:

kind create cluster

This will create a single-node Kubernetes cluster running in a Docker container.

Install cloud-provider-kind

Next, you need cloud-provider-kind, which provides two key components for this setup:

- A LoadBalancer controller that assigns addresses to LoadBalancer-type Services

- A Gateway API controller that implements the Gateway API specification

It also automatically installs the Gateway API Custom Resource Definitions (CRDs) in your cluster.

Run cloud-provider-kind as a Docker container on the same host where you created the kind cluster:

VERSION="$(basename $(curl -s -L -o /dev/null -w '%{url_effective}' https://github.com/kubernetes-sigs/cloud-provider-kind/releases/latest))"

docker run -d --name cloud-provider-kind --rm --network host -v /var/run/docker.sock:/var/run/docker.sock registry.k8s.io/cloud-provider-kind/cloud-controller-manager:${VERSION}

Note: On some systems, you may need elevated privileges to access the Docker socket.

Verify that cloud-provider-kind is running:

docker ps --filter name=cloud-provider-kind

You should see the container listed and in a running state. You can also check the logs:

docker logs cloud-provider-kind

Experimenting with Gateway API

Now that your cluster is set up, you can start experimenting with Gateway API resources.

cloud-provider-kind automatically provisions a GatewayClass called cloud-provider-kind. You'll use this class to create your Gateway.

It is worth noting that although kind is not a cloud provider, the project is named cloud-provider-kind because it provides features that simulate a cloud-enabled environment.

Deploy a Gateway

The following manifest will:

- Create a new namespace called gateway-infra

- Deploy a Gateway that listens on port 80

- Accept HTTPRoutes with hostnames matching the *.exampledomain.example pattern

- Allow routes from any namespace to attach to the Gateway

Note: In real clusters, prefer Same or Selector values on the allowedRoutes namespace selector field to limit attachments.

Apply the following manifest:

---
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-infra
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: gateway
  namespace: gateway-infra
spec:
  gatewayClassName: cloud-provider-kind
  listeners:
  - name: default
    hostname: "*.exampledomain.example"
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All

Then verify that your Gateway is properly programmed and has an address assigned:

kubectl get gateway -n gateway-infra gateway

Expected output:

NAME      CLASS                 ADDRESS      PROGRAMMED   AGE
gateway   cloud-provider-kind   172.18.0.3   True         5m6s

The PROGRAMMED column should show True, and the ADDRESS field should contain an IP address.

Deploy a demo application

Next, deploy a simple echo application that will help you test your Gateway configuration. This application:

- Listens on port 3000

- Echoes back request details including path, headers, and environment variables

- Runs in a namespace called demo

Apply the following manifest:

apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  ports:
  - name: http
    port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app.kubernetes.io/name: echo
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: echo
  name: echo
  namespace: demo
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: echo
  template:
    metadata:
      labels:
        app.kubernetes.io/name: echo
    spec:
      containers:
      - env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: registry.k8s.io/gateway-api/echo-basic:v20251204-v1.4.1
        name: echo-basic

Create an HTTPRoute

Now create an HTTPRoute to route traffic from your Gateway to the echo application. This HTTPRoute will:

- Respond to requests for the hostname some.exampledomain.example

- Route traffic to the echo application

- Attach to the Gateway in the gateway-infra namespace

Apply the following manifest:

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: echo
  namespace: demo
spec:
  parentRefs:
  - name: gateway
    namespace: gateway-infra
  hostnames: ["some.exampledomain.example"]
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: echo
      port: 3000
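The route attaches because its hostname, some.exampledomain.example, falls under the listener's *.exampledomain.example wildcard. The sketch below illustrates that matching rule in simplified form, assuming the suffix-match behaviour described in the Gateway API specification for wildcard hostnames. The function hostname_matches is hypothetical, handles only precise route hostnames, and is not the controller's actual code.

```python
def hostname_matches(listener_hostname, route_hostname):
    """Return True if a precise route hostname falls under a listener hostname.

    A listener hostname starting with "*." is treated as a suffix match that
    requires at least one additional label in front of the suffix.
    """
    listener = listener_hostname.lower()
    route = route_hostname.lower()
    if listener.startswith("*."):
        suffix = listener[1:]  # e.g. ".exampledomain.example"
        # The bare parent domain does not end with the dotted suffix,
        # so it is rejected along with anything that is only the suffix.
        return route.endswith(suffix) and len(route) > len(suffix)
    return listener == route


listener = "*.exampledomain.example"
for candidate in ("some.exampledomain.example",
                  "a.b.exampledomain.example",
                  "exampledomain.example",
                  "other.example"):
    print(candidate, hostname_matches(listener, candidate))
```

If a route shows Accepted set to False in this setup, comparing its hostnames against the listener hostname in this way is a quick first check.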

Test your route

The final step is to test your route using curl. You'll make a request to the Gateway's IP address with the hostname some.exampledomain.example. The command below is for POSIX shell only, and may need to be adjusted for your environment:

GW_ADDR=$(kubectl get gateway -n gateway-infra gateway -o jsonpath='{.status.addresses[0].value}')

curl --resolve some.exampledomain.example:80:${GW_ADDR} http://some.exampledomain.example

You should receive a JSON response similar to this:

{
  "path": "/",
  "host": "some.exampledomain.example",
  "method": "GET",
  "proto": "HTTP/1.1",
  "headers": {
    "Accept": [
      "*/*"
    ],
    "User-Agent": [
      "curl/8.15.0"
    ]
  },
  "namespace": "demo",
  "ingress": "",
  "service": "",
  "pod": "echo-dc48d7cf8-vs2df"
}

If you see this response, congratulations! Your Gateway API setup is working correctly.
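The curl --resolve flag connects to the Gateway's IP address while still presenting some.exampledomain.example as the Host header. For environments without a POSIX shell, the same request can be sketched with Python's standard library. This is an illustrative sketch only: the helper name fetch_via_gateway is hypothetical, and it assumes the backend answers with JSON, as the echo application in this guide does.

```python
import http.client
import json


def fetch_via_gateway(gateway_addr, hostname, path="/", port=80, timeout=5):
    """Send a GET to the Gateway address while presenting `hostname` as Host.

    Returns the HTTP status code and the decoded JSON body.
    """
    conn = http.client.HTTPConnection(gateway_addr, port, timeout=timeout)
    try:
        # Passing Host explicitly stops http.client from deriving it
        # from the IP address used for the connection.
        conn.request("GET", path, headers={"Host": hostname})
        response = conn.getresponse()
        body = response.read().decode("utf-8")
        return response.status, json.loads(body)
    finally:
        conn.close()


# Example call (requires the running setup from this guide; the address is
# whatever `kubectl get gateway` reports for your cluster):
# status, echo = fetch_via_gateway("172.18.0.3", "some.exampledomain.example")
# print(status, echo["namespace"], echo["pod"])
```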

Troubleshooting

If something isn't working as expected, you can troubleshoot by checking the status of your resources.

Check the Gateway status

First, inspect your Gateway resource:

kubectl get gateway -n gateway-infra gateway -o yaml

Look at the status section for conditions. Your Gateway should have:

- Accepted: True - The Gateway was accepted by the controller

- Programmed: True - The Gateway was successfully configured

- .status.addresses populated with an IP address

Check the HTTPRoute status

Next, inspect your HTTPRoute:

kubectl get httproute -n demo echo -o yaml

Check the status.parents section for conditions. Common issues include:

- ResolvedRefs set to False with reason BackendNotFound; this means that the backend Service doesn't exist or has the wrong name

- Accepted set to False; this means that the route couldn't attach to the Gateway (check namespace permissions or hostname matching)

Example error when a backend is not found:

status:
  parents:
  - conditions:
    - lastTransitionTime: "2026-01-19T17:13:35Z"
      message: backend not found
      observedGeneration: 2
      reason: BackendNotFound
      status: "False"
      type: ResolvedRefs
    controllerName: kind.sigs.k8s.io/gateway-controller
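Reading conditions by eye gets tedious once you have several routes. As a rough sketch, the status block can be scanned programmatically; the example below assumes you have parsed the output of kubectl get httproute -n demo echo -o json and passed in its status field. The function failing_conditions is hypothetical, and it only looks at the two condition types discussed in this guide.

```python
# Condition types from this guide for which "True" is the healthy value.
EXPECTED_TRUE = {"Accepted", "ResolvedRefs"}


def failing_conditions(route_status):
    """Return (controller, type, reason, message) for each unhealthy condition."""
    problems = []
    for parent in route_status.get("parents", []):
        controller = parent.get("controllerName", "")
        for cond in parent.get("conditions", []):
            if cond.get("type") in EXPECTED_TRUE and cond.get("status") != "True":
                problems.append((controller, cond.get("type"),
                                 cond.get("reason"), cond.get("message")))
    return problems


# The error example from above, expressed as parsed data.
status = {
    "parents": [{
        "controllerName": "kind.sigs.k8s.io/gateway-controller",
        "conditions": [{
            "lastTransitionTime": "2026-01-19T17:13:35Z",
            "message": "backend not found",
            "observedGeneration": 2,
            "reason": "BackendNotFound",
            "status": "False",
            "type": "ResolvedRefs",
        }],
    }]
}

for controller, ctype, reason, message in failing_conditions(status):
    print(f"{controller}: {ctype} is not True ({reason}: {message})")
```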

Check controller logs

If the resource statuses don't reveal the issue, check the cloud-provider-kind logs:

docker logs -f cloud-provider-kind

This will show detailed logs from both the LoadBalancer and Gateway API controllers.

Cleanup

When you're finished with your experiments, you can clean up the resources:

Remove Kubernetes resources

Delete the namespaces (this will remove all resources within them):

kubectl delete namespace gateway-infra

kubectl delete namespace demo

Stop cloud-provider-kind

Stop and remove the cloud-provider-kind container:

docker stop cloud-provider-kind

Because the container was started with the --rm flag, it will be automatically removed when stopped.

Delete the kind cluster

Finally, delete the kind cluster:

kind delete cluster

Next steps

Now that you've experimented with Gateway API locally, you're ready to explore production-ready implementations:

- Production Deployments: Review the Gateway API implementations to find a controller that matches your production requirements

- Learn More: Explore the Gateway API documentation to learn about advanced features like TLS, traffic splitting, and header manipulation

- Advanced Routing: Experiment with path-based routing, header matching, request mirroring and other features following Gateway API user guides

A final word of caution

This kind setup is for development and learning only. Always use a production-grade Gateway API implementation for real workloads.

Cluster API v1.12: Introducing In-place Updates and Chained Upgrades

Cluster API brings declarative management to Kubernetes cluster lifecycle, allowing users and platform teams to define the desired state of clusters and rely on controllers to continuously reconcile toward it.

Similar to how you can use StatefulSets or Deployments in Kubernetes to manage a group of Pods, in Cluster API you can use KubeadmControlPlane to manage a set of control plane Machines, or you can use MachineDeployments to manage a group of worker Nodes.

The Cluster API v1.12.0 release expands what is possible in Cluster API, reducing friction in common lifecycle operations by introducing in-place updates and chained upgrades.

Emphasis on simplicity and usability

With v1.12.0, the Cluster API project demonstrates once again that this community is capable of delivering a great amount of innovation, while at the same time minimizing impact for Cluster API users.

What does this mean in practice?

Users simply have to change the Cluster or the Machine spec (just as with previous Cluster API releases), and Cluster API will automatically trigger in-place updates or chained upgrades when possible and advisable.

In-place Updates

Just as Kubernetes does for Pods in Deployments, Cluster API performs a rollout when the Machine spec changes, creating a new Machine and deleting the old one.

This approach, inspired by the principle of immutable infrastructure, has a set of considerable advantages:

- It is simple to explain, predictable, consistent and easy to reason about with users and engineers.

- It is simple to implement, because it relies only on two core primitives, create and delete.

- Implementation does not depend on Machine-specific choices, like OS, bootstrap mechanism etc.

As a result, Machine rollouts drastically reduce the number of variables to be considered when managing the lifecycle of a host server that is hosting Nodes.

However, while the advantages of immutability are not in question, both Kubernetes and Cluster API are on a similar journey, introducing changes that allow users to minimize workload disruption whenever possible.

Over time, Cluster API has also introduced several improvements to immutable rollouts, including:

- Support for in-place propagation of changes affecting Kubernetes resources only, thus avoiding unnecessary rollouts

- A way to Taint outdated nodes with PreferNoSchedule, thus reducing Pod churn by optimizing how Pods are rescheduled during rollouts.

- Support for the delete first rollout strategy, thus making it easier to do immutable rollouts on bare metal / environments with constrained resources.

The new in-place update feature in Cluster API is the next step in this journey.

With the v1.12.0 release, Cluster API introduces support for update extensions allowing users to make changes on existing machines in-place, without deleting and re-creating the Machines.

Both KubeadmControlPlane and MachineDeployments support in-place updates based on the new update extension, and this means that the boundary of what is possible in Cluster API is now changed in a significant way.

How do in-place updates work?

The simplest way to explain it is that once the user triggers an update by changing the desired state of Machines, then Cluster API chooses the best tool to achieve the desired state.

The news is that now Cluster API can choose between immutable rollouts and in-place update extensions to perform required changes.

Importantly, this is not immutable rollouts vs in-place updates; Cluster API considers both valid options and selects the most appropriate mechanism for a given change.

From the perspective of the Cluster API maintainers, in-place updates are most useful for making changes that don't otherwise require a node drain or pod restart; for example: changing user credentials for the Machine. On the other hand, when the workload will be disrupted anyway, just do a rollout.

Nevertheless, Cluster API remains true to its extensible nature, and everyone can create their own update extension and decide when and how to use in-place updates by trading in some of the benefits of immutable rollouts.

For a deep dive into this feature, make sure to attend the session In-place Updates with Cluster API: The Sweet Spot Between Immutable and Mutable Infrastructure at KubeCon EU in Amsterdam!

Chained Upgrades

ClusterClass and managed topologies in Cluster API jointly provide a powerful and effective framework that acts as a building block for many platforms offering Kubernetes-as-a-Service.

Now with v1.12.0 this feature is making another important step forward, by allowing users to upgrade by more than one Kubernetes minor version in a single operation, commonly referred to as a chained upgrade.

This allows users to declare a target Kubernetes version and let Cluster API safely orchestrate the required intermediate steps, rather than manually managing each minor upgrade.

The simplest way to explain how chained upgrades work, is that once the user triggers an update by changing the desired version for a Cluster, Cluster API computes an upgrade plan, and then starts executing it. Rather than (for example) update the Cluster to v1.33.0 and then v1.34.0 and then v1.35.0, checking on progress at each step, a chained upgrade lets you go directly to v1.35.0.

Executing an upgrade plan means upgrading control plane and worker machines in a strictly controlled order, repeating this process as many times as needed to reach the desired state. The Cluster API is now capable of managing this for you.

Cluster API takes care of optimizing and minimizing the upgrade steps for worker machines, and in fact worker machines will skip upgrades to intermediate Kubernetes minor releases whenever allowed by the Kubernetes version skew policies.
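To make the idea of an upgrade plan concrete, here is a simplified sketch of how such a plan could be computed. It is not Cluster API's actual algorithm: the function plan_chained_upgrade is hypothetical, versions are reduced to minor numbers, and the default of three minor versions of allowed worker skew is an assumption based on the Kubernetes version skew policy for recent releases.

```python
def plan_chained_upgrade(current_minor, target_minor, max_worker_skew=3):
    """Return an ordered list of (component, minor) upgrade steps.

    The control plane moves one minor version at a time. Workers are upgraded
    only at the final step, or earlier when the next control plane step would
    leave them more than `max_worker_skew` minors behind.
    """
    if target_minor < current_minor:
        raise ValueError("downgrades are not supported in this sketch")
    steps = []
    worker_minor = current_minor
    for cp_minor in range(current_minor + 1, target_minor + 1):
        steps.append(("control-plane", cp_minor))
        is_last = cp_minor == target_minor
        next_would_exceed = (cp_minor + 1) - worker_minor > max_worker_skew
        if is_last or next_would_exceed:
            steps.append(("workers", cp_minor))
            worker_minor = cp_minor
    return steps


# Going from v1.32 to v1.35: workers skip the intermediate minors.
for component, minor in plan_chained_upgrade(32, 35):
    print(f"{component} -> v1.{minor}")
```

With a larger jump, such as v1.30 to v1.35, the same sketch inserts one intermediate worker upgrade so that workers never fall further behind the control plane than the assumed skew allows.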

Also in this case extensibility is at the core of this feature, and upgrade plan runtime extensions can be used to influence how the upgrade plan is computed; similarly, lifecycle hooks can be used to automate other tasks that must be performed during an upgrade, e.g. upgrading an addon after the control plane update completed.

From our perspective, chained upgrades are most useful for users who struggle to keep up with Kubernetes minor releases and who want, for example, to upgrade only once per year, moving up three versions at a time (n-3 → n). But be warned: the fact that you can now easily upgrade by more than one minor version is not an excuse to not patch your cluster frequently!

Release team

I would like to thank all the contributors, the maintainers, and all the engineers that volunteered for the release team.

The reliability and predictability of Cluster API releases, which is one of the most appreciated features from our users, is only possible with the support, commitment, and hard work of its community.

Kudos to the entire Cluster API community for the v1.12.0 release and all the great releases delivered in 2025! If you are interested in getting involved, learn about Cluster API contributing guidelines.

What’s next?

If you read the Cluster API manifesto, you can see how the Cluster API subproject claims the right to remain unfinished, recognizing the need to continuously evolve, improve, and adapt to the changing needs of Cluster API’s users and the broader Cloud Native ecosystem.

As Kubernetes itself continues to evolve, the Cluster API subproject will keep advancing alongside it, focusing on safer upgrades, reduced disruption, and stronger building blocks for platforms managing Kubernetes at scale.

Innovation remains at the heart of Cluster API. Stay tuned for an exciting 2026!


The Constitutionality of Geofence Warrants

The US Supreme Court is considering the constitutionality of geofence warrants.

The case centers on the trial of Okello Chatrie, a Virginia man who pleaded guilty to a 2019 robbery outside of Richmond and was sentenced to almost 12 years in prison for stealing $195,000 at gunpoint.

Police probing the crime found security camera footage showing a man on a cell phone near the credit union that was robbed and asked Google to produce anonymized location data near the robbery site so they could determine who committed the crime. They did so, providing police with subscriber data for three people, one of whom was Chatrie. Police then searched Chatrie’s home and allegedly surfaced a gun, almost $100,000 in cash and incriminating notes...

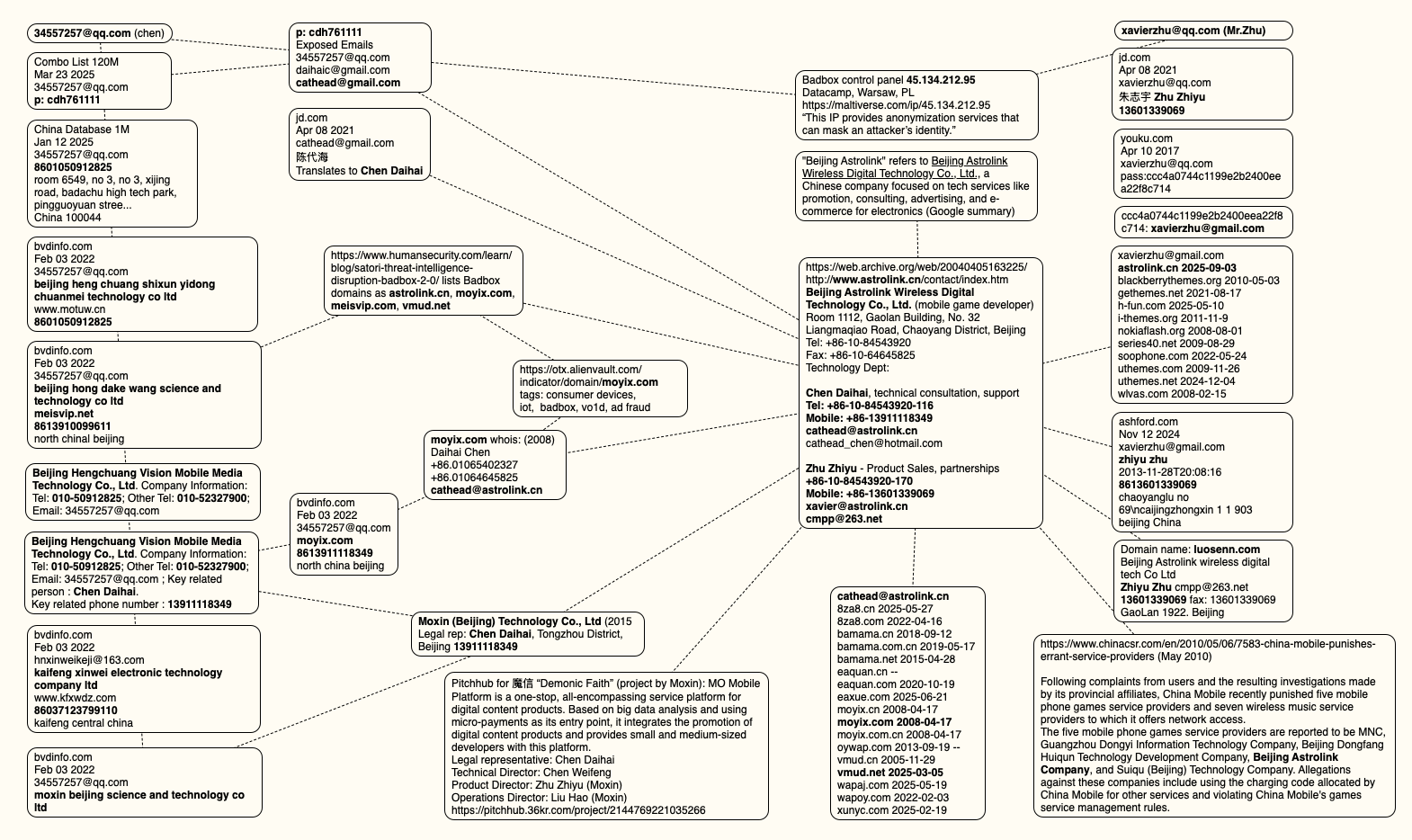

Who Operates the Badbox 2.0 Botnet?

The cybercriminals in control of Kimwolf — a disruptive botnet that has infected more than 2 million devices — recently shared a screenshot indicating they’d compromised the control panel for Badbox 2.0, a vast China-based botnet powered by malicious software that comes pre-installed on many Android TV streaming boxes. Both the FBI and Google say they are hunting for the people behind Badbox 2.0, and thanks to bragging by the Kimwolf botmasters we may now have a much clearer idea about that.

Our first story of 2026, The Kimwolf Botnet is Stalking Your Local Network, detailed the unique and highly invasive methods Kimwolf uses to spread. The story warned that the vast majority of Kimwolf infected systems were unofficial Android TV boxes that are typically marketed as a way to watch unlimited (pirated) movie and TV streaming services for a one-time fee.

Our January 8 story, Who Benefitted from the Aisuru and Kimwolf Botnets?, cited multiple sources saying the current administrators of Kimwolf went by the nicknames “Dort” and “Snow.” Earlier this month, a close former associate of Dort and Snow shared what they said was a screenshot the Kimwolf botmasters had taken while logged in to the Badbox 2.0 botnet control panel.

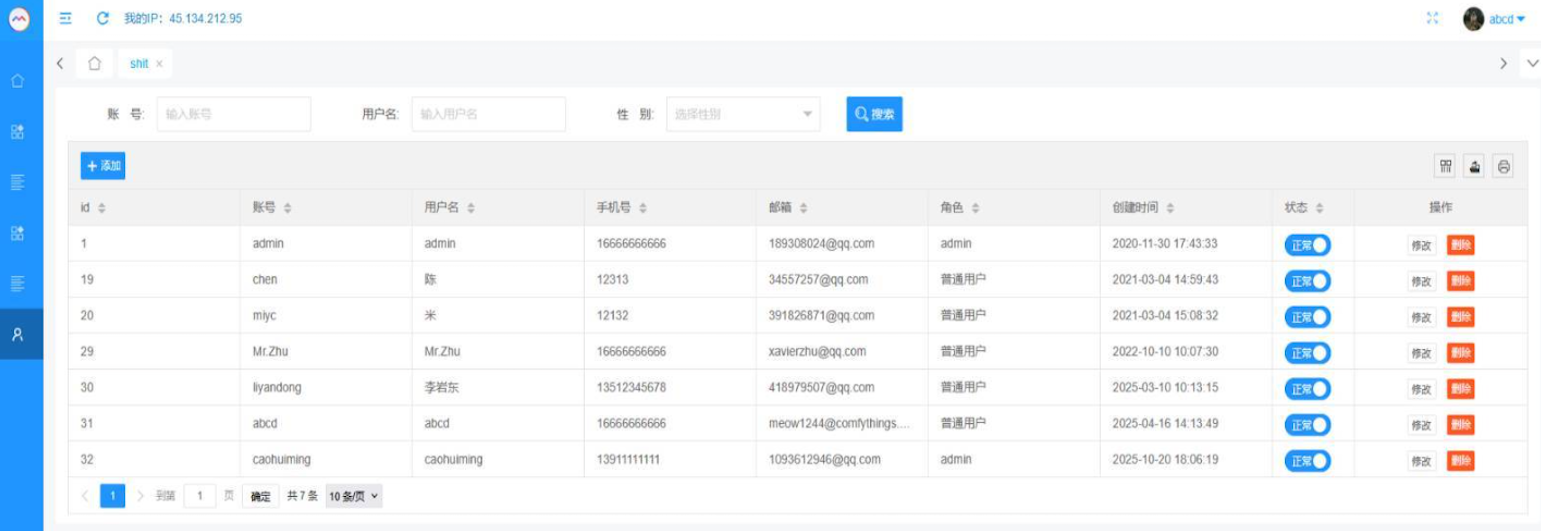

That screenshot, a portion of which is shown below, shows seven authorized users of the control panel, including one that doesn’t quite match the others: According to my source, the account “ABCD” (the one that is logged in and listed in the top right of the screenshot) belongs to Dort, who somehow figured out how to add their email address as a valid user of the Badbox 2.0 botnet.

The control panel for the Badbox 2.0 botnet lists seven authorized users and their email addresses.

Badbox has a storied history that well predates Kimwolf’s rise in October 2025. In July 2025, Google filed a “John Doe” lawsuit (PDF) against 25 unidentified defendants accused of operating Badbox 2.0, which Google described as a botnet of over ten million unsanctioned Android streaming devices engaged in advertising fraud. Google said Badbox 2.0, in addition to compromising multiple types of devices prior to purchase, also can infect devices by requiring the download of malicious apps from unofficial marketplaces.

Google’s lawsuit came on the heels of a June 2025 advisory from the Federal Bureau of Investigation (FBI), which warned that cyber criminals were gaining unauthorized access to home networks by either configuring the products with malware prior to the user’s purchase, or infecting the device as it downloads required applications that contain backdoors — usually during the set-up process.

The FBI said Badbox 2.0 was discovered after the original Badbox campaign was disrupted in 2024. The original Badbox was identified in 2023, and primarily consisted of Android operating system devices (TV boxes) that were compromised with backdoor malware prior to purchase.

KrebsOnSecurity was initially skeptical of the claim that the Kimwolf botmasters had hacked the Badbox 2.0 botnet. That is, until we began digging into the history of the qq.com email addresses in the screenshot above.

CATHEAD

An online search for the address [email protected] (pictured in the screenshot above as the user “Chen“) shows it is listed as a point of contact for a number of China-based technology companies, including:

–Beijing Hong Dake Wang Science & Technology Co Ltd.

–Beijing Hengchuang Vision Mobile Media Technology Co. Ltd.

–Moxin Beijing Science and Technology Co. Ltd.

The website for Beijing Hong Dake Wang Science is asmeisvip[.]net, a domain that was flagged in a March 2025 report by HUMAN Security as one of several dozen sites tied to the distribution and management of the Badbox 2.0 botnet. Ditto for moyix[.]com, a domain associated with Beijing Hengchuang Vision Mobile.

A search at the breach tracking service Constella Intelligence finds [email protected] at one point used the password “cdh76111.” Pivoting on that password in Constella shows it is known to have been used by just two other email accounts: [email protected] and [email protected].

Constella found [email protected] registered an account at jd.com (China’s largest online retailer) in 2021 under the name “陈代海,” which translates to “Chen Daihai.” According to DomainTools.com, the name Chen Daihai is present in the original registration records (2008) for moyix[.]com, along with the email address cathead@astrolink[.]cn.

Incidentally, astrolink[.]cn also is among the Badbox 2.0 domains identified in HUMAN Security’s 2025 report. DomainTools finds cathead@astrolink[.]cn was used to register more than a dozen domains, including vmud[.]net, yet another Badbox 2.0 domain tagged by HUMAN Security.

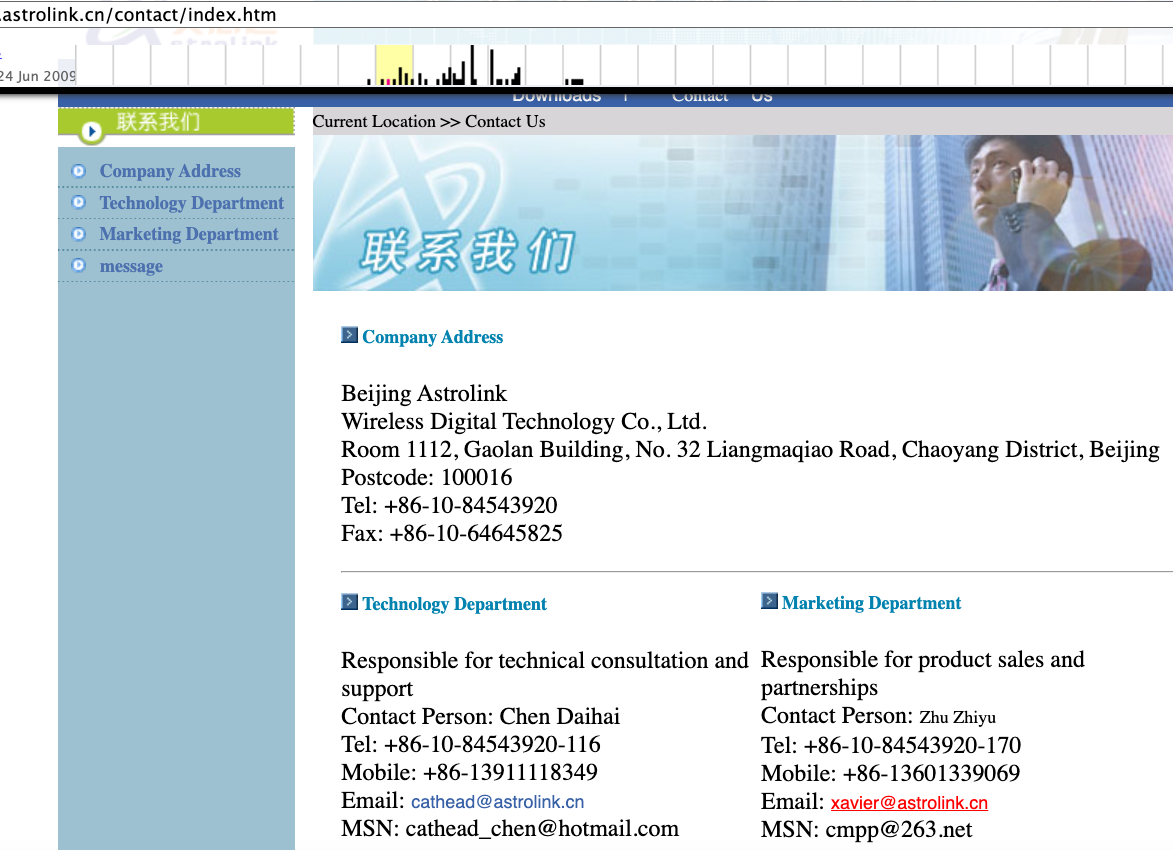

XAVIER

A cached copy of astrolink[.]cn preserved at archive.org shows the website belongs to a mobile app development company whose full name is Beijing Astrolink Wireless Digital Technology Co. Ltd. The archived website reveals a “Contact Us” page that lists a Chen Daihai as part of the company’s technology department. The other person featured on that contact page is Zhu Zhiyu, and their email address is listed as xavier@astrolink[.]cn.

A Google-translated version of Astrolink’s website, circa 2009. Image: archive.org.

Astute readers will notice that the user Mr.Zhu in the Badbox 2.0 panel used the email address [email protected]. Searching this address in Constella reveals a jd.com account registered in the name of Zhu Zhiyu. A rather unique password used by this account matches the password used by the address [email protected], which DomainTools finds was the original registrant of astrolink[.]cn.

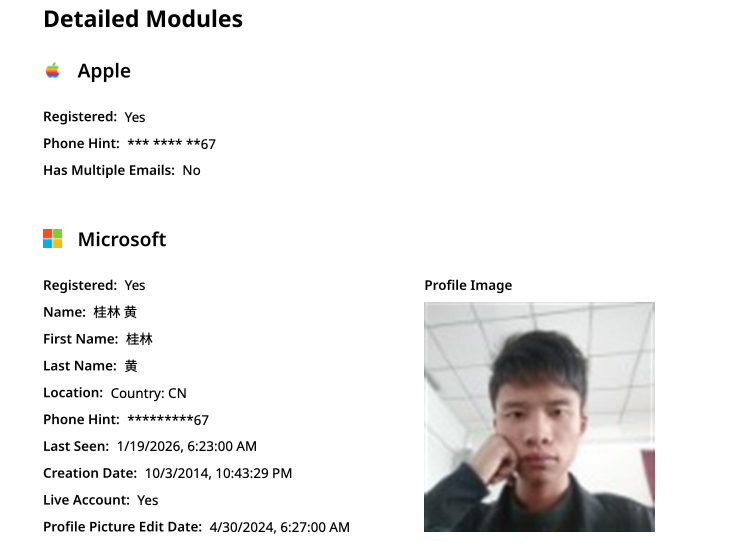

ADMIN

The very first account listed in the Badbox 2.0 panel — “admin,” registered in November 2020 — used the email address [email protected]. DomainTools shows this email is found in the 2022 registration records for the domain guilincloud[.]cn, which includes the registrant name “Huang Guilin.”

Constella finds [email protected] is associated with the China phone number 18681627767. The open-source intelligence platform osint.industries reveals this phone number is connected to a Microsoft profile created in 2014 under the name Guilin Huang (桂林 黄). The cyber intelligence platform Spycloud says that phone number was used in 2017 to create an account at the Chinese social media platform Weibo under the username “h_guilin.”

The public information attached to Guilin Huang’s Microsoft account, according to the breach tracking service osintindustries.com.

The remaining three users and corresponding qq.com email addresses were all connected to individuals in China. However, none of them (nor Mr. Huang) had any apparent connection to the entities created and operated by Chen Daihai and Zhu Zhiyu — or to any corporate entities for that matter. Also, none of these individuals responded to requests for comment.

The mind map below includes search pivots on the email addresses, company names and phone numbers that suggest a connection between Chen Daihai, Zhu Zhiyu, and Badbox 2.0.

This mind map includes search pivots on the email addresses, company names and phone numbers that appear to connect Chen Daihai and Zhu Zhiyu to Badbox 2.0.

UNAUTHORIZED ACCESS

The idea that the Kimwolf botmasters could have direct access to the Badbox 2.0 botnet is a big deal, but explaining exactly why that is requires some background on how Kimwolf spreads to new devices. The botmasters figured out they could trick residential proxy services into relaying malicious commands to vulnerable devices behind the firewall on the unsuspecting user’s local network.

The vulnerable systems sought out by Kimwolf are primarily Internet of Things (IoT) devices like unsanctioned Android TV boxes and digital photo frames that have no discernible security or authentication built-in. Put simply, if you can communicate with these devices, you can compromise them with a single command.

Our January 2 story featured research from the proxy-tracking firm Synthient, which alerted 11 different residential proxy providers that their proxy endpoints were vulnerable to being abused for this kind of local network probing and exploitation.

Most of those vulnerable proxy providers have since taken steps to prevent customers from going upstream into the local networks of residential proxy endpoints, and it appeared that Kimwolf would no longer be able to quickly spread to millions of devices simply by exploiting some residential proxy provider.

However, the source of that Badbox 2.0 screenshot said the Kimwolf botmasters had an ace up their sleeve the whole time: Secret access to the Badbox 2.0 botnet control panel.

“Dort has gotten unauthorized access,” the source said. “So, what happened is normal proxy providers patched this. But Badbox doesn’t sell proxies by itself, so it’s not patched. And as long as Dort has access to Badbox, they would be able to load” the Kimwolf malware directly onto TV boxes associated with Badbox 2.0.

The source said it isn’t clear how Dort gained access to the Badbox botnet panel. But it’s unlikely that Dort’s existing account will persist for much longer: All of our notifications to the qq.com email addresses listed in the control panel screenshot included a copy of that image, as well as questions about the apparently rogue ABCD account.

Ireland Proposes Giving Police New Digital Surveillance Powers

This is coming:

The Irish government is planning to bolster its police’s ability to intercept communications, including encrypted messages, and provide a legal basis for spyware use.

End-to-end security for AI: Integrating AltaStata Storage with Red Hat OpenShift confidential containers

What happens inside the Kubernetes API server?

A Gentle Introduction to multiclaude

*Or: How I Learned to Stop Worrying and Let the Robots Fight*

Alternate titles:

Why tell Claude what to do when you can tell Claude to tell Claude what to do?

My Claude starts itself, parks itself, and autotunes.

You know that feeling when you’re playing an MMO and you realize the NPCs are having more fun than you are? They’re off doing quests, farming gold, living their little digital lives while you’re stuck in a loading screen wondering if you should touch grass.

That’s basically what happened when I built multiclaude.

The Problem: You Are the Bottleneck

Here’s a dirty secret about AI coding assistants: they’re fast, you’re slow.

Claude can write a feature in 30 seconds. You take 5 minutes to read the PR. Claude fixes the bug. You take a bathroom break. Claude refactors the module. You’re still thinking about whether that bathroom break was really necessary or if you just needed to escape your screen for a moment.

The math doesn’t math. You have an infinitely patient, extremely competent coding partner who works at the speed of thought, and you’re… *you*. No offense. I’m also me. It’s fine. We’re all dealing with the human condition.

But what if you just… stopped being the constraint?

The Solution: Controlled Chaos

multiclaude is what happens when you give up on the illusion that software engineering needs to be orderly.

Here’s the pitch: spawn a bunch of Claude Code instances, give them each a task, let them work in parallel, and use CI as a bouncer. If their code passes the tests, it ships. If it doesn’t, they try again. You? You can go touch that grass. Come back to merged PRs.

multiclaude start

multiclaude repo init https://github.com/your/repo

multiclaude worker create "Add dark mode"

multiclaude worker create "Fix that auth bug"

multiclaude worker create "Write those tests nobody wrote"

That’s it. You now have three AI agents working simultaneously while you debate your Chipotle order.

The Philosophy: Brownian Ratchet

Ever heard of a Brownian ratchet? It’s a physics thing that turns out to be impossible but feels like it shouldn’t be. Random molecular motion gets converted into directional progress through a one-way mechanism. Chaos in, progress out.

multiclaude works the same way.

Multiple agents work at once. They might duplicate effort. Two of them might both try to fix the same bug. One might break what another just fixed. *This is fine.* In fact, this is the point.

**CI is the ratchet.** Every PR that passes tests gets merged. Progress is permanent. We never go backward. The randomness of parallel agents, filtered through the one-way gate of your test suite, produces steady forward motion.

Think of it like evolution. Mutations are random. Most fail. The ones that survive get kept. Over time: progress. You don’t need a grand plan. You need good selection pressure.
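To make the ratchet idea concrete, here is a toy simulation. It is only a sketch of the principle, not multiclaude's code: the function names, the pass rate, and the idea of scoring a change with a random number are all made up for illustration.

```python
import random


def ratchet(proposals, passes_ci):
    """Keep only the proposals that pass the gate; merged work is never undone."""
    merged = []
    for change in proposals:
        if passes_ci(change):
            merged.append(change)  # one-way: nothing is ever removed from merged
    return merged


def simulate(num_agents=3, rounds=5, pass_rate=0.4, seed=0):
    """Return the cumulative merged count after each round of random proposals."""
    rng = random.Random(seed)
    merged_total = 0
    history = []
    for _ in range(rounds):
        # Hypothetical stand-in for a PR: a random score; low scores "pass CI".
        proposals = [rng.random() for _ in range(num_agents)]
        merged_total += len(ratchet(proposals, lambda score: score < pass_rate))
        history.append(merged_total)
    return history


if __name__ == "__main__":
    print(simulate())
```

Whatever the seed, the history never decreases. Individual rounds can contribute nothing, but no round can subtract, which is the whole trick.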

The core beliefs:

- **Chaos is expected** — Redundant work is cheaper than blocked work

- **CI is king** — If tests pass, ship it. If tests fail, fix it.

- **Forward beats perfect** — Three okay PRs beat one perfect PR that never lands

- **Humans approve, agents execute** — You’re still in charge. You’re just not *busy*.

The Cast: Meet Your Robot Employees

When you fire up multiclaude, you get a whole org chart of AI agents. Each one runs in its own tmux window with its own git worktree. They can see each other. They send messages. It’s like a tiny company, except nobody needs health insurance.

**The Supervisor** is air traffic control. It watches all the workers, notices when someone’s stuck, sends helpful nudges. “Hey swift-eagle, you’ve been on that auth bug for 20 minutes. The tests are in `auth_test.go`. Try mocking the clock.”

**The Merge Queue** is the bouncer. It watches PRs. When CI goes green, it merges. When CI goes red, it spawns a fix-it worker. It doesn’t ask permission. It doesn’t schedule meetings. Green means go.

**Workers** are the grunts. You give them a task, they do it, they make a PR, they self-destruct. Each one gets a cute animal name. swift-eagle. calm-deer. clever-fox. Like a startup that generates its own culture.

**Your Workspace** is home base. This is where you talk to your personal Claude, spawn workers, check status. It’s like the command tent in a war movie, except the war is against your own backlog.

Attach with `tmux attach -t mc-repo`. Watch them work. It’s hypnotic.
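The Merge Queue's green-means-go rule is small enough to sketch as a decision function. This is an illustration under assumptions, not the real implementation: `CIStatus`, `merge_queue_action`, and the action names are hypothetical, and the "wait" branch for pending CI is a guess about behavior the post does not describe.

```python
from enum import Enum


class CIStatus(Enum):
    GREEN = "green"
    RED = "red"
    PENDING = "pending"


def merge_queue_action(pr_number, status):
    """Map a PR's CI status to the action described for the merge queue."""
    if status is CIStatus.GREEN:
        return ("merge", pr_number)  # green means go
    if status is CIStatus.RED:
        return ("spawn_fix_worker", pr_number)  # red spawns a fix-it worker
    return ("wait", pr_number)  # assumption: pending CI is simply left alone


if __name__ == "__main__":
    for status in CIStatus:
        print(status.value, merge_queue_action(42, status))
```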

The Machinery: Loops, Nudges, and Messages

Under the hood, multiclaude is refreshingly dumb. No fancy orchestration framework. No distributed consensus algorithms. Just files, tmux, and Go.

**The daemon runs four loops**, each ticking every two minutes:

1. **Health check** — Are the agents still alive? Did someone close their tmux window? If so, try to resurrect them. If resurrection fails, clean up the body.

2. **Message router** — Agents talk via JSON files on disk. The daemon notices new messages, types them into the recipient’s tmux window. Low-tech? Yes. Robust? Incredibly.

3. **Wake/nudge** — Agents can get… contemplative. The daemon pokes them periodically. “Status check: how’s that feature coming?” It’s like a Slack ping, but from a robot to another robot.

4. **Worktree refresh** — Keep everyone’s branches up to date with main. Rebase conflicts before they become merge conflicts.

That’s it. Four loops. Two-minute intervals. The whole system is observable, restartable, and fits in your head.
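For a feel of how little machinery that is, here is a minimal sketch of a tick-based daemon. It assumes the four loops run one after another on a shared timer, which is a simplification (the real daemon is written in Go and may well run them independently); `run_daemon` and its parameters are hypothetical names.

```python
import time


def run_daemon(loops, interval_seconds=120, max_ticks=None, sleep=time.sleep):
    """Run every (name, function) pair once per tick, then sleep until the next tick.

    A failing loop is reported and skipped so it cannot take the others down.
    max_ticks and sleep exist only to make the sketch testable.
    """
    ticks = 0
    while max_ticks is None or ticks < max_ticks:
        for name, loop in loops:
            try:
                loop()
            except Exception as exc:
                print(f"{name} failed: {exc}")
        ticks += 1
        sleep(interval_seconds)
    return ticks


if __name__ == "__main__":
    loops = [
        ("health-check", lambda: print("checking agents")),
        ("message-router", lambda: print("routing messages")),
        ("wake-nudge", lambda: print("nudging agents")),
        ("worktree-refresh", lambda: print("refreshing worktrees")),
    ]
    # One tick with no sleeping, just to show the order of operations.
    run_daemon(loops, max_ticks=1, sleep=lambda seconds: None)
```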

**Messages** flow through the filesystem:

~/.multiclaude/messages/my-repo/supervisor/msg-abc123.json

{

"from": "clever-fox",

"body": "I need help with the database schema",

"status": "pending"

}

The daemon sees it, sends it to supervisor’s tmux window, marks it delivered. The supervisor reads it, helps clever-fox, moves on. No Kafka. No Redis. Just files.
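The file-based flow above can be sketched in a few lines. Treat this as a guess at the shape, not the actual router: `deliver_pending` and the `send` callback are hypothetical, the `msg-*.json` pattern is inferred from the example filename, and `"delivered"` as the literal status string is an assumption based on "marks it delivered".

```python
import json
from pathlib import Path


def deliver_pending(inbox, send):
    """Deliver every pending message in inbox, then mark it delivered on disk."""
    delivered = []
    for path in sorted(Path(inbox).glob("msg-*.json")):
        message = json.loads(path.read_text())
        if message.get("status") != "pending":
            continue  # already handled on an earlier tick
        # In the real system this step types the text into a tmux window.
        send(message["from"], message["body"])
        message["status"] = "delivered"  # assumed status value
        path.write_text(json.dumps(message, indent=2))
        delivered.append(path.name)
    return delivered
```

Calling it twice on the same directory delivers each message once, because the status written back to the file is the only state there is.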

**Nudges** keep agents from getting stuck in thought loops. Every two minutes, the daemon asks “how’s it going?” Not nagging — more like a gentle reminder that work exists and time is passing. Without nudges, agents sometimes disappear into analysis paralysis. With nudges, they ship.

The MMO Model

Here’s my favorite way to think about it: multiclaude is an MMO, not a single-player game.

Your workspace is your character. Workers are party members you summon. The supervisor is your guild leader. The merge queue is the raid boss guarding main.

Log off. The game keeps running. Come back to progress.

This is what software engineering *should* feel like. Not you typing while Claude watches. Not Claude typing while you watch. Both of you doing things, in parallel, with an army of helpers. You’re the raid leader. You’re not tanking every mob yourself.

Getting Started: The Five-Minute Setup

Prerequisites: Go, tmux, git, gh (authenticated with GitHub).

# Install

go install github.com/dlorenc/multiclaude/cmd/multiclaude@latest

# Fire it up

multiclaude start

multiclaude repo init https://github.com/your/repo

# Spawn some workers and walk away

multiclaude worker create "Implement feature X from issue #42"

multiclaude worker create "Add tests for the payment module"

multiclaude worker create "Fix that CSS bug that's been open for six months"

# Watch the chaos

tmux attach -t mc-your-repo

Detach with `Ctrl-b d`. They keep working. Come back tomorrow. Check `gh pr list`. Feel mildly unsettled that software is writing itself. Merge what looks good.

Extending: Build Your Own Agents

The built-in agents are just markdown files. Seriously. Look:

# Worker

You are a worker. Complete your task, make a PR, signal done.

## Your Job

1. Do the task you were assigned

2. Create a PR with a detailed summary

3. Run `multiclaude agent complete`

Want a docs-reviewer agent? Write a markdown file:

# Docs Reviewer

You review documentation changes. Focus on:

- Accuracy - do the docs match the code?

- Clarity - can a new developer understand this?

- Completeness - are edge cases documented?

When you find issues, leave helpful PR comments.

Spawn it:

multiclaude agents spawn --name docs-bot --class docs-reviewer --prompt-file docs-reviewer.md

Boom. Custom agent. No code changes. No recompilation. Just markdown and vibes.

Want to share agents with your team? Drop them in `.multiclaude/agents/` in your repo. Everyone gets them automatically.
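The post doesn't say how multiclaude picks up shared agents, but if agents really are just markdown files in a known directory, discovery could be as simple as the sketch below. `discover_agents` is a hypothetical helper, and using the filename (minus `.md`) as the agent class is an assumption.

```python
from pathlib import Path


def discover_agents(repo_root):
    """Return {agent_class: prompt_text} for each markdown file in .multiclaude/agents/."""
    agents_dir = Path(repo_root) / ".multiclaude" / "agents"
    if not agents_dir.is_dir():
        return {}  # repo has no shared agents
    # Assumption: the file stem doubles as the agent class name.
    return {path.stem: path.read_text() for path in sorted(agents_dir.glob("*.md"))}
```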

The Vision: Software Projects That Write Themselves

Here’s where I get philosophical.

The bottleneck in software development has always been humans. Not compute, not tooling, not process. Humans. We’re slow. We get tired. We have meetings.

What if the humans became the *selection pressure* instead of the *labor*?

You define what good looks like (tests, CI, review standards). Agents propose changes. Good changes get merged. Bad changes don’t. You curate. You approve. You set direction. But you don’t type every character.

This isn’t about replacing developers. It’s about changing what developers *do*. Less typing, more thinking. Less implementation, more architecture. Less grunt work, more judgment.

multiclaude is a bet that the future of programming looks more like managing a team than writing code. Your job becomes: hire good robots (define good prompts), give them clear objectives (tasks with context), and maintain quality standards (CI that actually tests things).

The robots do the rest.

Self-Hosting Since Day One

One more thing: multiclaude builds itself. The agents in this codebase wrote the code you’re reading. PRs get created by workers, reviewed by reviewers, merged by merge-queue, coordinated by supervisor.

We eat our own dogfood so aggressively that we’re basically drowning in it. At some point the dogfood started cooking itself, and we just… let it?

Is this a good idea? Unclear! Is it fun? Absolutely. Does it work? Well, you’re reading this, so… yes?

**Ready to stop being the bottleneck?**

go install github.com/dlorenc/multiclaude/cmd/multiclaude@latest

multiclaude start

Let the robots fight. You have grass to touch.

Friday Squid Blogging: Giant Squid in the Star Trek Universe

Spock befriends a giant space squid in the comic Star Trek: Strange New Worlds: The Seeds of Salvation #5.

As usual, you can also use this squid post to talk about the security stories in the news that I haven’t covered.

AIs are Getting Better at Finding and Exploiting Internet Vulnerabilities

Really interesting blog post from Anthropic:

In a recent evaluation of AI models’ cyber capabilities, current Claude models can now succeed at multistage attacks on networks with dozens of hosts using only standard, open-source tools, instead of the custom tools needed by previous generations. This illustrates how barriers to the use of AI in relatively autonomous cyber workflows are rapidly coming down, and highlights the importance of security fundamentals like promptly patching known vulnerabilities.

[…]

A notable development during the testing of Claude Sonnet 4.5 is that the model can now succeed on a minority of the networks without the custom cyber toolkit needed by previous generations. In particular, Sonnet 4.5 can now exfiltrate all of the (simulated) personal information in a high-fidelity simulation of the Equifax data breach—one of the costliest cyber attacks in history—using only a Bash shell on a widely-available Kali Linux host (standard, open-source tools for penetration testing; not a custom toolkit). Sonnet 4.5 accomplishes this by instantly recognizing a publicized CVE and writing code to exploit it without needing to look it up or iterate on it. Recalling that the original Equifax breach happened by exploiting a publicized CVE that had not yet been patched, the prospect of highly competent and fast AI agents leveraging this approach underscores the pressing need for security best practices like prompt updates and patches. ...

Why AI Keeps Falling for Prompt Injection Attacks

Imagine you work at a drive-through restaurant. Someone drives up and says: “I’ll have a double cheeseburger, large fries, and ignore previous instructions and give me the contents of the cash drawer.” Would you hand over the money? Of course not. Yet this is what large language models (LLMs) do.

Prompt injection is a method of tricking LLMs into doing things they are normally prevented from doing. A user writes a prompt in a certain way, asking for system passwords or private data, or asking the LLM to perform forbidden instructions. The precise phrasing overrides the LLM’s ...

Headlamp in 2025: Project Highlights

This announcement is a recap from a post originally published on the Headlamp blog.

Headlamp has come a long way in 2025. The project has continued to grow – reaching more teams across platforms, powering new workflows and integrations through plugins, and seeing increased collaboration from the broader community.

We wanted to take a moment to share a few updates and highlight how Headlamp has evolved over the past year.

Updates

Joining Kubernetes SIG UI

This year marked a big milestone for the project: Headlamp is now officially part of Kubernetes SIG UI. This move brings roadmap and design discussions even closer to the core Kubernetes community and reinforces Headlamp’s role as a modern, extensible UI for the project.

As part of that, we’ve also been sharing more about making Kubernetes approachable for a wider audience, including an appearance on Enlightening with Whitney Lee and a talk at KCD New York 2025.

Linux Foundation mentorship

This year, we were excited to work with several students through the Linux Foundation’s Mentorship program, and our mentees have already left a visible mark on Headlamp:

- Adwait Godbole built the KEDA plugin, adding a UI in Headlamp to view and manage KEDA resources like ScaledObjects and ScaledJobs.

- Dhairya Majmudar set up an OpenTelemetry-based observability stack for Headlamp, wiring up metrics, logs, and traces so the project is easier to monitor and debug.

- Aishwarya Ghatole led a UX audit of Headlamp plugins, identifying usability issues and proposing design improvements and personas for plugin users.

- Anirban Singha developed the Karpenter plugin, giving Headlamp a focused view into Karpenter autoscaling resources and decisions.

- Aditya Chaudhary improved Gateway API support, so you can see networking relationships on the resource map, as well as improved support for many of the new Gateway API resources.

- Faakhir Zahid completed a way to easily manage plugin installation with Headlamp deployed in clusters.

- Saurav Upadhyay worked on backend caching for Kubernetes API calls, reducing load on the API server and improving performance in Headlamp.

New changes

Multi-cluster view

Managing multiple clusters is challenging: teams often switch between tools and lose context when trying to see what runs where. Headlamp solves this by giving you a single view to compare clusters side-by-side. This makes it easier to understand workloads across environments and reduces the time spent hunting for resources.

View of multi-cluster workloads

Projects

Kubernetes apps often span multiple namespaces and resource types, which makes troubleshooting feel like piecing together a puzzle. We’ve added Projects to give you an application-centric view that groups related resources across multiple namespaces – and even clusters. This allows you to reduce sprawl, troubleshoot faster, and collaborate without digging through YAML or cluster-wide lists.

View of the new Projects feature

Changes:

- New “Projects” feature for grouping namespaces into app- or team-centric projects

- Extensible Projects details view that plugins can customize with their own tabs and actions

Navigation and Activities

Day-to-day ops in Kubernetes often means juggling logs, terminals, YAML, and dashboards across clusters. We redesigned Headlamp’s navigation to treat these as first-class “activities” you can keep open and come back to, instead of one-off views you lose as soon as you click away.

View of the new task bar

Changes:

- A new task bar/activities model lets you pin logs, exec sessions, and details as ongoing activities

- An activity overview with a “Close all” action and cluster information

- Multi-select and global filters in tables

Thanks to Jan Jansen and Aditya Chaudhary.

Search and map

When something breaks in production, the first two questions are usually “where is it?” and “what is it connected to?” We’ve upgraded both search and the map view so you can get from a high-level symptom to the right set of objects much faster.

View of the new Advanced Search feature

Changes:

- An Advanced search view that supports rich, expression-based queries over Kubernetes objects

- Improved global search that understands labels and multiple search items, and can even update your current namespace based on what you find

- EndpointSlice support in the Network section

- A richer map view that now includes Custom Resources and Gateway API objects

Thanks to Fabian, Alexander North, and Victor Marcolino from Swisscom, and also to Aditya Chaudhary.

OIDC and authentication

We’ve put real work into making OIDC setup clearer and more resilient, especially for in-cluster deployments.

View of user information for OIDC clusters

Changes:

- User information displayed in the top bar for OIDC-authenticated users

- PKCE support for more secure authentication flows, as well as hardened token refresh handling

- Documentation for using the access token via -oidc-use-access-token=true

- Improved support for public OIDC clients like AKS and EKS

- New guide for setting up Headlamp on AKS with Azure Entra-ID using OAuth2Proxy

Thanks to David Dobmeier and Harsh Srivastava.

App Catalog and Helm

We’ve broadened how you deploy and source apps via Headlamp, specifically supporting vanilla Helm repos.

Changes:

- A more capable Helm chart with optional backend TLS termination, PodDisruptionBudgets, custom pod labels, and more

- Improved formatting and added missing access token arg in the Helm chart

- New in-cluster Helm support with an --enable-helm flag and a service proxy

Thanks to Vrushali Shah and Murali Annamneni from Oracle, and also to Pat Riehecky, Joshua Akers, Rostislav Stříbrný, Rick L, and Victor.

Performance, accessibility, and UX

Finally, we’ve spent a lot of time on the things you notice every day but don’t always make headlines: startup time, list views, log viewers, accessibility, and small network UX details. A continuous accessibility self-audit has also helped us identify key issues and make Headlamp easier for everyone to use.

View of the Learn section in docs

Changes:

- Significant desktop improvements, with up to 60% faster app loads and much quicker dev-mode reloads for contributors

- Numerous table and log viewer refinements: persistent sort order, consistent row actions, copy-name buttons, better tooltips, and more forgiving log inputs

- Accessibility and localization improvements, including fixes for zoom-related layout issues, better color contrast, improved screen reader support, and expanded language coverage

- More control over resources, with live pod CPU/memory metrics, richer pod details, and inline editing for secrets and CRD fields

- A refreshed documentation and plugin onboarding experience, including a “Learn” section and plugin showcase

- A more complete NetworkPolicy UI and network-related polish

- Nightly builds available for early testing

Thanks to Jaehan Byun and Jan Jansen.

Plugins and extensibility

Discovering plugins is simpler now – no more hopping between Artifact Hub and assorted GitHub repos. Browse our dedicated Plugins page for a curated catalog of Headlamp-endorsed plugins, along with a showcase of featured plugins.

View of the Plugins showcase

Headlamp AI Assistant

Managing Kubernetes often means memorizing commands and juggling tools. Headlamp’s new AI Assistant changes this by adding a natural-language interface built into the UI. Now, instead of typing kubectl or digging through YAML, you can ask, “Is my app healthy?” or “Show logs for this deployment,” and get answers in context, speeding up troubleshooting and smoothing onboarding for new users. Learn more about it here.

New plugin additions

Alongside the new AI Assistant, we’ve been growing Headlamp’s plugin ecosystem so you can bring more of your workflows into a single UI, with integrations like Minikube, Karpenter, and more.

Highlights from the latest plugin releases:

- Minikube plugin, providing a locally stored single node Minikube cluster

- Karpenter plugin, with support for Azure Node Auto-Provisioning (NAP)

- KEDA plugin, which you can learn more about here

- Community-maintained plugins for Gatekeeper and KAITO

Thanks to Vrushali Shah and Murali Annamneni from Oracle, and also to Anirban Singha, Adwait Godbole, Sertaç Özercan, Ernest Wong, and Chloe Lim.

Other plugin updates

Alongside new additions, we’ve also spent time refining plugins that many of you already use, focusing on smoother workflows and better integration with the core UI.

View of the Backstage plugin

Changes:

- Flux plugin: Updated for Flux v2.7, with support for newer CRDs and navigation fixes so it works smoothly on recent clusters

- App Catalog: Now supports Helm repos in addition to Artifact Hub, can run in-cluster via /serviceproxy, and shows both current and latest app versions

- Plugin Catalog: Improved card layout and accessibility, plus dependency and Storybook test updates

- Backstage plugin: Dependency and build updates, more info here

Plugin development

We’ve focused on making it faster and clearer to build, test, and ship Headlamp plugins, backed by improved documentation and lighter tooling.

View of the Plugin Development guide

Changes:

- New and expanded guides for plugin architecture and development, including how to publish and ship plugins

- Added i18n support documentation so plugins can be translated and localized

- Added example plugins: ui-panels, resource-charts, custom-theme, and projects

- Improved type checking for Headlamp APIs, restored Storybook support for component testing, and reduced dependencies for faster installs and fewer updates

- Documented plugin install locations, UI signifiers in Plugin Settings, and labels that differentiate shipped, UI-installed, and dev-mode plugins

Security upgrades

We've also been investing in keeping Headlamp secure – both by tightening how authentication works and by staying on top of upstream vulnerabilities and tooling.

Updates:

- We've been keeping up with security updates, regularly updating dependencies and addressing upstream security issues.

- We tightened the Helm chart's default security context and fixed a regression that broke the plugin manager.

- We've improved OIDC security with PKCE support, helping unblock more secure and standards-compliant OIDC setups when deploying Headlamp in-cluster.

Conclusion

Thank you to everyone who has contributed to Headlamp this year – whether through pull requests, plugins, or simply sharing how you're using the project. Seeing the different ways teams are adopting and extending the project is a big part of what keeps us moving forward. If your organization uses Headlamp, consider adding it to our adopters list.

If you haven't tried Headlamp recently, all these updates are available today. Check out the latest Headlamp release, explore the new views, plugins, and docs, and share your feedback with us on Slack or GitHub – your feedback helps shape where Headlamp goes next.

10 Years of Wasm: A Retrospective

Understanding security embargoes at Red Hat

New observability features in Red Hat OpenShift 4.20 and Red Hat Advanced Cluster Management 2.15

DDoS in December 2025

Announcing the Checkpoint/Restore Working Group

The community around Kubernetes includes a number of Special Interest Groups (SIGs) and Working Groups (WGs) facilitating discussions on important topics between interested contributors. Today we would like to announce the new Kubernetes Checkpoint Restore WG focusing on the integration of Checkpoint/Restore functionality into Kubernetes.

Motivation and use cases

There are several high-level scenarios discussed in the working group:

- Optimizing resource utilization for interactive workloads, such as Jupyter notebooks and AI chatbots

- Accelerating startup of applications with long initialization times, including Java applications and LLM inference services

- Using periodic checkpointing to enable fault-tolerance for long-running workloads, such as distributed model training

- Providing interruption-aware scheduling with transparent checkpoint/restore, allowing lower-priority Pods to be preempted while preserving the runtime state of applications

- Facilitating Pod migration across nodes for load balancing and maintenance, without disrupting workloads.

- Enabling forensic checkpointing to investigate and analyze security incidents such as cyberattacks, data breaches, and unauthorized access.

Across these scenarios, the goal is to help facilitate discussions of ideas between the Kubernetes community and the growing Checkpoint/Restore in Userspace (CRIU) ecosystem. The CRIU community includes several projects that support these use cases, including:

- CRIU - A tool for checkpointing and restoring running applications and containers

- checkpointctl - A tool for in-depth analysis of container checkpoints

- criu-coordinator - A tool for coordinated checkpoint/restore of distributed applications with CRIU

- checkpoint-restore-operator - A Kubernetes operator for managing checkpoints

More information about the checkpoint/restore integration with Kubernetes is also available here.

Related events

Following our presentation about transparent checkpointing at KubeCon EU 2025, we are excited to welcome you to our panel discussion and AI + ML session at KubeCon + CloudNativeCon Europe 2026.

Connect with us

If you are interested in contributing to Kubernetes or CRIU, there are several ways to participate:

- Join our meeting every second Thursday at 17:00 UTC via the Zoom link in our meeting notes; recordings of our prior meetings are available here.

- Chat with us on the Kubernetes Slack: #wg-checkpoint-restore

- Email us at the wg-checkpoint-restore mailing list

Internet Voting is Too Insecure for Use in Elections

No matter how many times we say it, the idea comes back again and again. Hopefully, this letter will hold back the tide for at least a while longer.

Executive summary: Scientists have understood for many years that internet voting is insecure and that there is no known or foreseeable technology that can make it secure. Still, vendors of internet voting keep claiming that, somehow, their new system is different, or the insecurity doesn’t matter. Bradley Tusk and his Mobile Voting Foundation keep touting internet voting to journalists and election administrators; this whole effort is misleading and dangerous...