CNCF Projects

Kubernetes v1.34: Decoupled Taint Manager Is Now Stable

This enhancement separates the responsibility of managing node lifecycle and pod eviction into two distinct components. Previously, the node lifecycle controller handled both marking nodes as unhealthy with NoExecute taints and evicting pods from them. Now, a dedicated taint eviction controller manages the eviction process, while the node lifecycle controller focuses solely on applying taints. This separation not only improves code organization but also makes it easier to improve the taint eviction controller or to build custom implementations of taint-based eviction.
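The eviction decision itself follows the standard NoExecute toleration rules. As a rough illustration, here is a minimal Python sketch of that decision for one pod on one node: an untolerated NoExecute taint means immediate eviction, tolerations without tolerationSeconds mean the pod stays, and otherwise the pod is evicted after the smallest tolerationSeconds among the matching tolerations. The Taint and Toleration classes and the eviction_delay function are hypothetical simplifications, not the controller's Go code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Taint:
    key: str
    value: str = ""
    effect: str = "NoExecute"


@dataclass
class Toleration:
    key: str = ""                             # empty key with operator Exists matches any taint
    operator: str = "Equal"                   # "Equal" or "Exists"
    value: str = ""
    effect: str = ""                          # empty effect matches every effect
    toleration_seconds: Optional[int] = None  # None means "tolerate forever"


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if the toleration matches the taint (simplified rules)."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.value == taint.value


def eviction_delay(taints: List[Taint], tolerations: List[Toleration]) -> Optional[int]:
    """Seconds until the pod should be evicted: 0 = now, None = never."""
    used = []
    for taint in taints:
        if taint.effect != "NoExecute":
            continue  # only NoExecute taints lead to eviction
        match = next((t for t in tolerations if tolerates(t, taint)), None)
        if match is None:
            return 0  # an untolerated NoExecute taint: evict immediately
        used.append(match)
    bounded = [t.toleration_seconds for t in used if t.toleration_seconds is not None]
    if not bounded:
        return None  # no NoExecute taints, or all tolerated without a time limit
    return max(0, min(bounded))
```

For example, with a 300-second toleration for the node.kubernetes.io/unreachable taint (the kind Kubernetes typically adds to pods by default), eviction_delay returns 300.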

What's new?

The feature gate SeparateTaintEvictionController has been promoted to GA in this release.

Users can optionally disable taint-based eviction by setting --controllers=*,-taint-eviction-controller in kube-controller-manager (the * keeps all other default-enabled controllers running).
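The --controllers flag of kube-controller-manager takes a comma-separated list in which * enables all on-by-default controllers, foo enables the controller named foo, and -foo disables it. The Python sketch below models how such a list is evaluated for a single controller, which is why * needs to be present when you only want to switch one controller off. The function name and the disabled_by_default parameter are hypothetical; this illustrates the flag's documented semantics and is not kube-controller-manager code.

```python
from typing import Iterable


def controller_enabled(name: str, controllers: Iterable[str],
                       disabled_by_default: frozenset = frozenset()) -> bool:
    """Evaluate a --controllers style list for one controller name."""
    has_star = False
    for entry in controllers:
        if entry == name:
            return True        # explicitly enabled
        if entry == "-" + name:
            return False       # explicitly disabled
        if entry == "*":
            has_star = True    # enable everything that is on by default
    if not has_star:
        return False           # without "*", only explicitly named controllers run
    return name not in disabled_by_default


flags = "*,-taint-eviction-controller".split(",")
print(controller_enabled("taint-eviction-controller", flags))   # False
print(controller_enabled("node-lifecycle-controller", flags))   # True
print(controller_enabled("node-lifecycle-controller", ["-taint-eviction-controller"]))  # False
```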

How can I learn more?

For more details, refer to the KEP and to the beta announcement article: Kubernetes 1.29: Decoupling taint manager from node lifecycle controller.

How to get involved?

We offer a huge thank you to all the contributors who helped with design, implementation, and review of this feature and helped move it from beta to stable:

- Ed Bartosh (@bart0sh)

- Yuan Chen (@yuanchen8911)

- Aldo Culquicondor (@alculquicondor)

- Baofa Fan (@carlory)

- Sergey Kanzhelev (@SergeyKanzhelev)

- Tim Bannister (@lmktfy)

- Maciej Skoczeń (@macsko)

- Maciej Szulik (@soltysh)

- Wojciech Tyczynski (@wojtek-t)

Kubernetes v1.34: Autoconfiguration for Node Cgroup Driver Goes GA

Historically, configuring the correct cgroup driver has been a pain point for users running new Kubernetes clusters. On Linux systems, there are two different cgroup drivers: cgroupfs and systemd. In the past, both the kubelet and CRI implementation (like CRI-O or containerd) needed to be configured to use the same cgroup driver, or else the kubelet would misbehave without any explicit error message. This was a source of headaches for many cluster admins. Now, we've (almost) arrived at the end of that headache.

Automated cgroup driver detection

In v1.28.0, the SIG Node community introduced the feature gate KubeletCgroupDriverFromCRI, which instructs the kubelet to ask the CRI implementation which cgroup driver to use. You can read more here.

After many releases of waiting for each CRI implementation to have major versions released and packaged in major operating systems, this feature has gone GA as of Kubernetes 1.34.0.

In addition to setting the feature gate, a cluster admin needs to ensure their CRI implementation is new enough:

- containerd: Support was added in v2.0.0

- CRI-O: Support was added in v1.28.0
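If you track runtime versions in an inventory, a quick check against the minimum versions listed above can flag nodes whose runtime cannot yet report its cgroup driver. The following Python sketch is a hypothetical helper, not part of any Kubernetes tooling; its version parsing is deliberately simple and treats a pre-release such as 2.0.0-rc.1 as if it were 2.0.0.

```python
# Minimum runtime versions that can report the cgroup driver to the kubelet,
# taken from the list above.
MIN_VERSIONS = {
    "containerd": (2, 0, 0),
    "cri-o": (1, 28, 0),
}


def parse_version(version: str) -> tuple:
    """Turn 'v2.0.1', '1.28' or '2.0.0-rc.1' into a (major, minor, patch) tuple."""
    core = version.lstrip("v").split("-")[0].split("+")[0]
    parts = [int(p) for p in core.split(".")]
    return tuple((parts + [0, 0, 0])[:3])


def reports_cgroup_driver(runtime: str, version: str) -> bool:
    """True if this runtime/version is new enough for KubeletCgroupDriverFromCRI."""
    minimum = MIN_VERSIONS.get(runtime.lower())
    if minimum is None:
        return False  # unknown runtime: do not assume support
    return parse_version(version) >= minimum


for runtime, version in [("containerd", "1.7.27"), ("containerd", "v2.0.4"), ("cri-o", "1.28.1")]:
    print(runtime, version, reports_cgroup_driver(runtime, version))
```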

Announcement: Kubernetes is deprecating containerd v1.y support

While CRI-O releases versions that match Kubernetes versions, and thus CRI-O versions without this behavior are no longer supported, containerd maintains its own release cycle. containerd support for this feature is only in v2.0 and later, but Kubernetes 1.34 still supports containerd 1.7 and other LTS releases of containerd.

The Kubernetes SIG Node community has formally agreed upon a final support timeline for containerd v1.y. The last Kubernetes release to offer this support will be the last patch release of v1.35, and support will be dropped in v1.36.0. To assist administrators in managing this future transition, a new detection mechanism is available: you can monitor the kubelet_cri_losing_support metric to determine whether any nodes in your cluster are using a containerd version that will soon be outdated. The presence of this metric with a version label of 1.36.0 indicates that the node's containerd runtime is not new enough for the upcoming requirements. Consequently, an administrator will need to upgrade containerd to v2.0 or a later version before, or at the same time as, upgrading the kubelet to v1.36.0.
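One way to act on this is to scan each kubelet's metrics output for that metric. The Python sketch below parses Prometheus text exposition format and collects the version label values of kubelet_cri_losing_support samples. Any labels beyond version are an assumption, and the sample input is illustrative, not real kubelet output. How you fetch the text is up to you; one common option is kubectl get --raw "/api/v1/nodes/<node-name>/proxy/metrics".

```python
import re

# A sample line looks like: kubelet_cri_losing_support{version="1.36.0"} 1
SAMPLE_RE = re.compile(r'^kubelet_cri_losing_support\{([^}]*)\}\s+\S+')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def losing_support_versions(metrics_text: str) -> set:
    """Return the Kubernetes versions this node's runtime is flagged for."""
    versions = set()
    for line in metrics_text.splitlines():
        match = SAMPLE_RE.match(line.strip())
        if not match:
            continue  # skips # HELP / # TYPE comments and unrelated metrics
        labels = dict(LABEL_RE.findall(match.group(1)))
        if "version" in labels:
            versions.add(labels["version"])
    return versions


example = """\
# HELP kubelet_cri_losing_support illustrative sample, not real kubelet output
kubelet_cri_losing_support{version="1.36.0"} 1
kubelet_running_pods 12
"""
print(losing_support_versions(example))  # {'1.36.0'}
```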

Kubernetes v1.34: Mutable CSI Node Allocatable Graduates to Beta

The functionality for CSI drivers to update information about attachable volume count on the nodes, first introduced as Alpha in Kubernetes v1.33, has graduated to Beta in the Kubernetes v1.34 release! This marks a significant milestone in enhancing the accuracy of stateful pod scheduling by reducing failures due to outdated attachable volume capacity information.

Background

Traditionally, Kubernetes CSI drivers report a static maximum volume attachment limit when initializing. However, actual attachment capacities can change during a node's lifecycle for various reasons, such as:

- Manual or external operations attaching/detaching volumes outside of Kubernetes control.

- Dynamically attached network interfaces or specialized hardware (GPUs, NICs, etc.) consuming available slots.

- Multi-driver scenarios, where one CSI driver’s operations affect available capacity reported by another.

Static reporting can cause Kubernetes to schedule pods onto nodes that appear to have capacity but don't, leading to pods stuck in a ContainerCreating state.

Dynamically adapting CSI volume limits

With this new feature, Kubernetes enables CSI drivers to dynamically adjust and report node attachment capacities at runtime. This ensures that the scheduler, as well as other components relying on this information, have the most accurate, up-to-date view of node capacity.

How it works

Kubernetes supports two mechanisms for updating the reported node volume limits:

- Periodic Updates: CSI drivers specify an interval to periodically refresh the node's allocatable capacity.

- Reactive Updates: An immediate update triggered when a volume attachment fails due to exhausted resources (ResourceExhausted error).

Enabling the feature

To use this beta feature, the MutableCSINodeAllocatableCount feature gate must be enabled in these components:

- kube-apiserver

- kubelet

Example CSI driver configuration

Below is an example of configuring a CSI driver to enable periodic updates every 60 seconds:

```yaml
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: example.csi.k8s.io
spec:
  nodeAllocatableUpdatePeriodSeconds: 60
```

This configuration directs the kubelet to call the CSI driver's NodeGetInfo method every 60 seconds, updating the node’s allocatable volume count. Kubernetes enforces a minimum update interval of 10 seconds to balance accuracy and resource usage.

Immediate updates on attachment failures

When a volume attachment operation fails due to a ResourceExhausted error (gRPC code 8), Kubernetes immediately updates the allocatable count instead of waiting for the next periodic update. The kubelet then marks the affected pods as Failed, enabling their controllers to recreate them. This prevents pods from getting permanently stuck in the ContainerCreating state.
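The two triggers can be summarized in a few lines. This Python sketch is a hypothetical model of the decision, not kubelet code: it treats periods below the 10-second minimum as an error (an assumption about how the minimum is enforced) and refreshes either when the period has elapsed or immediately after an attach failure with gRPC code 8 (ResourceExhausted).

```python
from typing import Optional

RESOURCE_EXHAUSTED = 8    # gRPC status code for ResourceExhausted
MIN_PERIOD_SECONDS = 10   # minimum allowed nodeAllocatableUpdatePeriodSeconds


def validate_period(seconds: int) -> int:
    """Reject update periods shorter than the enforced minimum."""
    if seconds < MIN_PERIOD_SECONDS:
        raise ValueError(
            f"nodeAllocatableUpdatePeriodSeconds must be at least {MIN_PERIOD_SECONDS}")
    return seconds


def should_refresh(now: float, last_refresh: float, period: int,
                   attach_error_code: Optional[int] = None) -> bool:
    """Decide whether to call NodeGetInfo again and update the allocatable count."""
    if attach_error_code == RESOURCE_EXHAUSTED:
        return True                       # reactive update: do not wait for the timer
    return now - last_refresh >= period   # periodic update
```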

Getting started

To enable this feature in your Kubernetes v1.34 cluster:

- Enable the MutableCSINodeAllocatableCount feature gate on the kube-apiserver and kubelet components.

- Update your CSI driver configuration by setting nodeAllocatableUpdatePeriodSeconds.

- Monitor and observe improvements in scheduling accuracy and pod placement reliability.

Next steps

This feature is currently in beta and the Kubernetes community welcomes your feedback. Test it, share your experiences, and help guide its evolution to GA stability.

Join discussions in the Kubernetes Storage Special Interest Group (SIG-Storage) to shape the future of Kubernetes storage capabilities.

Kubernetes v1.34: Use An Init Container To Define App Environment Variables

Kubernetes typically uses ConfigMaps and Secrets to set environment variables, which introduces additional API calls and complexity. For example, you need to separately manage the Pods of your workloads and their configurations, while ensuring orderly updates for both the configurations and the workload Pods.

Alternatively, you might be using a vendor-supplied container that requires environment variables (such as a license key or a one-time token), but you don’t want to hard-code them or mount volumes just to get the job done.

If that's the situation you are in, you now have a new (alpha) way to achieve that. Provided you have the EnvFiles feature gate enabled across your cluster, you can tell the kubelet to load a container's environment variables from a volume (the volume must be part of the Pod that the container belongs to). This feature gate allows you to load environment variables directly from a file in an emptyDir volume without actually mounting that file into the container.

It’s a simple yet elegant solution to some surprisingly common problems.

What’s this all about?

At its core, this feature allows you to point your container to a file, one generated by an initContainer, and have Kubernetes parse that file to set your environment variables. The file lives in an emptyDir volume (a temporary storage space that lasts as long as the Pod does), and your main container doesn’t need to mount the volume. The kubelet reads the file and injects these variables when the container starts.

How It Works

Here's a simple example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: envfile-demo  # hypothetical name; a Pod needs metadata.name (or generateName)
spec:
  initContainers:
  - name: generate-config
    image: busybox
    command: ['sh', '-c', 'echo "CONFIG_VAR=HELLO" > /config/config.env']
    volumeMounts:
    - name: config-volume
      mountPath: /config
  containers:
  - name: app-container
    image: gcr.io/distroless/static
    env:
    - name: CONFIG_VAR
      valueFrom:
        fileKeyRef:
          path: config.env
          volumeName: config-volume
          key: CONFIG_VAR
  volumes:
  - name: config-volume
    emptyDir: {}
```

Using this approach is a breeze. You define your environment variables in the Pod spec using the fileKeyRef field, which tells Kubernetes where to find the file and which key to pull. The file itself resembles standard .env syntax (think KEY=VALUE), and (for this alpha stage at least) you must ensure that it is written into an emptyDir volume. Other volume types aren't supported for this feature. At least one init container must mount that emptyDir volume (to write the file), but the main container doesn’t need to; it just gets the variables handed to it at startup.
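To make the fileKeyRef lookup concrete, the Python sketch below parses a simple KEY=VALUE file and returns the value for one key, roughly mirroring what the kubelet does with path and key. The parsing rules here (skip blank lines and # comments, split on the first =) are assumptions for illustration; the kubelet's actual parser may differ, for example in how it handles quoting.

```python
def parse_env_file(text: str) -> dict:
    """Parse KEY=VALUE lines; blank lines and # comments are ignored."""
    env = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"line {lineno}: expected KEY=VALUE, got {raw!r}")
        env[key.strip()] = value
    return env


def resolve_file_key_ref(volume_files: dict, path: str, key: str) -> str:
    """Look up `key` in the env file at `path` inside a volume (modeled as a dict)."""
    env = parse_env_file(volume_files[path])
    if key not in env:
        raise KeyError(f"{key} not found in {path}")
    return env[key]


# The init container in the example above writes CONFIG_VAR=HELLO to config.env.
config_volume = {"config.env": "CONFIG_VAR=HELLO\n"}
print(resolve_file_key_ref(config_volume, "config.env", "CONFIG_VAR"))  # HELLO
```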

A word on security

While this feature supports handling sensitive data such as keys or tokens, note that its implementation relies on emptyDir volumes mounted into the Pod. Operators with node filesystem access could therefore easily retrieve this sensitive data through Pod directory paths.

If storing sensitive data like keys or tokens using this feature, ensure your cluster security policies effectively protect nodes against unauthorized access to prevent exposure of confidential information.

Summary

This feature will eliminate a number of complex workarounds used today, simplifying app authoring and opening doors to more use cases. Kubernetes stays flexible and open for feedback. Tell us how you use this feature or what is missing.

Kubernetes v1.34: Snapshottable API server cache

For years, the Kubernetes community has been on a mission to improve the stability and performance predictability of the API server.

A major focus of this effort has been taming list requests, which have historically been a primary source of high memory usage and heavy load on the etcd datastore.

With each release, we've chipped away at the problem, and today, we're thrilled to announce the final major piece of this puzzle.

The snapshottable API server cache feature has graduated to Beta in Kubernetes v1.34, culminating a multi-release effort to allow virtually all read requests to be served directly from the API server's cache.

Evolving the cache for performance and stability

The path to the current state involved several key enhancements over recent releases that paved the way for today's announcement.

Consistent reads from cache (Beta in v1.31)

While the API server has long used a cache for performance, a key milestone was guaranteeing consistent reads of the latest data from it. This v1.31 enhancement allowed the watch cache to be used for strongly-consistent read requests for the first time, a huge win as it enabled filtered collections (e.g. "a list of pods bound to this node") to be safely served from the cache instead of etcd, dramatically reducing its load for common workloads.

Taming large responses with streaming (Beta in v1.33)

Another key improvement was tackling the problem of memory spikes when transmitting large responses. The streaming encoder, introduced in v1.33, allowed the API server to send list items one by one, rather than buffering the entire multi-gigabyte response in memory. This made the memory cost of sending a response predictable and minimal, regardless of its size.
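The difference between buffering and streaming is easy to see with a generator. This Python sketch is not the API server's encoder; it only illustrates the principle of emitting a JSON list one item at a time, so that memory use is roughly bounded by the largest item rather than by the whole response.

```python
import json
from typing import Iterable, Iterator


def stream_list(kind: str, resource_version: str, items: Iterable[dict]) -> Iterator[str]:
    """Yield a JSON list object in chunks instead of building one big string."""
    header = json.dumps({"kind": kind, "apiVersion": "v1",
                         "metadata": {"resourceVersion": resource_version}})
    yield header[:-1] + ',"items":['  # drop the closing brace to append the items array
    for i, item in enumerate(items):
        if i:
            yield ","
        yield json.dumps(item)  # only one item is encoded at a time
    yield "]}"


chunks = stream_list("PodList", "42", ({"metadata": {"name": f"pod-{n}"}} for n in range(3)))
body = "".join(chunks)  # a real server would write each chunk to the connection instead
print(json.loads(body)["items"][2])  # {'metadata': {'name': 'pod-2'}}
```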

The missing piece

Despite these huge improvements, a critical gap remained. Any request for a historical LIST—most commonly used for paginating through large result sets—still had to bypass the cache and query etcd directly. This meant that the cost of retrieving the data was still unpredictable and could put significant memory pressure on the API server.

Kubernetes 1.34: snapshots complete the picture

The snapshottable API server cache solves this final piece of the puzzle. This feature enhances the watch cache, enabling it to generate efficient, point-in-time snapshots of its state.

Here’s how it works: for each update, the cache creates a lightweight snapshot. These snapshots are "lazy copies," meaning they don't duplicate objects but simply store pointers, making them incredibly memory-efficient.

When a list request for a historical resourceVersion arrives, the API server now finds the corresponding snapshot and serves the response directly from its memory.

This closes the final major gap, allowing paginated requests to be served entirely from the cache.
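The idea can be modeled in a few lines. In this Python toy, every update produces a new snapshot that shares the stored objects with the previous one, and a list at a historical resourceVersion is served from the newest snapshot at or below that version. SnapshotCache is hypothetical; it copies the key map on each update, whereas a production implementation would use a copy-on-write structure so that each snapshot costs far less than a full copy.

```python
import bisect


class SnapshotCache:
    """Toy watch cache that keeps one snapshot per update, keyed by resourceVersion."""

    def __init__(self):
        self._rvs = []        # resourceVersions, strictly increasing
        self._snapshots = []  # snapshot taken right after each update
        self._state = {}

    def apply(self, rv: int, key: str, obj) -> None:
        """Apply an update (obj=None deletes) and record a snapshot at rv."""
        if self._rvs and rv <= self._rvs[-1]:
            raise ValueError("resourceVersion must increase")
        self._state = dict(self._state)  # new map; objects are shared, not copied
        if obj is None:
            self._state.pop(key, None)
        else:
            self._state[key] = obj
        self._rvs.append(rv)
        self._snapshots.append(self._state)

    def list_at(self, rv: int) -> list:
        """Serve a LIST as of resourceVersion rv from the matching snapshot."""
        i = bisect.bisect_right(self._rvs, rv) - 1
        if i < 0:
            raise LookupError(f"resourceVersion {rv} is older than the cache")
        snapshot = self._snapshots[i]
        return [snapshot[k] for k in sorted(snapshot)]


cache = SnapshotCache()
cache.apply(10, "pod-a", "a@10")
cache.apply(11, "pod-b", "b@11")
cache.apply(12, "pod-a", "a@12")
print(cache.list_at(11))  # ['a@10', 'b@11']
print(cache.list_at(12))  # ['a@12', 'b@11']
```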

A new era of API Server performance

With this final piece in place, the synergy of these three features ushers in a new era of API server predictability and performance:

- Get Data from Cache: Consistent reads and snapshottable cache work together to ensure nearly all read requests—whether for the latest data or a historical snapshot—are served from the API server's memory.

- Send data via stream: Streaming list responses ensure that sending this data to the client has a minimal and constant memory footprint.

The result is a system where the resource cost of read operations is almost fully predictable and much more resilient to spikes in request load.

This means dramatically reduced memory pressure, a lighter load on etcd, and a more stable, scalable, and reliable control plane for all Kubernetes clusters.

How to get started

With its graduation to Beta, the ListFromCacheSnapshot feature gate is enabled by default in Kubernetes v1.34. There are no actions required to start benefiting from these performance and stability improvements.

Acknowledgements

Special thanks for designing, implementing, and reviewing these critical features go to:

- Ahmad Zolfaghari (@ah8ad3)

- Ben Luddy (@benluddy) – Red Hat

- Chen Chen (@z1cheng) – Microsoft

- Davanum Srinivas (@dims) – Nvidia

- David Eads (@deads2k) – Red Hat

- Han Kang (@logicalhan) – CoreWeave

- haosdent (@haosdent) – Shopee

- Joe Betz (@jpbetz) – Google

- Jordan Liggitt (@liggitt) – Google

- Łukasz Szaszkiewicz (@p0lyn0mial) – Red Hat

- Maciej Borsz (@mborsz) – Google

- Madhav Jivrajani (@MadhavJivrajani) – UIUC

- Marek Siarkowicz (@serathius) – Google

- NKeert (@NKeert)

- Tim Bannister (@lmktfy)

- Wei Fu (@fuweid) – Microsoft

- Wojtek Tyczyński (@wojtek-t) – Google

...and many others in SIG API Machinery. This milestone is a testament to the community's dedication to building a more scalable and robust Kubernetes.

Path To Releasing Helm v4

The first Alpha for Helm v4 has been released. Now that Helm v4 development is in the home stretch, we wanted to share the details on what's happening and how the broader community can get involved.

Alpha Period

With the start of September, there is a freeze on new major features for Helm v4. This begins the Alpha phase, where API breaking changes will still happen, but the focus turns to stability and making sure the existing changes work as expected.

If you're a Helm user, during this period you can test out the current capabilities and provide feedback where things aren't working as expected. Just remember, this is alpha quality software and changes are still occurring.

For Helm SDK users, now is a good time to look at the API to see if there are any concerns with the design changes along with any impacts to your efforts.

The Alpha period runs through the month of September.

Beta Period

The beta period starts in October. At this point the focus is on stability in preparation for release. API breaking changes should be complete and the focus transitions to fixing any bugs to ensure there is a stable release.

Testers should file bugs as they encounter any issues.

Per release schedule policy, once the first beta version is available, the final 4.0.0 release date will be chosen and announced.

Release Candidates

At the end of October, the first release candidate will be created. This represents what we think will be released as Helm v4. If there are any major issues, they will be fixed and a new release candidate will be made.

Release

The release is planned for KubeCon + CloudNativeCon North America 2025 in mid-November, which is 6 years after the release of Helm v3 and 10 years after the creation of Helm. More details on the release will come.

Beyond linkerd-viz: Linkerd Metrics with OpenTelemetry

TL;DR

Linkerd, the enterprise-grade service mesh that minimizes overhead, now integrates with OpenTelemetry, often also simply called OTel. That’s pretty cool because it allows you to collect and export Linkerd’s metrics to your favorite observability tools. This integration improves your ability to monitor and troubleshoot applications effectively. Sounds interesting? Read on.

Before we dive into this topic, I want to be sure you have a basic understanding of Kubernetes. If you’re new to it, that’s ok! But I’d recommend exploring the official Kubernetes tutorials and/or experimenting with “Kind” (Kubernetes in Docker) with this simple guide.

Kubernetes v1.34: VolumeAttributesClass for Volume Modification GA

The VolumeAttributesClass API, which empowers users to dynamically modify volume attributes, has officially graduated to General Availability (GA) in Kubernetes v1.34. This marks a significant milestone, providing a robust and stable way to tune your persistent storage directly within Kubernetes.

What is VolumeAttributesClass?

At its core, VolumeAttributesClass is a cluster-scoped resource that defines a set of mutable parameters for a volume. Think of it as a "profile" for your storage, allowing cluster administrators to expose different quality-of-service (QoS) levels or performance tiers.

Users can then specify a volumeAttributesClassName in their PersistentVolumeClaim (PVC) to indicate which class of attributes they desire. The magic happens through the Container Storage Interface (CSI): when a PVC referencing a VolumeAttributesClass is updated, the associated CSI driver interacts with the underlying storage system to apply the specified changes to the volume.

This means you can now:

- Dynamically scale performance: Increase IOPS or throughput for a busy database, or reduce it for a less critical application.

- Optimize costs: Adjust attributes on the fly to match your current needs, avoiding over-provisioning.

- Simplify operations: Manage volume modifications directly within the Kubernetes API, rather than relying on external tools or manual processes.

What is new from Beta to GA

There are two major enhancements from beta.

Cancel support from infeasible errors

To improve resilience and user experience, the GA release introduces explicit cancel support when a requested volume modification becomes infeasible. If the underlying storage system or CSI driver indicates that the requested changes cannot be applied (e.g., due to invalid arguments), users can cancel the operation and revert the volume to its previous stable configuration, preventing the volume from being left in an inconsistent state.

Quota support based on scope

While VolumeAttributesClass doesn't add a new quota type, the Kubernetes control plane can be configured to enforce quotas on PersistentVolumeClaims that reference a specific VolumeAttributesClass.

This is achieved by using the scopeSelector field in a ResourceQuota to target PVCs that have .spec.volumeAttributesClassName set to a particular VolumeAttributesClass name. Please see more details here.

Drivers support VolumeAttributesClass

- Amazon EBS CSI Driver: The AWS EBS CSI driver has robust support for VolumeAttributesClass and allows you to modify parameters like volume type (e.g., gp2 to gp3, io1 to io2), IOPS, and throughput of EBS volumes dynamically.

- Google Compute Engine (GCE) Persistent Disk CSI Driver (pd.csi.storage.gke.io): This driver also supports dynamic modification of persistent disk attributes, including IOPS and throughput, via VolumeAttributesClass.

Contact

For any inquiries or specific questions related to VolumeAttributesClass, please reach out to the SIG Storage community.

CoreDNS-1.12.4 Release

Kubernetes v1.34: Pod Replacement Policy for Jobs Goes GA

In Kubernetes v1.34, the Pod replacement policy feature has reached general availability (GA). This blog post describes the Pod replacement policy feature and how to use it in your Jobs.

About Pod Replacement Policy

By default, the Job controller immediately recreates Pods as soon as they fail or begin terminating (when they have a deletion timestamp).

As a result, while some Pods are terminating, the total number of running Pods for a Job can temporarily exceed the specified parallelism. For Indexed Jobs, this can even mean multiple Pods running for the same index at the same time.

This behavior works fine for many workloads, but it can cause problems in certain cases.

For example, popular machine learning frameworks like TensorFlow and JAX expect exactly one Pod per worker index. If two Pods run at the same time, you might encounter errors such as:

/job:worker/task:4: Duplicate task registration with task_name=/job:worker/replica:0/task:4

Additionally, starting replacement Pods before the old ones fully terminate can lead to:

- Scheduling delays by kube-scheduler as the nodes remain occupied.

- Unnecessary cluster scale-ups to accommodate the replacement Pods.

- Temporary bypassing of quota checks by workload orchestrators like Kueue.

With Pod replacement policy, Kubernetes gives you control over when the control plane replaces terminating Pods, helping you avoid these issues.

How Pod Replacement Policy works

This enhancement means that Jobs in Kubernetes have an optional field .spec.podReplacementPolicy.

You can choose one of two policies:

- TerminatingOrFailed (default): Replaces Pods as soon as they start terminating.

- Failed: Replaces Pods only after they fully terminate and transition to the Failed phase.

Setting the policy to Failed ensures that a new Pod is only created after the previous one has completely terminated.

For Jobs with a Pod Failure Policy, the default podReplacementPolicy is Failed, and no other value is allowed.

See Pod Failure Policy to learn more about Pod Failure Policies for Jobs.

You can check how many Pods are currently terminating by inspecting the Job’s .status.terminating field:

```shell
kubectl get job myjob -o=jsonpath='{.status.terminating}'
```
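The effect of the two policies on how many replacements get created can be sketched as follows. This Python function is a hypothetical simplification of the Job controller's bookkeeping: it only looks at parallelism and ignores completions, backoff, and indexes. Under Failed, terminating Pods keep occupying their slot; under TerminatingOrFailed, they do not.

```python
def replacements_needed(policy: str, parallelism: int, running: int, terminating: int) -> int:
    """Number of new Pods to create right now (simplified model)."""
    if policy not in ("TerminatingOrFailed", "Failed"):
        raise ValueError(f"unknown podReplacementPolicy: {policy}")
    occupied = running
    if policy == "Failed":
        occupied += terminating  # wait until terminating Pods reach the Failed phase
    return max(0, parallelism - occupied)


# One of two Pods is terminating, as in the example that follows.
print(replacements_needed("TerminatingOrFailed", 2, running=1, terminating=1))  # 1
print(replacements_needed("Failed", 2, running=1, terminating=1))               # 0
print(replacements_needed("Failed", 2, running=1, terminating=0))               # 1
```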

Example

Here’s a Job example that executes a task two times (spec.completions: 2) in parallel (spec.parallelism: 2) and replaces Pods only after they fully terminate (spec.podReplacementPolicy: Failed):

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: example-job
spec:
  completions: 2
  parallelism: 2
  podReplacementPolicy: Failed
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: worker
        image: your-image
```

If a Pod receives a SIGTERM signal (deletion, eviction, preemption...), it begins terminating. When the container handles termination gracefully, cleanup may take some time.

When the Job starts, we will see two Pods running:

```
$ kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
example-job-qr8kf   1/1     Running   0          2s
example-job-stvb4   1/1     Running   0          2s
```

Let's delete one of the Pods (example-job-qr8kf).

With the TerminatingOrFailed policy, as soon as one Pod (example-job-qr8kf) starts terminating, the Job controller immediately creates a new Pod (example-job-b59zk) to replace it.

```
$ kubectl get pods
NAME                READY   STATUS        RESTARTS   AGE
example-job-b59zk   1/1     Running       0          1s
example-job-qr8kf   1/1     Terminating   0          17s
example-job-stvb4   1/1     Running       0          17s
```

With the Failed policy, the new Pod (example-job-b59zk) is not created while the old Pod (example-job-qr8kf) is terminating.

```
$ kubectl get pods
NAME                READY   STATUS        RESTARTS   AGE
example-job-qr8kf   1/1     Terminating   0          17s
example-job-stvb4   1/1     Running       0          17s
```

When the terminating Pod has fully transitioned to the Failed phase, a new Pod is created:

```
$ kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
example-job-b59zk   1/1     Running   0          1s
example-job-stvb4   1/1     Running   0          25s
```

How can you learn more?

- Read the user-facing documentation for Pod Replacement Policy, Backoff Limit per Index, and Pod Failure Policy.

- Read the KEPs for Pod Replacement Policy, Backoff Limit per Index, and Pod Failure Policy.

Acknowledgments

As with any Kubernetes feature, multiple people contributed to getting this done, from testing and filing bugs to reviewing code.

As this feature moves to stable after 2 years, we would like to thank the following people:

- Kevin Hannon - for writing the KEP and the initial implementation.

- Michał Woźniak - for guidance, mentorship, and reviews.

- Aldo Culquicondor - for guidance, mentorship, and reviews.

- Maciej Szulik - for guidance, mentorship, and reviews.

- Dejan Zele Pejchev - for taking over the feature and promoting it from Alpha through Beta to GA.

Get involved

This work was sponsored by the Kubernetes batch working group in close collaboration with the SIG Apps community.

If you are interested in working on new features in this space, we recommend subscribing to our Slack channel and attending the regular community meetings.

Linkerd Edge Release Roundup: September 2025

Welcome to the September 2025 Edge Release Roundup post, where we dive into the most recent edge releases to help keep everyone up to date on the latest and greatest! This post covers edge releases from August 2025.

How to give feedback

Edge releases are a snapshot of our current development work on main; by definition, they always have the most recent features but they may have incomplete features, features that end up getting rolled back later, or (like all software) even bugs. That said, edge releases are intended for production use, and go through a rigorous set of automated and manual tests before being released. Once released, we also document whether the release is recommended for broad use – and when needed, we go back and update the recommendations.

Kubernetes v1.34: PSI Metrics for Kubernetes Graduates to Beta

As Kubernetes clusters grow in size and complexity, understanding the health and performance of individual nodes becomes increasingly critical. We are excited to announce that as of Kubernetes v1.34, Pressure Stall Information (PSI) Metrics has graduated to Beta.

What is Pressure Stall Information (PSI)?

Pressure Stall Information (PSI) is a feature of the Linux kernel (version 4.20 and later) that provides a canonical way to quantify pressure on infrastructure resources, in terms of whether demand for a resource exceeds current supply. It moves beyond simple resource utilization metrics and instead measures the amount of time that tasks are stalled due to resource contention. This is a powerful way to identify and diagnose resource bottlenecks that can impact application performance.

PSI exposes metrics for CPU, memory, and I/O, categorized as either some or full pressure:

- some: The percentage of time that at least one task is stalled on a resource. This indicates some level of resource contention.

- full: The percentage of time that all non-idle tasks are stalled on a resource simultaneously. This indicates a more severe resource bottleneck.

PSI: 'Some' vs. 'Full' Pressure

These metrics are aggregated over 10-second, 1-minute, and 5-minute rolling windows, providing a comprehensive view of resource pressure over time.
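On a node, the kernel exposes these values as text, system-wide in /proc/pressure/cpu, /proc/pressure/memory and /proc/pressure/io, and per cgroup in files such as cpu.pressure under cgroup v2. Each line carries avg10, avg60 and avg300 percentages plus a cumulative total stall time in microseconds. A minimal Python parser for that format might look like this; the sample input is made up.

```python
def parse_psi(text: str) -> dict:
    """Parse PSI text such as the contents of /proc/pressure/memory."""
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()  # kind is "some" or "full"
        values = {}
        for field in fields:
            name, _, raw = field.partition("=")
            # avg10/avg60/avg300 are percentages; total is microseconds stalled
            values[name] = int(raw) if name == "total" else float(raw)
        result[kind] = values
    return result


sample = """\
some avg10=1.50 avg60=0.75 avg300=0.20 total=123456
full avg10=0.10 avg60=0.05 avg300=0.01 total=7890
"""
psi = parse_psi(sample)
print(psi["some"]["avg10"], psi["full"]["total"])  # 1.5 7890
```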

PSI metrics in Kubernetes

With the KubeletPSI feature gate enabled, the kubelet can now collect PSI metrics from the Linux kernel and expose them through two channels: the Summary API and the /metrics/cadvisor Prometheus endpoint. This allows you to monitor and alert on resource pressure at the node, pod, and container level.

The following new metrics are available in Prometheus exposition format via /metrics/cadvisor:

- container_pressure_cpu_stalled_seconds_total

- container_pressure_cpu_waiting_seconds_total

- container_pressure_memory_stalled_seconds_total

- container_pressure_memory_waiting_seconds_total

- container_pressure_io_stalled_seconds_total

- container_pressure_io_waiting_seconds_total
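These are cumulative counters, so their rate of change is usually what you want: the increase in stalled (or waiting) seconds divided by the elapsed wall-clock seconds gives the fraction of time spent under pressure, which is what PromQL's rate() computes over a window. A small Python helper for two raw samples could look like this; the function is hypothetical and its counter-reset handling is a simplification.

```python
def pressure_fraction(prev_value: float, prev_time: float,
                      cur_value: float, cur_time: float) -> float:
    """Fraction of time under pressure between two samples of a *_seconds_total counter."""
    elapsed = cur_time - prev_time
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    increase = cur_value - prev_value
    if increase < 0:
        increase = cur_value  # counter reset (e.g. container restart): count from zero
    return increase / elapsed


# 3 extra stalled seconds over a 60-second scrape window -> 5% of the time
print(pressure_fraction(10.0, 0.0, 13.0, 60.0))
```

In Prometheus itself you would typically write something like rate(container_pressure_memory_stalled_seconds_total[5m]) instead.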

These metrics, along with the data from the Summary API, provide a granular view of resource pressure, enabling you to pinpoint the source of performance issues and take corrective action. For example, you can use these metrics to:

- Identify memory leaks: A steadily increasing some pressure for memory can indicate a memory leak in an application.

- Optimize resource requests and limits: By understanding the resource pressure of your workloads, you can more accurately tune their resource requests and limits.

- Autoscale workloads: You can use PSI metrics to trigger autoscaling events, ensuring that your workloads have the resources they need to perform optimally.

How to enable PSI metrics

To enable PSI metrics in your Kubernetes cluster, you need to:

- Ensure your nodes are running a Linux kernel version 4.20 or later and are using cgroup v2.

- Enable the KubeletPSI feature gate on the kubelet.

Once enabled, you can start scraping the /metrics/cadvisor endpoint with your Prometheus-compatible monitoring solution or query the Summary API to collect and visualize the new PSI metrics. Note that PSI is a Linux-kernel feature, so these metrics are not available on Windows nodes. Your cluster can contain a mix of Linux and Windows nodes, and on the Windows nodes the kubelet does not expose PSI metrics.

What's next?

We are excited to bring PSI metrics to the Kubernetes community and look forward to your feedback. As this is a beta feature, we are actively working on improving and extending it towards a stable GA release. We encourage you to try it out and share your experiences with us.

To learn more about PSI metrics, check out the official Kubernetes documentation. You can also get involved in the conversation on the #sig-node Slack channel.

Kubernetes v1.34: Service Account Token Integration for Image Pulls Graduates to Beta

The Kubernetes community continues to advance security best practices by reducing reliance on long-lived credentials. Following the successful alpha release in Kubernetes v1.33, Service Account Token Integration for Kubelet Credential Providers has now graduated to beta in Kubernetes v1.34, bringing us closer to eliminating long-lived image pull secrets from Kubernetes clusters.

This enhancement allows credential providers to use workload-specific service account tokens to obtain registry credentials, providing a secure, ephemeral alternative to traditional image pull secrets.

What's new in beta?

The beta graduation brings several important changes that make the feature more robust and production-ready:

Required cacheType field

Breaking change from alpha: the cacheType field is required in the credential provider configuration when using service account tokens. This field is new in beta and must be specified to ensure proper caching behavior.

```yaml
# CAUTION: this is not a complete configuration example, just a reference for the 'tokenAttributes.cacheType' field.
tokenAttributes:
  serviceAccountTokenAudience: "my-registry-audience"
  cacheType: "ServiceAccount" # Required field in beta
  requireServiceAccount: true
```

Choose between two caching strategies:

- Token: Cache credentials per service account token (use when credential lifetime is tied to the token). This is useful when the credential provider transforms the service account token into registry credentials with the same lifetime as the token, or when registries support Kubernetes service account tokens directly. Note: the kubelet cannot send service account tokens directly to registries; credential provider plugins are needed to transform tokens into the username/password format expected by registries.

- ServiceAccount: Cache credentials per service account identity (use when credentials are valid for all pods using the same service account).
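To make the difference between the two strategies concrete, here is a minimal Python sketch of how a credential cache key could be derived for each cacheType. The names credential_cache_key and ServiceAccountRef are hypothetical, and this is not the kubelet's implementation; it only shows which inputs each strategy keys on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceAccountRef:
    """Identity of a ServiceAccount (illustrative type, not a kubelet type)."""
    namespace: str
    name: str
    uid: str


def credential_cache_key(cache_type, registry, sa, token):
    """Hypothetical cache key for registry credentials returned by a provider.

    "Token": credentials are reused only for the exact same token.
    "ServiceAccount": credentials are shared by every pod that runs as the same
    ServiceAccount identity (namespace, name and UID).
    """
    if cache_type == "Token":
        return (registry, token)
    if cache_type == "ServiceAccount":
        return (registry, sa.namespace, sa.name, sa.uid)
    raise ValueError(f"unsupported cacheType: {cache_type!r}")


sa = ServiceAccountRef("default", "registry-access-sa", "uid-1")
registry = "images.myregistry.io"

# Two pods running as the same ServiceAccount, each with its own token:
shared = credential_cache_key("ServiceAccount", registry, sa, "token-a")
print(shared == credential_cache_key("ServiceAccount", registry, sa, "token-b"))  # True
print(credential_cache_key("Token", registry, sa, "token-a")
      == credential_cache_key("Token", registry, sa, "token-b"))  # False
```

With "ServiceAccount", a new token for the same identity hits the cache; with "Token", every new token needs a fresh exchange with the provider.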

Isolated image pull credentials

The beta release provides stronger security isolation for container images when using service account tokens for image pulls. It ensures that pods can only access images that were pulled using ServiceAccounts they're authorized to use. This prevents unauthorized access to sensitive container images and enables granular access control where different workloads can have different registry permissions based on their ServiceAccount.

When credential providers use service account tokens, the system tracks ServiceAccount identity (namespace, name, and UID) for each pulled image. When a pod attempts to use a cached image, the system verifies that the pod's ServiceAccount exactly matches the ServiceAccount that was originally used to pull the image.

Administrators can revoke access to previously pulled images by deleting and recreating the ServiceAccount, which changes the UID and invalidates cached image access.

For more details about this capability, see the image pull credential verification documentation.

How it works

Configuration

Credential providers opt into using ServiceAccount tokens by configuring the tokenAttributes field:

```yaml
#
# CAUTION: this is an example configuration.
# Do not use this for your own cluster!
#
apiVersion: kubelet.config.k8s.io/v1
kind: CredentialProviderConfig
providers:
- name: my-credential-provider
  matchImages:
  - "*.myregistry.io/*"
  defaultCacheDuration: "10m"
  apiVersion: credentialprovider.kubelet.k8s.io/v1
  tokenAttributes:
    serviceAccountTokenAudience: "my-registry-audience"
    cacheType: "ServiceAccount" # New in beta
    requireServiceAccount: true
    requiredServiceAccountAnnotationKeys:
    - "myregistry.io/identity-id"
    optionalServiceAccountAnnotationKeys:
    - "myregistry.io/optional-annotation"
```

Image pull flow

At a high level, kubelet coordinates with your credential provider and the container runtime as follows:

- When the image is not present locally:
  - kubelet checks its credential cache using the configured cacheType (Token or ServiceAccount)
  - If needed, kubelet requests a ServiceAccount token for the pod's ServiceAccount and passes it, plus any required annotations, to the credential provider
  - The provider exchanges that token for registry credentials and returns them to kubelet
  - kubelet caches credentials per the cacheType strategy and pulls the image with those credentials
  - kubelet records the ServiceAccount coordinates (namespace, name, UID) associated with the pulled image for later authorization checks

- When the image is already present locally:
  - kubelet verifies the pod's ServiceAccount coordinates match the coordinates recorded for the cached image
  - If they match exactly, the cached image can be used without pulling from the registry
  - If they differ, kubelet performs a fresh pull using credentials for the new ServiceAccount

- With image pull credential verification enabled:
  - Authorization is enforced using the recorded ServiceAccount coordinates, ensuring pods only use images pulled by a ServiceAccount they are authorized to use
  - Administrators can revoke access by deleting and recreating a ServiceAccount; the UID changes and previously recorded authorization no longer matches
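The authorization check for cached images can be modeled in a few lines. This Python sketch is a simplified, hypothetical model (ImageRecords and SACoords are illustrative names, not kubelet types): it records the ServiceAccount coordinates for each pulled image and decides whether a pod may reuse the image or needs a fresh pull. It treats "image not present" and "no matching record" the same way, since both lead to a pull in the flow above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SACoords:
    """ServiceAccount coordinates recorded for a pulled image (illustrative)."""
    namespace: str
    name: str
    uid: str


@dataclass
class ImageRecords:
    """Hypothetical record of which ServiceAccounts pulled each image."""
    pulled_by: dict = field(default_factory=dict)  # image -> set of SACoords

    def decide(self, image, pod_sa):
        """Return "use-cached" if this exact ServiceAccount pulled the image, else "pull"."""
        if pod_sa in self.pulled_by.get(image, set()):
            return "use-cached"
        return "pull"

    def record_pull(self, image, pod_sa):
        """Remember that pod_sa's credentials were used to pull image."""
        self.pulled_by.setdefault(image, set()).add(pod_sa)


image = "images.myregistry.io/my-app:1.0"
original = SACoords("default", "registry-access-sa", "uid-1")
records = ImageRecords()

print(records.decide(image, original))   # pull (image not present yet)
records.record_pull(image, original)
print(records.decide(image, original))   # use-cached

# Deleting and recreating the ServiceAccount changes its UID:
recreated = SACoords("default", "registry-access-sa", "uid-2")
print(records.decide(image, recreated))  # pull
```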

Audience restriction

The beta release builds on service account node audience restriction (beta since v1.33) to ensure kubelet can only request tokens for authorized audiences.

Administrators configure allowed audiences using RBAC to enable kubelet to request service account tokens for image pulls:

```yaml
#
# CAUTION: this is an example configuration.
# Do not use this for your own cluster!
#
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kubelet-credential-provider-audiences
rules:
- verbs: ["request-serviceaccounts-token-audience"]
  apiGroups: [""]
  resources: ["my-registry-audience"]
  resourceNames: ["registry-access-sa"] # Optional: specific SA
```
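As a rough illustration of what a rule like the one above grants, the following Python sketch evaluates a single rule for a token audience request. It is a hypothetical simplification of RBAC (no wildcards, no role aggregation, no other authorizers), intended only to show how the audience maps to resources and the ServiceAccount name maps to resourceNames.

```python
def rule_allows(rule, audience, sa_name):
    """Simplified check: does one RBAC rule let kubelet request a token for
    `audience` on behalf of ServiceAccount `sa_name`? (Hypothetical helper.)"""
    if "request-serviceaccounts-token-audience" not in rule["verbs"]:
        return False
    if "" not in rule["apiGroups"]:
        return False
    if audience not in rule["resources"]:
        return False
    # An empty or missing resourceNames list means "any ServiceAccount".
    names = rule.get("resourceNames") or []
    return not names or sa_name in names


rule = {
    "verbs": ["request-serviceaccounts-token-audience"],
    "apiGroups": [""],
    "resources": ["my-registry-audience"],
    "resourceNames": ["registry-access-sa"],
}

print(rule_allows(rule, "my-registry-audience", "registry-access-sa"))  # True
print(rule_allows(rule, "my-registry-audience", "other-sa"))            # False
print(rule_allows(rule, "another-audience", "registry-access-sa"))      # False
```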

Getting started with beta

Prerequisites

- Kubernetes v1.34 or later

- Feature gate enabled: KubeletServiceAccountTokenForCredentialProviders=true (beta, enabled by default)

- Credential provider support: Update your credential provider to handle ServiceAccount tokens

Migration from alpha

If you're already using the alpha version, the migration to beta requires minimal changes:

- Add the cacheType field: Update your credential provider configuration to include the required cacheType field

- Review caching strategy: Choose between the Token and ServiceAccount cache types based on your provider's behavior

- Test audience restrictions: Ensure your RBAC configuration, or other cluster authorization rules, will properly restrict token audiences

Example setup

Here's a complete example for setting up a credential provider with service account tokens (this example assumes your cluster uses RBAC authorization):

```yaml
#
# CAUTION: this is an example configuration.
# Do not use this for your own cluster!
#
# Service Account with registry annotations
apiVersion: v1
kind: ServiceAccount
metadata:
  name: registry-access-sa
  namespace: default
  annotations:
    myregistry.io/identity-id: "user123"
---
# RBAC for audience restriction
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: registry-audience-access
rules:
- verbs: ["request-serviceaccounts-token-audience"]
  apiGroups: [""]
  resources: ["my-registry-audience"]
  resourceNames: ["registry-access-sa"] # Optional: specific ServiceAccount
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-registry-audience
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: registry-audience-access
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
---
# Pod using the ServiceAccount
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  serviceAccountName: registry-access-sa
  containers:
  - name: my-app
    image: myregistry.example/my-app:latest
```

What's next?

For Kubernetes v1.35, we - Kubernetes SIG Auth - expect the feature to stay in beta, and we will continue to solicit feedback.

You can learn more about this feature on the service account token for image pulls page in the Kubernetes documentation.

You can also follow along on KEP-4412 to track progress across the coming Kubernetes releases.

Call to action

In this blog post, I have covered the beta graduation of ServiceAccount token integration for Kubelet Credential Providers in Kubernetes v1.34. I discussed the key improvements, including the required cacheType field and enhanced integration with Ensure Secret Pull Images.

We have been receiving positive feedback from the community during the alpha phase and would love to hear more as we stabilize this feature for GA. In particular, we would like feedback from credential provider implementors as they integrate with the new beta API and caching mechanisms. Please reach out to us on the #sig-auth-authenticators-dev channel on Kubernetes Slack.

How to get involved

If you are interested in getting involved in the development of this feature, sharing feedback, or participating in any other ongoing SIG Auth projects, please reach out on the #sig-auth channel on Kubernetes Slack.

You are also welcome to join the bi-weekly SIG Auth meetings, held every other Wednesday.

Kubernetes v1.34: Introducing CPU Manager Static Policy Option for Uncore Cache Alignment

A new CPU Manager Static Policy Option called prefer-align-cpus-by-uncorecache was introduced in Kubernetes v1.32 as an alpha feature, and has graduated to beta in Kubernetes v1.34.

This CPU Manager Policy Option is designed to optimize performance for specific workloads running on processors with a split uncore cache architecture.

In this article, I'll explain what that means and why it's useful.

Understanding the feature

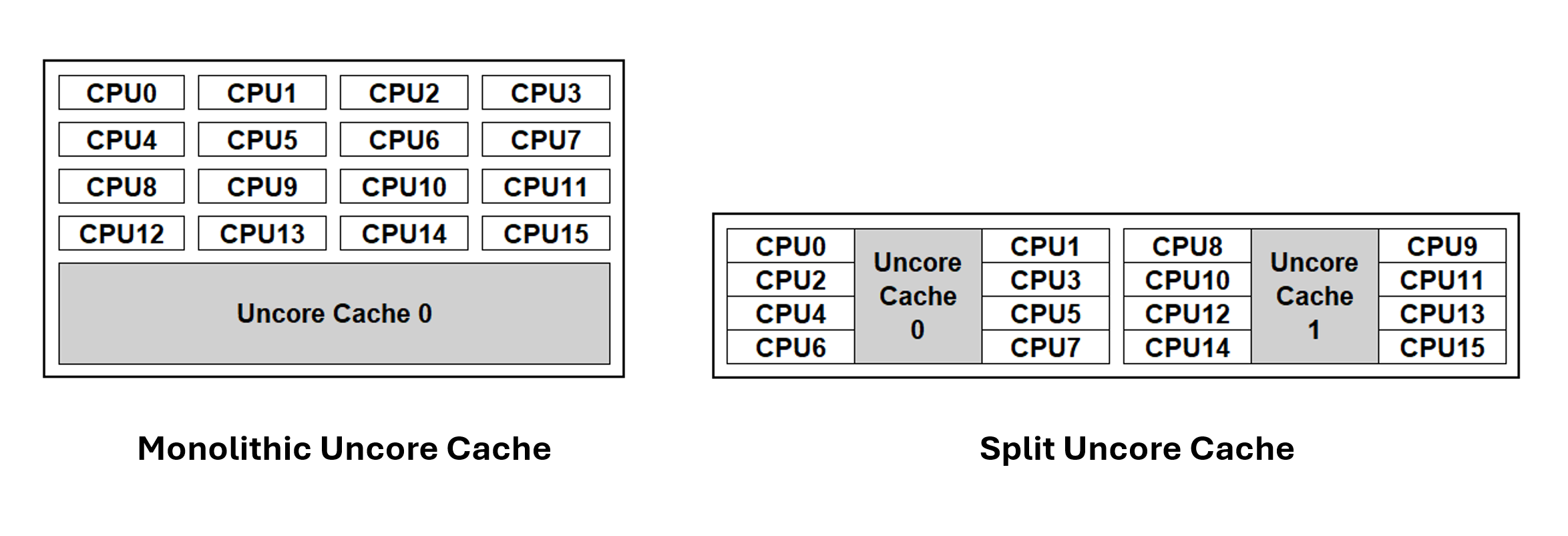

What is uncore cache?

Until relatively recently, nearly all mainstream computer processors had a monolithic last-level cache that was shared across every core in a multi-core CPU package. This monolithic cache is also referred to as uncore cache (because it is not linked to a specific core), or as Level 3 cache. In addition to the Level 3 cache, there are other caches, commonly called Level 1 and Level 2 cache, that are associated with a specific CPU core.

In order to reduce access latency between the CPU cores and their cache, recent AMD64 and ARM architecture based processors have introduced a split uncore cache architecture, where the last-level cache is divided into multiple physical caches that are aligned to specific CPU groupings within the physical package. The shorter distances within the CPU package help to reduce latency.

Kubernetes is able to place workloads in a way that accounts for the cache topology within the CPU package(s).

Cache-aware workload placement

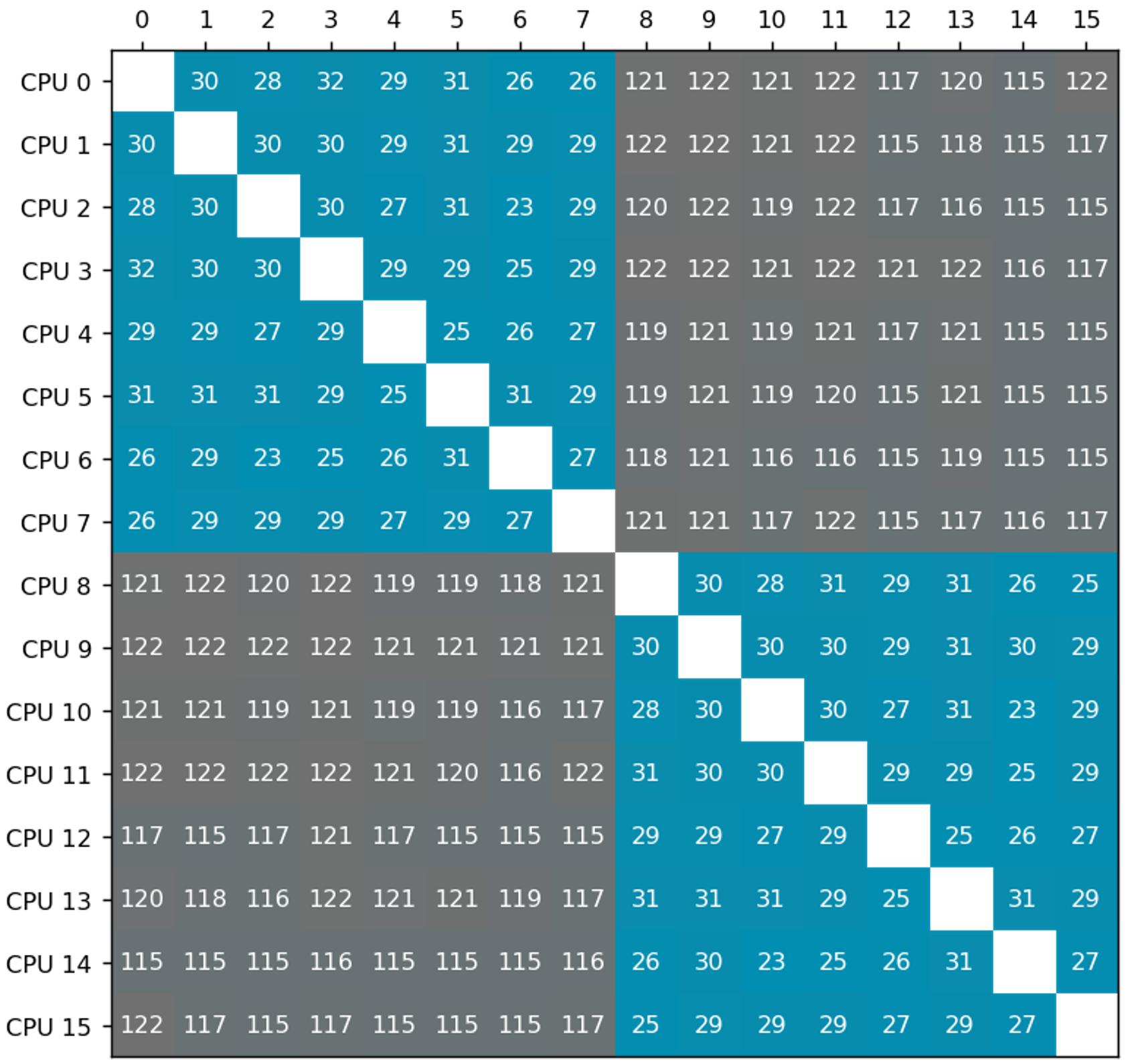

The matrix below shows the CPU-to-CPU latency, measured in nanoseconds (lower is better), on a processor that uses split uncore cache, when passing a packet between CPUs via its cache coherence protocol. In this example, the processor package consists of 2 uncore caches, and each uncore cache serves 8 CPU cores.

Blue entries in the matrix represent latency between CPUs sharing the same uncore cache, while grey entries indicate latency between CPUs corresponding to different uncore caches. Latency between CPUs that correspond to different caches is higher than the latency between CPUs that belong to the same cache.


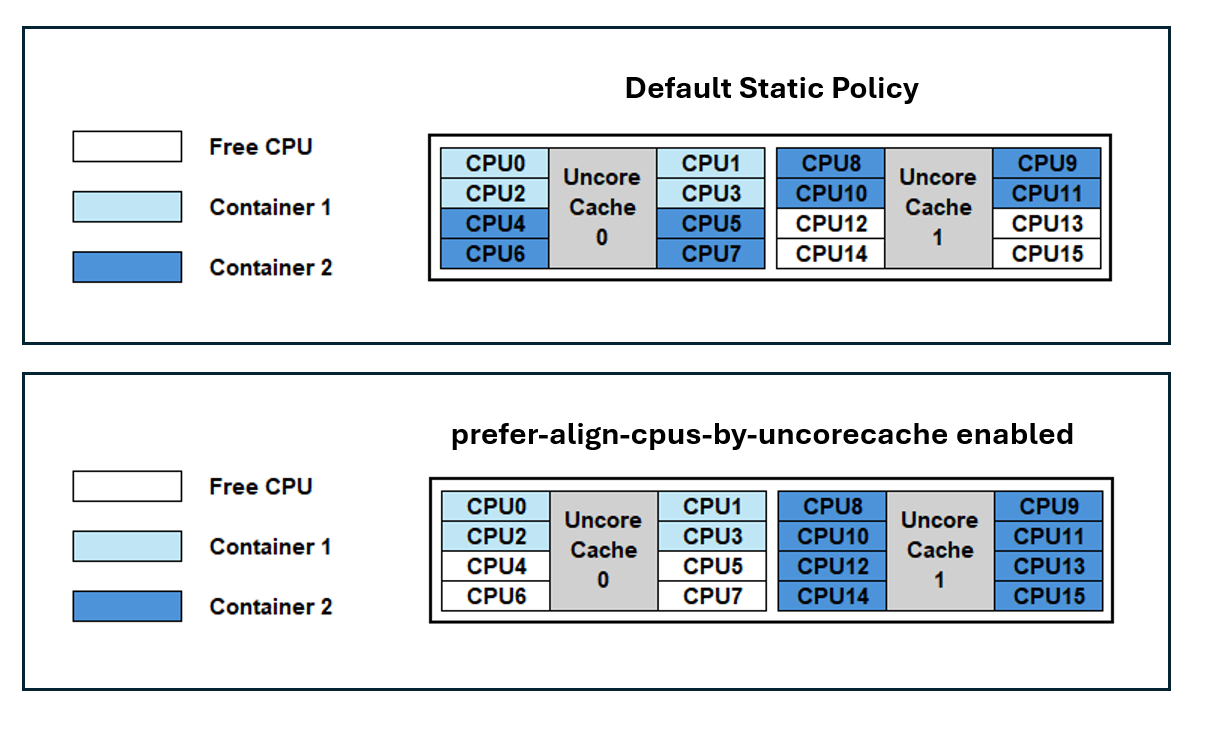

With prefer-align-cpus-by-uncorecache enabled, the static CPU Manager attempts to allocate CPU resources for a container such that all CPUs assigned to the container share the same uncore cache.

This policy operates on a best-effort basis, aiming to minimize the distribution of a container's CPU resources across uncore caches, based on the container's requirements and accounting for allocatable resources on the node.
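The best-effort idea can be sketched as a small placement routine. The Python below is a simplified model, not the kubelet's CPU Manager code: it first looks for a single uncore cache that can hold the whole request, and otherwise spreads the request over as few caches as possible by taking the caches with the most free CPUs first. Choosing the tightest-fitting single cache is an assumption of this sketch, and the cache and CPU IDs are hypothetical, mirroring the example of two uncore caches serving 8 CPUs each.

```python
def allocate_cpus(request, free_by_cache):
    """Pick `request` CPUs while touching as few uncore caches as possible.

    free_by_cache maps a (hypothetical) uncore cache ID to the free CPU IDs
    behind that cache. This is a simplified model, not the kubelet's algorithm.
    """
    # Best case: one cache can hold the whole request. Use the tightest fit so
    # larger free groups stay available for bigger containers.
    fitting = [c for c, cpus in free_by_cache.items() if len(cpus) >= request]
    if fitting:
        best = min(fitting, key=lambda c: (len(free_by_cache[c]), c))
        return sorted(free_by_cache[best])[:request]

    # Otherwise spill over, taking caches with the most free CPUs first; this
    # greedy order minimizes the number of caches the container spans.
    chosen = []
    for cache in sorted(free_by_cache, key=lambda c: (-len(free_by_cache[c]), c)):
        need = request - len(chosen)
        if need == 0:
            break
        chosen.extend(sorted(free_by_cache[cache])[:need])
    if len(chosen) < request:
        raise ValueError("not enough free CPUs on this node")
    return sorted(chosen)


# Two uncore caches with 8 CPUs each.
free = {0: list(range(0, 8)), 1: list(range(8, 16))}
print(allocate_cpus(4, free))   # [0, 1, 2, 3]
print(allocate_cpus(10, free))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

A request that fits inside one cache never straddles two; only requests larger than any single cache's free CPUs spill over, and then onto as few caches as possible.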

By running a workload, where possible, on a set of CPUs that use the smallest feasible number of uncore caches, applications benefit from reduced cache latency (as seen in the matrix above) and from reduced contention with other workloads, which can result in higher overall throughput. The benefit only appears if your nodes use a split uncore cache topology for their processors.

The following diagram illustrates uncore cache alignment when the feature is enabled.

By default, Kubernetes does not account for uncore cache topology; containers are assigned CPU resources using a packed methodology. As a result, Container 1 and Container 2 can experience a noisy neighbor impact due to cache access contention on Uncore Cache 0. Additionally, Container 2 will have CPUs distributed across both caches, which can introduce cross-cache latency.

With prefer-align-cpus-by-uncorecache enabled, each container is isolated on an individual cache. This resolves the cache contention between the containers and minimizes the cache latency for the CPUs being utilized.

Use cases

Common use cases include telco applications such as vRAN, Mobile Packet Core, and Firewalls. Note that the benefit of prefer-align-cpus-by-uncorecache depends on the workload. For example, applications that are memory bandwidth bound may not benefit from uncore cache alignment, because spreading across more uncore caches can increase the memory bandwidth available to them.

Enabling the feature

To enable this feature, set the CPU Manager Policy to static and enable the CPU Manager Policy Options with prefer-align-cpus-by-uncorecache.

For Kubernetes 1.34, the feature is in the beta stage and requires the CPUManagerPolicyBetaOptions feature gate to also be enabled.

Append the following to the kubelet configuration file:

```yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
featureGates:
  ...
  CPUManagerPolicyBetaOptions: true
cpuManagerPolicy: "static"
cpuManagerPolicyOptions:
  prefer-align-cpus-by-uncorecache: "true"
reservedSystemCPUs: "0"
...
```

If you're making this change to an existing node, remove the cpu_manager_state file and then restart kubelet.

prefer-align-cpus-by-uncorecache can also be enabled on nodes with a monolithic uncore cache processor. In that case, the feature mimics a best-effort socket alignment effect and packs CPU resources on the socket, similar to the default static CPU Manager policy.

Further reading

See Node Resource Managers to learn more about the CPU Manager and the available policies.

See the documentation for prefer-align-cpus-by-uncorecache.

Please see the Kubernetes Enhancement Proposal for more information on how prefer-align-cpus-by-uncorecache is implemented.

Getting involved

This feature is driven by SIG Node. If you are interested in helping develop this feature, sharing feedback, or participating in any other ongoing SIG Node projects, please attend the SIG Node meeting for more details.

Kubernetes v1.34: DRA has graduated to GA

Kubernetes 1.34 is here, and it brings a huge wave of enhancements for Dynamic Resource Allocation (DRA)! This release marks a major milestone with many APIs in the resource.k8s.io group graduating to General Availability (GA), unlocking the full potential of how you manage devices on Kubernetes. On top of that, several key features have moved to beta, and a fresh batch of new alpha features promises even more expressiveness and flexibility.

Let's dive into what's new for DRA in Kubernetes 1.34!

The core of DRA is now GA

The headline feature of the v1.34 release is that the core of DRA has graduated to General Availability.

Kubernetes Dynamic Resource Allocation (DRA) provides a flexible framework for managing specialized hardware and infrastructure resources, such as GPUs or FPGAs. DRA provides APIs that enable each workload to specify the properties of the devices it needs, while leaving it to the scheduler to allocate actual devices, allowing increased reliability and improved utilization of expensive hardware.

With the graduation to GA, DRA is stable and will be part of Kubernetes for the long run. The community can still expect a steady stream of new features being added to DRA over the next several Kubernetes releases, but there will be no breaking changes to DRA. Users and developers of DRA drivers can therefore adopt DRA with confidence.

Starting with Kubernetes 1.34, DRA is enabled by default; the DRA features that have reached beta are also enabled by default. That's because the default API version for DRA is now the stable v1 version, and not the earlier versions (for example, v1beta1 or v1beta2) that needed an explicit opt-in.

Features promoted to beta

Several powerful features have been promoted to beta, adding more control, flexibility, and observability to resource management with DRA.

Admin access labelling has been updated.

In v1.34, you can restrict device support to people (or software) authorized to use it. This is meant as a way to avoid privilege escalation if a DRA driver grants additional privileges when admin access is requested, and to avoid accessing devices which are in use by normal applications, potentially in another namespace.

The restriction works by ensuring that only users with access to a namespace with the resource.k8s.io/admin-access: "true" label are authorized to create ResourceClaim or ResourceClaimTemplate objects with the adminAccess field set to true. This ensures that non-admin users cannot misuse the feature.
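The admission rule described above boils down to a simple predicate. This Python sketch is hypothetical (the function name and the dict-based inputs are illustrative, not the Kubernetes API server's code): a claim that asks for adminAccess is only allowed in a namespace labelled resource.k8s.io/admin-access: "true", while ordinary claims are unaffected.

```python
ADMIN_ACCESS_LABEL = "resource.k8s.io/admin-access"


def admin_access_allowed(namespace_labels, device_requests):
    """Hypothetical predicate: may a ResourceClaim with these device requests
    be created in a namespace carrying these labels?"""
    wants_admin = any(req.get("adminAccess") is True for req in device_requests)
    # Ordinary claims are always fine; admin claims need the namespace label.
    return not wants_admin or namespace_labels.get(ADMIN_ACCESS_LABEL) == "true"


admin_ns = {ADMIN_ACCESS_LABEL: "true"}
regular_ns = {"team": "ml"}
admin_request = [{"name": "gpu", "adminAccess": True}]
normal_request = [{"name": "gpu"}]

print(admin_access_allowed(admin_ns, admin_request))     # True
print(admin_access_allowed(regular_ns, admin_request))   # False
print(admin_access_allowed(regular_ns, normal_request))  # True
```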

Prioritized list lets users specify a list of acceptable devices for their workloads, rather than just a single type of device. So while the workload might run best on a single high-performance GPU, it might also be able to run on 2 mid-level GPUs. The scheduler will attempt to satisfy the alternatives in the list in order, so the workload will be allocated the best set of devices available on the node.
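Conceptually, a prioritized list is an ordered set of alternatives where the first one that can be satisfied wins. The Python sketch below models that with plain tuples; the device class names and the pick_first_available helper are hypothetical, and the real scheduler evaluates full device selectors per node rather than simple counts.

```python
def pick_first_available(alternatives, free_devices):
    """Return the first (device_class, count) alternative the node can satisfy.

    alternatives: ordered list, most preferred first (hypothetical shape).
    free_devices: mapping of device class name -> number of free devices.
    Returns None when no alternative fits on this node.
    """
    for device_class, count in alternatives:
        if free_devices.get(device_class, 0) >= count:
            return device_class, count
    return None


# Prefer one high-performance GPU, but accept two mid-level GPUs.
preferences = [("high-perf-gpu", 1), ("mid-gpu", 2)]

print(pick_first_available(preferences, {"high-perf-gpu": 1, "mid-gpu": 4}))  # ('high-perf-gpu', 1)
print(pick_first_available(preferences, {"mid-gpu": 3}))                      # ('mid-gpu', 2)
print(pick_first_available(preferences, {"mid-gpu": 1}))                      # None
```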

The kubelet's API has been updated to report on Pod resources allocated through DRA. This allows node monitoring agents to know the allocated DRA resources for Pods on a node and makes it possible to use the DRA information in the PodResources API to develop new features and integrations.

New alpha features

Kubernetes 1.34 also introduces several new alpha features that give us a glimpse into the future of resource management with DRA.

Extended resource mapping support in DRA allows cluster administrators to advertise DRA-managed resources as extended resources, allowing developers to consume them using the familiar, simpler request syntax while still benefiting from dynamic allocation. This makes it possible for existing workloads to start using DRA without modifications, simplifying the transition to DRA for both application developers and cluster administrators.

Consumable capacity introduces a flexible device sharing model where multiple, independent resource claims from unrelated pods can each be allocated a share of the same underlying physical device. This new capability is managed through optional, administrator-defined sharing policies that govern how a device's total capacity is divided and enforced by the platform for each request. This allows for sharing of devices in scenarios where pre-defined partitions are not viable. A blog about this feature is coming soon.

Binding conditions improve scheduling reliability for certain classes of devices by allowing the Kubernetes scheduler to delay binding a pod to a node until its required external resources, such as attachable devices or FPGAs, are confirmed to be fully prepared. This prevents premature pod assignments that could lead to failures and ensures more robust, predictable scheduling by explicitly modeling resource readiness before the pod is committed to a node.

Resource health status for DRA improves observability by exposing the health status of devices allocated to a Pod via Pod Status. This works whether the device is allocated through DRA or Device Plugin. This makes it easier to understand the cause of an unhealthy device and respond properly. A blog about this feature is coming soon.

What’s next?

While DRA got promoted to GA this cycle, the hard work on DRA doesn't stop. There are several features in alpha and beta that we plan to bring to GA in the next couple of releases and we are looking to continue to improve performance, scalability and reliability of DRA. So expect an equally ambitious set of features in DRA for the 1.35 release.

Getting involved

A good starting point is joining the WG Device Management Slack channel and meetings, which happen at US/EU and EU/APAC friendly time slots.

Not all enhancement ideas are tracked as issues yet, so come talk to us if you want to help or have some ideas yourself! We have work to do at all levels, from difficult core changes to usability enhancements in kubectl, which could be picked up by newcomers.

Acknowledgments

A huge thanks to the new contributors to DRA this cycle:

- Alay Patel (alaypatel07)

- Gaurav Kumar Ghildiyal (gauravkghildiyal)

- JP (Jpsassine)

- Kobayashi Daisuke (KobayashiD27)

- Laura Lorenz (lauralorenz)

- Sunyanan Choochotkaew (sunya-ch)

- Swati Gupta (guptaNswati)

- Yu Liao (yliaog)

Kubernetes v1.34: Finer-Grained Control Over Container Restarts

With the release of Kubernetes 1.34, a new alpha feature is introduced that gives you more granular control over container restarts within a Pod. This feature, named Container Restart Policy and Rules, allows you to specify a restart policy for each container individually, overriding the Pod's global restart policy. In addition, it also allows you to conditionally restart individual containers based on their exit codes. This feature is available behind the alpha feature gate ContainerRestartRules.

This has been a long-requested feature. Let's dive into how it works and how you can use it.

The problem with a single restart policy

Before this feature, the restartPolicy was set at the Pod level. This meant that all containers in a Pod shared the same restart policy (Always, OnFailure, or Never). While this works for many use cases, it can be limiting in others.

For example, consider a Pod with a main application container and an init container that performs some initial setup. You might want the main container to always restart on failure, but the init container should only run once and never restart. With a single Pod-level restart policy, this wasn't possible.

Introducing per-container restart policies

With the new ContainerRestartRules feature gate, you can now specify a restartPolicy for each container in your Pod's spec. You can also define restartPolicyRules to control restarts based on exit codes. This gives you the fine-grained control you need to handle complex scenarios.

Use cases

Let's look at some real-life use cases where per-container restart policies can be beneficial.

In-place restarts for training jobs

In ML research, it's common to orchestrate a large number of long-running AI/ML training workloads. In these scenarios, workload failures are unavoidable. When a workload fails with a retriable exit code, you want the container to restart quickly without rescheduling the entire Pod, which consumes a significant amount of time and resources. Restarting the failed container "in-place" is critical for better utilization of compute resources. The container should only restart "in-place" if it failed due to a retriable error; otherwise, the container and Pod should terminate and possibly be rescheduled.

This can now be achieved with container-level restartPolicyRules. The workload can exit with different codes to represent retriable and non-retriable errors. With restartPolicyRules, the workload can be restarted in-place quickly, but only when the error is retriable.

Try-once init containers

Init containers are often used to perform initialization work for the main container, such as setting up environments and credentials. Sometimes, you want the main container to always be restarted, but you don't want to retry initialization if it fails.

With a container-level restartPolicy, this is now possible. The init container can be executed only once, and its failure is treated as a Pod failure. If the initialization succeeds, the main container can always be restarted.

Pods with multiple containers

For Pods that run multiple containers, you might have different restart requirements for each container. Some containers might have a clear definition of success and should only be restarted on failure. Others might need to be always restarted.

This is now possible with a container-level restartPolicy, allowing individual containers to have different restart policies.

How to use it

To use this new feature, you need to enable the ContainerRestartRules feature gate on your Kubernetes cluster control-plane and worker nodes running Kubernetes 1.34+. Once enabled, you can specify the restartPolicy and restartPolicyRules fields in your container definitions.

Here are some examples:

Example 1: Restarting on specific exit codes

In this example, the container should restart if and only if it fails with a retriable error, represented by exit code 42.

To achieve this, the container has restartPolicy: Never, and a restart policy rule that tells Kubernetes to restart the container in-place if it exits with code 42.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restart-on-exit-codes
  annotations:
    kubernetes.io/description: "This Pod restarts the container only when it exits with code 42."
spec:
  restartPolicy: Never
  containers:
  - name: restart-on-exit-codes
    image: docker.io/library/busybox:1.28
    command: ['sh', '-c', 'sleep 60 && exit 0']
    restartPolicy: Never # Container restart policy must be specified if rules are specified
    restartPolicyRules: # Only restart the container if it exits with code 42
    - action: Restart
      exitCodes:
        operator: In
        values: [42]
```
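To reason about which exits trigger a restart, here is a minimal Python model of the behavior described in this post. It assumes that rules are checked in order, that the first matching rule decides, and that the container-level restartPolicy applies when no rule matches. should_restart and effective_policy are hypothetical helpers, not kubelet functions, and the model ignores the special sidecar meaning of restartPolicy: Always on init containers.

```python
def effective_policy(pod_policy, container_policy=None):
    """A container-level restartPolicy, when set, overrides the Pod's."""
    return container_policy or pod_policy


def should_restart(exit_code, restart_policy, rules=()):
    """Decide whether a container that exited with exit_code is restarted in place.

    Assumed semantics: rules are checked in order, the first match decides,
    and restart_policy is the fallback when no rule matches.
    """
    for rule in rules:
        operator = rule["exitCodes"]["operator"]
        values = rule["exitCodes"]["values"]
        if operator == "In":
            matched = exit_code in values
        elif operator == "NotIn":
            matched = exit_code not in values
        else:
            raise ValueError(f"unknown operator: {operator!r}")
        if matched:
            return rule["action"] == "Restart"
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    return False  # "Never"


# Example 1: restart only on exit code 42.
rules = [{"action": "Restart", "exitCodes": {"operator": "In", "values": [42]}}]
print(should_restart(42, "Never", rules))  # True
print(should_restart(1, "Never", rules))   # False

# Example 2: the init container overrides the Pod's "Always" with "Never".
print(should_restart(1, effective_policy("Always", "Never")))  # False
print(should_restart(0, effective_policy("Always")))           # True
```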

Example 2: A try-once init container

In this example, a Pod should always be restarted once the initialization succeeds. However, the initialization should only be tried once.

To achieve this, the Pod has an Always restart policy. The init-once init container will only try once. If it fails, the Pod will fail. This allows the Pod to fail if the initialization failed, but also keep running once the initialization succeeds.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: fail-pod-if-init-fails
  annotations:
    kubernetes.io/description: "This Pod has an init container that runs only once. After initialization succeeds, the main container will always be restarted."
spec:
  restartPolicy: Always
  initContainers:
  - name: init-once # This init container will only try once. If it fails, the Pod will fail.
    image: docker.io/library/busybox:1.28
    command: ['sh', '-c', 'echo "Failing initialization" && sleep 10 && exit 1']
    restartPolicy: Never
  containers:
  - name: main-container # This container will always be restarted once initialization succeeds.
    image: docker.io/library/busybox:1.28
    command: ['sh', '-c', 'sleep 1800 && exit 0']
```

Example 3: Containers with different restart policies

In this example, there are two containers with different restart requirements. One should always be restarted, while the other should only be restarted on failure.

This is achieved by using a different container-level restartPolicy on each of the two containers.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: on-failure-pod
  annotations:
    kubernetes.io/description: "This Pod has two containers with different restart policies."
spec:
  containers:
  - name: restart-on-failure
    image: docker.io/library/busybox:1.28
    command: ['sh', '-c', 'echo "Not restarting after success" && sleep 10 && exit 0']
    restartPolicy: OnFailure
  - name: restart-always
    image: docker.io/library/busybox:1.28
    command: ['sh', '-c', 'echo "Always restarting" && sleep 1800 && exit 0']
    restartPolicy: Always
```

Learn more

- Read the documentation for container restart policy.

- Read the KEP for the Container Restart Rules

Roadmap

More actions and signals to restart Pods and containers are coming! Notably, there are plans to add support for restarting the entire Pod. Planning and discussions on these features are in progress. Feel free to share feedback or requests with the SIG Node community!

Your feedback is welcome!

This is an alpha feature, and the Kubernetes project would love to hear your feedback. Please try it out. This feature is driven by SIG Node. If you are interested in helping develop this feature, sharing feedback, or participating in any other ongoing SIG Node projects, please reach out to the SIG Node community!

You can reach SIG Node by several means:

Kubernetes v1.34: User preferences (kuberc) are available for testing in kubectl 1.34

Have you ever wished you could enable interactive delete, by default, in kubectl? Or maybe you'd like to have custom aliases defined, but not necessarily generate hundreds of them manually?

Look no further. SIG-CLI has been working hard to add user preferences to kubectl, and we are happy to announce that this functionality is reaching beta as part of the Kubernetes v1.34 release.

How it works

A full description of this functionality is available in our official documentation, but this blog post will answer both of the questions from the beginning of this article.

Before we dive into details, let's quickly cover what the user preferences file looks like and where to place it. By default, kubectl looks for a kuberc file in your default kubeconfig directory, which is $HOME/.kube. Alternatively, you can specify this location using the --kuberc option or the KUBERC environment variable.
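The lookup order can be summarized in a short Python sketch. It assumes that an explicit --kuberc flag wins over the KUBERC environment variable, which in turn wins over the default $HOME/.kube/kuberc; kuberc_path is a hypothetical helper for illustration, not part of kubectl.

```python
import os


def kuberc_path(flag_value=None, env=None, home=None):
    """Hypothetical resolution order for the kuberc file.

    Assumes: --kuberc flag > KUBERC environment variable > $HOME/.kube/kuberc.
    """
    env = os.environ if env is None else env
    if flag_value:
        return flag_value
    if env.get("KUBERC"):
        return env["KUBERC"]
    home = home or os.path.expanduser("~")
    return os.path.join(home, ".kube", "kuberc")


print(kuberc_path(env={}, home="/home/alice"))                    # /home/alice/.kube/kuberc
print(kuberc_path(env={"KUBERC": "/etc/kuberc"}))                 # /etc/kuberc
print(kuberc_path("/tmp/kuberc", env={"KUBERC": "/etc/kuberc"}))  # /tmp/kuberc
```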

Just like every Kubernetes manifest, a kuberc file starts with an apiVersion and kind:

```yaml
apiVersion: kubectl.config.k8s.io/v1beta1
kind: Preference
# the user preferences will follow here
```

Defaults

Let's start by setting default values for kubectl command options. Our goal is to always use interactive delete, which means we want the --interactive option for kubectl delete to always be set to true. This can be achieved with the following addition to our kuberc file:

```yaml
defaults:
- command: delete
  options:
  - name: interactive
    default: "true"
```

In the above example, I'm introducing the defaults section, which allows users to define default values for kubectl options. In this case, we're setting the interactive option for kubectl delete to be true by default. This default can be overridden if a user explicitly provides a different value, such as kubectl delete --interactive=false, in which case the explicit option takes precedence.

Another default that SIG-CLI highly encourages is Server-Side Apply. To enable it by default, add the following snippet to your preferences:

```yaml
# continuing defaults section
- command: apply
  options:
  - name: server-side
    default: "true"
```
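The precedence rule for defaults (explicit flags beat kuberc defaults) can be modeled as a simple merge. The Python sketch is hypothetical: apply_defaults is not a kubectl function, and the defaults list simply mirrors the two YAML snippets above as Python dicts.

```python
def apply_defaults(command, explicit_options, defaults):
    """Merge kuberc defaults for `command` with options typed on the command line.

    Hypothetical helper: explicit options always take precedence.
    """
    merged = {}
    for entry in defaults:
        if entry["command"] == command:
            for option in entry["options"]:
                merged[option["name"]] = option["default"]
    merged.update(explicit_options)  # explicit flags win
    return merged


# Mirrors the two defaults snippets above.
defaults = [
    {"command": "delete", "options": [{"name": "interactive", "default": "true"}]},
    {"command": "apply", "options": [{"name": "server-side", "default": "true"}]},
]

print(apply_defaults("delete", {}, defaults))                        # {'interactive': 'true'}
print(apply_defaults("delete", {"interactive": "false"}, defaults))  # {'interactive': 'false'}
print(apply_defaults("get", {}, defaults))                           # {}
```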

Aliases

The ability to define aliases allows us to save precious seconds when typing commands. I bet that you most likely have one defined for kubectl, because typing seven letters is definitely longer than just pressing k.

For this reason, the ability to define aliases was a must-have when we decided to implement user preferences, alongside defaulting. To define an alias for any of the built-in commands, expand your kuberc file with the following addition:

```yaml
aliases:
- name: gns
  command: get
  prependArgs:
  - namespace
  options:
  - name: output
    default: json
```

There's a lot going on above, so let me break this down. First, we're introducing a new section: aliases. Here, we're defining a new alias gns, which is mapped to the get command. Next, we're defining arguments (the namespace resource) that will be inserted right after the command name. Additionally, we're setting the --output=json option for this alias. The structure of the options block is identical to the one in the defaults section.

You probably noticed that we've introduced a mechanism for prepending arguments, and you might wonder if there is a complementary setting for appending them (in other words, adding to the end of the command, after user-provided arguments). This can be achieved through the appendArgs block, which is presented below:

```yaml
# continuing aliases section
- name: runx
  command: run
  options:
  - name: image
    default: busybox
  - name: namespace
    default: test-ns
  appendArgs:
  - --
  - custom-arg
```

Here, we're introducing another alias, runx, which invokes the kubectl run command, passing the --image and --namespace options with predefined values, and also appending -- and custom-arg at the end of the invocation.
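Putting both aliases into one file, the result might look like the sketch below. The comments describe how each alias should expand, based on the explanation above. The pod name my-pod is a hypothetical placeholder, and the exact ordering of flags in the effective command may differ.

```yaml
apiVersion: kubectl.config.k8s.io/v1beta1
kind: Preference
aliases:
  # kubectl gns
  #   behaves like: kubectl get namespace --output=json
  - name: gns
    command: get
    prependArgs:
      - namespace
    options:
      - name: output
        default: json
  # kubectl runx my-pod
  #   behaves roughly like:
  #   kubectl run my-pod --image=busybox --namespace=test-ns -- custom-arg
  - name: runx
    command: run
    options:
      - name: image
        default: busybox
      - name: namespace
        default: test-ns
    appendArgs:
      - --
      - custom-arg
```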

Debugging

We hope that kubectl user preferences will open up new possibilities for our users.

Whenever you're in doubt, feel free to run kubectl with increased verbosity. At -v=5, you should get all the possible debugging information from this feature, which will be crucial when reporting issues.

To learn more, I encourage you to read through our official documentation and the actual proposal.

Get involved

The kubectl user preferences feature has reached beta, and we are very interested in your feedback. We'd love to hear what you like about it and what problems you'd like to see it solve. Feel free to join the SIG CLI Slack channel, or open an issue against the kubectl repository. You can also join us at our community meetings, which happen every other Wednesday, and share your stories with us.

Metal3.io becomes a CNCF incubating project

The CNCF Technical Oversight Committee (TOC) has voted to accept Metal3.io as a CNCF incubating project. Metal3.io joins a growing ecosystem of technologies tackling real-world challenges at the edge of cloud native infrastructure.

What is Metal3.io?

The Metal3.io project (pronounced: “Metal Kubed”) provides components for bare metal host management with Kubernetes. You can enroll your bare metal machines, provision operating system images, and then, if you like, deploy Kubernetes clusters to them. From there, operating and upgrading your Kubernetes clusters can be handled by Metal3.io. Moreover, Metal3.io is itself a Kubernetes application, so it runs on Kubernetes and uses Kubernetes resources and APIs as its interface.
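To make the idea of Kubernetes resources as the interface more concrete, here is a minimal sketch of how a bare metal machine might be enrolled through the Baremetal Operator's BareMetalHost resource. The field names follow the metal3.io/v1alpha1 BareMetalHost API as we understand it. The host name, MAC address, BMC address, and credentials are all hypothetical placeholders.

```yaml
# BMC credentials referenced by the BareMetalHost below (placeholder values)
apiVersion: v1
kind: Secret
metadata:
  name: worker-0-bmc-secret
type: Opaque
stringData:
  username: admin
  password: changeme
---
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: worker-0
spec:
  # Power the machine on once it has been enrolled
  online: true
  # MAC address of the NIC the host boots from (placeholder)
  bootMACAddress: "00:5c:52:31:3a:9c"
  bmc:
    # Address of the baseboard management controller (placeholder)
    address: ipmi://192.168.111.1:6230
    # Name of the Secret holding the BMC username and password
    credentialsName: worker-0-bmc-secret
```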

Metal3.io is also one of the providers for the Kubernetes subproject Cluster API. Cluster API provides infrastructure-agnostic Kubernetes lifecycle management, and Metal3.io brings the bare metal implementation.

Key Milestones and Ecosystem Growth

The project was started in 2019 by Red Hat and was quickly joined by Ericsson. Metal3.io then joined the CNCF sandbox in September 2020.

Metal3.io has steadily matured and grown during the sandbox phase, with:

- 57 active contributing organizations, led by Ericsson and Red Hat.

- An active community organizing weekly online meetings with working group updates, issue triaging, design discussions, etc.

- Organizations such as Fujitsu, Ikea, SUSE, Ericsson, and Red Hat among the growing list of adopters.

- New features and API iterations, including IP address management, node reuse, firmware settings, and update management both at provisioning time and on day 2, as well as remediation for the bare metal hosts.

- A new operator, called the Ironic Standalone Operator, has been introduced to replace the shell-based deployment method for Ironic.

- Added robust security processes, regular scans of dependencies, a vulnerability disclosure process, and automated dependency updates.

Integrations Across the Cloud Native Landscape

Metal3.io connects seamlessly with many CNCF projects, including:

- Kubernetes: Metal3.io builds on the success of Kubernetes and makes use of CustomResourceDefinitions

- Cluster API: Turn the bare metal servers into Kubernetes clusters

- Cert-manager: Certificates for webhooks, etc.

- Ironic: Handles the hardware for Metal3.io by interacting with baseboard management controllers

- Prometheus: Metal3.io exposes metrics in a format that Prometheus can scrape

Technical Components

- Baremetal Operator (BMO): Exposes parts of the Ironic API as a Kubernetes native API

- Cluster API Provider Metal³ (CAPM3): Provides integration with Cluster API

- IP Address Manager (IPAM): Handles IP addresses and pools

- Ironic Standalone Operator (IrSO): Makes it easy to deploy Ironic on Kubernetes

- Ironic-Image: Container image for Ironic

Community Highlights

- 1523 GitHub stars

- 8368 merged pull requests

- 1434 issues

- 186 contributors

- 187 releases

Maintainer Perspective

“As a maintainer of the Metal3.io project, I’m proud of its growth towards becoming one of the leading solutions for running Kubernetes on bare metal. I take pride in how it has evolved beyond provisioning bare metal only to support broader lifecycle needs, ensuring users can sustain and operate their bare metal deployments effectively. Equally rewarding has been seeing the community come together to establish strong processes and governance, positioning Metal3.io for CNCF incubation.”

—Kashif Khan, Maintainer, Metal3.io

“Metal3.io is a testament to the power of collaboration across open source communities. It marries the battle-tested hardware support of the Ironic project with the Kubernetes API paradigm, using a lightweight Kubernetes-native deployment model. I am delighted to see it begin incubation with CNCF. I have no doubt that the forum the Metal3.io project provides will continue to drive progress in integration between Kubernetes and bare metal.”

—Zane Bitter, Maintainer, Metal3.io

From the TOC

“Metal3.io addresses a critical need for cloud native infrastructure by making bare metal as manageable and Kubernetes-native as any other platform. The project’s steady growth, technical maturity, and strong integration with the Kubernetes ecosystem made it a clear choice for incubation. We’re excited to support Metal3.io as it continues to empower organizations deploying Kubernetes at the edge and beyond.”

— Ricardo Rocha, TOC Sponsor

Looking Ahead

Metal3.io’s roadmap for 2025 includes:

- New API revisions for CAPM3, BMO, and IPAM

- Maturing IPAM as a Cluster API IPAM provider

- Multi-tenancy support

- Support for architectures other than x86_64, such as ARM

- Improving DHCP-less provisioning

- Simplifying Ironic deployment with IrSO

As a CNCF-hosted project, Metal3.io is part of a neutral foundation aligned with its technical interests, as well as the larger Linux Foundation, which provides governance, marketing support, and community outreach. Metal3.io joins incubating technologies ArtifactHUB, Backstage, Buildpacks, Chaos Mesh, Cloud Custodian, Container Network Interface (CNI), Contour, Cortex, Crossplane, Dragonfly, Emissary-Ingress, Flatcar, gRPC, Karmada, Keptn, Keycloak, Knative, Kubeflow, Kubescape, KubeVela, KubeVirt, Kyverno, Litmus, Longhorn, NATS, Notary, OpenCost, OpenFeature, OpenKruise, OpenTelemetry, OpenYurt, Operator Framework, Strimzi, Thanos, Volcano, and wasmCloud. For more information on maturity requirements for each level, please visit the CNCF Graduation Criteria.

We look forward to seeing how Metal3.io continues to evolve with the backing of the CNCF community.

Learn more: https://www.cncf.io/projects/metal%C2%B3/

Kubernetes v1.34: Of Wind & Will (O' WaW)

Editors: Agustina Barbetta, Alejandro Josue Leon Bellido, Graziano Casto, Melony Qin, Dipesh Rawat

Similar to previous releases, the release of Kubernetes v1.34 introduces new stable, beta, and alpha features. The consistent delivery of high-quality releases underscores the strength of our development cycle and the vibrant support from our community.

This release consists of 58 enhancements. Of those enhancements, 23 have graduated to Stable, 22 have entered Beta, and 13 have entered Alpha.

There are also some deprecations and removals in this release; make sure to read about those.

Release theme and logo

A release powered by the wind around us — and the will within us.

Every release cycle, we inherit winds that we don't really control — the state of our tooling, documentation, and the historical quirks of our project. Sometimes these winds fill our sails, sometimes they push us sideways or die down.

What keeps Kubernetes moving isn't the perfect winds, but the will of our sailors who adjust the sails, man the helm, chart the courses and keep the ship steady. The release happens not because conditions are always ideal, but because of the people who build it, the people who release it, and the bears ^, cats, dogs, wizards, and curious minds who keep Kubernetes sailing strong — no matter which way the wind blows.

This release, Of Wind & Will (O' WaW), honors the winds that have shaped us, and the will that propels us forward.

^ Oh, and you wonder why bears? Keep wondering!

Spotlight on key updates

Kubernetes v1.34 is packed with new features and improvements. Here are a few select updates the Release Team would like to highlight!

Stable: The core of DRA is GA

Dynamic Resource Allocation (DRA) enables more powerful ways to select, allocate, share, and configure GPUs, TPUs, NICs and other devices.

Since the v1.30 release, DRA has been based around claiming devices using structured parameters that are opaque to the core of Kubernetes. This enhancement took inspiration from dynamic provisioning for storage volumes. DRA with structured parameters relies on a set of supporting API kinds under resource.k8s.io (ResourceClaim, DeviceClass, ResourceClaimTemplate, and ResourceSlice) and extends the .spec for Pods with a new resourceClaims field.

The resource.k8s.io/v1 APIs have graduated to stable and are now available by default.
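As an illustration, the sketch below shows how these kinds might fit together with the stable resource.k8s.io/v1 API: a DeviceClass selects devices from a driver, a ResourceClaimTemplate requests one such device, and a Pod references the template through its resourceClaims field. The driver and class name gpu.example.com, the object names, and the container image are hypothetical placeholders, and a real cluster also needs a DRA driver that publishes matching ResourceSlices.

```yaml
# DeviceClass selecting devices published by a hypothetical DRA driver
apiVersion: resource.k8s.io/v1
kind: DeviceClass
metadata:
  name: gpu.example.com
spec:
  selectors:
    - cel:
        expression: 'device.driver == "gpu.example.com"'
---
# Template from which a ResourceClaim is generated for each Pod
apiVersion: resource.k8s.io/v1
kind: ResourceClaimTemplate
metadata:
  name: single-gpu
spec:
  spec:
    devices:
      requests:
        - name: gpu
          exactly:
            deviceClassName: gpu.example.com
---
# Pod referencing the template via the resourceClaims field in its .spec
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  resourceClaims:
    - name: gpu
      resourceClaimTemplateName: single-gpu
  containers:
    - name: app
      image: registry.k8s.io/pause:3.10
      resources:
        claims:
          # Gives this container access to the device allocated for "gpu"
          - name: gpu
```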

This work was done as part of KEP #4381 led by WG Device Management.

Beta: Projected ServiceAccount tokens for kubelet image credential providers

The kubelet credential providers, used for pulling private container images, traditionally relied on long-lived Secrets stored on the node or in the cluster. This approach increased security risks and management overhead, as these credentials were not tied to the specific workload and did not rotate automatically.

To solve this, the kubelet can now request short-lived, audience-bound ServiceAccount tokens for authenticating to container registries. This allows image pulls to be authorized based on the Pod's own identity rather than a node-level credential.

The primary benefit is a significant security improvement. It eliminates the need for long-lived Secrets for image pulls, reducing the attack surface and simplifying credential management for both administrators and developers.

This work was done as part of KEP #4412 led by SIG Auth and SIG Node.

Alpha: Support for KYAML, a Kubernetes dialect of YAML

KYAML aims to be a safer and less ambiguous YAML subset, designed specifically for Kubernetes. Starting with Kubernetes v1.34, you can use KYAML as a new output format for kubectl, whatever version of Kubernetes you use.

KYAML addresses specific challenges with both YAML and JSON. YAML's significant whitespace requires careful attention to indentation and nesting, while its optional string-quoting can lead to unexpected type coercion (for example: "The Norway Bug"). Meanwhile, JSON lacks comment support and has strict requirements for trailing commas and quoted keys.

You can write KYAML and pass it as an input to any version of kubectl, because all KYAML files are also valid YAML. With kubectl v1.34, you can also request KYAML output (as in kubectl get -o kyaml …) by setting the environment variable KUBECTL_KYAML=true. If you prefer, you can still request the output in JSON or YAML format.
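As an illustration of the Norway Bug, consider a ConfigMap that stores the country code for Norway. In plain YAML, an unquoted NO can be read as the boolean false by YAML 1.1 parsers, while KYAML always double-quotes string values. The sketch below approximates, in trimmed form, what kubectl get configmap country-codes -o kyaml might print with KUBECTL_KYAML=true set. The ConfigMap name is hypothetical, and real output includes more metadata fields and may be formatted differently.

```yaml
# Plain YAML: some YAML 1.1 parsers read the unquoted NO as boolean false
#   data:
#     norway: NO
# KYAML: keys stay unquoted, string values are always double-quoted,
# and flow-style braces make the nesting explicit regardless of indentation
---
{
  apiVersion: "v1",
  kind: "ConfigMap",
  metadata: {
    name: "country-codes",
  },
  data: {
    norway: "NO",
  },
}
```

Because every KYAML document is also valid YAML, a file like this can be passed to kubectl apply -f with any kubectl version.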

This work was done as part of KEP #5295 led by SIG CLI.

Features graduating to Stable

This is a selection of some of the improvements that are now stable following the v1.34 release.

Delayed creation of Job’s replacement Pods